gRPC Gateway: Complete Configuration Guide

Configuring and Using gRPC + Gateway

Prerequisites:

Executables:

The following executables are required:

protoc.exe

protoc-gen-go.exe

protoc-gen-grpc-gateway.exe

protoc-gen-swagger.exe

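For convenience, the four exe files have been packaged into a zip archive and uploaded to Baidu Netdisk.

Link: https://pan.baidu.com/s/1F9updJQfuqSgvghrcODRbQ Access code: ti5h

Archive name: GRPC_GATEWAY_所需的相关文件.zip

Extract the four exe files and place them in a directory on your $PATH.

If the link has expired, or you want to build these executables yourself, jump to the last section of this document.

Dependency files:

Required dependency files:

These include, but are not limited to: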

annotations.proto

http.proto

descriptor.proto

...

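For convenience, the required dependency files have been packaged into a zip archive and uploaded to Baidu Netdisk.

Link: https://pan.baidu.com/s/1F9updJQfuqSgvghrcODRbQ Access code: ti5h

Archive name: GRPC_GATEWAY_所需的相关文件.zip

Extract the dependency files into the C:/grpcgateway directory.

If the link has expired, or you want to know where these dependency files come from, jump to the last section of this document.

Go package dependencies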

go get -u google.golang.org/grpc

go get -u github.com/golang/protobuf/proto

go get -u github.com/grpc-ecosystem/grpc-gateway

Writing a *.proto interface file

A simple example that supports both gRPC and the Gateway:

    syntax = "proto3";

    package echo;

    import "google/api/annotations.proto";

    message StringMessage {
        string value = 1;
    }

    service EchoService {
        rpc Echo(StringMessage) returns (StringMessage) {
            option (google.api.http) = {
                post: "/v1/echo"
                body: "*"
            };
        }
    }

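Explanation:

In this example:

1. package echo is the package name. With no go_package option set, the Go code generated from the proto file is placed in a package named echo.

2. message StringMessage {} defines a data type (comparable to a struct).

3. rpc Echo(StringMessage) returns (StringMessage) defines a method whose request and response types are both StringMessage. The HTTP method and route must be specified inside the method body:

    option (google.api.http) = {
        post: "/v1/echo"
        body: "*"
    };

This sets the HTTP method to POST and the route to /v1/echo; the POST body is a StringMessage.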
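To make this mapping concrete, here is a minimal Go sketch of the HTTP request that the annotation describes. The stringMessage struct and the newEchoRequest helper are hypothetical stand-ins written for illustration (the real type comes from the generated code), and the base URL is the gateway address used later in this document.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
)

// stringMessage is a hand-written stand-in for the generated StringMessage
// type; the JSON key "value" matches the proto field name.
type stringMessage struct {
	Value string `json:"value"`
}

// newEchoRequest builds the HTTP request described by the annotation:
// method POST, path /v1/echo, and the whole message as the JSON body
// (which is what body: "*" asks for).
func newEchoRequest(baseURL, value string) (*http.Request, []byte, error) {
	body, err := json.Marshal(stringMessage{Value: value})
	if err != nil {
		return nil, nil, err
	}
	req, err := http.NewRequest(http.MethodPost, baseURL+"/v1/echo", bytes.NewReader(body))
	if err != nil {
		return nil, nil, err
	}
	req.Header.Set("Content-Type", "application/json")
	return req, body, nil
}

func main() {
	req, body, err := newEchoRequest("http://127.0.0.1:9090", "hello")
	if err != nil {
		fmt.Println("build request:", err)
		return
	}
	// Show the three pieces the annotation fixes: method, path, and body.
	fmt.Println(req.Method, req.URL.Path, string(body))
}
```

The sketch only builds the request; it does not send it, so it runs without any server.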

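Compiling the *.proto interface file

First open PowerShell, MINGW64, or Bash (Linux).

Change to the directory containing the *.proto file.

Step 1: generate the gRPC code

Enter the following on the command line (the trailing \ is a Bash line continuation; in PowerShell, use a backtick instead or put the command on one line):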

    protoc -IC:/grpcgateway -I. \
        --go_out=plugins=grpc:. \
        ./*.proto

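Explanation of the command:

Line 1: sets the directories searched for imported dependency files, here C:/grpcgateway and the current directory.

Line 2: enables the grpc plugin and writes the generated Go code to the current directory.

Line 3: names the *.proto files to compile; here, every *.proto file in the current directory.

This command generates the gRPC stub code.

Step 2: generate the gRPC Gateway code

Enter the following on the command line: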

    protoc -IC:/grpcgateway -I. \
        --grpc-gateway_out=logtostderr=true:. \
        ./*.proto

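Explanation of the command:

Line 1: sets the directories searched for imported dependency files, here C:/grpcgateway and the current directory.

Line 2: invokes the grpc-gateway plugin (protoc-gen-grpc-gateway), which writes Go code to the current directory; logtostderr=true sends the plugin's log output to stderr.

Line 3: names the *.proto files to compile; here, every *.proto file in the current directory.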

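After both steps compile successfully, the generated files are echo.pb.go (from step 1) and echo.pb.gw.go (from step 2).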

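Writing the gRPC service:

This section shows only the most basic approach; existing frameworks may offer a better way to do the same thing.

The simplest way to use echo.pb.go (the gRPC stub code) is as follows.

Server side:

A minimal example: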

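    package main

    import (
        "log"
        "net"

        "golang.org/x/net/context"
        "google.golang.org/grpc"

        pb "test_grpc/gw/pb"
    )

    const (
        port = ":51001"
    )

    // server implements the EchoServiceServer interface from the generated stub.
    type server struct{}

    // Echo returns a message carrying the same value as the request.
    func (s *server) Echo(c context.Context, v *pb.StringMessage) (*pb.StringMessage, error) {
        result := &pb.StringMessage{Value: v.Value}
        return result, nil
    }

    func main() {
        lis, err := net.Listen("tcp", port)
        if err != nil {
            log.Fatalf("failed to listen: %v", err)
        }

        // Create a new gRPC server and register the Echo service on it.
        s := grpc.NewServer()
        pb.RegisterEchoServiceServer(s, &server{})

        // Serve blocks until the server stops; report its error instead of dropping it.
        if err := s.Serve(lis); err != nil {
            log.Fatalf("failed to serve: %v", err)
        }
    }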

Code walkthrough:

- The import block brings in the stub code with import pb "test_grpc/gw/pb".

- s := grpc.NewServer() and s.Serve(lis) create and start the server.

- pb.RegisterEchoServiceServer(s, &server{}) comes from the stub code. Here server is the struct we defined to implement the gRPC service interface.

- func (s *server) Echo(context.Context, *pb.StringMessage) is the method that implements that interface.

Client side:

A minimal example:

    package main

    import (
        "fmt"
        "log"

        "golang.org/x/net/context"
        "google.golang.org/grpc"

        pb "test_grpc/gw/pb"
    )

    const (
        address = "localhost:51001"
    )

    func main() {
        // Set up a connection to the gRPC server.
        conn, err := grpc.Dial(address, grpc.WithInsecure())
        if err != nil {
            log.Fatalf("did not connect: %v", err)
        }
        defer conn.Close()

        client := pb.NewEchoServiceClient(conn)
        msg := &pb.StringMessage{Value: "hello"}

        // Call the remote Echo method and print the echoed value.
        result, err := client.Echo(context.Background(), msg)
        if err != nil {
            log.Printf("Echo failed: %v", err)
            return
        }
        fmt.Println(result.Value)
    }

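Code walkthrough:

- address holds the address of the gRPC server.

- conn, err := grpc.Dial(address, grpc.WithInsecure()) opens a connection.

- client := pb.NewEchoServiceClient(conn) uses the NewEchoServiceClient function from the stub code to create a client object on top of the conn connection.

- client.Echo(context.Background(), msg) calls the method on that object.

Writing the gRPC Gateway service: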

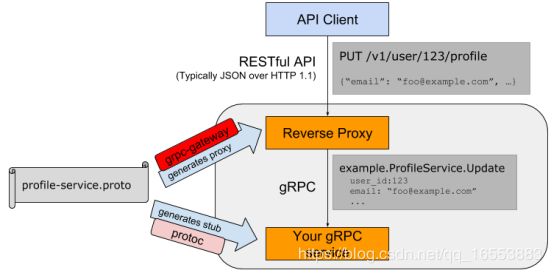

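As the figure above shows:

gRPC Gateway is a reverse proxy. It accepts HTTP requests from clients, translates them into gRPC calls, fetches the result from the gRPC server, and returns it to the client as JSON.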

The generated file echo.pb.gw.go is the supporting code for the Gateway reverse proxy.

The simplest code that uses echo.pb.gw.go is:

    package main

    import (
        "flag"
        "log"
        "net/http"

        "github.com/grpc-ecosystem/grpc-gateway/runtime"
        "golang.org/x/net/context"
        "google.golang.org/grpc"

        gw "test_grpc/gw/pb"
    )

    var (
        echoEndpoint = flag.String("echo_endpoint", "localhost:51001", "endpoint of YourService")
    )

    func run() error {
        ctx := context.Background()
        ctx, cancel := context.WithCancel(ctx)
        defer cancel()

        // Register the generated handlers on the gateway mux; they forward to the gRPC endpoint.
        mux := runtime.NewServeMux()
        opts := []grpc.DialOption{grpc.WithInsecure()}
        err := gw.RegisterEchoServiceHandlerFromEndpoint(ctx, mux, *echoEndpoint, opts)
        if err != nil {
            return err
        }

        // Serve the HTTP/JSON API on port 9090.
        return http.ListenAndServe(":9090", mux)
    }

    func main() {
        // Parse command-line flags so that -echo_endpoint takes effect.
        flag.Parse()

        if err := run(); err != nil {
            log.Fatal(err)
        }
    }

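Code walkthrough:

- echoEndpoint holds the address of the gRPC server, including the IP address and port.

- gw.RegisterEchoServiceHandlerFromEndpoint registers the service using a function from the generated gateway support code.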

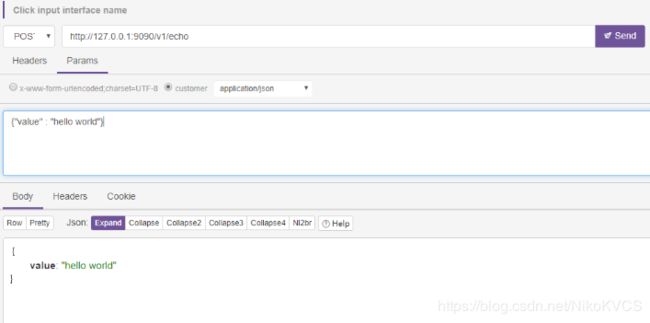

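Testing the service

First start the gRPC server: localhost:51001

Then start the Gateway server: http://localhost:9090

From the *.proto file we know that this API uses the HTTP method POST and the route /v1/echo, and that the POST body is a StringMessage.

Test it with Postman:

Select the POST method.

Set the URL to http://127.0.0.1:9090/v1/echo

Send this JSON as the body: {"value" : "hello world"}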

Load testing

Control group code (plain HTTP):

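    package main

    import (
        "encoding/json"
        "fmt"
        "io/ioutil"
        "net/http"
    )

    type StringMessage struct {
        Value string
    }

    // Echo decodes the JSON request body and writes the same value back as JSON.
    func Echo(w http.ResponseWriter, r *http.Request) {
        buff, err := ioutil.ReadAll(r.Body)
        if err != nil {
            http.Error(w, err.Error(), http.StatusBadRequest)
            return
        }

        request := &StringMessage{}
        if err := json.Unmarshal(buff, request); err != nil {
            http.Error(w, err.Error(), http.StatusBadRequest)
            return
        }

        result := &StringMessage{Value: request.Value}
        data, err := json.Marshal(result)
        if err != nil {
            http.Error(w, err.Error(), http.StatusInternalServerError)
            return
        }

        // Write the bytes directly; used as a Fprintf format string, any % in the payload would be misread.
        w.Write(data)
    }

    func main() {
        http.HandleFunc("/v1/echo", Echo)
        if err := http.ListenAndServe(":9000", nil); err != nil {
            fmt.Println("ListenAndServe err", err)
        }
    }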

Experimental group 1: the gRPC Gateway built in this document

Controlled experiment on Ubuntu

The tests use wrk.

The Lua script (post.lua) is:

wrk.method = "POST"

wrk.body = '{"value" : "hello world"}'

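The wrk command is as follows (for the gRPC Gateway group, the target is http://127.0.0.1:9090/v1/echo instead):

./wrk -c1000 -t5 http://127.0.0.1:9000/v1/echo --script=post.lua --latency --timeout 1s

Small-payload load test (1 B response):

Control group results (the experiment was repeated 3 times for reliability):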

Begin to test Naive group

Running 10s test @ http://127.0.0.1:9000/v1/echo

5 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 460.33us 14.74ms 999.83ms 99.86%

Req/Sec 19.96k 11.42k 33.01k 66.92%

Latency Distribution

50% 27.00us

75% 28.00us

90% 35.00us

99% 120.00us

287578 requests in 10.07s, 38.40MB read

Socket errors: connect 0, read 0, write 0, timeout 154

Requests/sec: 28555.77

Transfer/sec: 3.81MB

Running 10s test @ http://127.0.0.1:9000/v1/echo

5 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 186.71us 7.54ms 919.75ms 99.82%

Req/Sec 23.07k 11.79k 33.30k 74.22%

Latency Distribution

50% 27.00us

75% 28.00us

90% 32.00us

99% 104.00us

311186 requests in 10.05s, 41.55MB read

Socket errors: connect 0, read 0, write 0, timeout 162

Requests/sec: 30968.61

Transfer/sec: 4.13MB

Running 10s test @ http://127.0.0.1:9000/v1/echo

5 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 367.30us 12.44ms 974.46ms 99.82%

Req/Sec 19.49k 12.02k 31.50k 74.48%

Latency Distribution

50% 28.00us

75% 29.00us

90% 34.00us

99% 108.00us

303441 requests in 10.04s, 40.51MB read

Socket errors: connect 0, read 0, write 0, timeout 244

Requests/sec: 30209.99

Transfer/sec: 4.03MB

Experimental group 1 (gRPC Gateway):

Begin to test GRPC Gateway group

Running 10s test @ http://127.0.0.1:9090/v1/echo

5 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 116.98ms 78.26ms 975.40ms 82.53%

Req/Sec 1.65k 549.93 4.60k 84.10%

Latency Distribution

50% 114.01ms

75% 160.12ms

90% 173.03ms

99% 400.55ms

78999 requests in 10.05s, 13.34MB read

Socket errors: connect 0, read 0, write 0, timeout 162

Requests/sec: 7861.09

Transfer/sec: 1.33MB

Running 10s test @ http://127.0.0.1:9090/v1/echo

5 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 113.84ms 75.99ms 969.75ms 82.80%

Req/Sec 1.78k 1.06k 8.87k 95.36%

Latency Distribution

50% 112.80ms

75% 160.30ms

90% 170.48ms

99% 361.25ms

80821 requests in 10.05s, 13.64MB read

Socket errors: connect 0, read 0, write 0, timeout 118

Requests/sec: 8043.14

Transfer/sec: 1.36MB

Running 10s test @ http://127.0.0.1:9090/v1/echo

5 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 112.56ms 69.62ms 996.74ms 81.22%

Req/Sec 1.78k 1.05k 8.70k 94.70%

Latency Distribution

50% 112.83ms

75% 160.00ms

90% 168.90ms

99% 322.07ms

80517 requests in 10.04s, 13.59MB read

Socket errors: connect 0, read 0, write 0, timeout 183

Requests/sec: 8015.85

Transfer/sec: 1.35MB

Medium-payload load test (1 KB response):

Control group results (the experiment was repeated 3 times for reliability):

Begin to test Naive group

Running 10s test @ http://127.0.0.1:9000/v1/echo

5 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 393.88us 13.11ms 971.95ms 99.87%

Req/Sec 10.92k 7.30k 22.43k 54.80%

Latency Distribution

50% 37.00us

75% 39.00us

90% 50.00us

99% 252.00us

206044 requests in 10.08s, 338.76MB read

Socket errors: connect 0, read 0, write 0, timeout 174

Requests/sec: 20446.49

Transfer/sec: 33.62MB

Running 10s test @ http://127.0.0.1:9000/v1/echo

5 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 377.99us 12.26ms 980.44ms 99.85%

Req/Sec 11.20k 6.61k 21.97k 58.38%

Latency Distribution

50% 37.00us

75% 39.00us

90% 49.00us

99% 240.00us

214487 requests in 10.04s, 352.65MB read

Socket errors: connect 0, read 0, write 0, timeout 161

Requests/sec: 21352.97

Transfer/sec: 35.11MB

Running 10s test @ http://127.0.0.1:9000/v1/echo

5 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 452.24us 14.48ms 988.18ms 99.83%

Req/Sec 18.70k 6.44k 22.49k 85.09%

Latency Distribution

50% 37.00us

75% 39.00us

90% 49.00us

99% 230.00us

215774 requests in 10.05s, 354.76MB read

Socket errors: connect 0, read 0, write 0, timeout 133

Requests/sec: 21465.42

Transfer/sec: 35.29MB

Experimental group 1 (gRPC Gateway):

Running 10s test @ http://127.0.0.1:9090/v1/echo

5 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 137.69ms 77.44ms 969.58ms 71.17%

Req/Sec 1.33k 488.89 3.62k 85.26%

Latency Distribution

50% 135.99ms

75% 198.51ms

90% 215.25ms

99% 273.30ms

63275 requests in 10.06s, 106.27MB read

Socket errors: connect 0, read 0, write 0, timeout 308

Requests/sec: 6292.18

Transfer/sec: 10.57MB

Running 10s test @ http://127.0.0.1:9090/v1/echo

5 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 122.03ms 69.62ms 979.16ms 63.50%

Req/Sec 1.34k 696.89 5.67k 87.82%

Latency Distribution

50% 118.44ms

75% 174.87ms

90% 205.86ms

99% 226.60ms

63008 requests in 10.05s, 105.82MB read

Socket errors: connect 0, read 0, write 0, timeout 473

Requests/sec: 6270.56

Transfer/sec: 10.53MB

Running 10s test @ http://127.0.0.1:9090/v1/echo

5 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 134.67ms 86.14ms 992.38ms 77.67%

Req/Sec 1.41k 0.88k 6.86k 91.63%

Latency Distribution

50% 128.12ms

75% 194.81ms

90% 214.88ms

99% 344.52ms

62149 requests in 10.05s, 104.37MB read

Socket errors: connect 0, read 0, write 0, timeout 300

Requests/sec: 6184.34

Transfer/sec: 10.39MB

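Large-payload load test (70 KB response):

The wrk command is as follows (for the gRPC Gateway group, the target is http://127.0.0.1:9090/v1/echo instead):

./wrk -c10 -t5 http://127.0.0.1:9000/v1/echo --script=post.lua --latency --timeout 1s

Control group results (the experiment was repeated 3 times for reliability):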

Begin to test Naive group

Running 10s test @ http://127.0.0.1:9000/v1/echo

5 threads and 10 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 7.07ms 56.39ms 832.75ms 98.29%

Req/Sec 1.48k 0.90k 2.21k 71.09%

Latency Distribution

50% 437.00us

75% 522.00us

90% 605.00us

99% 305.60ms

21444 requests in 10.03s, 1.66GB read

Socket errors: connect 0, read 0, write 0, timeout 36

Requests/sec: 2138.94

Transfer/sec: 169.08MB

Running 10s test @ http://127.0.0.1:9000/v1/echo

5 threads and 10 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 466.18us 137.83us 8.69ms 91.64%

Req/Sec 1.31k 0.92k 2.21k 70.29%

Latency Distribution

50% 435.00us

75% 512.00us

90% 595.00us

99% 741.00us

21452 requests in 10.03s, 1.66GB read

Socket errors: connect 0, read 0, write 0, timeout 44

Requests/sec: 2139.46

Transfer/sec: 169.12MB

Running 10s test @ http://127.0.0.1:9000/v1/echo

5 threads and 10 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 1.59ms 17.30ms 438.85ms 99.42%

Req/Sec 1.79k 694.50 2.22k 83.93%

Latency Distribution

50% 439.00us

75% 528.00us

90% 612.00us

99% 1.01ms

21102 requests in 10.03s, 1.63GB read

Socket errors: connect 0, read 0, write 0, timeout 19

Requests/sec: 2104.79

Transfer/sec: 166.37MB

Experimental group 1 (gRPC Gateway):

Begin to test GRPC Gateway group

Running 10s test @ http://127.0.0.1:9090/v1/echo

5 threads and 10 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 10.36ms 3.02ms 34.10ms 78.13%

Req/Sec 194.40 30.53 250.00 74.40%

Latency Distribution

50% 10.00ms

75% 11.41ms

90% 13.70ms

99% 22.00ms

9730 requests in 10.05s, 769.46MB read

Requests/sec: 968.53

Transfer/sec: 76.59MB

Running 10s test @ http://127.0.0.1:9090/v1/echo

5 threads and 10 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 9.65ms 2.10ms 25.27ms 74.37%

Req/Sec 207.73 15.80 260.00 70.60%

Latency Distribution

50% 9.76ms

75% 10.75ms

90% 12.02ms

99% 14.62ms

10400 requests in 10.05s, 822.44MB read

Requests/sec: 1034.92

Transfer/sec: 81.84MB

Running 10s test @ http://127.0.0.1:9090/v1/echo

5 threads and 10 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 9.39ms 1.85ms 21.56ms 78.20%

Req/Sec 213.51 13.07 250.00 66.20%

Latency Distribution

50% 9.57ms

75% 10.40ms

90% 11.21ms

99% 13.62ms

10687 requests in 10.05s, 845.21MB read

Requests/sec: 1063.91

Transfer/sec: 84.14MB
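To compare the two groups at a glance, the Requests/sec figures from the runs above can be averaged per payload size. The sketch below does only that arithmetic; the type and function names are illustrative, and the numbers are copied from the wrk output.

```go
package main

import "fmt"

// mean returns the arithmetic mean of xs (xs must be non-empty).
func mean(xs []float64) float64 {
	sum := 0.0
	for _, x := range xs {
		sum += x
	}
	return sum / float64(len(xs))
}

// run holds the three Requests/sec readings for one payload size.
type run struct {
	name    string
	control []float64 // plain HTTP server on :9000
	gateway []float64 // gRPC Gateway on :9090
}

// slowdown is how many times more requests per second the control
// group served than the gateway, using the mean of each group.
func slowdown(r run) float64 {
	return mean(r.control) / mean(r.gateway)
}

// runs copies the Requests/sec lines from the wrk output above.
var runs = []run{
	{"1 B", []float64{28555.77, 30968.61, 30209.99}, []float64{7861.09, 8043.14, 8015.85}},
	{"1 KB", []float64{20446.49, 21352.97, 21465.42}, []float64{6292.18, 6270.56, 6184.34}},
	{"70 KB", []float64{2138.94, 2139.46, 2104.79}, []float64{968.53, 1034.92, 1063.91}},
}

func main() {
	for _, r := range runs {
		fmt.Printf("%-6s control %.0f req/s, gateway %.0f req/s, ratio %.1fx\n",
			r.name, mean(r.control), mean(r.gateway), slowdown(r))
	}
}
```

On these numbers the plain HTTP server handles roughly 3.8, 3.4, and 2.1 times as many requests per second as the gateway for the 1 B, 1 KB, and 70 KB payloads. Note that the 70 KB runs used 10 connections rather than 1000, so that ratio is not directly comparable with the other two.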

Environment setup and build

For protoc-gen-go.exe:

go get -u github.com/golang/protobuf/protoc-gen-go

For protoc-gen-swagger.exe:

go get -u github.com/grpc-ecosystem/grpc-gateway/protoc-gen-swagger

For protoc-gen-grpc-gateway.exe:

go get -u github.com/grpc-ecosystem/grpc-gateway/protoc-gen-grpc-gateway

For protoc.exe:

go get -u github.com/protocolbuffers/protobuf

protoc.exe has to be compiled with make, so the build is not covered in detail here; follow the README.md inside the protobuf folder.

Sources of the dependency files in the archive:

- The google folder inside $GOPATH\src\github.com\grpc-ecosystem\grpc-gateway\third_party\googleapis

- The google folder inside $GOPATH\src\github.com\protocolbuffers\protobuf\src

The protoc command should include both sources, for example:

    protoc -I$GOPATH/src/github.com/protocolbuffers/protobuf/src -I. \
        -I$GOPATH/src/github.com/grpc-ecosystem/grpc-gateway/third_party/googleapis \
        --go_out=plugins=grpc:. \
        ./pb/*.proto