图像语义分割(一) —— Fully Convolutional Networks理解与实现

语义分割(零) https://blog.csdn.net/Qer_computerscience/article/details/84778682

来自伯克利的2014CVPR、2015PAMI非常经典的一篇用FCN进行语义分割的文章,后续也都基于此FCN架构进行进一步拓展。

下面对论文中主要思想进行总结

一、文章主要贡献

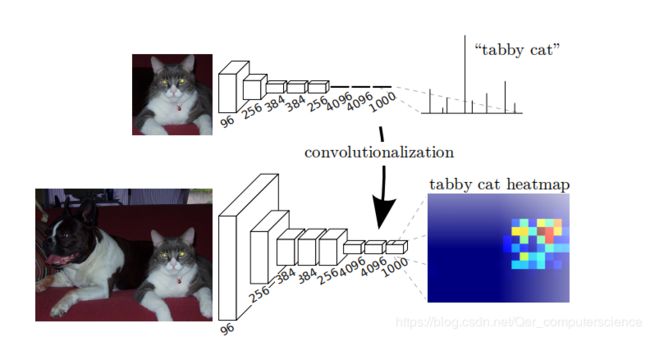

1.全卷积

常用的分类网络例如VGG、GoogleNet、AlexNet等,都是由features和classifier构成,由全连接层进行分类任务。同时也正是由于全连接的存在,导致网络只能接受固定大小的输入以适应固定数量的全连接神经元,而卷积网本身并没有对图像的size进行限制,不同的input size,卷积核依然有固定大小的感受野来产生corresponding features。作者采用了FCN架构,将传统分类网络的分类连接层转换成全卷积网络。(抛弃分类层,或构成dense conv层),在语义分割任务中,只需要将卷积层的输入,上采样缩小倍数到原始大小,就可以统计每一个pixel的误差进而反向传播。

2.多层信息融合

网络本身由很多个卷积部分构成,例如VGG16包含了5个卷积池化组,而global information解决是什么的问题,local information解决在哪里的问题,在卷积网中体现在深层和浅层信息中。Combining what and where,采用多层融合信息,更有利于最终的semantic segmentation。

3.end-to-end

网络端到端训练,基于FCN构成了end to end结构。

二、算法细节

文章采用了将全连接替换成全卷积层,通过1x1卷积核获得相应的感受野,但可以处理不同的输入尺寸了。

混合层的实现需要通过上采样,以VGG的conv5输入为例,经过卷积与池化,map的size经过了32x的下采样,我们可以通过插值的方式实现上采样,同样也可以通过deconvolution实现,同时卷积参数可以学习,能够达到非线性插值的效果。

在混合多层map的时候,如将32x上采样(deconv)2倍到16x,即可以与conv4的16x特征输出层混合,文中采用直接相加的方式,以此类推混合从深到浅层的信息。

三、简单实现

在这里我写了一个简单的pytorch实现版本,基于vgg16模型,混合了32s,16s,8s特征。

net结构如下所示:

import torch

import torch.nn as nn

import torch.optim as optim

from torchvision import models

#build vgg16 net

cfg = {'vgg16' : [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M']}

ranges = {'vgg16': ((0, 5), (5, 10), (10, 17), (17, 24), (24, 31))}

def make_layers(cfg, batch_norm = False):

layers = []

in_channels = 3

for val in cfg:

if val == 'M':

layers += [nn.MaxPool2d(kernel_size=2, stride=2)]

else:

conv2d = nn.Conv2d(in_channels, val, kernel_size=3, padding=1)

if batch_norm:

layers += [conv2d, nn.BatchNorm2d(val), nn.ReLU(inplace=True)]

else:

layers += [conv2d, nn.ReLU(inplace=True)]

in_channels = val

return nn.Sequential(*layers)

class VGGNet(nn.Module):

def __init__(self, features):

super(VGGNet, self).__init__()

self.features = features

self.ranges = ranges['vgg16']

# self._init_weights()

def _init_weights(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

if m.bias is not None:

nn.init.constant_(m.bias, 0)

elif isinstance(m, nn.BatchNorm2d):

nn.init.constant_(m.weight, 1)

nn.init.constant_(m.bias, 0)

def forward(self, x):

output = {}

for i in range(len(self.ranges)):

for layer in range(self.ranges[i][0], self.ranges[i][1]):

x = self.features[layer](x)

output['x%d'%(i+1)] = x

return output

class FCN32s(nn.Module):

def __init__(self, base_model, n_class):

super().__init__()

self.n_class = n_class

self.base_model = base_model

self.conv = nn.Conv2d(512, n_class, kernel_size=1)

self.relu = nn.ReLU(inplace=True)

self.deconv = nn.ConvTranspose2d(n_class, n_class, kernel_size=64, stride=32, padding=16)

def forward(self, x):

output = self.base_model(x)

x5 = output['x5']

score = self.relu(self.conv(x5))

score = self.deconv(score)

return score

class FCN16s(nn.Module):

def __init__(self, base_model, n_class):

super().__init__()

self.n_class = n_class

self.base_model = base_model

self.relu = nn.ReLU(inplace=True)

self.conv = nn.Conv2d(512, n_class, kernel_size=1)

self.deconv = nn.ConvTranspose2d(n_class, n_class, kernel_size=4, stride=2, padding=1)

self.deconv2 = nn.ConvTranspose2d(n_class, n_class, kernel_size=32, stride=16, padding=8)

self.bn = nn.BatchNorm2d(n_class)

def forward(self, x):

output = self.base_model(x)

x5 = output['x5']

x4 = output['x4']

score = self.relu(self.conv(x5))

score = self.bn(self.deconv(score) + self.relu(self.conv(x4)))

score = self.deconv2(score)

return score

class FCN8s(nn.Module):

def __init__(self, base_model, n_class):

super().__init__()

self.n_class = n_class

self.base_model = base_model

self.relu = nn.ReLU(inplace=True)

self.conv5 = nn.Conv2d(512, n_class, kernel_size=1)

self.conv4 = nn.Conv2d(512, n_class, kernel_size=1)

self.conv3 = nn.Conv2d(256, n_class, kernel_size=1)

self.deconv5 = nn.ConvTranspose2d(n_class, n_class, kernel_size=4, stride=2, padding=1)

self.deconv4 = nn.ConvTranspose2d(n_class, n_class, kernel_size=4, stride=2, padding=1)

self.deconv3 = nn.ConvTranspose2d(n_class, n_class, kernel_size=16, stride=8, padding=4)

self.bn1 = nn.BatchNorm2d(n_class)

self.bn2 = nn.BatchNorm2d(n_class)

def forward(self, x):

output = self.base_model(x)

x5 = output['x5']

x4 = output['x4']

x3 = output['x3']

score = self.relu(self.conv5(x5))

score = self.bn1(self.deconv5(score) + self.relu(self.conv4(x4)))

score = self.bn2(self.deconv4(score) + self.relu(self.conv3(x3)))

score = self.deconv3(score)

return score

if __name__ == '__main__':

print('main:')

model = VGGNet(make_layers(cfg['vgg16']))

# fine-tune model.

model.load_state_dict(torch.load('vgg16_features.pth'))

input = torch.autograd.Variable(torch.randn(5, 3, 160, 160))

y = torch.autograd.Variable(torch.randn(5, 20, 160, 160), requires_grad=False)

fcn = FCN8s(model, 20)

#test loss decrease.

Loss = nn.BCEWithLogitsLoss()

optimizer = optim.SGD(fcn.parameters(), lr=1e-3, momentum=0.9)

for i in range(10):

optimizer.zero_grad()

output = fcn(input)

loss = Loss(output, y)

loss.backward()

print("iter{}, loss {}".format(i, loss.data.item()))

optimizer.step()