Single Shot MultiBox Detector论文翻译——中英文对照

文章作者:Tyan

博客:noahsnail.com | CSDN | 简书 | 云+社区

声明:作者翻译论文仅为学习,如有侵权请联系作者删除博文,谢谢!

SSD: Single Shot MultiBox Detector

Abstract

We present a method for detecting objects in images using a single deep neural network. Our approach, named SSD, discretizes the output space of bounding boxes into a set of default boxes over different aspect ratios and scales per feature map location. At prediction time, the network generates scores for the presence of each object category in each default box and produces adjustments to the box to better match the object shape. Additionally, the network combines predictions from multiple feature maps with different resolutions to naturally handle objects of various sizes. SSD is simple relative to methods that require object proposals because it completely eliminates proposal generation and subsequent pixel or feature resampling stages and encapsulates all computation in a single network. This makes SSD easy to train and straightforward to integrate into systems that require a detection component. Experimental results on the PASCAL VOC, COCO, and ILSVRC datasets confirm that SSD has competitive accuracy to methods that utilize an additional object proposal step and is much faster, while providing a unified framework for both training and inference. For 300 × 300 input, SSD achieves 74.3% mAP on VOC2007 test at 59 FPS on a Nvidia Titan X and for 512 × 512 input, SSD achieves 76.9% mAP, outperforming a comparable state-of-the-art Faster R-CNN model. Compared to other single stage methods, SSD has much better accuracy even with a smaller input image size. Code is available at: https://github.com/weiliu89/caffe/tree/ssd.

摘要

我们提出了一种使用单个深度神经网络来检测图像中的目标的方法。我们的方法命名为SSD,将边界框的输出空间离散化为不同长宽比的一组默认框和并缩放每个特征映射的位置。在预测时,网络会在每个默认框中为每个目标类别的出现生成分数,并对框进行调整以更好地匹配目标形状。此外,网络还结合了不同分辨率的多个特征映射的预测,自然地处理各种尺寸的目标。相对于需要目标提出的方法,SSD非常简单,因为它完全消除了提出生成和随后的像素或特征重新采样阶段,并将所有计算封装到单个网络中。这使得SSD易于训练和直接集成到需要检测组件的系统中。PASCAL VOC,COCO和ILSVRC数据集上的实验结果证实,SSD对于利用额外的目标提出步骤的方法具有竞争性的准确性,并且速度更快,同时为训练和推断提供了统一的框架。对于300×300的输入,SSD在VOC2007测试中以59FPS的速度在Nvidia Titan X上达到 74.3% 的mAP,对于512×512的输入,SSD达到了 76.9% 的mAP,优于参照的最先进的Faster R-CNN模型。与其他单阶段方法相比,即使输入图像尺寸较小,SSD也具有更高的精度。代码获取:https://github.com/weiliu89/caffe/tree/ssd。

1. Introduction

Current state-of-the-art object detection systems are variants of the following approach: hypothesize bounding boxes, resample pixels or features for each box, and apply a high-quality classifier. This pipeline has prevailed on detection benchmarks since the Selective Search work [1] through the current leading results on PASCAL VOC, COCO, and ILSVRC detection all based on Faster R-CNN[2] albeit with deeper features such as [3]. While accurate, these approaches have been too computationally intensive for embedded systems and, even with high-end hardware, too slow for real-time applications.Often detection speed for these approaches is measured in seconds per frame (SPF), and even the fastest high-accuracy detector, Faster R-CNN, operates at only 7 frames per second (FPS). There have been many attempts to build faster detectors by attacking each stage of the detection pipeline (see related work in Sec. 4), but so far, significantly increased speed comes only at the cost of significantly decreased detection accuracy.

1. 引言

目前最先进的目标检测系统是以下方法的变种:假设边界框,每个框重采样像素或特征,并应用一个高质量的分类器。自从选择性搜索[1]通过在PASCAL VOC,COCO和ILSVRC上所有基于Faster R-CNN[2]的检测都取得了当前领先的结果(尽管具有更深的特征如[3]),这种流程在检测基准数据上流行开来。尽管这些方法准确,但对于嵌入式系统而言,这些方法的计算量过大,即使是高端硬件,对于实时应用而言也太慢。通常,这些方法的检测速度是以每帧秒(SPF)度量,甚至最快的高精度检测器,Faster R-CNN,仅以每秒7帧(FPS)的速度运行。已经有很多尝试通过处理检测流程中的每个阶段来构建更快的检测器(参见第4节中的相关工作),但是到目前为止,显著提高的速度仅以显著降低的检测精度为代价。

This paper presents the first deep network based object detector that does not resample pixels or features for bounding box hypotheses and and is as accurate as approaches that do. This results in a significant improvement in speed for high-accuracy detection (59 FPS with mAP 74.3% on VOC2007 test, vs. Faster R-CNN 7 FPS with mAP 73.2% or YOLO 45 FPS with mAP 63.4% ). The fundamental improvement in speed comes from eliminating bounding box proposals and the subsequent pixel or feature resampling stage. We are not the first to do this (cf [4,5]), but by adding a series of improvements, we manage to increase the accuracy significantly over previous attempts. Our improvements include using a small convolutional filter to predict object categories and offsets in bounding box locations, using separate predictors (filters) for different aspect ratio detections, and applying these filters to multiple feature maps from the later stages of a network in order to perform detection at multiple scales. With these modifications——especially using multiple layers for prediction at different scales——we can achieve high-accuracy using relatively low resolution input, further increasing detection speed. While these contributions may seem small independently, we note that the resulting system improves accuracy on real-time detection for PASCAL VOC from 63.4% mAP for YOLO to 74.3% mAP for our SSD. This is a larger relative improvement in detection accuracy than that from the recent, very high-profile work on residual networks [3]. Furthermore, significantly improving the speed of high-quality detection can broaden the range of settings where computer vision is useful.

本文提出了第一个基于深度网络的目标检测器,它不对边界框假设的像素或特征进行重采样,并且与其它方法有一样精确度。这对高精度检测在速度上有显著提高(在VOC2007测试中,59FPS和 74.3% 的mAP,与Faster R-CNN 7FPS和 73.2% 的mAP或者YOLO 45 FPS和 63.4% 的mAP相比)。速度的根本改进来自消除边界框提出和随后的像素或特征重采样阶段。我们并不是第一个这样做的人(查阅[4,5]),但是通过增加一系列改进,我们设法比以前的尝试显著提高了准确性。我们的改进包括使用小型卷积滤波器来预测边界框位置中的目标类别和偏移量,使用不同长宽比检测的单独预测器(滤波器),并将这些滤波器应用于网络后期的多个特征映射中,以执行多尺度检测。通过这些修改——特别是使用多层进行不同尺度的预测——我们可以使用相对较低的分辨率输入实现高精度,进一步提高检测速度。虽然这些贡献可能单独看起来很小,但是我们注意到由此产生的系统将PASCAL VOC实时检测的准确度从YOLO的 63.4% 的mAP提高到我们的SSD的 74.3% 的mAP。相比于最近备受瞩目的残差网络方面的工作[3],在检测精度上这是相对更大的提高。而且,显著提高的高质量检测速度可以扩大计算机视觉使用的设置范围。

We summarize our contributions as follows:

* We introduce SSD, a single-shot detector for multiple categories that is faster than the previous state-of-the-art for single shot detectors (YOLO), and significantly more accurate, in fact as accurate as slower techniques that perform explicit region proposals and pooling (including Faster R-CNN).

The core of SSD is predicting category scores and box offsets for a fixed set of default bounding boxes using small convolutional filters applied to feature maps.

To achieve high detection accuracy we produce predictions of different scales from feature maps of different scales, and explicitly separate predictions by aspect ratio.

These design features lead to simple end-to-end training and high accuracy, even on low resolution input images, further improving the speed vs accuracy trade-off.

Experiments include timing and accuracy analysis on models with varying input size evaluated on PASCAL VOC, COCO, and ILSVRC and are compared to a range of recent state-of-the-art approaches.

我们总结我们的贡献如下:

* 我们引入了SSD,这是一种针对多个类别的单次检测器,比先前的先进的单次检测器(YOLO)更快,并且准确得多,事实上,与执行显式区域提出和池化的更慢的技术具有相同的精度(包括Faster R-CNN)。

SSD的核心是预测固定的一系列默认边界框的类别分数和边界框偏移,使用更小的卷积滤波器应用到特征映射上。

为了实现高检测精度,我们根据不同尺度的特征映射生成不同尺度的预测,并通过纵横比明确分开预测。

这些设计功能使得即使在低分辨率输入图像上也能实现简单的端到端训练和高精度,从而进一步提高速度与精度之间的权衡。

实验包括在PASCAL VOC,COCO和ILSVRC上评估具有不同输入大小的模型的时间和精度分析,并与最近的一系列最新方法进行比较。

2. The Single Shot Detector (SSD)

This section describes our proposed SSD framework for detection (Sec. 2.1) and the associated training methodology (Sec. 2.2). Afterwards, Sec. 2.3 presents dataset-specific model details and experimental results.

2. 单次检测器(SSD)

本节描述我们提出的SSD检测框架(2.1节)和相关的训练方法(2.2节)。之后,2.3节介绍了数据集特有的模型细节和实验结果。

2.1 Model

The SSD approach is based on a feed-forward convolutional network that produces a fixed-size collection of bounding boxes and scores for the presence of object class instances in those boxes, followed by a non-maximum suppression step to produce the final detections. The early network layers are based on a standard architecture used for high quality image classification (truncated before any classification layers), which we will call the base network. We then add auxiliary structure to the network to produce detections with the following key features:

2.1 模型

SSD方法基于前馈卷积网络,该网络产生固定大小的边界框集合,并对这些边界框中存在的目标类别实例进行评分,然后进行非极大值抑制步骤来产生最终的检测结果。早期的网络层基于用于高质量图像分类的标准架构(在任何分类层之前被截断),我们将其称为基础网络。然后,我们将辅助结构添加到网络中以产生具有以下关键特征的检测:

Multi-scale feature maps for detection We add convolutional feature layers to the end of the truncated base network. These layers decrease in size progressively and allow predictions of detections at multiple scales. The convolutional model for predicting detections is different for each feature layer (cf Overfeat[4] and YOLO[5] that operate on a single scale feature map).

用于检测的多尺度特征映射。我们将卷积特征层添加到截取的基础网络的末端。这些层在尺寸上逐渐减小,并允许在多个尺度上对检测结果进行预测。用于预测检测的卷积模型对于每个特征层都是不同的(查阅Overfeat[4]和YOLO[5]在单尺度特征映射上的操作)。

Convolutional predictors for detection Each added feature layer (or optionally an existing feature layer from the base network) can produce a fixed set of detection predictions using a set of convolutional filters. These are indicated on top of the SSD network architecture in Fig. 2. For a feature layer of size m×n with p channels, the basic element for predicting parameters of a potential detection is a 3×3×p small kernel that produces either a score for a category, or a shape offset relative to the default box coordinates. At each of the m×n locations where the kernel is applied, it produces an output value. The bounding box offset output values are measured relative to a default box position relative to each feature map location (cf the architecture of YOLO[5] that uses an intermediate fully connected layer instead of a convolutional filter for this step).

Fig. 2: A comparison between two single shot detection models: SSD and YOLO [5]. Our SSD model adds several feature layers to the end of a base network, which predict the offsets to default boxes of different scales and aspect ratios and their associated confidences. SSD with a 300 × 300 input size significantly outperforms its 448 × 448 YOLO counterpart in accuracy on VOC2007 test while also improving the speed.

用于检测的卷积预测器。每个添加的特征层(或者任选的来自基础网络的现有特征层)可以使用一组卷积滤波器产生固定的检测预测集合。这些在图2中的SSD网络架构的上部指出。对于具有 p 通道的大小为 m×n 的特征层,潜在检测的预测参数的基本元素是 3×3×p 的小核得到某个类别的分数,或者相对于默认框坐标的形状偏移。在应用卷积核的 m×n 的每个位置,它会产生一个输出值。边界框偏移输出值是相对每个特征映射位置的相对默认框位置来度量的(查阅YOLO[5]的架构,该步骤使用中间全连接层而不是卷积滤波器)。

图2:两个单次检测模型的比较:SSD和YOLO[5]。我们的SSD模型在基础网络的末端添加了几个特征层,它预测了不同尺度和长宽比的默认边界框的偏移量及其相关的置信度。300×300输入尺寸的SSD在VOC2007 test上的准确度上明显优于448×448的YOLO的准确度,同时也提高了速度。

Default boxes and aspect ratios We associate a set of default bounding boxes with each feature map cell, for multiple feature maps at the top of the network. The default boxes tile the feature map in a convolutional manner, so that the position of each box relative to its corresponding cell is fixed. At each feature map cell, we predict the offsets relative to the default box shapes in the cell, as well as the per-class scores that indicate the presence of a class instance in each of those boxes. Specifically, for each box out of k at a given location, we compute c class scores and the 4 offsets relative to the original default box shape. This results in a total of (c+4)k filters that are applied around each location in the feature map, yielding (c+4)kmn outputs for a m×n feature map. For an illustration of default boxes, please refer to Fig.1. Our default boxes are similar to the anchor boxes used in Faster R-CNN[2], however we apply them to several feature maps of different resolutions. Allowing different default box shapes in several feature maps let us efficiently discretize the space of possible output box shapes.

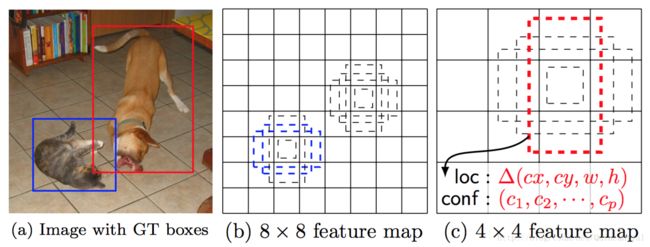

Fig. 1: SSD framework. (a) SSD only needs an input image and ground truth boxes for each object during training. In a convolutional fashion, we evaluate a small set (e.g. 4) of default boxes of different aspect ratios at each location in several feature maps with different scales (e.g. 8 × 8 and 4 × 4 in (b) and (c)). For each default box, we predict both the shape offsets and the confidences for all object categories ( (c1,c2,…,cp) ). At training time, we first match these default boxes to the ground truth boxes. For example, we have matched two default boxes with the cat and one with the dog, which are treated as positives and the rest as negatives. The model loss is a weighted sum between localization loss (e.g. Smooth L1 [6]) and confidence loss (e.g. Softmax).

默认边界框和长宽比。对于网络顶部的多个特征映射,我们将一组默认边界框与每个特征映射单元相关联。默认边界框以卷积的方式平铺特征映射,以便每个边界框相对于其对应单元的位置是固定的。在每个特征映射单元中,我们预测单元中相对于默认边界框形状的偏移量,以及指出每个边界框中存在的每个类别实例的类别分数。具体而言,对于给定位置处的 k 个边界框中的每一个,我们计算 c 个类别分数和相对于原始默认边界框形状的 4 个偏移量。这导致在特征映射中的每个位置周围应用总共 (c+4)k 个滤波器,对于 m×n 的特征映射取得 (c+4)kmn 个输出。有关默认边界框的说明,请参见图1。我们的默认边界框与Faster R-CNN[2]中使用的锚边界框相似,但是我们将它们应用到不同分辨率的几个特征映射上。在几个特征映射中允许不同的默认边界框形状让我们有效地离散可能的输出框形状的空间。

图1:SSD框架。(a)在训练期间,SSD仅需要每个目标的输入图像和真实边界框。以卷积方式,我们评估具有不同尺度(例如(b)和(c)中的8×8和4×4)的几个特征映射中每个位置处不同长宽比的默认框的小集合(例如4个)。对于每个默认边界框,我们预测所有目标类别( (c1,c2,…,cp) )的形状偏移量和置信度。在训练时,我们首先将这些默认边界框与实际的边界框进行匹配。例如,我们已经与猫匹配两个默认边界框,与狗匹配了一个,这被视为积极的,其余的是消极的。模型损失是定位损失(例如,Smooth L1[6])和置信度损失(例如Softmax)之间的加权和。

2.2 Training

The key difference between training SSD and training a typical detector that uses region proposals, is that ground truth information needs to be assigned to specific outputs in the fixed set of detector outputs. Some version of this is also required for training in YOLO[5] and for the region proposal stage of Faster R-CNN[2] and MultiBox[7]. Once this assignment is determined, the loss function and back propagation are applied end-to-end. Training also involves choosing the set of default boxes and scales for detection as well as the hard negative mining and data augmentation strategies.

2.2 训练

训练SSD和训练使用区域提出的典型检测器之间的关键区别在于,需要将真实信息分配给固定的检测器输出集合中的特定输出。在YOLO[5]的训练中、Faster R-CNN[2]和MultiBox[7]的区域提出阶段,一些版本也需要这样的操作。一旦确定了这个分配,损失函数和反向传播就可以应用端到端了。训练也涉及选择默认边界框集合和缩放进行检测,以及难例挖掘和数据增强策略。

Matching strategy During training we need to determine which default boxes correspond to a ground truth detection and train the network accordingly. For each ground truth box we are selecting from default boxes that vary over location, aspect ratio, and scale. We begin by matching each ground truth box to the default box with the best jaccard overlap (as in MultiBox [7]). Unlike MultiBox, we then match default boxes to any ground truth with jaccard overlap higher than a threshold (0.5). This simplifies the learning problem, allowing the network to predict high scores for multiple overlapping default boxes rather than requiring it to pick only the one with maximum overlap.

匹配策略。在训练过程中,我们需要确定哪些默认边界框对应实际边界框的检测,并相应地训练网络。对于每个实际边界框,我们从默认边界框中选择,这些框会在位置,长宽比和尺度上变化。我们首先将每个实际边界框与具有最好的Jaccard重叠(如MultiBox[7])的边界框相匹配。与MultiBox不同的是,我们将默认边界框匹配到Jaccard重叠高于阈值(0.5)的任何实际边界框。这简化了学习问题,允许网络为多个重叠的默认边界框预测高分,而不是要求它只挑选具有最大重叠的一个边界框。

注:Jaccard重叠即IoU。

鉴于CSDN出现排版错乱问题,请看http://noahsnail.com/2017/12/11/2017-12-11-Single%20Shot%20MultiBox%20Detector%E8%AE%BA%E6%96%87%E7%BF%BB%E8%AF%91%E2%80%94%E2%80%94%E4%B8%AD%E8%8B%B1%E6%96%87%E5%AF%B9%E7%85%A7/