Installing Hive

Configuring Hive

Create the directories /tmp and /user/hive/warehouse in HDFS.

First, start Hadoop; the hdfs dfs commands below need a running HDFS.
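One way to do this step, sketched under the assumption of a Hadoop 2.x install with $HADOOP_HOME/sbin (start-dfs.sh, start-yarn.sh) and the JDK's jps on PATH. The function name start_hadoop_if_needed is hypothetical; it starts HDFS and YARN only when no NameNode JVM is listed, then waits for the NameNode to leave safe mode so that the following mkdir calls are not rejected.

```shell
# Hypothetical helper: start HDFS and YARN unless a NameNode is already running.
# Assumes start-dfs.sh, start-yarn.sh, hdfs and jps are all on PATH.
start_hadoop_if_needed() {
  # jps lists running JVMs; -w keeps "SecondaryNameNode" from counting as a match.
  if jps | grep -qw NameNode; then
    echo "NameNode already running"
  else
    start-dfs.sh
    start-yarn.sh
  fi
  # Block until HDFS leaves safe mode, since writes are refused while it is on.
  hdfs dfsadmin -safemode wait
}
```

Usage: run start_hadoop_if_needed once in the shell before the mkdir commands.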

hdfs dfs -mkdir /tmp

hdfs dfs -mkdir /user

hdfs dfs -mkdir /user/hive

hdfs dfs -mkdir /user/hive/warehouse

Give the new directories group write permission:

hadoop fs -chmod g+w /tmp

hadoop fs -chmod g+w /user/hive/warehouse
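The commands above can be collapsed into a re-runnable sketch. It relies on the -p option of hdfs dfs -mkdir, which creates missing parent directories and does not fail if a directory already exists; the function name create_hive_hdfs_dirs is hypothetical, and hdfs is assumed to be on PATH.

```shell
# Hypothetical helper: create the HDFS directories Hive needs, then make them group-writable.
# -p creates parents (/user, /user/hive) and tolerates directories that already exist.
create_hive_hdfs_dirs() {
  hdfs dfs -mkdir -p /tmp /user/hive/warehouse
  hdfs dfs -chmod g+w /tmp /user/hive/warehouse
}
```

Because of -p, calling create_hive_hdfs_dirs a second time is harmless, unlike the plain mkdir sequence, which reports an error for directories that already exist.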

Copy the MySQL JDBC driver jar, mysql-connector-java-5.1.39-bin.jar, into Hive's lib directory.
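A minimal sketch of the copy step. The helper name install_mysql_driver is hypothetical, and both arguments are assumptions: the jar's download location and the Hive install directory (this guide's paths suggest /usr/tools/apache-hive-2.1.0-bin).

```shell
# Hypothetical helper: copy the MySQL Connector/J jar into $hive_home/lib.
# $1 = path to the jar, $2 = Hive install directory (both assumed, adjust to your layout).
install_mysql_driver() {
  local jar="$1" hive_home="$2"
  # Fail early with a clear message instead of letting cp report a vaguer error.
  if [ ! -f "$jar" ]; then
    echo "driver jar not found: $jar" >&2
    return 1
  fi
  cp "$jar" "$hive_home/lib/"
}
```

Usage: install_mysql_driver ./mysql-connector-java-5.1.39-bin.jar /usr/tools/apache-hive-2.1.0-bin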

Go to Hive's conf directory, copy hive-default.xml.template, and name the copy hive-site.xml:

cp hive-default.xml.template hive-site.xml

Open the new file in vi:

vi hive-site.xml

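Change the following properties to the values shown (search for each name with / in vi; if the first match is not the right one, press n to jump to the next):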

| Property | Value | Description |
| --- | --- | --- |
| javax.jdo.option.ConnectionURL | jdbc:mysql://127.0.0.1:3306/hive?createDatabaseIfNotExist=true | JDBC connect string for a JDBC metastore |
| javax.jdo.option.ConnectionDriverName | com.mysql.jdbc.Driver | Driver class name for a JDBC metastore |
| javax.jdo.option.ConnectionUserName | root | Username to use against metastore database |
| javax.jdo.option.ConnectionPassword | sa | password to use against metastore database |
| hive.exec.local.scratchdir | /usr/tools/apache-hive-2.1.0-bin/tmp | Local scratch space for Hive jobs |
| hive.downloaded.resources.dir | /usr/tools/apache-hive-2.1.0-bin/tmp/resources | Temporary local directory for added resources in the remote file system. |
| hive.querylog.location | /usr/tools/apache-hive-2.1.0-bin/tmp | Location of Hive run time structured log file |
| hive.server2.logging.operation.log.location | /usr/tools/apache-hive-2.1.0-bin/tmp/operation_logs | Top level directory where operation logs are stored if logging functionality is enabled |
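In hive-site.xml each of these settings is a property element with a name and a value inside a configuration root. As an alternative to searching the full template in vi, the sketch below writes a minimal hive-site.xml containing only the properties listed above, relying on Hive's built-in defaults for everything else. The helper names hive_prop and write_hive_site are hypothetical, the description elements are omitted for brevity, and values are written verbatim (an & in a value, for example a second JDBC URL parameter, would have to be escaped as &amp;).

```shell
# Hypothetical helper: print one <property> element. Values are not XML-escaped.
hive_prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Hypothetical helper: write a minimal hive-site.xml with the values from the table to $1.
write_hive_site() {
  local out="$1"
  local hive_tmp=/usr/tools/apache-hive-2.1.0-bin/tmp
  {
    echo '<?xml version="1.0" encoding="UTF-8" standalone="no"?>'
    echo '<configuration>'
    hive_prop javax.jdo.option.ConnectionURL 'jdbc:mysql://127.0.0.1:3306/hive?createDatabaseIfNotExist=true'
    hive_prop javax.jdo.option.ConnectionDriverName com.mysql.jdbc.Driver
    hive_prop javax.jdo.option.ConnectionUserName root
    hive_prop javax.jdo.option.ConnectionPassword sa
    hive_prop hive.exec.local.scratchdir "$hive_tmp"
    hive_prop hive.downloaded.resources.dir "$hive_tmp/resources"
    hive_prop hive.querylog.location "$hive_tmp"
    hive_prop hive.server2.logging.operation.log.location "$hive_tmp/operation_logs"
    echo '</configuration>'
  } > "$out"
}
```

Usage: write_hive_site "$HIVE_HOME/conf/hive-site.xml" (HIVE_HOME is assumed to point at the Hive install; the call overwrites any existing file, so keep a backup).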

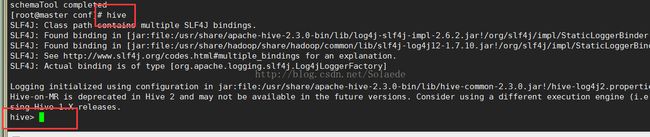

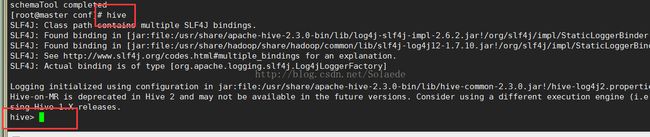

Initialize the metastore schema with schematool:

schematool -initSchema -dbType mysql
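A sketch that makes this step safe to re-run, assuming $HIVE_HOME/bin is on PATH. It relies on schematool's -info option, which prints the schema version and is expected to exit non-zero when the metastore schema does not exist yet; -initSchema, by contrast, is expected to fail against a database that is already initialized. The function name init_metastore_if_needed is hypothetical.

```shell
# Hypothetical helper: run -initSchema only when -info cannot find a schema.
init_metastore_if_needed() {
  if schematool -dbType mysql -info >/dev/null 2>&1; then
    echo "metastore schema already initialized"
  else
    schematool -dbType mysql -initSchema
  fi
}
```

Note that -info also fails when MySQL is unreachable; in that case -initSchema fails too and prints the connection error.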

Start the Hive CLI:

hive

If you see the screen below, Hive has been installed successfully!
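Besides checking the interactive screen, the installation can be verified non-interactively. This sketch uses hive -e, which runs the given statement and exits; a freshly initialized metastore should list the built-in default database. The function name hive_smoke_test is hypothetical, and hive is assumed to be on PATH.

```shell
# Hypothetical helper: succeed if "show databases;" lists the built-in "default" database.
hive_smoke_test() {
  # Log output goes to stderr and is discarded; query results arrive on stdout.
  if hive -e 'show databases;' 2>/dev/null | grep -qw default; then
    echo "Hive OK"
  else
    echo "Hive smoke test failed" >&2
    return 1
  fi
}
```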