hadoop2.6.5 HDFS的高可用集群搭建

1.先来看一下要搭建hadoop集群的HDFS HA结构图

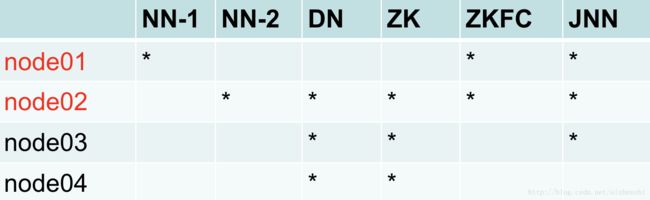

2.要配置的HDFS节点分布图

从分布图上可以看到,节点1和节点2作为namenode,节点2、3、4作为datanode,节点2、3、4作为Zookeeper,节点1和2作为ZKFC,节点1、2、3作为JournalNode。

3.准备4台Server

4.配置Zookeeper

1)下载Zookerper的jar包,下载链接如下:http://download.csdn.net/detail/aizhenshi/9857485

2)下载完成后,放到node2相应目录下,,一般放在和hadoop同级的目录里,然后解压。解压完成后,进入conf文件,执行命令:

cp zoo_sample.cfg zoo.cfg复制该文件。

vi zoo.cfg

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/var/zk

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server.1=node2:2888:3888

server.2=node3:2888:3888

server.3=node4:2888:3888其中dataDir=/var/zk,是Zookeeper的工作目录,server.1=node2:2888:3888,server.2=node3:2888:3888,server.3=node4:2888:3888是Zookeeper要部署在那些服务器上。zk这个目录是要手动创建的。执行以下命令:

mkdir zk

cd zk

echo 1 > myid把Zookeeper文件目录拷贝到其他两个节点上

cp -r zookeeper-3.4/ node3:`pwd`/zookeeper-3.4

cp -r zookeeper-3.4/ node4:`pwd`/zookeeper-3.4在node3执行:

mkdir zk

cd zk

echo 2 > myid在node4执行:

mkdir zk

cd zk

echo 3 > myid进入到zookeeper-3.4目录下的bin目录,并执行:

[root@node2 zookeeper-3.4]# cd bin

[root@node2 bin]# ./zkServer.sh start所有部署了Zookeeper的节点(这里包括node2、node3、node4)都要执行./zkServer.sh start命令。

可使用./zkServer.sh status查看状态。

5.配置hdfs-site.xml

<configuration>

<property>

<name>dfs.replicationname>

<value>3value>

property>

<property>

<name>dfs.nameservicesname>

<value>myclustervalue>

property>

<property>

<name>dfs.ha.namenodes.myclustername>

<value>nn1,nn2value>

property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn1name>

<value>node1:8020value>

property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn2name>

<value>node2:8020value>

property>

<property>

<name>dfs.namenode.http-address.mycluster.nn1name>

<value>node1:50070value>

property>

<property>

<name>dfs.namenode.http-address.mycluster.nn2name>

<value>node2:50070value>

property>

<property>

<name>dfs.namenode.shared.edits.dirname>

<value>qjournal://node1:8485;node2:8485;node3:8485/myclustervalue>

property>

<property>

<name>dfs.client.failover.proxy.provider.myclustername>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvidervalue>

property>

<property>

<name>dfs.ha.fencing.methodsname>

<value>sshfencevalue>

property>

<property>

<name>dfs.ha.fencing.ssh.private-key-filesname>

<value>/root/.ssh/id_dsavalue>

property>

<property>

<name>dfs.ha.automatic-failover.enabledname>

<value>truevalue>

property>

configuration>6.配置core-site.xml文件

<configuration>

<property>

<name>fs.defaultFSname>

<value>hdfs://myclustervalue>

property>

<property>

<name>hadoop.tmp.dirname>

<value>/var/hadoop-2.6/havalue>

property>

<property>

<name>ha.zookeeper.quorumname>

<value>node2:2181,node3:2181,node4:2181value>

property>

configuration>7.启动zookeeper集群

避免出现未知的问题,先查看一下部署zookeeper的节点上是否有zookeeper的进程,如果有的话,kill掉zookeeper进程。

在节点2、3、4上执行命令:

zkServer.sh start8.启动JournalNode进程

在节点1、2、3上执行命令:

hadoop-daemon.sh start journalnode9.格式化namenode,并启动hdfs集群

在节点1上执行命令:

hdfs namenode –format

hadoop-deamon.sh start namenode通知节点2作为Standby的namenode,执行命令:

hdfs namenode –bootstrapStandby启动hdfs集群,在节点1上执行命令:

start-dfs.sh10.启动zkfc

先格式化zkfc,在节点1上执行命令:

hdfs zkfc -formatZK启动zkfc,需要在所有namenode节点启动zkfc,执行以下命令:

hadoop-daemon.sh start zkfc至此,一个高可用的hdfs集群就搭建完毕了!