Introduction to MongoDB

Using MongoDB

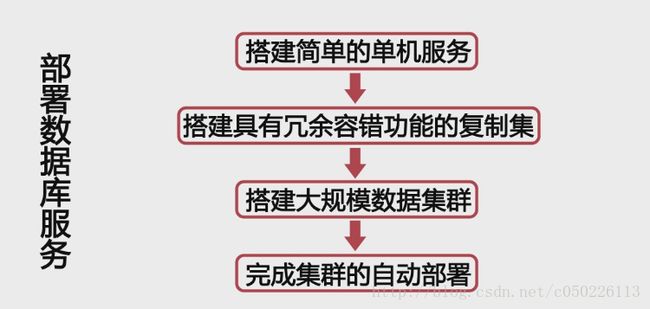

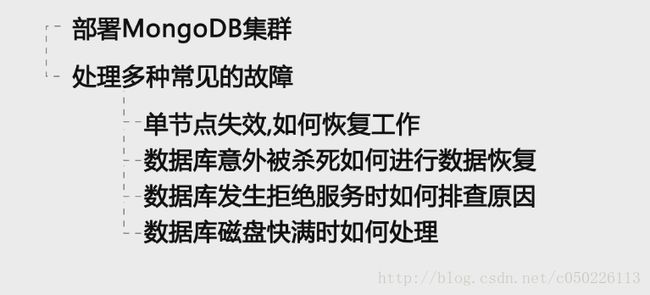

Operating MongoDB

MongoDB documentation: http://docs.mongoing.com/manual-zh/sharding.html

MongoDB replica sets and sharding

MongoDB replica sets

Purpose: provide data redundancy and improve availability.

Concepts

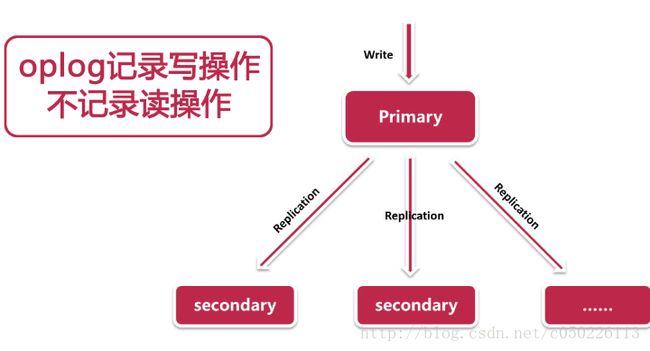

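What a replica set is

A replica set evolved from the traditional master-slave architecture. It is a cluster made up of a group of mongod instances that all hold the same data set.

Characteristics of a replica set: there is exactly one primary at any time, but which node holds that role is not fixed. Only the primary accepts writes; secondaries serve reads only. When the primary is the only node left in the set, and so can no longer see a majority of the members, it steps down to secondary; the set then stops accepting writes and is read-only.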

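A replica set contains two kinds of nodes: data nodes and voting nodes.

Data nodes store data and can act as either primary or secondary.

Voting nodes (arbiters) take part in elections only; they store no data and can act as neither primary nor secondary.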
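The step-down behaviour and the role of voting nodes both come down to a majority rule: a primary can exist only while more than half of the voting members can see each other. The following is a minimal sketch in plain JavaScript, assuming every member (data node or arbiter) has exactly one vote. The function names `majority` and `canHavePrimary` are hypothetical and are not part of MongoDB.

```javascript
// Simplified model of the majority rule; not MongoDB's implementation.
// votingMembers: total number of voting members configured in the set.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// reachableVoters: members that can see each other, counting the node itself.
function canHavePrimary(votingMembers, reachableVoters) {
  return reachableVoters >= majority(votingMembers);
}

console.log(majority(3));          // 2
console.log(canHavePrimary(3, 2)); // true: one node down, writes continue
console.log(canHavePrimary(3, 1)); // false: a lone node becomes read-only
```

Under this model, adding an arbiter to a two-node set raises the member count to three, so the set can lose one node and still keep a primary.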

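Installing and starting a single mongod instance

The detailed installation steps are not covered here; other blog posts describe them.

Change into the bin directory.

With these programs in place, use mongod to start a server instance.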

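Some commonly used options listed by ./mongod -h:

--port arg: port to listen on, 27017 by default; changing it is recommended for both management and security reasons

--bind_ip arg: listen only on the given IP address

--logpath arg: path of the log file

--logappend: append to the log file instead of overwriting it

--pidfilepath arg: path of the PID file, which makes management scripts easier

--fork: run the server as a background (daemon) process

--auth: enable authentication

--slowms arg: threshold in milliseconds above which an operation is logged as slow

--profile arg: profiling level, 0=off 1=slow 2=all

--syncdelay arg: interval in seconds between flushes to disk

--oplogSize arg: size of the oplog in megabytes

--replSet arg: name of the replica set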
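To make the relationship between these options and a command line concrete, here is a small sketch in plain JavaScript that turns an options object into mongod arguments. The helper `toMongodArgs` is hypothetical, and the sample values are taken from the configuration used later in this post.

```javascript
// Hypothetical helper: turn an options object into mongod command-line arguments.
// A value of true becomes a bare flag (e.g. --fork); other values follow the flag name.
function toMongodArgs(options) {
  const args = [];
  for (const [name, value] of Object.entries(options)) {
    if (value === false || value === undefined || value === null) continue;
    args.push("--" + name);
    if (value !== true) args.push(String(value));
  }
  return args;
}

const args = toMongodArgs({
  port: 28001,
  bind_ip: "192.168.31.219",
  logpath: "/usr/local/mongodb/log/28001.log",
  logappend: true,
  fork: true,
  replSet: "gegege",
});
console.log("./mongod " + args.join(" "));
```

The same settings can also be written one per line in a configuration file and passed with -f, which is the approach used in the rest of this post.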

[root@MiWiFi-R1CM bin]# ./mongod -f ../conf/mongod.conf

about to fork child process, waiting until server is ready for connections.

forked process: 13905

child process started successfully, parent exiting

The contents of the conf file are covered later.

Next, deploy a replica set.

Create a new virtual machine and connect to it.

host1:192.168.31.219

[root@MiWiFi-R1CM ~]# ifconfig

enp0s3: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.31.219 netmask 255.255.255.0 broadcast 192.168.31.255

inet6 fe80::a00:27ff:fe5f:664b prefixlen 64 scopeid 0x20

ether 08:00:27:5f:66:4b txqueuelen 1000 (Ethernet)

RX packets 67 bytes 10597 (10.3 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 50 bytes 11397 (11.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Write three configuration files, 28001.conf, 28002.conf, and 28003.conf, one per instance, each listening on its own port. The contents of 28001.conf:

port=28001

bind_ip=192.168.31.219

dbpath=/usr/local/mongodb/data/28001/

logpath=/usr/local/mongodb/log/28001.log

logappend=true

pidfilepath=/usr/local/mongodb/data/28001/mongo.pid

fork=true

oplogSize=1024

replSet=gegege

Start the three instances:

[root@MiWiFi-R1CM bin]# numactl --interleave=all ./mongod -f ../conf/28001.conf

about to fork child process, waiting until server is ready for connections.

forked process: 2098

child process started successfully, parent exiting

[root@MiWiFi-R1CM bin]# ./mongod -f ../conf/28002.conf

about to fork child process, waiting until server is ready for connections.

forked process: 2121

child process started successfully, parent exiting

[root@MiWiFi-R1CM bin]# ./mongod -f ../conf/28003.conf

about to fork child process, waiting until server is ready for connections.

forked process: 2144

child process started successfully, parent exiting
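Only 28001.conf is shown in this post. The sketch below generates all three files in plain JavaScript, on the assumption that 28002.conf and 28003.conf differ from 28001.conf only in the port number and the paths derived from it. The helper `renderConf` is hypothetical.

```javascript
// Hypothetical helper: render the ini-style config from this post for a given port.
// Assumption: the other two files follow the same pattern as 28001.conf.
function renderConf(port) {
  const base = "/usr/local/mongodb";
  return [
    `port=${port}`,
    "bind_ip=192.168.31.219",
    `dbpath=${base}/data/${port}/`,
    `logpath=${base}/log/${port}.log`,
    "logappend=true",
    `pidfilepath=${base}/data/${port}/mongo.pid`,
    "fork=true",
    "oplogSize=1024",
    "replSet=gegege",
  ].join("\n");
}

for (const port of [28001, 28002, 28003]) {
  console.log(`# ${port}.conf\n${renderConf(port)}\n`);
}
```

All three instances share the same replSet name, which is what lets them join one replica set.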

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 883/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1456/master

tcp 0 0 192.168.31.219:28001 0.0.0.0:* LISTEN 2098/./mongod

tcp 0 0 192.168.31.219:28002 0.0.0.0:* LISTEN 2121/./mongod

tcp 0 0 192.168.31.219:28003 0.0.0.0:* LISTEN 2144/./mongod

Open three clients to test the connections.

First, connect with a client to the instance on port 28001:

> use admin

> rs.initiate()

{

"info2" : "no configuration specified. Using a default configuration for the set",

"me" : "192.168.31.219:28001",

"ok" : 1

}

gegege:PRIMARY> rs.add('192.168.31.219:28002')

{ "ok" : 1 }

gegege:PRIMARY> rs.add('192.168.31.219:28003')

{ "ok" : 1 }

gegege:PRIMARY> rs.status()

{

"set" : "gegege",

"date" : ISODate("2017-01-15T06:48:07.306Z"),

"myState" : 1,

"term" : NumberLong(1),

"heartbeatIntervalMillis" : NumberLong(2000),

"members" : [

{

"_id" : 0,

"name" : "192.168.31.219:28001",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 76075,

"optime" : {

"ts" : Timestamp(1484462881, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2017-01-15T06:48:01Z"),

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1484462773, 2),

"electionDate" : ISODate("2017-01-15T06:46:13Z"),

"configVersion" : 3,

"self" : true

},

{

"_id" : 1,

"name" : "192.168.31.219:28002",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 7,

"optime" : {

"ts" : Timestamp(1484462881, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2017-01-15T06:48:01Z"),

"lastHeartbeat" : ISODate("2017-01-15T06:48:05.699Z"),

"lastHeartbeatRecv" : ISODate("2017-01-15T06:48:06.695Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.31.219:28001",

"configVersion" : 3

},

{

"_id" : 2,

"name" : "192.168.31.219:28003",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 5,

"optime" : {

"ts" : Timestamp(1484462881, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2017-01-15T06:48:01Z"),

"lastHeartbeat" : ISODate("2017-01-15T06:48:05.698Z"),

"lastHeartbeatRecv" : ISODate("2017-01-15T06:48:03.718Z"),

"pingMs" : NumberLong(0),

"configVersion" : 3

}

],

"ok" : 1

}
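The session above initiates the set with a default configuration and then adds the other two members one at a time. An alternative is to pass an explicit configuration document to rs.initiate(). The sketch below builds such a document in plain JavaScript; the helper `buildReplSetConfig` is hypothetical, and only the `_id` and `members` fields are filled in.

```javascript
// Build a replica set configuration document for the three instances in this post.
function buildReplSetConfig(name, hosts) {
  return {
    _id: name, // must match the replSet value in the config files
    members: hosts.map((host, i) => ({ _id: i, host: host })),
  };
}

const cfg = buildReplSetConfig("gegege", [
  "192.168.31.219:28001",
  "192.168.31.219:28002",
  "192.168.31.219:28003",
]);
console.log(JSON.stringify(cfg, null, 2));
// In the mongo shell, the document would be passed as: rs.initiate(cfg)
```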

gegege:PRIMARY> use ljm

gegege:PRIMARY> db.ljm.insert({"name":"lalala"})

WriteResult({ "nInserted" : 1 })

This inserts one document on the primary.

Switch to a secondary:

gegege:SECONDARY> rs.slaveOk(1)

gegege:SECONDARY> use ljm

switched to db ljm

gegege:SECONDARY> db.ljm.find()

{ "_id" : ObjectId("587b1ca519254fceb471077e"), "name" : "lalala" }

Configuration keys of a replica set member:

_id, host, arbiterOnly, priority, hidden, votes, slaveDelay, buildIndexes

newconf = rs.conf();

newconf.members[0].priority=5

rs.reconfig(newconf)

gegege:PRIMARY> use local

switched to db local

gegege:PRIMARY> db.oplog.find()

gegege:PRIMARY> db.oplog.rs.find()

{ "ts" : Timestamp(1484462773, 1), "h" : NumberLong("3501916664279793709"), "v" : 2, "op" : "n", "ns" : "", "o" : { "msg" : "initiating set" } }

{ "ts" : Timestamp(1484462774, 1), "t" : NumberLong(1), "h" : NumberLong("8730864384105453179"), "v" : 2, "op" : "n", "ns" : "", "o" : { "msg" : "new primary" } }

{ "ts" : Timestamp(1484462879, 1), "t" : NumberLong(1), "h" : NumberLong("-3557419064867120732"), "v" : 2, "op" : "n", "ns" : "", "o" : { "msg" : "Reconfig set", "version" : 2 } }

{ "ts" : Timestamp(1484462881, 1), "t" : NumberLong(1), "h" : NumberLong("5364589923283900903"), "v" : 2, "op" : "n", "ns" : "", "o" : { "msg" : "Reconfig set", "version" : 3 } }

{ "ts" : Timestamp(1484463269, 1), "t" : NumberLong(1), "h" : NumberLong("326681565887499179"), "v" : 2, "op" : "c", "ns" : "ljm.$cmd", "o" : { "create" : "ljm" } }

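{ "ts" : Timestamp(1484463269, 2), "t" : NumberLong(1), "h" : NumberLong("-7126353079720928060"), "v" : 2, "op" : "i", "ns" : "ljm.ljm", "o" : { "_id" : ObjectId("587b1ca519254fceb471077e"), "name" : "lalala" } }

Changing the oplog size of a member takes three steps:

1: Restart the instance in standalone mode. Before shutting down a primary, it is usual to run rs.stepDown() to force it to become a secondary.

2: Recreate the oplog with the new size, seeded with the most recent entry from the old oplog.

3: Restart mongod as a member of the replica set.

Procedure: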

1>: Restart a Secondary in Standalone Mode on a Different Port

Shut down the mongod instance:

repset:PRIMARY> use admin

repset:PRIMARY> db.shutdownServer()

Restart the mongod instance in standalone mode: change the port and do not pass the --replSet option.

# vim /etc/mongo.conf

dbpath=/var/lib/mongodb

logpath=/var/log/mongodb/mongo.log

pidfilepath=/var/run/mongo.pid

directoryperdb=true

logappend=true

#replSet=repset

bind_ip=192.168.1.100,127.0.0.1

port=37017

oplogSize=2000

fork=true

# mongod -f /etc/mongo.conf

Back up the oplog:

# mongodump --db local --collection 'oplog.rs' --port 37017

2>: Recreate the Oplog with a New Size and a Seed Entry

Save the most recent oplog entry:

use local

db.temp.save( db.oplog.rs.find( { }, { ts: 1, h: 1 } ).sort( {$natural : -1} ).limit(1).next() )

db.temp.find()

Drop the old oplog:

db.oplog.rs.drop()
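The query in this step sorts by $natural in descending order, takes one entry, and projects only ts and h. A plain-JavaScript model of that selection, run against sample data rather than a real oplog, may make the intent clearer. The function `latestSeedEntry` is hypothetical, and the sample entries simplify ts to a `{ t, i }` pair and h to a string.

```javascript
// Model of the seed query: take the last entry in natural (insertion) order
// and keep only the projected fields ts and h.
function latestSeedEntry(oplogEntries) {
  if (oplogEntries.length === 0) return null;
  const last = oplogEntries[oplogEntries.length - 1];
  return { ts: last.ts, h: last.h };
}

// Sample data shaped like the last two oplog entries printed earlier in this post.
const entries = [
  { ts: { t: 1484463269, i: 1 }, h: "326681565887499179", op: "c", ns: "ljm.$cmd" },
  { ts: { t: 1484463269, i: 2 }, h: "-7126353079720928060", op: "i", ns: "ljm.ljm" },
];
console.log(latestSeedEntry(entries));
```

The saved entry records where the old oplog ended, so it has to be captured before the old collection is dropped.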

3>: Create a New Oplog

Create a new oplog with a size of 2 GB:

db.runCommand( { create: "oplog.rs", capped: true, size: (2 * 1024 * 1024 * 1024) } )

Insert the entry saved earlier from the old oplog:

db.oplog.rs.save( db.temp.findOne() )

db.oplog.rs.find()
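One detail worth checking in this step is units: the size field of the create command is in bytes, while oplogSize in the configuration file is in megabytes. The short sketch below, in plain JavaScript with hypothetical helper names, converts between the two so the values can be compared.

```javascript
const BYTES_PER_MB = 1024 * 1024;

// oplogSize in the config file is given in megabytes.
function oplogSizeMbToBytes(mb) {
  return mb * BYTES_PER_MB;
}

// The size field of the create command is given in bytes.
function bytesToOplogSizeMb(bytes) {
  return bytes / BYTES_PER_MB;
}

console.log(bytesToOplogSizeMb(2 * 1024 * 1024 * 1024)); // 2048
console.log(oplogSizeMbToBytes(2000));                    // 2097152000
```

So the 2 GB collection created here corresponds to 2048 MB, slightly more than the oplogSize=2000 written in the configuration file.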

4>:Restart the Member:

Shut down the standalone instance:

use admin

db.shutdownServer()

Restore the configuration, re-enabling replSet:

# vim /etc/mongo.conf

dbpath=/var/lib/mongodb

logpath=/var/log/mongodb/mongo.log

pidfilepath=/var/run/mongo.pid

directoryperdb=true

logappend=true

replSet=repset

bind_ip=192.168.1.100,127.0.0.1

port=37017

oplogSize=2000

fork=true

Start mongod:

# mongod -f /etc/mongo.conf

Repeat these steps on every member that needs the change.

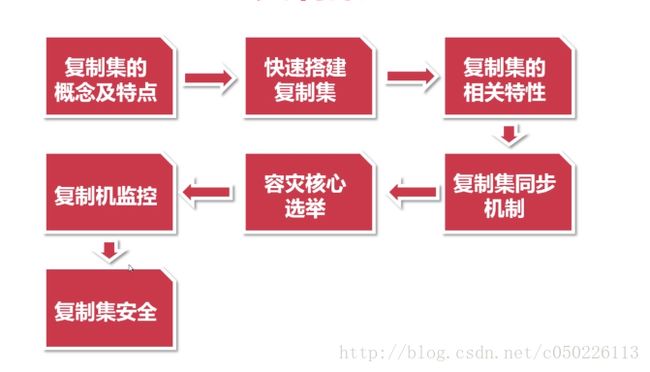

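Replica set failover and election (election is one part of failover, but failover is more than election; the fundamental goal of failover is to keep the cluster healthy)

1. Heartbeat detection

2. Maintaining the list of primary candidates

3. Election preparation

4. Voting

(Heartbeat detection: each node learns the state of every other node in the cluster, for example when some nodes become unreachable or a new node joins.

Secondary check: provided a majority of members is reachable, a secondary adds itself to the list of primary candidates depending on its priority, whether it is an arbiter, and whether its optime is within 10 s of the latest.

Primary check: provided a majority of members is reachable, the node checks whether two or more primaries exist and picks the appropriate one according to priority.

Election preparation: when the cluster has no primary and a majority of members is reachable, a node marks itself as primary if it has acquired the election lock and its optime is the most recent.)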
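The checks described above can be sketched as a small model in plain JavaScript. This is a simplification of the description in this post, not MongoDB's actual election code: the functions `isCandidate` and `pickPrimary`, the `reachable` flag, and the use of plain seconds for optime are all assumptions made for illustration.

```javascript
// Simplified model of the candidate check described above.
const MAX_LAG_SECONDS = 10;

function isCandidate(node, members) {
  const reachable = members.filter((m) => m.reachable);
  const hasMajority = reachable.length >= Math.floor(members.length / 2) + 1;
  const newestOptime = Math.max(...reachable.map((m) => m.optime));
  return (
    hasMajority &&
    !node.arbiterOnly &&
    node.priority > 0 &&
    newestOptime - node.optime <= MAX_LAG_SECONDS
  );
}

// Among reachable candidates, prefer higher priority, then the more recent optime.
function pickPrimary(members) {
  const candidates = members.filter((m) => m.reachable && isCandidate(m, members));
  candidates.sort((a, b) => b.priority - a.priority || b.optime - a.optime);
  return candidates.length > 0 ? candidates[0].host : null;
}

const members = [
  { host: "192.168.31.219:28001", priority: 5, optime: 1484462881, arbiterOnly: false, reachable: false },
  { host: "192.168.31.219:28002", priority: 1, optime: 1484462881, arbiterOnly: false, reachable: true },
  { host: "192.168.31.219:28003", priority: 1, optime: 1484462875, arbiterOnly: false, reachable: true },
];
console.log(pickPrimary(members)); // 192.168.31.219:28002
```

In this example the old primary on port 28001 is unreachable, two of three members still form a majority, and the member with the more recent optime wins the tie on priority.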

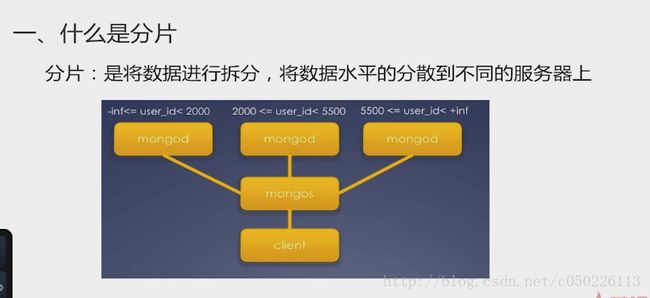

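shard nodes

Nodes that store the data (each one a single mongod or a replica set).

config server

Stores the metadata (the information used to locate data) on behalf of mongos, which uses it to route data to the shards.

mongos

Accepts requests from the front end and routes each one to the appropriate shard.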
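How the three components fit together can be illustrated with a toy router in plain JavaScript. The chunk table below stands in for the metadata kept by the config server, and `routeToShard` stands in for the lookup mongos performs; the ranges, the shard assignments, and the function name are invented for illustration.

```javascript
// Toy chunk table, standing in for the metadata held by the config server.
// Each chunk covers shard key values in [min, max) and lives on one shard.
const chunks = [
  { min: -Infinity, max: 100, shard: "shard0000" },
  { min: 100, max: 200, shard: "shard0001" },
  { min: 200, max: Infinity, shard: "shard0002" },
];

// What a router does conceptually: find the chunk whose range contains the key.
function routeToShard(chunkTable, shardKeyValue) {
  const chunk = chunkTable.find((c) => shardKeyValue >= c.min && shardKeyValue < c.max);
  return chunk ? chunk.shard : null;
}

console.log(routeToShard(chunks, 42));  // shard0000
console.log(routeToShard(chunks, 150)); // shard0001
console.log(routeToShard(chunks, 200)); // shard0002
```

The point of the split is that the shards never need to know about each other: only the router consults the metadata.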

Deploy four shards and a config server on 192.168.31.159.

Start the four shard nodes:

[root@MiWiFi-R1CM bin]#

./mongod --shardsvr --logpath=/opt/log/s1.log --logappend --dbpath=/opt/data/s1 --fork --port 27017

./mongod --shardsvr --logpath=/opt/log/s2.log --logappend --dbpath=/opt/data/s2 --fork --port 27018

./mongod --shardsvr --logpath=/opt/log/s3.log --logappend --dbpath=/opt/data/s3 --fork --port 27019

./mongod --shardsvr --logpath=/opt/log/s4.log --logappend --dbpath=/opt/data/s4 --fork --port 27020

tcp 0 0 0.0.0.0:27017 0.0.0.0:* LISTEN 3350/./mongod

tcp 0 0 0.0.0.0:27018 0.0.0.0:* LISTEN 3368/./mongod

tcp 0 0 0.0.0.0:27019 0.0.0.0:* LISTEN 3394/./mongod

tcp 0 0 0.0.0.0:27020 0.0.0.0:* LISTEN 3412/./mongod

[root@MiWiFi-R1CM bin]# ./mongod --port 27100 --dbpath=/opt/config --logpath=/opt/log/config.log --logappend --fork

about to fork child process, waiting until server is ready for connections.

forked process: 3791

child process started successfully, parent exiting

Start a mongos node on 192.168.31.228:

[root@MiWiFi-R1CM bin]#

./mongos --port 27017 --logappend --logpath=/opt/log/mongos.log --configdb 192.168.31.159:27100 --fork

2017-01-15T20:38:49.859+0800 W SHARDING [main] Running a sharded cluster with fewer than 3 config servers should only be done for testing purposes and is not recommended for production.

about to fork child process, waiting until server is ready for connections.

forked process: 3391

child process started successfully, parent exiting

Add the shards:

[root@MiWiFi-R1CM bin]# ./mongo 127.0.0.1:27017

MongoDB shell version: 3.2.9

connecting to: 127.0.0.1:27017/test

mongos> use admin

switched to db admin

mongos> db.runCommand({"addShard":"192.168.31.159:27017"})

{ "shardAdded" : "shard0000", "ok" : 1 }

mongos> db.runCommand({"addShard":"192.168.31.159:27018"})

{ "shardAdded" : "shard0001", "ok" : 1 }

mongos> db.runCommand({"addShard":"192.168.31.159:27019"})

{ "shardAdded" : "shard0002", "ok" : 1 }

mongos> db.runCommand({"addShard":"192.168.31.159:27020"})

{ "shardAdded" : "shard0003", "ok" : 1 }
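The four addShard commands above differ only in the port. As a small sketch in plain JavaScript, the command documents can be generated in a loop; the helper `addShardCommands` is hypothetical.

```javascript
// Build one addShard command document per shard port on the given host.
function addShardCommands(host, ports) {
  return ports.map((port) => ({ addShard: `${host}:${port}` }));
}

const commands = addShardCommands("192.168.31.159", [27017, 27018, 27019, 27020]);
for (const cmd of commands) {
  // In a mongo shell connected to mongos, each document would be passed to db.runCommand(cmd).
  console.log(JSON.stringify(cmd));
}
```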

mongos> db.runCommand({ enablesharding:"ljm" })

{ "ok" : 1 }

mongos> db.runCommand({ shardcollection: "ljm.user", key: { _id: "hashed" } })

{ "collectionsharded" : "ljm.user", "ok" : 1 }

Sharding test:

mongos> for(var i=0;i<10000;i++){db.user.insert({user_id:i%200,time:ISODate(),size:1,type:2})}

WriteResult({ "nInserted" : 1 })

db.runCommand({enablesharding:"ljm"})

db.runCommand({shardcollection:"ljm.user",key:{_id:1}})
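This post shows two shard key choices for ljm.user: a hashed key on _id and a ranged key on _id. The toy comparison below, in plain JavaScript, illustrates why the choice matters when keys increase monotonically. The hash is a simple multiplicative hash chosen for the sketch and is not the hash MongoDB uses; the function names and the fixed count of four shards are likewise assumptions.

```javascript
const SHARDS = 4;

// Ranged placement: split the id range into SHARDS contiguous ranges.
function rangedShard(id, maxId) {
  return Math.min(SHARDS - 1, Math.floor((id * SHARDS) / maxId));
}

// Toy multiplicative hash (NOT the hash MongoDB uses), reduced to its top 2 bits.
function hashedShard(id) {
  const h = (id * 2654435761) % 4294967296; // keep the low 32 bits
  return Math.floor(h / 1073741824);        // divide by 2^30: top 2 bits pick 1 of 4 shards
}

// Count how many distinct shards receive the 1000 most recent ids (9000..9999).
function recentWriteTargets(place) {
  const hit = new Set();
  for (let id = 9000; id < 10000; id++) hit.add(place(id));
  return hit.size;
}

console.log(recentWriteTargets((id) => rangedShard(id, 10000))); // 1
console.log(recentWriteTargets(hashedShard));                     // 4
```

With a ranged key, the newest ids all land in the last range and therefore on one shard; with a hashed key, consecutive ids are scattered across all shards.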

db.user.stats()

{

"sharded" : true,

"capped" : false,

"ns" : "ljm.user",

"count" : 10000,

"size" : 810000,

"storageSize" : 262144,

"totalIndexSize" : 94208,

"indexSizes" : {

"_id_" : 94208

},

"avgObjSize" : 81,

"nindexes" : 1,

"nchunks" : 1,

"shards" : {

"shard0000" : {

"ns" : "ljm.user",

"count" : 10000,

"size" : 810000,

"avgObjSize" : 81,

"storageSize" : 262144,

"capped" : false,

"wiredTiger" : {

"metadata" : {

"formatVersion" : 1

},

"creationString" : "allocation_size=4KB,app_metadata=(formatVersion=1),block_allocation=best,block_compressor=snappy,cache_resident=0,checksum=on,colgroups=,collator=,columns=,dictionary=0,encryption=(keyid=,name=),exclusive=0,extractor=,format=btree,huffman_key=,huffman_value=,immutable=0,internal_item_max=0,internal_key_max=0,internal_key_truncate=,internal_page_max=4KB,key_format=q,key_gap=10,leaf_item_max=0,leaf_key_max=0,leaf_page_max=32KB,leaf_value_max=64MB,log=(enabled=),lsm=(auto_throttle=,bloom=,bloom_bit_count=16,bloom_config=,bloom_hash_count=8,bloom_oldest=0,chunk_count_limit=0,chunk_max=5GB,chunk_size=10MB,merge_max=15,merge_min=0),memory_page_max=10m,os_cache_dirty_max=0,os_cache_max=0,prefix_compression=0,prefix_compression_min=4,source=,split_deepen_min_child=0,split_deepen_per_child=0,split_pct=90,type=file,value_format=u",

"type" : "file",

"uri" : "statistics:table:collection-2--3716031408657191999",

"LSM" : {

"bloom filter false positives" : 0,

"bloom filter hits" : 0,

"bloom filter misses" : 0,

"bloom filter pages evicted from cache" : 0,

"bloom filter pages read into cache" : 0,

"bloom filters in the LSM tree" : 0,

"chunks in the LSM tree" : 0,

"highest merge generation in the LSM tree" : 0,

"queries that could have benefited from a Bloom filter that did not exist" : 0,

"sleep for LSM checkpoint throttle" : 0,

"sleep for LSM merge throttle" : 0,

"total size of bloom filters" : 0

},

"block-manager" : {

"allocations requiring file extension" : 33,

"blocks allocated" : 33,

"blocks freed" : 0,

"checkpoint size" : 253952,

"file allocation unit size" : 4096,

"file bytes available for reuse" : 0,

"file magic number" : 120897,

"file major version number" : 1,

"file size in bytes" : 262144,

"minor version number" : 0

},

"btree" : {

"btree checkpoint generation" : 3,

"column-store fixed-size leaf pages" : 0,

"column-store internal pages" : 0,

"column-store variable-size RLE encoded values" : 0,

"column-store variable-size deleted values" : 0,

"column-store variable-size leaf pages" : 0,

"fixed-record size" : 0,

"maximum internal page key size" : 368,

"maximum internal page size" : 4096,

"maximum leaf page key size" : 2867,

"maximum leaf page size" : 32768,

"maximum leaf page value size" : 67108864,

"maximum tree depth" : 3,

"number of key/value pairs" : 0,

"overflow pages" : 0,

"pages rewritten by compaction" : 0,

"row-store internal pages" : 0,

"row-store leaf pages" : 0

},

"cache" : {

"bytes read into cache" : 0,

"bytes written from cache" : 863082,

"checkpoint blocked page eviction" : 0,

"data source pages selected for eviction unable to be evicted" : 0,

"hazard pointer blocked page eviction" : 0,

"in-memory page passed criteria to be split" : 0,

"in-memory page splits" : 0,

"internal pages evicted" : 0,

"internal pages split during eviction" : 0,

"leaf pages split during eviction" : 0,

"modified pages evicted" : 0,

"overflow pages read into cache" : 0,

"overflow values cached in memory" : 0,

"page split during eviction deepened the tree" : 0,

"page written requiring lookaside records" : 0,

"pages read into cache" : 0,

"pages read into cache requiring lookaside entries" : 0,

"pages requested from the cache" : 10004,

"pages written from cache" : 32,

"pages written requiring in-memory restoration" : 0,

"unmodified pages evicted" : 0

},

"compression" : {

"compressed pages read" : 0,

"compressed pages written" : 31,

"page written failed to compress" : 0,

"page written was too small to compress" : 1,

"raw compression call failed, additional data available" : 0,

"raw compression call failed, no additional data available" : 0,

"raw compression call succeeded" : 0

},

"cursor" : {

"bulk-loaded cursor-insert calls" : 0,

"create calls" : 2,

"cursor-insert key and value bytes inserted" : 831426,

"cursor-remove key bytes removed" : 0,

"cursor-update value bytes updated" : 0,

"insert calls" : 10000,

"next calls" : 101,

"prev calls" : 1,

"remove calls" : 0,

"reset calls" : 10003,

"restarted searches" : 0,

"search calls" : 0,

"search near calls" : 0,

"truncate calls" : 0,

"update calls" : 0

},

"reconciliation" : {

"dictionary matches" : 0,

"fast-path pages deleted" : 0,

"internal page key bytes discarded using suffix compression" : 30,

"internal page multi-block writes" : 0,

"internal-page overflow keys" : 0,

"leaf page key bytes discarded using prefix compression" : 0,

"leaf page multi-block writes" : 1,

"leaf-page overflow keys" : 0,

"maximum blocks required for a page" : 31,

"overflow values written" : 0,

"page checksum matches" : 0,

"page reconciliation calls" : 2,

"page reconciliation calls for eviction" : 0,

"pages deleted" : 0

},

"session" : {

"object compaction" : 0,

"open cursor count" : 2

},

"transaction" : {

"update conflicts" : 0

}

},

"nindexes" : 1,

"totalIndexSize" : 94208,

"indexSizes" : {

"_id_" : 94208

},

"ok" : 1

}

},

"ok" : 1

}
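The stats output is long, but the part that matters for this test is short: the collection has a single chunk ("nchunks" : 1) and all 10000 documents sit on shard0000. A small plain-JavaScript sketch can pull that summary out of a stats document; the helper `shardDistribution` is hypothetical, and the sample object is a trimmed copy of the output above containing only the fields the function reads.

```javascript
// Summarize how documents are spread across shards from a stats() result.
function shardDistribution(stats) {
  const result = {};
  for (const [shard, s] of Object.entries(stats.shards)) {
    result[shard] = { count: s.count, percent: (100 * s.count) / stats.count };
  }
  return result;
}

// Trimmed copy of the output above.
const stats = {
  ns: "ljm.user",
  count: 10000,
  nchunks: 1,
  shards: { shard0000: { count: 10000 } },
};
console.log(shardDistribution(stats));
// { shard0000: { count: 10000, percent: 100 } }
```

A result like this means the inserted data has not been spread across the four shards in this test.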