Spark SQL: Temporary Views

A temporary view is one of the ways Spark SQL lets you query data with native SQL.

Both a DataFrame and a Dataset can be exposed as a view by registering them as a temporary view.
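The examples in this post rely on a `conf` value and a `Person` type that are never shown. A minimal sketch of that setup might look like the following. The local master and the application name are assumptions for illustration, and the `Person` fields are inferred from the column listing printed in Example 1 (name, age, job).

```scala
import org.apache.spark.SparkConf
import org.apache.spark.sql.SparkSession

// Assumed shape of Person: field names and types follow the catalog output in Example 1.
case class Person(name: String, age: Int, job: String)

object TempViewSetup {
  // Assumption: a local run; the master URL and app name are illustrative only.
  val conf: SparkConf = new SparkConf()
    .setMaster("local[*]")
    .setAppName("temp-view-demo")

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().config(conf).getOrCreate()
    // Nothing has been registered yet, so this prints an empty table listing.
    spark.catalog.listTables().show()
    spark.close()
  }
}
```

Defining `Person` at the top level (not inside a method) matters, because Spark derives the schema and encoder from the case class.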

Example 1: Creating a view from a DataFrame

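// `conf` (a SparkConf) and the `Person` case class are assumed to be defined elsewhere.
val spark = SparkSession.builder().config(conf).getOrCreate()
// The catalog is empty before any view is registered.
spark.catalog.listTables().show()
// createOrReplaceTempView returns Unit, so its result is not assigned to a val.
spark.createDataFrame(List(Person("Jason", 34, "DBA"), Person("Tom", 20, "Dev"))).createOrReplaceTempView("V_Person")
// The view now shows up as a TEMPORARY table, along with its columns.
spark.catalog.listTables().show()
spark.catalog.listColumns("V_Person").show()
spark.close()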

Output:

+----+--------+-----------+---------+-----------+
|name|database|description|tableType|isTemporary|
+----+--------+-----------+---------+-----------+
+----+--------+-----------+---------+-----------+

+--------+--------+-----------+---------+-----------+
|    name|database|description|tableType|isTemporary|
+--------+--------+-----------+---------+-----------+
|v_person|    null|       null|TEMPORARY|       true|
+--------+--------+-----------+---------+-----------+

+----+-----------+--------+--------+-----------+--------+
|name|description|dataType|nullable|isPartition|isBucket|
+----+-----------+--------+--------+-----------+--------+
|name|       null|  string|    true|      false|   false|
| age|       null|     int|    true|      false|   false|
| job|       null|  string|    true|      false|   false|
+----+-----------+--------+--------+-----------+--------+
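The `isTemporary` flag above reflects that the view belongs to the session that registered it. The sketch below is one way to check that scope. It assumes a local master, and the `Person` definition and the object and method names are illustrative, not from the original post.

```scala
import org.apache.spark.sql.SparkSession

// Assumed shape of Person, matching the columns listed above.
case class Person(name: String, age: Int, job: String)

object TempViewScope {
  // Returns (visible in the owning session, visible in a new session, visible after drop).
  def check(spark: SparkSession): (Boolean, Boolean, Boolean) = {
    spark.createDataFrame(List(Person("Jason", 34, "DBA"), Person("Tom", 20, "Dev")))
      .createOrReplaceTempView("V_Person")
    val inOwner = spark.catalog.tableExists("V_Person")
    // newSession() shares the SparkContext but keeps its own temporary views.
    val inOther = spark.newSession().catalog.tableExists("V_Person")
    // dropTempView removes the view from the owning session's catalog.
    spark.catalog.dropTempView("V_Person")
    val afterDrop = spark.catalog.tableExists("V_Person")
    (inOwner, inOther, afterDrop)
  }

  def main(args: Array[String]): Unit = {
    // Assumption: local master for a quick experiment.
    val spark = SparkSession.builder().master("local[*]").appName("temp-view-scope").getOrCreate()
    println(check(spark))
    spark.close()
  }
}
```

The view is also gone once the session is closed, so nothing needs to be cleaned up on disk.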

Example 2: Spark SQL supports advanced queries such as aggregation and analytic (window) functions

spark.sql("SELECT * FROM V_Person").show()
spark.sql("SELECT count(1) FROM V_Person").show()
spark.sql("SELECT name, age, job, rank() OVER (PARTITION BY name ORDER BY age) AS rk FROM V_Person").show()

Output (the view here holds the three rows registered in Example 3):

+-----+---+-------+
| name|age|    job|
+-----+---+-------+
|Jason| 34|BigData|
|  Tom| 20|    Dev|
|Jason| 28|    DBA|
+-----+---+-------+

+--------+
|count(1)|
+--------+
|       3|
+--------+

+-----+---+-------+---+
| name|age|    job| rk|
+-----+---+-------+---+
|Jason| 28|    DBA|  1|
|Jason| 34|BigData|  2|
|  Tom| 20|    Dev|  1|
+-----+---+-------+---+
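The queries above cover a plain aggregate and a window function, but none of them uses GROUP BY. A minimal sketch of a grouped query over the same three rows could look like this. The local master, the `Person` definition, and the object and method names are assumptions for illustration.

```scala
import org.apache.spark.sql.SparkSession

// Assumed shape of Person, matching the columns in the output above.
case class Person(name: String, age: Int, job: String)

object GroupByOnView {
  // Registers the three sample rows as V_Person and counts rows per name.
  def countsByName(spark: SparkSession): Seq[(String, Long)] = {
    import spark.implicits._
    Seq(Person("Jason", 34, "BigData"), Person("Tom", 20, "Dev"), Person("Jason", 28, "DBA"))
      .toDS()
      .createOrReplaceTempView("V_Person")
    // ORDER BY makes the row order deterministic; count(*) comes back as a Long.
    spark.sql("SELECT name, count(*) AS cnt FROM V_Person GROUP BY name ORDER BY name")
      .as[(String, Long)]
      .collect()
      .toSeq
  }

  def main(args: Array[String]): Unit = {
    // Assumption: local master for a quick experiment.
    val spark = SparkSession.builder().master("local[*]").appName("group-by-view").getOrCreate()
    countsByName(spark).foreach(println)
    spark.close()
  }
}
```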

Example 3: Creating a view from a Dataset

val spark = SparkSession.builder().config(conf).getOrCreate()
spark.catalog.listTables().show()
// Brings the implicit Encoder[Person] into scope for createDataset.
import spark.implicits._
// createDataset already returns a Dataset[Person], so no extra .as[Person] is needed.
spark.createDataset(List(Person("Jason", 34, "BigData"), Person("Tom", 20, "Dev"), Person("Jason", 28, "DBA"))).createOrReplaceTempView("V_Person")
spark.catalog.listTables().show()
spark.catalog.listColumns("V_Person").show()
spark.sql("SELECT * FROM V_Person").show()
spark.sql("SELECT count(1) FROM V_Person").show()
spark.sql("SELECT name, age, job, rank() OVER (PARTITION BY name ORDER BY age) AS rk FROM V_Person").show()
spark.close()

Output (query results only):

+-----+---+-------+
| name|age|    job|
+-----+---+-------+
|Jason| 34|BigData|
|  Tom| 20|    Dev|
|Jason| 28|    DBA|
+-----+---+-------+

+--------+
|count(1)|
+--------+
|       3|
+--------+

+-----+---+-------+---+
| name|age|    job| rk|
+-----+---+-------+---+
|Jason| 28|    DBA|  1|
|Jason| 34|BigData|  2|
|  Tom| 20|    Dev|  1|
+-----+---+-------+---+
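For comparison, the same ranking can be computed directly on the Dataset with the window API, without registering a view. This is only a sketch of the equivalent call chain, not something the original post does. The local master, the `Person` definition, and the object and method names are assumptions.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.expressions.Window
import org.apache.spark.sql.functions.rank

// Assumed shape of Person, matching the columns in the output above.
case class Person(name: String, age: Int, job: String)

object RankWithoutView {
  // Equivalent of: rank() OVER (PARTITION BY name ORDER BY age)
  def ranked(spark: SparkSession): Seq[(String, Int, Int)] = {
    import spark.implicits._
    val ds = Seq(Person("Jason", 34, "BigData"), Person("Tom", 20, "Dev"), Person("Jason", 28, "DBA")).toDS()
    val byNameOrderedByAge = Window.partitionBy("name").orderBy("age")
    ds.withColumn("rk", rank().over(byNameOrderedByAge))
      .select("name", "age", "rk")
      .orderBy("name", "age") // deterministic row order for the collected result
      .as[(String, Int, Int)]
      .collect()
      .toSeq
  }

  def main(args: Array[String]): Unit = {
    // Assumption: local master for a quick experiment.
    val spark = SparkSession.builder().master("local[*]").appName("rank-without-view").getOrCreate()
    ranked(spark).foreach(println)
    spark.close()
  }
}
```

The temporary view is the better fit when the query already exists as SQL text; the window API keeps everything in typed Scala code.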

Writing a DataFrame result set to a file

val spark = SparkSession.builder().config(conf).getOrCreate()
import spark.implicits._
spark.createDataset(List(Person("Jason", 34, "BigData"), Person("Tom", 20, "Dev"), Person("Jason", 28, "DBA"))).createOrReplaceTempView("V_Person")
// spark.sql already returns a DataFrame, so no toDF() call is needed.
val df = spark.sql("SELECT name, age, job, rank() OVER (PARTITION BY name ORDER BY age) AS rk FROM V_Person")
// Writes '#'-separated CSV. Spark creates a directory at this path containing part files.
df.write.format("csv").option("sep", "#").save("file:///d://result_jason.csv")
spark.close()

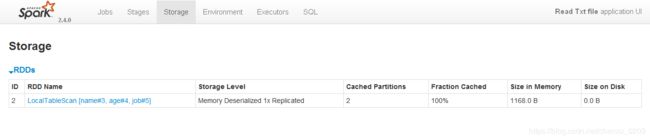
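To read such output back, the reader needs the same separator that was used when writing. The sketch below writes a small DataFrame and reads it again. It uses a temporary directory as a stand-in for the `d:` path, and the sample rows, column names, and object and method names are assumptions for illustration.

```scala
import java.nio.file.Files
import org.apache.spark.sql.SparkSession

object CsvRoundTrip {
  // Writes two rows as '#'-separated CSV and reads them back.
  def roundTrip(spark: SparkSession): Seq[(String, Int)] = {
    import spark.implicits._
    // Hypothetical output location: a fresh path inside a temporary directory.
    val out = Files.createTempDirectory("temp-view-csv").resolve("result").toString
    Seq(("Jason", 28), ("Tom", 20)).toDF("name", "age")
      .write.format("csv").option("sep", "#").save(out)
    // `out` is a directory of part files; without a header every column is read as a string.
    spark.read.option("sep", "#").csv(out)
      .toDF("name", "age")
      .select($"name", $"age".cast("int"))
      .orderBy("name")
      .as[(String, Int)]
      .collect()
      .toSeq
  }

  def main(args: Array[String]): Unit = {
    // Assumption: local master for a quick experiment.
    val spark = SparkSession.builder().master("local[*]").appName("csv-round-trip").getOrCreate()
    roundTrip(spark).foreach(println)
    spark.close()
  }
}
```

Because the default save mode fails when the target already exists, rerunning a write against a fixed path requires either removing the old output or choosing an explicit save mode.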

Caching a DataFrame result set in memory

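val spark = SparkSession.builder().config(conf).getOrCreate()
import spark.implicits._
val ds = spark.createDataset(List(Person("Jason", 34, "BigData"), Person("Tom", 20, "Dev"), Person("Jason", 28, "DBA")))
// cache() is lazy; it only marks the Dataset for caching.
ds.cache()
// An action such as count() actually materializes the cached data.
ds.count()
// Keep the application alive for 10 minutes so the web UI stays reachable.
Thread.sleep(600000)
spark.close()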

Check the cached data on the Storage tab of the Spark web UI:

http://10.70.64.37:4040/storage/
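The cache state can also be checked from code, without sleeping and opening the browser. The sketch below reads the Dataset's storage level after an action and prints the web UI address of the running application. The local master, the `Person` definition, and the object and method names are assumptions for illustration.

```scala
import org.apache.spark.sql.SparkSession

// Assumed shape of Person, matching the earlier examples.
case class Person(name: String, age: Int, job: String)

object CacheCheck {
  // Returns true if the Dataset is marked to use memory after cache() and an action.
  def cachedInMemory(spark: SparkSession): Boolean = {
    import spark.implicits._
    val ds = Seq(Person("Jason", 34, "BigData"), Person("Tom", 20, "Dev"), Person("Jason", 28, "DBA")).toDS()
    ds.cache()
    ds.count() // the action materializes the cache
    val inMemory = ds.storageLevel.useMemory
    ds.unpersist() // release the cached data once it is no longer needed
    inMemory
  }

  def main(args: Array[String]): Unit = {
    // Assumption: local master for a quick experiment.
    val spark = SparkSession.builder().master("local[*]").appName("cache-check").getOrCreate()
    // uiWebUrl is an Option[String]; it is empty when the UI is disabled.
    println(spark.sparkContext.uiWebUrl)
    println(cachedInMemory(spark))
    spark.close()
  }
}
```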