- Linear Regression and Logistic Regression with TensorFlow (Part 10)

lishaoan77

tensorflow, linear regression, tensorboard, visualization

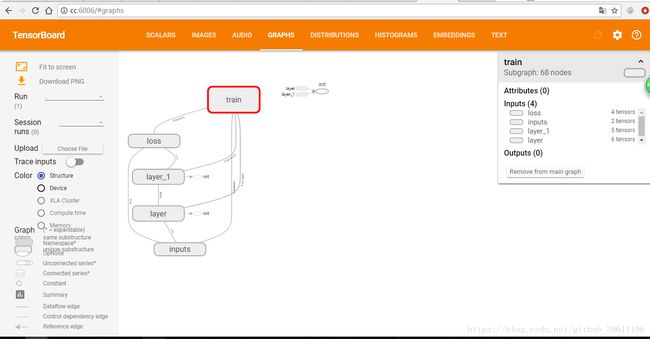

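Visualizing the linear regression model with TensorBoard: TensorBoard is a visualization tool for understanding, debugging, and optimizing the model training process. It reads the summary events written while the program runs. The model defined above uses tf.summary.FileWriter to write logs to the log directory /tmp/lr-train. We can launch TensorBoard on that log directory with the command in Example 3-13 (TensorBoard is installed by default along with TensorFlow). Ex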

- Reinforcement Learning on 16 GB in Practice: open-source projects and resources for a scheme that combines CQL (Conservative Q-Learning) with QLoRA (Quantized Low-Rank Adaptation) [AI tech]

行云流水AI笔记

open source, artificial intelligence

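Based on the CUDA version (11.5) and the NVIDIA driver error message you provided, here are compatible PyTorch and TensorFlow version recommendations and an environment fix. 1. Version compatibility table (framework, compatible CUDA versions, recommended install command for CUDA 11.5): PyTorch, 11.3/11.6, pip install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/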

- TensorFlow Serving Study Notes 3: How the Components Call Each Other

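1. Overall architecture: TensorFlow Serving uses a modular design. Its core components are: Servables: objects that can be served (such as models and lookup tables); Managers: manage the Servable lifecycle (loading/unloading); Loaders: handle Servable initialization and state management; Sources: provide Loaders for new Servable versions; AspiredVersions: the set of desired Servable states; Core: the central hub that connects all components; APIs: gR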

- [Frequently Tested Topics Explained] Front-End AI Integration in Practice: From TensorFlow.js to Model Deployment

全栈老李技术面试

front end, frequently tested topics explained, front end, javascript, html, css, interview questions, react, vue

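Front-End AI Integration in Practice: From TensorFlow.js to Model Deployment. Author: 全栈老李 (Full-Stack Lao Li). Updated: May 2025. Audience: front-end beginners and intermediate developers. Copyright: this is an original article by 全栈老李; please credit the source when reposting. Today let's talk about how front-end engineers can get hands-on with AI. That's right: you can do machine learning with nothing but JavaScript! I'm 全栈老李, a practitioner who likes to explain complex technology simply. Lately I've noticed that many front-end developers are both curious about AI and intimidated by it. It really isn't as hard as you think. Follow along and within 30 minutes you'll deploy your first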

- A Practical Guide to Color Channel Conversion Between OpenVINO and OpenCV

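The color channel order problem: converting between the RGB input that OpenVINO models expect and OpenCV's BGR format. In computer vision tasks, differences in channel order between frameworks often cause wrong inference results. The methods below address the case where an OpenVINO model needs RGB input but OpenCV produces BGR by default. Understanding the core difference: OpenCV's imread() returns pixels in BGR order, a legacy of the data format used by early camera hardware. Deep learning frameworks such as OpenVINO mostly use RGB order, consistent with TensorFlow/PyTor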
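The channel swap described above is simply a reversal of the last axis. The sketch below does this in plain Python and assumes an image stored as nested H × W × 3 lists. The helper name `bgr_to_rgb` is hypothetical. With OpenCV/NumPy arrays, the usual equivalents are `cv2.cvtColor(img, cv2.COLOR_BGR2RGB)` or `img[..., ::-1]`.

```python
def bgr_to_rgb(image):
    """Reverse the channel order of every pixel in an H x W x 3 nested list (hypothetical helper)."""
    return [[pixel[::-1] for pixel in row] for row in image]

# A 1x2 "image" in BGR order: a pure-blue pixel, then a pure-red pixel
bgr = [[[255, 0, 0], [0, 0, 255]]]
rgb = bgr_to_rgb(bgr)
print(rgb)  # [[[0, 0, 255], [255, 0, 0]]]  (blue and red, now in RGB order)
```

The swap is its own inverse: applying it twice returns the original image. A model fed BGR data by mistake therefore sees red and blue exchanged rather than noise, which is why the bug is easy to miss.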

- Python Check-in Training Camp, Day 41

珂宝_

python check-in training camp, python

import numpy as np

from tensorflow import keras

from tensorflow.keras import layers

(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()  # load and preprocess the data

x_train = x_train.reshape(-1, 28, 28, 1).astype("float32")

- Training Deep Learning Models with TensorFlow: Mastering Neural Network Construction and Optimization

瞎了眼的枸杞

deep learning, tensorflow, neural networks

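Introduction: Deep learning is an important branch of artificial intelligence. It tackles complex data representation and learning problems with neural networks loosely modeled on the structure of the human brain. TensorFlow, one of the most popular deep learning frameworks today, gives developers powerful tools and rich resources. This article shows how to train and optimize deep learning models with TensorFlow. Core concepts of TensorFlow. What is TensorFlow? Definition: TensorFlow is an open-source library for numerical computation, particularly well suited to large-scale machine lea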

- Implementing the Classic CNN AlexNet in TensorFlow

您懂我意思吧

python development, tensorflow, cnn, artificial intelligence, python

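1. Concept: AlexNet achieved a breakthrough top-5 error rate of 15.3% in the ILSVRC-2012 competition (the runner-up had 26.2%). It comes from Alex Krizhevsky's 2012 paper "ImageNet Classification with Deep Convolutional Neural Networks". That paper was a milestone and a watershed in the rapid rise of deep learning, and with hardware continuing to advance, deep learning is likely to keep booming. 2. AlexNet network structure: Because of the limitations of the time,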

- Introduction to TensorFlow Lite (TFLite) and PyTorch Mobile, Part 2

追心嵌入式

tensorflow, pytorch, artificial intelligence

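Below is a comparison of the two lightweight frameworks, TensorFlow Lite (TFLite) and PyTorch Mobile. It covers their core uses, typical application scenarios, and practical value in embedded development, with reference to your Orange Pi Zero 3 board. TensorFlow Lite (TFLite) core uses: Embedded inference: converts trained TensorFlow models into a lightweight format that runs efficiently on resource-constrained devices (such as phones, edge computing boxes, and the Orange Pi). Hardware acceleration: through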

- How to Use AI in Spring

Mn孟

spring, artificial intelligence, java, back end

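Spring is an open-source framework for building Java applications, and it can be integrated with many AI technologies. To use AI in Spring, first choose an AI technology, such as machine learning or natural language processing. Then build the application with Spring Boot and implement the AI features with a suitable framework or library. For example, you can use TensorFlow or PyTorch for machine learning, and NLTK or spaCy for natural language processing. You can also use Spring Cl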

- C++ (Personal Study Notes, Continuously Updated...)

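1. Getting to know C++. 1.1 Introduction to C++: C++ was created by Bjarne Stroustrup. It is compatible with C and adds object-oriented features. It also has a rich set of libraries: the Standard Template Library (STL) plus many third-party libraries. The STL provides containers such as set, map, and hash-based containers. Third-party libraries include Boost, the GUI library Qt, the image processing library OpenCV, and the machine learning library TensorFlow. These libraries give substantial support to embedded development. 1.2 Writing C++ programs: #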

- LSTM Price Prediction Model: Based on Technical Indicators and Market Sentiment Data

pk_xz123456

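simulation models, algorithms, deep learning, lstm, artificial intelligence, rnn, deep learning, programming languages, object detection, neural networks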

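LSTM Price Prediction Model: Based on Technical Indicators and Market Sentiment Data. 1. Model architecture design:

import numpy as np

import pandas as pd

import tensorflow as tf

from sklearn.preprocessing import StandardScaler

from tensorflow.keras.models import Sequential

from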

- Python Training Day 24: Tuples and the os Module

小暖星

python training, python, programming languages

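Tuple characteristics: 1. Tuples are ordered and allow duplicates, just like lists. 2. The elements of a tuple cannot be modified. This matters a lot: in deep learning, many parameters and shapes must not change after they are defined. Many popular ML/DL libraries (such as TensorFlow, PyTorch, and NumPy) use tuples throughout their APIs for shapes, configurations, and so on. In short, a tuple's key feature is that it adds immutability on top of what a list provides. Creating tuples: my_tuple1=(1,2,3) my_tuple2=('a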
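Here is a short sketch of the tuple properties listed above. The `shape` value is only an illustrative image shape, and the other names are made up for the example.

```python
shape = (28, 28, 1)  # e.g. an image shape that should stay fixed once defined

# Like lists, tuples are ordered, indexable, and may contain duplicates
print(shape[0], shape.count(28))  # 28 2

# Assigning to an element raises TypeError because tuples are immutable
try:
    shape[0] = 32
except TypeError as exc:
    print("cannot modify:", exc)

# To "change" a tuple, build a new one instead
new_shape = (32,) + shape[1:]
print(new_shape)  # (32, 28, 1)

# Tuples whose elements are hashable can be used as dict keys; lists cannot
layer_by_shape = {shape: "input"}
print(layer_by_shape[(28, 28, 1)])  # input
```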

- TensorFlow: The Architectural Philosophy of Deep Learning Infrastructure and Innovations in Engineering Practice

双囍菜菜

AI, deep learning, tensorflow, architecture

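TensorFlow: The Architectural Philosophy of Deep Learning Infrastructure and Innovations in Engineering Practice. Contents: 1. A revolution in computing paradigms: an in-depth look at the architecture, from static graphs to dynamic execution; 1.1 The compilation and optimization system for static computation graphs; 1.2 How dynamic graph (eager) mode is implemented; 1.3 How hybrid execution mode is compiled; 2. An in-depth look at the architecture of the tensor computation engine; 2.1 Core runtime components; 2.2 Computation graph optimization techniques; 2.3 Distributed training architecture; 3. Inside the implementation of the differentiable programming paradigm; 3.1 The automatic differentiation sys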

- Python for Business Data Analysis: Study Notes on Python Basics

爱吃代码的小皇冠

python, notes, algorithms, data structures

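1. Introduction. 1.1 Python features: Interpreted language: code runs without a separate compile step, which suits rapid development. Dynamic typing: variable types are determined at run time (for example, after x=1, x="str" is still valid). Object-oriented: supports classes, objects, inheritance, and so on, which makes code highly reusable. Concise syntax: code blocks are delimited by indentation, so fewer braces and other redundant symbols are needed. 1.2 Use cases: Data analysis: libraries such as Pandas and NumPy handle structured data. Artificial intelligence: TensorFlow and PyTorch are used to build machine learning models. Web development: Djan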

- How to Visualize Word Embeddings: Embedding Projector

ZhangJiQun&MXP

teaching, 2024 large models and compute, 2021 AI, python, embedding

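How to visualize word embeddings: Embedding Projector https://projector.tensorflow.org/ Embedding Projector is a tool for visualizing high-dimensional vector embeddings (such as word vectors and image feature vectors). It helps you understand the relationships between vectors. Below, using word vector analysis and **simple custom data (such as feature vectors)** as examples, I'll show how to use it: 1. Word vector analysis (using the Word2Vec data in the figure as an example). 1. Load the data and take a first look: the figure already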
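Two small pieces help when preparing custom data for the Projector. The sketch below uses made-up 3-dimensional vectors. First, it computes cosine similarity, one of the distance measures the Projector offers for nearest-neighbor lookups. Second, it writes two tab-separated files: one vector per line, plus an optional metadata file with one label per line in the same order. That file layout is my understanding of what the Projector's Load dialog accepts, so check the tool's own instructions before relying on it. All function names are illustrative.

```python
import math

# Toy 3-dimensional "embeddings"; real word vectors usually have hundreds of dimensions
embeddings = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.85, 0.82, 0.15],
    "apple": [0.1, 0.2, 0.95],
}

def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(word):
    """Return the other word whose vector is most similar by cosine similarity."""
    others = [w for w in embeddings if w != word]
    return max(others, key=lambda w: cosine_similarity(embeddings[word], embeddings[w]))

def write_projector_files(vectors_path, metadata_path):
    """Write one tab-separated vector per line, and one label per line in the same order."""
    with open(vectors_path, "w", encoding="utf-8") as vf, \
         open(metadata_path, "w", encoding="utf-8") as mf:
        for word, vec in embeddings.items():
            vf.write("\t".join(str(x) for x in vec) + "\n")
            mf.write(word + "\n")

print(nearest("king"))  # queen
```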

- Cross-stitch Networks for Multi-task Learning: Project Tutorial

童香莺Wyman

Cross-stitch Networks for Multi-task Learning project tutorial. Cross-stitch-Networks-for-Multi-task-Learning: A Tensorflow implementation of the paper arXiv:1604.03539. Project URL: https://gitcode.com/gh_mirrors/cr/Cross-stitch-Network

- Exploring a New Dimension of Multi-Task Learning: Cross-stitch Networks

计蕴斯Lowell

Exploring a new dimension of multi-task learning: Cross-stitch Networks. Cross-stitch-Networks-for-Multi-task-Learning: A Tensorflow implementation of the paper arXiv:1604.03539. Project URL: https://gitcode.com/gh_mirrors/cr/Cross-stitch-Networks-for-Multi-t

- TensorFlow Installation and GPU Driver Compatibility (H800)

weixin_44719529

tensorflow, neo4j, artificial intelligence

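Environment notes; installing TensorFlow and GPU driver compatibility; special considerations for CUDA on the H800; how to set environment variables in PyCharm and in the terminal; a Python script to test whether the GPU is available. # A complete guide to enabling GPU acceleration with TensorFlow 2.13 on an NVIDIA H800. When training deep learning models with TensorFlow, making full use of the GPU is essential. This article records, for a Linux environment with TensorFlow 2.13 and an NVIDIA H800 GPU, the complete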

- Installing CUDA, cuDNN, and tensorflow-gpu 1.13.1 as a Non-root User on a Server (Linux, Ubuntu 16.04)

码小花

model testing, environment setup

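1. Preparation (download the CUDA 10.0 and cuDNN installers). Check which versions of TensorFlow, CUDA, and cuDNN go together, then download matching versions. Download the CUDA 10.0 installer from the official website, choosing the build that matches your server, as shown in the figure below. The download gives you the installer cuda_10.0.130_410.48_linux.run. Download cuDNN, choosing the build for CUDA 10.0 (this requires registering and logging in to an NVIDIA account), click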

- How to Install TensorFlow and Configure the GPU

神隐灬

tensorflow learning, tensorflow, artificial intelligence, python

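After one of our research group's servers was upgraded, many environments were lost. The drivers for its four RTX 3090 GPUs were already installed, but there was no shared TensorFlow installation. So I worked out the TensorFlow installation myself, since waiting for the administrator to set up a shared environment could take forever... The server runs Linux (CentOS), and the GPUs are NVIDIA 3090s with drivers installed. You can see their details with the command: $ nvidia-smi Tue May 28 20:54:09 2024 +--------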

- Installing cuDNN as a Non-root User and Configuring TensorFlow to Use the GPU

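Installing cuDNN as a non-root user and configuring TensorFlow to use the GPU (using CUDA 11.5 as an example). Background: on research servers and other machines where you lack root access, you usually cannot install CUDA/cuDNN with apt or yum. Using CUDA 11.5 and cuDNN 8.3.3 as an example, this article shows how to download and configure cuDNN by hand so that TensorFlow detects the GPU and enables acceleration. Step 1: confirm that CUDA is installed: nvcc --version Sample output: Cuda com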

- Linear Regression and Logistic Regression with TensorFlow (Part 1)

lishaoan77

tensorflow, tensorflow, linear regression, logistic regression

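This chapter shows you how to build simple machine learning systems with TensorFlow. The first section reviews the fundamentals of building machine learning systems, in particular functions, continuity, and differentiability. We then introduce loss functions and discuss how machine learning ultimately comes down to the ability to find the points that minimize complicated loss functions. Next we cover gradient descent and explain how it minimizes the loss. Then we briefly discuss the algorithmic idea behind automatic differentiation. The second section focuses on the TensorFlow concepts built on these mathematical ideas, including placeholders, scopes, optimiz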
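The gradient descent idea described above can be sketched without TensorFlow. This minimal example assumes a one-feature linear model y ≈ w·x + b with mean squared error L = (1/n)·Σ(w·x_i + b − y_i)². It computes the partial derivatives by hand: ∂L/∂w = (2/n)·Σ(w·x_i + b − y_i)·x_i and ∂L/∂b = (2/n)·Σ(w·x_i + b − y_i). In TensorFlow, an optimizer would obtain these gradients through automatic differentiation instead. The data, learning rate, and step count are illustrative.

```python
def fit_linear_regression(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y ~ w*x + b by minimizing mean squared error with plain gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Residuals of the current model on every sample
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of L = (1/n) * sum(error^2)
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient to decrease the loss
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = fit_linear_regression(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

The learning rate has to be small enough for the loss surface. Much above roughly 0.15 on this data, the updates overshoot and diverge instead of converging.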

- A Full-Stack Java AI Platform in Practice, a Revolutionary Breakthrough from Model Training to Deployment: An In-Depth Look at Spring AI + Deeplearning4j + TensorFlow Java API

墨夶

Java learning materials 3, java, artificial intelligence, spring

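1. Background and requirements: why a Java-driven AI platform? A medical imaging company faced these challenges. Fragmented multi-language development: models were trained in Python, inference was deployed in C++, and services were called from Java, which made maintenance expensive. Inefficient deployment: PyTorch models had to be converted to ONNX by hand, and TensorRT optimization took 2 hours per model. Poor real-time performance: video stream analysis had 3 seconds of latency, too slow for emergency use. With a full-stack Java AI platform, we achieved: End-to-end development: Java calls PyTorch to train models, direct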

- Program Code Series: ESP32-S3 Xiaozhi (小智) Firmware

Atticus-Orion

deep learning series, program code series, host computer series, AI, ESP32-S3 Xiaozhi

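Q1: What is the overall architecture of the ESP32-S3 Xiaozhi voice dialogue system? A1: The system is a pipeline: voice capture → wake-word detection → ASR → NLP → TTS → voice playback. Hardware layer: the ESP32-S3 chip, microphones (such as the INMP441 I2S MEMS microphone), and a speaker driven by an amplifier (such as the MAX98357A I2S amplifier). Driver layer: I2S, ADC, and DAC drivers provided by the ESP-IDF or Arduino framework. Algorithm layer: wake-word detection based on MicroML (for example, TensorFlow Lite Micro).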

- Faster R-CNN Pretrained Models: A Detailed Faster-RCNN + TensorFlow Training Walkthrough (with GitHub Source Code)

weixin_39958631

faster rcnn, pretrained models

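Images are from the internet. 1. Training platform: Ryzen 5 3600, RTX 2060 Super, 16 GB RAM. 2. Source code: https://github.com/dBeker/Faster-RCNN-TensorFlow-Python3 3. Download the source with git: git clone https://github.com/dBeker/Faster-RCNN-TensorFlow-Python3.git Overall project code structure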

- Linear Regression with TensorFlow

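Inverting a matrix with TensorFlow; Matrix decomposition with TensorFlow; Linear regression with TensorFlow; Understanding loss functions in linear regression; Deming regression with TensorFlow; Lasso regression and ridge regression with TensorFlow; Elastic net regression with TensorFlow; Logistic regression with TensorFlow. Contents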

- Getting Started with TensorFlow.js [Plan - June - Week 3]

kuiini

Plan, artificial intelligence, tensorflow, artificial intelligence

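1. TensorFlow.js: TensorFlow.js is the JavaScript implementation of TensorFlow. It supports training and deploying machine learning models in the browser or in Node.js. 1. What can TensorFlow.js do? Train machine learning models in the browser; load and use existing models (TensorFlow SavedModel, Keras models, TensorFlow Hub, and so on); train and deploy models in Node.js; convert models from Python Te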

- TensorFlow GPU Training: Large Gap Between loss and val_loss

LXJSWD

tensorflow, artificial intelligence, python

Problem: I recently trained a model on an Ubuntu GPU machine for ten epochs, with the following results:

epoch,loss,lr,val_loss

200,nan,0.001,nan

200,0.002468767808750272,0.001,44.29948425292969

201,0.007177405059337616,0.001,49.16984176635742

202,0.012423301115632057,0.001,49.30

- Huffman Tree Compression in Python: Huffman Trees and a Python Implementation

七十二便

python, huffman tree compression

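I've recently been reading the RNN chapter of *TensorFlow实战* (TensorFlow in Practice), where the word2vec discussion involves Huffman trees. After reading many blog posts (listed at the end), I organized the concepts in my own words (piecing together my notes...), and finally implemented Huffman tree construction and encoding myself in Python. Basic concepts of Huffman trees: Path and path length: the branches between one node and another in a tree form the path between those two nodes. The number of branches on the path is called the path length, and it equals the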
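Below is a compact Python sketch of building the tree and reading off the codes. It uses a min-heap to repeatedly merge the two lightest subtrees, with left edge = 0 and right edge = 1. This is the standard textbook construction, not necessarily the author's implementation. Tie-breaking, and therefore the exact codes, may differ from other versions, but the total encoded length is optimal either way.

```python
import heapq
from collections import Counter
from itertools import count

def huffman_codes(text):
    """Build a Huffman tree for the characters in text and return {char: bit string}."""
    freq = Counter(text)
    if not freq:
        return {}
    if len(freq) == 1:
        # Degenerate case: a single distinct symbol still needs a 1-bit code
        return {next(iter(freq)): "0"}
    tiebreak = count()  # unique counter so the heap never has to compare nodes
    # Heap entries are (weight, tiebreak, node); a leaf is a char, an internal node is a (left, right) tuple
    heap = [(w, next(tiebreak), ch) for ch, w in freq.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # Merge the two lightest subtrees into a new internal node
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        heapq.heappush(heap, (w1 + w2, next(tiebreak), (left, right)))

    codes = {}

    def walk(node, prefix):
        if isinstance(node, tuple):
            walk(node[0], prefix + "0")  # left edge = 0
            walk(node[1], prefix + "1")  # right edge = 1
        else:
            codes[node] = prefix

    walk(heap[0][2], "")
    return codes

codes = huffman_codes("aaaabbc")
encoded = "".join(codes[ch] for ch in "aaaabbc")
print(codes, len(encoded))  # {'c': '00', 'b': '01', 'a': '1'} 10
```

For "aaaabbc", the frequent "a" gets a 1-bit code and the whole text takes 10 bits, compared with 14 bits for a fixed 2-bit code. The same weighted-path idea is why word2vec's hierarchical softmax uses a Huffman tree: frequent words get short paths.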

- Notes on Spring Annotations

yijiesuifeng

spring, annotations

Registering beans with the Spring container using annotations.

You need to register the following in applicationContext.xml:

<context:component-scan base-package="package1[,package2,…,packageN]"/>

For example, specify a single package in base-package:

<context:component-sc

- Sensors

百合不是茶

android, sensors

Android sensors are mainly used to collect data, and that data is then used to trigger certain events.

Below, we use the gravity sensor as an example.

1. Get the sensor service in onCreate

private SensorManager sm; // the system sensor service

private Sensor sensor; // the sensor instance

@Override

protected void

- [Optics, Magnetism, and Detection] The Meaning of the Gold-Threaded Jade Burial Suit

comsci

This is a secret of the ancients, and now I'm sharing it with everyone:

Believe it or not:

A person wearing a gold-threaded jade suit, if in an out-of-body state, can fly into space to look at the stars

This is why in ancient times

- A Compact Way to Print a Number in Reverse

沐刃青蛟

printing

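I used to see programming exercises that asked you to print a number in reverse.

For example: input 123, output 321.

As I recall, those exercises told you how many digits the number had, and even so I was scratching my head with no idea where to start.

I believe they were eventually solved with % and /.

Now I've come up with a short method that reverses a number with any number of digits (although a 0 in the first or last position does not get printed).

The code is as follows: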

long num, num1=0;
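The % and / approach mentioned above is sketched here in Python; the original snippet is Java/C-style and cut off. The sketch assumes a non-negative integer. A string-based variant is included to show how it keeps the zeros that the arithmetic version loses. Both function names are illustrative.

```python
def reverse_digits(num):
    """Reverse a non-negative integer arithmetically with % and //."""
    reversed_num = 0
    while num > 0:
        reversed_num = reversed_num * 10 + num % 10  # append the last digit
        num //= 10                                   # drop the last digit
    return reversed_num

def reverse_digits_str(num):
    """Reverse the decimal text instead, keeping zeros the arithmetic version drops."""
    return str(num)[::-1]

print(reverse_digits(123))       # 321
print(reverse_digits(1200))      # 21 (the input's trailing zeros are lost)
print(reverse_digits_str(1200))  # 0021
```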

- PHP: 6 Ways to Get a File's Extension

IT独行者

PHP, file extension

PHP: 6 ways to get a file's extension

1. Using string search and substring

$extension = substr(strrchr($file, '.'), 1);

2. Using string search and substring, method 2

$extension = substr
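For comparison, here is the same task in Python. `ext_like_strrchr` mirrors method 1 by returning the text after the last dot. Returning an empty string when there is no dot simplifies PHP's behavior, where strrchr returns false. PHP also has a built-in alternative, pathinfo($file, PATHINFO_EXTENSION). The function names here are illustrative.

```python
import os
from pathlib import PurePath

def ext_like_strrchr(filename):
    """Mirror substr(strrchr($file, '.'), 1): text after the last dot, or '' if there is no dot."""
    dot = filename.rfind(".")
    return filename[dot + 1:] if dot != -1 else ""

def ext_with_splitext(filename):
    """Standard-library version; splitext treats a leading dot (as in '.bashrc') as part of the name."""
    return os.path.splitext(filename)[1].lstrip(".")

for name in ["photo.jpg", "archive.tar.gz", "README", ".bashrc"]:
    print(name, ext_like_strrchr(name), ext_with_splitext(name), PurePath(name).suffix)
```

The approaches disagree on dotfiles such as .bashrc. The strrchr-style version returns "bashrc", while os.path.splitext and PurePath.suffix report no extension. For archive.tar.gz, all of them return only the last suffix.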

- Interview Questions 111

文强chu

interviews

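1. What transaction isolation levels are there, and what are the properties of a transaction? (asked once)

2. How does Spring AOP manage transactions, and how is that implemented? How are dynamic proxies implemented, and how does the JDK implement them? How is IoC implemented? Are Spring beans singletons or prototypes? What are the ways to initialize a bean, and how do they differ? (asked often)

3. Which interceptors does Struts provide by default? (asked once)

4. The difference between filters and interceptors (also asked fairly often)

5. final, finally, final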

- Four Ways to Parse XML

小桔子

dom, jdom, dom4j, sax

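In everyday work you inevitably run into XML as a data storage format. With so many solutions available, which one suits us best? In this article I give a partial evaluation of the four mainstream approaches. I test only XML traversal, because traversal is what gets used most in practice (at least in my view). Preparation. Test environment: AMD Duron 1.4 GHz overclocked to 1.5 GHz, 256 MB DDR333, Windows 2000 Server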
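JDOM and DOM4J are Java libraries, but the basic trade-off behind the two standard models can be sketched with Python's standard library. DOM builds a whole in-memory tree, while SAX reacts to events as the parser streams through the input. The sample XML and the handler class are made up for illustration.

```python
import xml.dom.minidom
import xml.sax

XML_TEXT = "<books><book>A</book><book>B</book><book>C</book></books>"

def titles_with_dom(text):
    """DOM: parse the whole document into an in-memory tree, then traverse it."""
    doc = xml.dom.minidom.parseString(text)
    return [node.firstChild.data for node in doc.getElementsByTagName("book")]

class BookHandler(xml.sax.ContentHandler):
    """SAX: receive start/characters/end events while the parser streams through the input."""

    def __init__(self):
        super().__init__()
        self.titles = []
        self._in_book = False
        self._buffer = []

    def startElement(self, name, attrs):
        if name == "book":
            self._in_book = True
            self._buffer = []

    def characters(self, content):
        if self._in_book:
            self._buffer.append(content)  # text may arrive in several chunks

    def endElement(self, name):
        if name == "book":
            self.titles.append("".join(self._buffer))
            self._in_book = False

def titles_with_sax(text):
    handler = BookHandler()
    xml.sax.parseString(text.encode("utf-8"), handler)
    return handler.titles

print(titles_with_dom(XML_TEXT), titles_with_sax(XML_TEXT))
```

DOM is convenient for random access but holds the entire document in memory. SAX uses little memory but makes you track state yourself, as the `_in_book` flag shows.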

- Common Operations in WordPress

aichenglong

Chinese usernames, wordpress, removing menus

1. How to allow registration with Chinese usernames in WordPress

1) Use a plugin

2) Modify the WordPress source code

Open the file wp-includes/formatting.php and find

function sanitize_user( $username, $strict = false

- Xiaofeifei Learns Management, Part 1

alafqq

management

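The afternoon questions on the project management exam are really about raising problems (finding faults), analyzing them, and solving them.

Today I took a quick look at the first question from the first half of 2010. It is mainly about promoting and developing project managers.

Here are my thoughts, based on my own experience.

What is wrong with the company's system for selecting and developing project managers?

1. When evaluating and selecting project managers, the company looks only at technical ability and pays little or no attention to management experience and ability.

2. The company does not give project managers the training they need in project management knowledge and skills.

3. The company lacks guid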

- A Discussion of IO (Input/Output)

百合不是茶

IO

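// File handling: file input/output requires importing the java.io package;

/*

1. Use the File class to work with file directories and attributes

2. Understand streams and the concepts of input and output streams

3. Use byte/character streams to read and write files

4. Learn about standard I/O

5. Learn about object serialization

*/

// 1. Use the File class to work with file directories and attributes

// First create a text.txt file in the project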

- Using getElementById

bijian1013

element

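getElementById looks up an HTML element by its id. You can then get or set the element's attributes and call its events and methods. With this method you can control essentially every tag on the page; the only requirement is to give each tag an id.

It returns a reference to the first object with the specified id attribute value.

Syntax: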


- Classic Motivational Quotes

bijian1013

motivation, life

Classic quote 1:

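There is a famous Harvard saying: what separates people is how they use their spare time, and a person's destiny is decided between 8 and 10 p.m. Set aside two hours every evening for reading, further study, thinking, or attending worthwhile lectures and discussions, and you will find your life starting to change. Keep it up for a few years and success will come knocking. Don't spend every day glued to QQ/MSN/games/movies/soap operas... pushing on until midnight without wanting to rest. If you watch something, watch inspiring films or read inspiring articles, and don't treat them as mere entertainment. Learn to reflect on life and to appreciate life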

- [MongoDB Study Notes 3] MongoDB Sharding

bit1129

mongodb

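MongoDB replica sets address data backup and reliability, and can also improve read performance because reads can be spread across secondaries. MongoDB sharding addresses scaling out data capacity. As a distributed database for the cloud computing era, MongoDB's key attributes are large-capacity storage, efficient concurrent data access, and automatic fault tolerance.

This post introduces MongoDB sharding.

1. When do you need sharding?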


- [Spark 83] Where BlockManager Is Used in Spark

bit1129

manager

1. Storing broadcast variables, as the HttpBroadcast class shows

2. RDDs store their data through the CacheManager, which in turn stores it through the BlockManager

3. The result data produced by a ShuffleMapTask is managed by the FileShuffleBlockManager, which ultimately also uses the BlockMan

- Deploying Zabbix with yum

ronin47

deploying zabbix with yum

Install the network yum repository (as root): rpm -ivh http://repo.zabbix.com/zabbix/2.4/rhel/6/x86_64/zabbix-release-2.4-1.el6.noarch.rpm Then use yum to install MySQL, the Zabbix components that use it, and the agent: yum install zabbix-server-mysql zabbix-web-mysql mysql-

- Fixing Automatic Table Creation Failures with Hibernate 4 and MySQL 5.5

byalias

J2EE, Hibernate4

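Today I started learning Hibernate 4 and worked through the process of using it. It breaks down into roughly four steps:

① Create the hibernate.cfg.xml file

② Create the persistent objects

③ Create the *.hbm.xml mapping files

④ Write the corresponding Hibernate code

In step four I ran a unit test. The expected result was that Hibernate would automatically create the table in the database. The JUnit test passed, and the console printed the SQL statement for creating the table, but in the database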

- Learning Netty Source Code: FrameDecoder

bylijinnan

java, netty

I had a few questions about the FrameDecoder example in the Netty 3.x user guide:

1. The documentation says: "FrameDecoder calls decode method with an internally maintained cumulative buffer whenever new data is received."

Why is the decode method called every time new data arrives?

2. Dec
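Here is why decode runs on every read. TCP delivers a byte stream, so a single read may carry half a message or several messages at once. The decoder therefore appends each chunk to one cumulative buffer and retries decoding until a complete frame is no longer available. The sketch below is a toy Python model of that idea, not Netty's code or API. The 4-byte length-prefix protocol and all names (`LengthFieldFrameDecoder`, `on_data_received`, `encode`) are hypothetical. As I understand Netty 3's FrameDecoder, returning null from decode plays the role that `None` plays here: "not enough bytes yet".

```python
import struct

class LengthFieldFrameDecoder:
    """Toy decoder in the spirit of a cumulative-buffer FrameDecoder (hypothetical, not Netty's API).
    Assumed protocol: a 4-byte big-endian length followed by that many payload bytes."""

    HEADER = 4

    def __init__(self):
        self.buffer = bytearray()  # the cumulative buffer shared across reads

    def decode(self):
        """Return one complete payload, or None if more bytes are needed."""
        if len(self.buffer) < self.HEADER:
            return None
        (length,) = struct.unpack(">I", self.buffer[:self.HEADER])
        if len(self.buffer) < self.HEADER + length:
            return None  # frame split across reads: wait for more data
        frame = bytes(self.buffer[self.HEADER:self.HEADER + length])
        del self.buffer[:self.HEADER + length]  # consume the decoded frame
        return frame

    def on_data_received(self, chunk):
        """Called for every read; a chunk may hold part of a frame or several frames."""
        self.buffer.extend(chunk)
        frames = []
        while (frame := self.decode()) is not None:
            frames.append(frame)
        return frames

def encode(payload):
    return struct.pack(">I", len(payload)) + payload

decoder = LengthFieldFrameDecoder()
stream = encode(b"hello") + encode(b"netty")    # 18 bytes in total
print(decoder.on_data_received(stream[:3]))     # []  (not even a full header yet)
print(decoder.on_data_received(stream[3:12]))   # [b'hello']
print(decoder.on_data_received(stream[12:]))    # [b'netty']
```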

- Converting Rows to Columns in SQL

chicony

row-to-column conversion

create table tb(终端名称 varchar(10) , CEI分值 varchar(10) , 终端数量 int)

insert into tb values('三星' , '0-5' , 74)

insert into tb values('三星' , '10-15' , 83)

insert into tb values('苹果' , '0-5' , 93)
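The usual way to turn the score-band rows into columns is conditional aggregation: one CASE WHEN expression per target column. The sketch below runs this in Python's built-in sqlite3, using only the three rows shown above because the rest of the original data is cut off. Identifiers are double-quoted for safety. SQL Server also offers a PIVOT operator, but the CASE WHEN form works across most databases.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute('create table tb("终端名称" varchar(10), "CEI分值" varchar(10), "终端数量" int)')
conn.executemany(
    "insert into tb values (?, ?, ?)",
    [("三星", "0-5", 74), ("三星", "10-15", 83), ("苹果", "0-5", 93)],
)

# Static pivot: one output column per known CEI score band, filled by conditional aggregation
rows = conn.execute('''
    select "终端名称",
           sum(case when "CEI分值" = '0-5'   then "终端数量" else 0 end) as "0-5",
           sum(case when "CEI分值" = '10-15' then "终端数量" else 0 end) as "10-15"
    from tb
    group by "终端名称"
    order by "终端名称"
''').fetchall()
for row in rows:
    print(row)
```

The target columns must be known in advance. If the set of score bands changes, the query usually has to be generated dynamically.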

- Chinese Encoding Test

ctrain

encoding

Loop over the encodings and print the result of converting between each pair:

String[] codes = {

"iso-8859-1",

"utf-8",

"gbk",

"unicode"

};

for (int i = 0; i < codes.length; i++) {

for (int j
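The Java loop above is cut off, but the idea of converting between every pair of charsets can be sketched in Python. This rests on a few assumptions. Java's "unicode" charset is approximated here with Python's "utf-16" codec; both emit a byte-order mark, though the byte order may differ. The "replace" error handlers stand in for Java's default substitution behavior. The helper `convert` is hypothetical.

```python
text = "中文"
codes = ["iso-8859-1", "utf-8", "gbk", "utf-16"]  # "utf-16" approximates Java's "unicode"

def convert(s, encode_with, decode_with):
    """Encode with one charset and decode with another, similar to Java's new String(s.getBytes(a), b)."""
    data = s.encode(encode_with, errors="replace")     # unencodable characters become '?'
    return data.decode(decode_with, errors="replace")  # invalid byte sequences become U+FFFD

for enc in codes:
    for dec in codes:
        print(f"{enc:>10} -> {dec:<10} {convert(text, enc, dec)!r}")
```

Only the diagonal (same charset on both sides) round-trips, and even then only when the charset can represent the text. ISO-8859-1 has no Chinese characters, so "中文" becomes "??" before decoding ever happens.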

- Fixing a Heap Out-of-Memory Error in Hive Client Queries

daizj

hive, heap out of memory

hive> select * from t_test where ds=20150323 limit 2;

OK

Exception in thread "main" java.lang.OutOfMemoryError: Java heap space

Cause: the Hive heap size defaults to 256 MB.

The fix for this problem is:

Edit /us

- The Lazier You Are, the More Free Time You Have (A Review of *The Productive Programmer*)

dcj3sjt126com

programmers

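*The Productive Programmer* made a big impression on me. Programmers are a special group: some are lazy enough to hand everything to machines, while others diligently grind through the same repetitive, monotonous work every day.

Before reading this book I was one of the diligent ones; after reading it, I'm going to work at becoming one of the lazy ones.

Stop digging item by item through the huge Start menu for your applications, and stop cluttering your desktop with a dazzling array of shortcut icons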

- Simple, Useful Eclipse Settings

dcj3sjt126com

eclipse

1. Show line numbers: Window -> Preferences -> General -> Editors -> Text Editors -> Show line numbers

2. Code-completion trigger characters: Window -> Preferences, then expand Java -> Editor -> Content Assist; the bottom section is auto-Activation

- The Complete Process of Installing Solr 4.8.0 on Tomcat

eksliang

Solr, installing Solr versions after 4.0, Solr 4.8.0 installation

If you repost this, please credit the source:

http://eksliang.iteye.com/blog/2096478

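First, Solr is a Java-based web application, so you must install the JDK and Tomcat before installing Solr. I'll skip the Tomcat and JDK installation here.

Step 1: download the latest Solr version from the official website. Download address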

- Generic Denial-of-Service in Android Apps: Vulnerability Analysis Report

gg163

vulnerability, android, APP, analysis

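Editor's note: For a while, many security response center (SRC) platforms were flooded with reports of local denial-of-service vulnerabilities in apps. The mobile security team 爱内测 (ineice.com) found a generic denial-of-service vulnerability in Android clients; here is their detailed analysis.

While 0xr0ot and Xbalien were comparing every exception type that can make an app deny service, they discovered a generic local denial-of-service vulnerability. Because it is generic, this local DoS can affect a large number of apps.

Denial of service caused by serialized objects mainly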

- The HoverTree Project Now Has a Layered Architecture

hvt

programming, .net, Web, C#, ASP.NET

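The HoverTree project now has an initial layered architecture. The source code has been uploaded to http://hovertree.codeplex.com; see SOURCE CODE there. It was tested successfully on a local SQL Server 2008 database. For the database and table definitions, see: http://keleyi.com/a/bjae/ue6stb42.htm HoverTree is an open-source ASP.NET project, and I hope it helps you learn ASP.NET or C#. If you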

- Google Maps API v3: Remove Markers

天梯梦

google maps api

Simply do the following:

I. Declare a global variable:

var markersArray = [];

II. Define a function:

function clearOverlays() {

for (var i = 0; i < markersArray.length; i++ )

- A Summary of jQuery Selectors

lq38366

jquery, selectors


- Basic Data Structures and Algorithms 6: Quick Sort

sunwinner

Algorithm, Quicksort

Quick sort is probably used more widely than any other. It is popular because it is not difficult to implement, works well for a variety of different kinds of input data, and is substantially faster t
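A compact Python sketch of the idea: shuffle once, then recursively partition around a pivot. It uses the Lomuto partition scheme for brevity. That choice is mine and not necessarily the article's, and the function names are illustrative.

```python
import random

def quicksort(a):
    """Sort list a in place: shuffle once, then recursively partition around a pivot."""
    random.shuffle(a)  # makes the O(n^2) worst case on already-sorted input very unlikely
    _sort(a, 0, len(a) - 1)

def _sort(a, lo, hi):
    if lo >= hi:
        return
    p = _partition(a, lo, hi)
    _sort(a, lo, p - 1)  # everything left of p is < a[p]
    _sort(a, p + 1, hi)  # everything right of p is >= a[p]

def _partition(a, lo, hi):
    """Lomuto partition with a[hi] as the pivot; returns the pivot's final index."""
    pivot = a[hi]
    i = lo
    for j in range(lo, hi):
        if a[j] < pivot:
            a[i], a[j] = a[j], a[i]
            i += 1
    a[i], a[hi] = a[hi], a[i]
    return i

data = [5, 3, 8, 1, 9, 2, 7]
quicksort(data)
print(data)  # [1, 2, 3, 5, 7, 8, 9]
```

Lomuto partitioning degrades toward quadratic time, with correspondingly deep recursion, when the input has many equal keys. Three-way partitioning is the usual remedy.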

- How to Keep Flash from Covering HTML div Elements (HTML/XHTML, Web Design)

刘星宇

html, Web

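Today I was writing the code for a Flash ad. Links inside a Flash movie are easily mistaken for pop-up ads, so I placed a div layer on top of the Flash; that way every link is triggered by an a element and won't be blocked. But the Flash kept rendering above the div layer. It turns out Flash needs an extra parameter.

To put Flash below a div layer, so that it doesn't cover floating layers or drop-down menus, the key parameter is wmode=opaque.

Here's how: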

- A Collection of Practical MyBatis Mapper SQL Examples

wdmcygah

sql, mysql, mybatis, practical

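MyBatis is a very handy persistence framework, but material about it is pitifully scarce; fortunately, the official documentation is fairly detailed. This post lists scenarios I consider common, along with the matching Mapper SQL, in the hope that it helps.

Many persistence frameworks have weak support for dynamic SQL, which makes life painful whenever SQL has to be assembled at run time. MyBatis handles this well, and it is one of the framework's highlights. For common scenarios such as batch insert/update/delete, fuzzy queries, multi-condition queries, and join queries,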