Andrew Ng Deep Learning 4 - Week 4 Assignment 1 - Face Recognition

1. Deeplearning-assignment

In this section, we will learn how to build a face recognition system.

Face recognition problems fall into two main categories:

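- Face verification: for example, at some airports a system scans your passport and checks that you are really its holder, then either lets you through security or turns you away.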

- Face recognition: for example, a system at a company entrance scans your face to determine who you are.

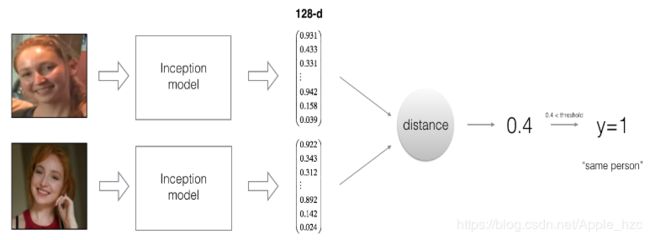

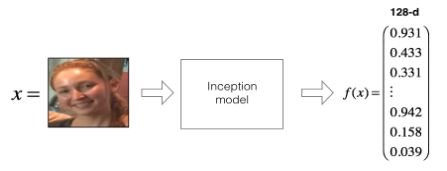

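FaceNet learns a neural network that encodes a face image as a vector of 128 numbers. By comparing two such vectors, you can decide whether two images show the same person.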

In this assignment, you will:

- Implement the triplet loss function.

- Use a pretrained model to encode face images into 128-dimensional vectors.

- Use these encodings to perform face verification and face recognition.

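Face verification: given two images, decide whether they show the same person. The simplest approach is to compare the two images pixel by pixel: if the distance between the raw images is below some threshold, they are taken to be the same person.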

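This clearly works poorly, because the pixel values of photos of the same person vary widely with lighting, head orientation, and so on. Instead, you will learn an encoding function f(img) and compare the encodings of the two images rather than their raw pixels.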
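To make the contrast concrete, here is a minimal NumPy sketch. It assumes images are float arrays of shape (3, 96, 96) with values in [0, 1], matching the channels-first input shape of the model in Section 2. The helpers `l2_distance` and `is_same_person` and the toy vectors are hypothetical; the 0.7 threshold is the one the assignment's `verify` function uses.

```python
import numpy as np


def l2_distance(a, b):
    """Euclidean (L2) distance between two arrays of the same shape."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    # With ord=None and axis=None, norm flattens the input and returns its 2-norm
    return float(np.linalg.norm(a - b))


def is_same_person(enc_a, enc_b, threshold=0.7):
    """Verification on encodings (hypothetical helper): same person if distance < threshold."""
    return l2_distance(enc_a, enc_b) < threshold


# Naive pixel comparison: the "same" photo, just 0.1 brighter everywhere
img = np.full((3, 96, 96), 0.5)
brighter = img + 0.1
print(l2_distance(img, brighter))  # roughly 16.6, far above a threshold like 0.7

# Comparing two hypothetical 128-d encodings that differ in a single component by 0.3
enc = np.zeros(128)
enc_other = enc.copy()
enc_other[1] = 0.3
print(is_same_person(enc, enc_other))  # True: distance 0.3 < 0.7
```

A uniform brightness change alone drives the raw-pixel distance far above a small threshold, whereas a good encoding f(img) is meant to map both photos to nearly the same 128-d vector.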

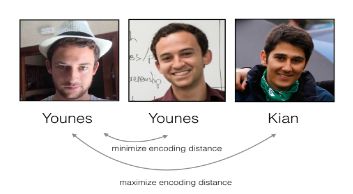

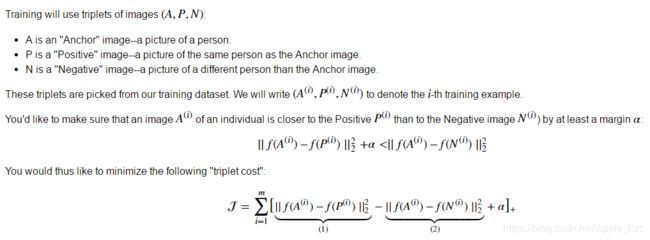

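The principle of the triplet loss: it "pushes" the encodings of different images of the same person closer together, while "pulling" the encodings of images of different people further apart.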
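Written as a formula, for a batch of m triplets (anchor A, positive P of the same person, negative N of a different person) and a margin alpha, the loss sums max(||f(A) - f(P)||^2 - ||f(A) - f(N)||^2 + alpha, 0) over the triplets. The following is a minimal NumPy sketch of that formula, assuming the encodings are already computed as (m, d) arrays; `triplet_loss_np` is a hypothetical name, and alpha = 0.2 matches the default in the assignment's TensorFlow code.

```python
import numpy as np


def triplet_loss_np(anchor, positive, negative, alpha=0.2):
    """Triplet loss summed over a batch (hypothetical NumPy helper).

    anchor, positive, negative: arrays of shape (m, d), one row per triplet.
    J = sum_i max(||A_i - P_i||^2 - ||A_i - N_i||^2 + alpha, 0)
    """
    anchor, positive, negative = (
        np.asarray(x, dtype=np.float64) for x in (anchor, positive, negative)
    )
    pos_dist = np.sum(np.square(anchor - positive), axis=-1)  # squared A-P distance per triplet
    neg_dist = np.sum(np.square(anchor - negative), axis=-1)  # squared A-N distance per triplet
    basic_loss = pos_dist - neg_dist + alpha
    # Triplets whose negative is already farther than the positive by at least alpha contribute 0
    return float(np.sum(np.maximum(basic_loss, 0.0)))


# Triplet 1: positive coincides with the anchor, negative is far away -> contributes 0
# Triplet 2: positive is far, negative coincides with the anchor -> contributes 1 + 0.2
A = np.array([[0.0, 0.0], [0.0, 0.0]])
P = np.array([[0.0, 0.0], [1.0, 0.0]])
N = np.array([[1.0, 0.0], [0.0, 0.0]])
print(triplet_loss_np(A, P, N))  # 1.2
```

Summing per-triplet distances with `axis=-1` before the `max` is what keeps each triplet's hinge separate; summing over the whole batch first would let one well-separated triplet mask a badly separated one.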


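Face recognition: first precompute the encodings of the faces in the database, then compute the encoding of the face image to be identified and compare it with each database encoding in turn. Find the database encoding with the smallest distance; if that distance is below a threshold, the face belongs to the corresponding person in the database. Otherwise, the person is not in the database.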
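The 1:K search just described amounts to a nearest-neighbour lookup with a rejection threshold. Here is a minimal NumPy sketch, assuming the database is a dict from name to a precomputed encoding; `recognize`, `toy_db`, and the names in it are hypothetical, the 2-D vectors stand in for real 128-d encodings, and 0.7 is the threshold used by the assignment's `who_is_it`.

```python
import numpy as np


def recognize(encoding, database, threshold=0.7):
    """Face recognition (1:K) by nearest neighbour over precomputed encodings.

    Returns (min_dist, name); name is None when even the closest match is
    farther than the threshold, i.e. the person is not in the database.
    """
    encoding = np.asarray(encoding, dtype=np.float64)
    min_dist, identity = float("inf"), None
    for name, db_enc in database.items():
        dist = float(np.linalg.norm(encoding - np.asarray(db_enc, dtype=np.float64)))
        if dist < min_dist:
            min_dist, identity = dist, name
    if min_dist > threshold:
        return min_dist, None
    return min_dist, identity


# Toy 2-D "encodings" standing in for real 128-d encodings
toy_db = {"alice": np.array([0.0, 0.0]), "bob": np.array([1.0, 0.0])}
print(recognize(np.array([0.9, 0.1]), toy_db))  # closest entry is "bob"
print(recognize(np.array([5.0, 5.0]), toy_db))  # too far from everyone, name is None
```

Starting `min_dist` at infinity and `identity` at `None` means an empty database also comes back as "not in the database" instead of raising an error.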


2. Algorithm Code

```python
import os
import sys

# Set before TensorFlow is imported so that its C++ info/warning logs are suppressed
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

# Import paths follow the standalone Keras 2.x / TensorFlow 1.x environment used by the course
from keras.models import Sequential
from keras.layers import Conv2D, ZeroPadding2D, Activation, Input, concatenate
from keras.models import Model
from keras.layers.normalization import BatchNormalization
from keras.layers.pooling import MaxPooling2D, AveragePooling2D
from keras.layers.merge import Concatenate
from keras.layers.core import Lambda, Flatten, Dense
from keras.initializers import glorot_uniform
from keras.engine.topology import Layer
from keras import backend as K

K.set_image_data_format('channels_first')

import cv2
import numpy as np
from numpy import genfromtxt
import pandas as pd
import tensorflow as tf

from fr_utils import *
from inception_blocks_v2 import *

# Print whole arrays; newer NumPy versions reject threshold=np.nan
np.set_printoptions(threshold=sys.maxsize)

# faceRecoModel maps a 3x96x96 (channels-first) face image to a 128-d encoding
FRmodel = faceRecoModel(input_shape=(3, 96, 96))
# print("Total Params:", FRmodel.count_params())


def triplet_loss(y_true, y_pred, alpha=0.2):
    """Triplet loss.

    y_true is unused but required by the Keras loss signature.
    y_pred holds the (anchor, positive, negative) encodings, each of shape (m, 128).
    """
    anchor, positive, negative = y_pred[0], y_pred[1], y_pred[2]
    # Squared L2 distances, one per triplet (axis=-1 sums over the encoding dimensions only)
    pos_dist = tf.reduce_sum(tf.square(tf.subtract(anchor, positive)), axis=-1)
    neg_dist = tf.reduce_sum(tf.square(tf.subtract(anchor, negative)), axis=-1)
    # pos_dist - neg_dist + alpha, clipped at 0 per triplet, then summed over the batch
    basic_loss = tf.add(tf.subtract(pos_dist, neg_dist), alpha)
    loss = tf.reduce_sum(tf.maximum(basic_loss, 0.0))
    return loss


# Sanity check for triplet_loss (TensorFlow 1.x Session API):
# with tf.Session() as test:
#     tf.set_random_seed(1)
#     y_true = (None, None, None)
#     y_pred = (tf.random_normal([3, 128], mean=6, stddev=0.1, seed=1),
#               tf.random_normal([3, 128], mean=1, stddev=1, seed=1),
#               tf.random_normal([3, 128], mean=3, stddev=4, seed=1))
#     loss = triplet_loss(y_true, y_pred)
#     print("loss = " + str(loss.eval()))

FRmodel.compile(optimizer='adam', loss=triplet_loss, metrics=['accuracy'])
load_weights_from_FaceNet(FRmodel)

# Precompute the encoding of every person in the database
IMAGE_DIR = "e:/code/Python/DeepLearning/Convolution model/week4/Face Recognition/images"
database_files = {
    "danielle": "danielle.png",
    "younes": "younes.jpg",
    "tian": "tian.jpg",
    "andrew": "andrew.jpg",
    "kian": "kian.jpg",
    "dan": "dan.jpg",
    "sebastiano": "sebastiano.jpg",
    "bertrand": "bertrand.jpg",
    "kevin": "kevin.jpg",
    "felix": "felix.jpg",
    "benoit": "benoit.jpg",
    "arnaud": "arnaud.jpg",
}
database = {}
for name, filename in database_files.items():
    database[name] = img_to_encoding(os.path.join(IMAGE_DIR, filename), FRmodel)


def verify(image_path, identity, database, model):
    """Face verification (1:1): is the person in image_path really `identity`?"""
    encoding = img_to_encoding(image_path, model)
    dist = np.linalg.norm(encoding - database[identity])
    if dist < 0.7:
        print("It's " + str(identity) + ", welcome home!")
        door_open = True
    else:
        print("It's not " + str(identity) + ", please go away")
        door_open = False
    return dist, door_open


# verify(os.path.join(IMAGE_DIR, "camera_0.jpg"), "younes", database, FRmodel)
# verify(os.path.join(IMAGE_DIR, "camera_2.jpg"), "kian", database, FRmodel)


def who_is_it(image_path, database, model):
    """Face recognition (1:K): find the database entry closest to the face in image_path."""
    encoding = img_to_encoding(image_path, model)
    min_dist = 100
    identity = None  # stays None if no database entry is closer than min_dist
    for (name, db_enc) in database.items():
        dist = np.linalg.norm(encoding - db_enc)
        if dist < min_dist:
            min_dist = dist
            identity = name
    if min_dist > 0.7:
        print("Not in the database.")
    else:
        print("It's " + str(identity) + ", the distance is " + str(min_dist))
    return min_dist, identity


who_is_it(os.path.join(IMAGE_DIR, "camera_0.jpg"), database, FRmodel)
```

3. Summary

- Face verification solves a 1:1 matching problem, whereas face recognition solves a 1:K matching problem.

- The triplet loss is an effective way to train a neural network to learn encodings of face images.

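- The same encoding can be used for both face verification and face recognition: the distance between the encodings of two images tells you whether they show the same person.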