Building the Latest ELK (6.3.1) on CentOS 6.5 with Filebeat

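ELK: Principles and Introduction

Why use ELK:

For everyday log analysis, running grep and awk directly against the log files is usually enough to find what you need. At larger scale this approach becomes inefficient: how do you archive logs when the volume is too large, what do you do when text search is too slow, and how do you query along multiple dimensions? What is needed is centralized log management that collects and aggregates the logs from every server. The common solution is to build a centralized log collection system that gathers, manages, and provides access to the logs of all nodes in one place.

A large system is usually deployed as a distributed architecture, with different service modules running on different servers. When a problem occurs, you usually have to use the key information it exposes to locate the specific server and service module. A centralized logging system makes locating problems much more efficient.

A complete centralized logging system needs the following main capabilities:

1. Collection: can gather log data from many sources

2. Transport: can reliably ship log data to the central system

3. Storage: how the log data is stored

4. Analysis: supports analysis through a UI

5. Alerting: provides error reporting and monitoring mechanisms

ELK provides a complete solution. Its components are all open source, work together smoothly, and efficiently cover many use cases. It is currently one of the mainstream logging systems.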

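About ELK:

ELK is an acronym for three open-source projects: Elasticsearch, Logstash, and Kibana. A newer addition is Filebeat, a lightweight log collection agent. Filebeat uses few resources, which makes it well suited to collecting logs on each server and shipping them to Logstash; it is also the tool officially recommended for this.

Elasticsearch is an open-source distributed search engine that provides three main functions: collecting, analyzing, and storing data. Its features include distributed operation, zero configuration, automatic discovery, automatic index sharding, index replicas, a RESTful interface, multiple data sources, and automatic search load balancing.

Logstash is a tool for collecting, parsing, and filtering logs, and it supports a large number of input methods. It typically runs in a client/server architecture: the client is installed on each host whose logs need to be collected, and the server filters and modifies the logs received from the nodes and then forwards them to Elasticsearch.

Kibana is also a free, open-source tool. It provides a friendly web interface for analyzing the logs handled by Logstash and Elasticsearch, and helps you aggregate, analyze, and search important log data.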

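Filebeat belongs to the Beats family. The Beats tools include:

1. Packetbeat (collects network traffic data)

2. Topbeat (collects CPU and memory usage data at the system, process, and filesystem level; replaced by Metricbeat since Beats 5.0)

3. Filebeat (collects file data)

4. Winlogbeat (collects Windows event log data)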

Official documentation:

Filebeat:

https://www.elastic.co/cn/products/beats/filebeat

https://www.elastic.co/guide/en/beats/filebeat/5.6/index.html

Logstash:

https://www.elastic.co/cn/products/logstash

https://www.elastic.co/guide/en/logstash/5.6/index.html

Kibana:

https://www.elastic.co/cn/products/kibana

https://www.elastic.co/guide/en/kibana/5.5/index.html

Elasticsearch:

https://www.elastic.co/cn/products/elasticsearch

https://www.elastic.co/guide/en/elasticsearch/reference/5.6/index.html

Elasticsearch Chinese community:

https://elasticsearch.cn/

Lab environment:

| Operating system | IP address | Hostname | Packages |
| --- | --- | --- | --- |
| CentOS6.5-x86_64 | 192.168.8.100 | Nginx+Filebeat | nginx-1.13.12.tar.gz filebeat-6.3.1-linux-x86_64.tar.gz |
| CentOS6.5-x86_64 | 192.168.8.101 | Apache+Filebeat | httpd-2.4.33.tar.gz filebeat-6.3.1-linux-x86_64.tar.gz |
| CentOS6.5-x86_64 | 192.168.8.102 | Elasticserch | elasticsearch-6.3.1.tar.gz |
| CentOS6.5-x86_64 | 192.168.8.103 | Logstash | logstash-6.3.1.tar.gz |
| CentOS6.5-x86_64 | 192.168.8.104 | Kibana | kibana-6.3.1-linux-x86_64.tar.gz |

Deploying ELK:

1. Set up the Nginx client (installing with yum is recommended for testing; I used a yum-installed copy when testing Kibana)

[root@nginx ~]# hostname

nginx

[root@nginx ~]# cat /etc/redhat-release

CentOS release 6.5 (Final)

[root@nginx ~]# uname -r

2.6.32-431.el6.x86_64

[root@nginx ~]# /etc/init.d/iptables stop

iptables: Setting chains to policy ACCEPT: filter [  OK  ]

iptables: Flushing firewall rules: [  OK  ]

iptables: Unloading modules: [  OK  ]

[root@nginx ~]# setenforce 0

setenforce: SELinux is disabled

[root@nginx ~]# chkconfig iptables off

[root@nginx ~]# tar xf nginx-1.13.12.tar.gz -C /usr/src/

2. Deploy the Apache client (installing with yum is recommended for testing; I used a yum-installed copy when testing Kibana)

[root@Apache ~]# hostname

Apache

[root@Apache ~]# cat /etc/redhat-release

CentOS release 6.5 (Final)

[root@Apache ~]# uname -r

2.6.32-431.el6.x86_64

[root@Apache ~]# /etc/init.d/iptables stop

[root@Apache ~]# setenforce 0

[root@Apache ~]# tar xf apr-1.5.1.tar.gz -C /usr/src/

[root@Apache ~]# tar xf apr-util-1.5.1.tar.gz -C /usr/src/

[root@Apache ~]# tar xf httpd-2.4.33.tar.gz -C /usr/src/

[root@Apache ~]# cd /usr/src/apr-1.5.1/

[root@Apache apr-1.5.1]# ./configure --prefix=/usr/local/apr && make && make install

[root@Apache apr-1.5.1]# cd ../apr-util-1.5.1/

[root@Apache apr-util-1.5.1]# ./configure --prefix=/usr/local/apr-util --with-apr=/usr/local/apr/ && make && make install

[root@Apache apr-util-1.5.1]# cd ../httpd-2.4.33/

[root@Apache httpd-2.4.33]# ./configure --prefix=/usr/local/httpd --enable-so --enable-rewrite --enable-headers --enable-charset-lite --enable-cgi --with-apr=/usr/local/apr/ --with-apr-util=/usr/local/apr-util/ && make && make install

[root@Apache httpd-2.4.33]# ln -s /usr/local/httpd/bin/* /usr/local/sbin/

[root@Apache httpd-2.4.33]# apachectl start

AH00557: httpd: apr_sockaddr_info_get() failed for Apache

AH00558: httpd: Could not reliably determine the server's fully qualified domain name, using 127.0.0.1. Set the 'ServerName' directive globally to suppress this message

[root@Apache httpd-2.4.33]# netstat -anpt|grep httpd

tcp 0 0 :::80 :::* LISTEN 34117/httpd

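3. Deploy Elasticsearch (Elasticsearch and Logstash both need a JDK; Kibana ships with its own Node.js runtime and does not)

1. Check the system environment: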

[root@Elasticserch ~]# hostname

Elasticserch

[root@Elasticserch ~]# cat /etc/redhat-release

CentOS release 6.5 (Final)

[root@Elasticserch ~]# uname -r

2.6.32-431.el6.x86_64

[root@Elasticserch ~]# /etc/init.d/iptables stop

[root@Elasticserch ~]# setenforce 0

2. Install the JDK (note: if an older JDK is already installed on the system, remove it and reinstall):

[root@Elasticserch ~]# tar xf jdk-8u161-linux-x64.tar.gz

[root@Elasticserch ~]# mv jdk1.8.0_161/ /usr/local/java

[root@Elasticserch ~]# vim /etc/profile.d/java.sh

export JAVA_HOME=/usr/local/java

export PATH=$PATH:$JAVA_HOME/bin

[root@Elasticserch ~]# source /etc/profile

[root@Elasticserch ~]# java -version

java version "1.8.0_161"

Java(TM) SE Runtime Environment (build 1.8.0_161-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.161-b12, mixed mode)

3. Install the Elasticsearch node:

[root@Elasticserch ~]# tar xf elasticsearch-6.3.1.tar.gz

[root@Elasticserch ~]# mv elasticsearch-6.3.1 /usr/local/elasticsearch

[root@Elasticserch ~]# cd /usr/local/elasticsearch/

[root@Elasticserch elasticsearch]# cd config/

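[root@Elasticserch config]# cp elasticsearch.yml{,.default} # back up the config file in case of a bad edit

4. Edit the configuration file:

[root@Elasticserch config]# vim elasticsearch.yml

cluster.name: my-es-cluster # cluster name

node.name: node-1 # node name

path.data: /usr/local/elasticsearch/data # data path

path.logs: /usr/local/elasticsearch/logs # log path

bootstrap.memory_lock: false # uncomment this line and change true to false; otherwise the service fails to start

bootstrap.system_call_filter: false # add this line; otherwise startup fails

Why these two lines are needed:

CentOS 6 does not support seccomp, while Elasticsearch 5.2.0 and later default bootstrap.system_call_filter to true and check for it at startup. The check fails, and that failure prevents Elasticsearch from starting.

network.host: 192.168.8.102 # address of the Elasticsearch host

http.port: 9200 # HTTP port

discovery.zen.ping.unicast.hosts: ["node-1"] # list of hosts a starting node contacts to discover the cluster

discovery.zen.minimum_master_nodes: 1 # minimum number of master-eligible nodes needed to elect a master (1 for this single node)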

[root@elasticsearch ~]# vim /etc/security/limits.d/90-nproc.conf

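* soft nproc 4096 # default is 1024; change it to 4096

[root@elasticsearch ~]# vim /etc/sysctl.conf

# append at the end of the file; otherwise the service reports an error

vm.max_map_count=655360

[root@elasticsearch ~]# sysctl -p # apply the settings above

5. Create the user that runs Elasticsearch: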

[root@Elasticserch config]# useradd elasticsearch

[root@Elasticserch config]# chown -R elasticsearch.elasticsearch /usr/local/elasticsearch/

6. Raise the file handle limits:

[root@Elasticserch config]# vim /etc/security/limits.conf

# add the following lines:

* soft nofile 65536

* hard nofile 65536

* soft nproc 65536

* hard nproc 65536
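
Before starting Elasticsearch it can help to confirm that the kernel and ulimit changes above have actually taken effect (limits.conf changes apply only to new login sessions). The following is a minimal sketch and not part of the original procedure: `check_min` and `preflight` are hypothetical helper names, and the thresholds (262144 for vm.max_map_count, 65536 open files, 4096 processes) are assumed here to be the minimums enforced by the Elasticsearch 6.x bootstrap checks, which the values configured above meet or exceed.

```shell
#!/usr/bin/env bash
# Preflight sketch: compare a limit with the minimum Elasticsearch expects.
# check_min and preflight are illustrative names, not part of Elasticsearch.

# check_min NAME ACTUAL REQUIRED
# Prints an OK/FAIL line; returns 0 when ACTUAL is "unlimited" or >= REQUIRED.
check_min() {
    if [ "$2" = "unlimited" ] || [ "$2" -ge "$3" ] 2>/dev/null; then
        echo "OK   $1 = $2 (>= $3)"
        return 0
    fi
    echo "FAIL $1 = ${2:-unknown} (need >= $3)"
    return 1
}

# preflight: read the live values on this host and check each one.
preflight() {
    rc=0
    check_min vm.max_map_count "$(cat /proc/sys/vm/max_map_count 2>/dev/null)" 262144 || rc=1
    check_min "open files (ulimit -n)" "$(ulimit -n)" 65536 || rc=1
    check_min "max user processes (ulimit -u)" "$(ulimit -u 2>/dev/null)" 4096 || rc=1
    return "$rc"
}

# Example with the value written to /etc/sysctl.conf above:
check_min vm.max_map_count 655360 262144
# prints: OK   vm.max_map_count = 655360 (>= 262144)
```

To check the real host, log in again as the elasticsearch user, load the functions, and run `preflight`; a FAIL line points at the setting that still needs attention.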

7. Switch to that user and start the service

[root@Elasticserch config]# su - elasticsearch

[elasticsearch@Elasticserch ~]$ cd /usr/local/elasticsearch/

[elasticsearch@elasticsearch elasticsearch]$ bin/elasticsearch &

Note: if startup fails, go back over the configuration steps above and check for settings that are wrong or missing.

8. Check that the service started successfully

[root@elasticsearch ~]# netstat -anpt

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 1018/rpcbind

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1152/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1228/master

tcp 0 0 0.0.0.0:3616 0.0.0.0:* LISTEN 1036/rpc.statd

tcp 0 0 192.168.8.102:22 192.168.8.254:64255 ESTABLISHED 1727/sshd

tcp 0 64 192.168.8.102:22 192.168.8.254:64756 ESTABLISHED 2617/sshd

tcp 0 0 :::34155 :::* LISTEN 1036/rpc.statd

tcp 0 0 :::111 :::* LISTEN 1018/rpcbind

tcp 0 0 :::9200 :::* LISTEN 2180/java

tcp 0 0 :::9300 :::* LISTEN 2180/java

tcp 0 0 :::22 :::* LISTEN 1152/sshd

tcp 0 0 ::1:25 :::* LISTEN 1228/master

9. A simple test

[root@elasticsearch ~]# curl http://192.168.8.102:9200

{

"name" : "node-1",

"cluster_name" : "my-es_cluster",

"cluster_uuid" : "3zTOiOpUSEC5Z4S-lo4TUA",

"version" : {

"number" : "6.3.1",

"build_flavor" : "default",

"build_type" : "tar",

"build_hash" : "eb782d0",

"build_date" : "2018-06-29T21:59:26.107521Z",

"build_snapshot" : false,

"lucene_version" : "7.3.1",

"minimum_wire_compatibility_version" : "5.6.0",

"minimum_index_compatibility_version" : "5.0.0"

},

"tagline" : "You Know, for Search"

}

Output like the above means Elasticsearch is configured and working.
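
The same check can be scripted, for example to confirm the node is up before starting Logstash or Kibana. A minimal sketch follows, assuming only curl and sed are available; `es_field` is a hypothetical helper that pulls one string field out of the JSON shown above with a regular expression, which is adequate for this simple response but is not a general JSON parser.

```shell
#!/usr/bin/env bash
# es_field JSON KEY
# Prints the first string value stored under "KEY" in the given JSON text.
es_field() {
    printf '%s\n' "$1" |
        sed -n 's/.*"'"$2"'"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' |
        head -n 1
}

# Sample shortened from the curl output above.
sample='{
  "name" : "node-1",
  "version" : {
    "number" : "6.3.1"
  },
  "tagline" : "You Know, for Search"
}'

echo "node:    $(es_field "$sample" name)"
echo "version: $(es_field "$sample" number)"

# Against the live node (address from elasticsearch.yml above):
#   es_field "$(curl -s http://192.168.8.102:9200)" number
```

For a broader view, `curl -s 'http://192.168.8.102:9200/_cluster/health?pretty'` reports the cluster status. On a single node the status usually turns yellow once indices with replicas exist, because the replica shards have no second node to be allocated to.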

4. Deploy the Logstash node

1. Check the system environment:

[root@Logstash ~]# hostname

Logstash

[root@Logstash ~]# cat /etc/redhat-release

CentOS release 6.5 (Final)

[root@Logstash ~]# uname -r

2.6.32-431.el6.x86_64

[root@Logstash ~]# /etc/init.d/iptables stop

iptables: Setting chains to policy ACCEPT: filter [  OK  ]

iptables: Flushing firewall rules: [  OK  ]

iptables: Unloading modules: [  OK  ]

[root@Logstash ~]# setenforce 0

setenforce: SELinux is disabled

2. Install Logstash. It listens for new content from the data source files, processes it, and uploads the result to Elasticsearch.

[root@Logstash ~]# tar xf logstash-6.3.1.tar.gz

[root@Logstash ~]# mv logstash-6.3.1 /usr/local/logstash

Modify its configuration files if necessary.

3. Create a pipeline configuration file for Logstash

[root@Logstash ~]# vim /usr/local/logstash/config/apache.conf

    input {
      beats {
        port => "5044"
      }
    }
    filter {
      grok {
        match => { "message" => "%{COMBINEDAPACHELOG}" }
      }
      date {
        match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ]
        target => "datetime"
      }
      geoip {
        source => "clientip"
      }
    }
    output {
      elasticsearch {
        hosts => "192.168.8.102:9200" # Elasticsearch host IP and port
        index => "access_log" # index name
      }
      stdout { codec => rubydebug }
    }
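
To make the filter stage more concrete: `%{COMBINEDAPACHELOG}` splits each access-log line into named fields such as clientip, timestamp, verb, request, response, and bytes, and the date filter then parses timestamp into datetime. The sketch below imitates only the field-splitting step with a simplified regular expression in sed. `COMBINED_RE` and `log_field` are hypothetical names, the pattern is an approximation rather than the actual grok definition, and the sample line is shortened from the log entry shown later in this article.

```shell
#!/usr/bin/env bash
# Simplified stand-in for the combined log format (NOT the real grok pattern).
# Groups: 1 clientip, 2 ident, 3 auth, 4 timestamp, 5 verb, 6 request,
#         7 httpversion, 8 response, 9 bytes
COMBINED_RE='^([^ ]+) ([^ ]+) ([^ ]+) \[([^]]+)] "([A-Z]+) ([^ ]+) HTTP/([0-9.]+)" ([0-9]+) ([0-9]+|-) .*$'

# log_field LINE N
# Prints capture group N of LINE, or nothing if the line does not match.
log_field() {
    printf '%s\n' "$1" | sed -n -E "s#${COMBINED_RE}#\\$2#p"
}

line='192.168.8.101 - - [23/Jul/2018:05:37:05 +0800] "GET / HTTP/1.1" 403 4961 "-" "curl/7.19.7"'

echo "clientip  = $(log_field "$line" 1)"
echo "timestamp = $(log_field "$line" 4)"
echo "verb      = $(log_field "$line" 5)"
echo "request   = $(log_field "$line" 6)"
echo "response  = $(log_field "$line" 8)"
echo "bytes     = $(log_field "$line" 9)"
```

A line that does not match prints nothing here; in Logstash the comparable outcome is an event tagged `_grokparsefailure`. The pipeline file itself can be syntax-checked before use with `bin/logstash -f config/apache.conf --config.test_and_exit`.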

4. Start Logstash

[root@Logstash ~]# cd /usr/local/logstash/

[root@Logstash logstash]# ls

bin config CONTRIBUTORS data Gemfile Gemfile.lock lib LICENSE.txt logs logstash-core logstash-core-plugin-api modules NOTICE.TXT tools vendor x-pack

[root@Logstash logstash]# ./bin/logstash -f config/apache.conf

[root@Logstash ~]# netstat -anpt

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 1019/rpcbind

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1153/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1229/master

tcp 0 0 0.0.0.0:47370 0.0.0.0:* LISTEN 1037/rpc.statd

tcp 0 0 192.168.8.103:22 192.168.8.254:49219 ESTABLISHED 1910/sshd

tcp 0 0 192.168.8.103:22 192.168.8.254:52930 ESTABLISHED 2467/sshd

tcp 0 288 192.168.8.103:22 192.168.8.254:65405 ESTABLISHED 3241/sshd

tcp 0 0 :::111 :::* LISTEN 1019/rpcbind

tcp 0 0 :::80 :::* LISTEN 2409/httpd

tcp 0 0 :::5043 :::* LISTEN 2877/java

tcp 0 0 :::5044 :::* LISTEN 2686/java

tcp 0 0 :::64917 :::* LISTEN 1037/rpc.statd

tcp 0 0 :::22 :::* LISTEN 1153/sshd

tcp 0 0 ::1:25 :::* LISTEN 1229/master

tcp 0 0 ::ffff:127.0.0.1:9600 :::* LISTEN 2686/java

tcp 0 0 ::ffff:127.0.0.1:9601 :::* LISTEN 2877/java

Here I have started two Logstash instances, listening on ports 5044 and 5043.

5. Deploy the Kibana node

1. Check the system environment:

[root@Kibana ~]# hostname

Kibana

[root@Kibana ~]# cat /etc/redhat-release

CentOS release 6.5 (Final)

[root@Kibana ~]# uname -r

2.6.32-431.el6.x86_64

2. Install Kibana

[root@Kibana ~]# tar xf kibana-6.3.1-linux-x86_64.tar.gz

[root@Kibana ~]# mv kibana-6.3.1-linux-x86_64 /usr/local/kibana

[root@Kibana ~]# cd /usr/local/kibana/config/

[root@Kibana config]# cp kibana.yml{,.default}

3. Edit the configuration file:

[root@Kibana config]# vim kibana.yml

server.port: 5601 # uncomment this line

server.host: "192.168.8.104" # uncomment and set to this host's address

elasticsearch.url: "http://192.168.8.102:9200" # set to the IP of the Elasticsearch host

4. Start the service:

[root@Kibana ~]# cd /usr/local/kibana/bin

[root@Kibana bin]# ./kibana

log [21:22:38.557] [info][status][plugin:[email protected]] Status changed from uninitialized to green - Ready

log [21:22:38.603] [info][status][plugin:[email protected]] Status changed from uninitialized to yellow - Waiting for Elasticsearch

log [21:22:38.608] [info][status][plugin:[email protected]] Status changed from uninitialized to yellow - Waiting for Elasticsearch

log [21:22:38.621] [info][status][plugin:[email protected]] Status changed from uninitialized to yellow - Waiting for Elasticsearch

log [21:22:38.629] [info][status][plugin:[email protected]] Status changed from uninitialized to yellow - Waiting for Elasticsearch

log [21:22:38.698] [info][status][plugin:[email protected]] Status changed from uninitialized to yellow - Waiting for Elasticsearch

log [21:22:38.700] [info][status][plugin:[email protected]] Status changed from uninitialized to yellow - Waiting for Elasticsearch

log [21:22:38.723] [info][status][plugin:[email protected]] Status changed from uninitialized to green - Ready

log [21:22:38.725] [info][status][plugin:[email protected]] Status changed from uninitialized to yellow - Waiting for Elasticsearch

log [21:22:38.858] [info][status][plugin:[email protected]] Status changed from uninitialized to green - Ready

log [21:22:38.860] [info][status][plugin:[email protected]] Status changed from uninitialized to yellow - Waiting for Elasticsearch

log [21:22:38.863] [info][status][plugin:[email protected]] Status changed from uninitialized to green - Ready

log [21:22:38.865] [info][status][plugin:[email protected]] Status changed from uninitialized to yellow - Waiting for Elasticsearch

log [21:22:38.867] [warning][security] Generating a random key for xpack.security.encryptionKey. To prevent sessions from being invalidated on restart, please set xpack.security.encryptionKey in kibana.yml

log [21:22:38.873] [warning][security] Session cookies will be transmitted over insecure connections. This is not recommended.

log [21:22:38.920] [info][status][plugin:[email protected]] Status changed from uninitialized to yellow - Waiting for Elasticsearch

log [21:22:38.933] [info][status][plugin:[email protected]] Status changed from uninitialized to green - Ready

log [21:22:38.940] [info][status][plugin:[email protected]] Status changed from uninitialized to yellow - Waiting for Elasticsearch

log [21:22:38.967] [info][status][plugin:[email protected]] Status changed from uninitialized to green - Ready

log [21:22:38.974] [info][status][plugin:[email protected]] Status changed from uninitialized to green - Ready

log [21:22:38.979] [info][status][plugin:[email protected]] Status changed from uninitialized to green - Ready

log [21:22:38.986] [info][status][plugin:[email protected]] Status changed from uninitialized to green - Ready

log [21:22:39.701] [warning][reporting] Generating a random key for xpack.reporting.encryptionKey. To prevent pending reports from failing on restart, please set xpack.reporting.encryptionKey in kibana.yml

log [21:22:39.708] [info][status][plugin:[email protected]] Status changed from uninitialized to yellow - Waiting for Elasticsearch

log [21:22:39.884] [info][listening] Server running at http://0.0.0.0:5601

log [21:22:39.901] [info][status][plugin:[email protected]] Status changed from yellow to green - Ready

log [21:22:39.906] [info][license][xpack] Imported license information from Elasticsearch for the [data] cluster: mode: basic | status: active

log [21:22:39.946] [info][status][plugin:[email protected]] Status changed from yellow to green - Ready

log [21:22:39.947] [info][status][plugin:[email protected]] Status changed from yellow to green - Ready

log [21:22:39.948] [info][status][plugin:[email protected]] Status changed from yellow to green - Ready

log [21:22:39.949] [info][status][plugin:[email protected]] Status changed from yellow to green - Ready

log [21:22:39.950] [info][status][plugin:[email protected]] Status changed from yellow to green - Ready

log [21:22:39.951] [info][status][plugin:[email protected]] Status changed from yellow to green - Ready

log [21:22:39.954] [info][status][plugin:[email protected]] Status changed from yellow to green - Ready

log [21:22:39.955] [info][status][plugin:[email protected]] Status changed from yellow to green - Ready

log [21:22:39.956] [info][status][plugin:[email protected]] Status changed from yellow to green - Ready

log [21:22:39.959] [info][status][plugin:[email protected]] Status changed from yellow to green - Ready

log [21:22:39.960] [info][status][plugin:[email protected]] Status changed from yellow to green - Ready

log [21:22:39.977] [info][kibana-monitoring][monitoring-ui] Starting all Kibana monitoring collectors

log [21:22:39.988] [info][license][xpack] Imported license information from Elasticsearch for the [monitoring] cluster: mode: basic | status: active

5. Open a browser and visit 192.168.8.104:5601

6. Configure Filebeat on the Apache host

[root@Apache ~]# cd /usr/local/filebeat/

[root@Apache filebeat]# ls

data fields.yml filebeat filebeat.reference.yml filebeat.yml kibana LICENSE.txt module modules.d NOTICE.txt README.md

[root@Apache filebeat]# vim filebeat.yml (only the following sections are changed)

    #=========================== Filebeat inputs =============================
    filebeat.inputs:
    - type: log
      paths:
        - "/var/log/httpd/*" # mind the log location here; my Apache was installed with yum
      fields:
        apache: true
      fields_under_root: true
    #============================== Kibana =====================================
    # Starting with Beats version 6.0.0, the dashboards are loaded via the Kibana API.
    # This requires a Kibana endpoint configuration.
    setup.kibana:
      # Kibana Host
      # Scheme and port can be left out and will be set to the default (http and 5601)
      # In case you specify an additional path, the scheme is required: http://localhost:5601/path
      # IPv6 addresses should always be defined as: https://[2001:db8::1]:5601
      host: "192.168.8.104:5601"
    #----------------------------- Logstash output --------------------------------
    # Only one output may be enabled, so the output.elasticsearch section that is
    # active in the default file has to be commented out.
    output.logstash:
      # The Logstash hosts
      hosts: ["192.168.8.103:5044"]
      # Optional SSL. By default is off.
      # List of root certificates for HTTPS server verifications
      #ssl.certificate_authorities: ["/etc/pki/root/ca.pem"]
      # Certificate for SSL client authentication
      #ssl.certificate: "/etc/pki/client/cert.pem"
      # Client Certificate Key
      #ssl.key: "/etc/pki/client/cert.key"
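
Before starting Filebeat, the edited file can be validated with Filebeat's built-in `test` subcommands, which check the configuration and try to reach the configured output. The wrapper below is a small sketch: `validate_filebeat` and the `FILEBEAT_HOME` variable are hypothetical names, and /usr/local/filebeat is the install directory used in this article.

```shell
#!/usr/bin/env bash
# Directory that contains the filebeat binary and filebeat.yml.
FILEBEAT_HOME=${FILEBEAT_HOME:-/usr/local/filebeat}

# validate_filebeat
# Returns 2 if the binary is missing, otherwise the status of the checks.
validate_filebeat() {
    if [ ! -x "$FILEBEAT_HOME/filebeat" ]; then
        echo "filebeat not found in $FILEBEAT_HOME" >&2
        return 2
    fi
    (
        cd "$FILEBEAT_HOME" || exit 2
        # First validate the configuration file, then try to connect
        # to the output defined in it (192.168.8.103:5044 above).
        ./filebeat test config -c filebeat.yml &&
            ./filebeat test output -c filebeat.yml
    )
}
```

A typical use would be `validate_filebeat && ./filebeat -e -c filebeat.yml`, so that Filebeat starts only when both checks pass. The output test needs Logstash to be listening already.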

[root@Apache filebeat]# ./filebeat -e -c filebeat.yml

First send a request to Apache to generate a log entry, then look at the output on the Logstash console:

{

"@timestamp" => 2018-07-22T21:37:06.606Z,

"input" => {

"type" => "log"

},

"tags" => [

[0] "beats_input_codec_plain_applied",

[1] "_geoip_lookup_failure"

],

"request" => "/",

"agent" => "\"curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.14.0.0 zlib/1.2.3 libidn/1.18 libssh2/1.4.2\"",

"beat" => {

"name" => "Logstash",

"hostname" => "Logstash",

"version" => "6.3.1"

},

"ident" => "-",

"verb" => "GET",

"apache" => true,

"httpversion" => "1.1",

"host" => {

"name" => "Logstash"

},

"prospector" => {

"type" => "log"

},

"clientip" => "192.168.8.101",

"source" => "/var/log/httpd/access_log",

"referrer" => "\"-\"",

"auth" => "-",

"datetime" => 2018-07-22T21:37:05.000Z,

"offset" => 25023,

"timestamp" => "23/Jul/2018:05:37:05 +0800",

"message" => "192.168.8.101 - - [23/Jul/2018:05:37:05 +0800] \"GET / HTTP/1.1\" 403 4961 \"-\" \"curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.14.0.0 zlib/1.2.3 libidn/1.18 libssh2/1.4.2\"",

"response" => "403",

"@version" => "1",

"bytes" => "4961",

"geoip" => {}

}

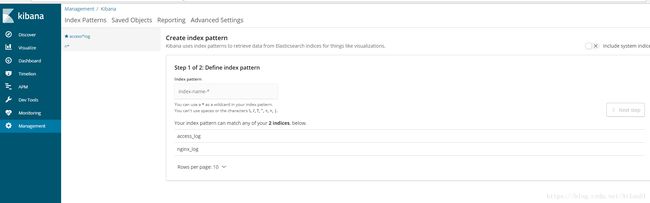

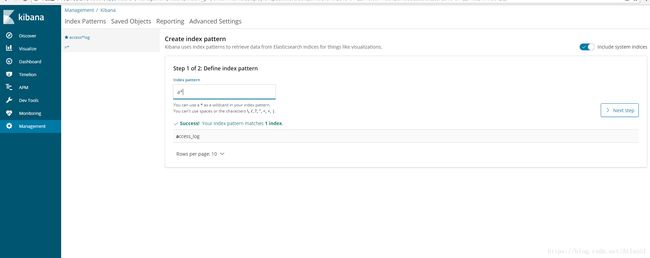

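Next, create the index pattern in Kibana.

Note: you can proceed to the next step only once the message below the input box changes to "Success! Your index pattern matches 1 index."

While creating the index pattern you will be asked to choose a filter. Choose not to use a filter here; otherwise you will not see any data.

After that, click the corresponding entry in the menu bar.

If you can see the data, everything works.

This completes the ELK setup.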