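【OpenCV Study Notes 012】Estimating Projective Relations Between Images

I. Camera Calibration

1. Experiment 1: Automatically detecting the corners of a chessboard pattern

Source code example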

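```cpp
#include <iostream>
#include <vector>
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/calib3d/calib3d.hpp>

using namespace std;
using namespace cv;

int main(){
    // Load the chessboard image (imread returns a cv::Mat; cvLoadImage would leak an IplImage)
    Mat image = imread("chessboard.jpg");
    if (image.empty()) {
        cerr << "Cannot load chessboard.jpg" << endl;
        return -1;
    }
    // Output vector of image corners
    vector<Point2f> imageCorners;
    // Number of inner corners per row (8) and per column (11) of the board
    Size boardSize(8, 11);
    // Detect the chessboard corners
    bool found = findChessboardCorners(image, boardSize, imageCorners);
    // Draw the corners; when the whole board was found they are connected in detection order
    drawChessboardCorners(image, boardSize, imageCorners, found);
    namedWindow("Corners on Chessboard");
    imshow("Corners on Chessboard", image);
    waitKey();
    return 0;
}
```

Result

The lines connecting the corners show the order in which the corners are stored in the vector.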

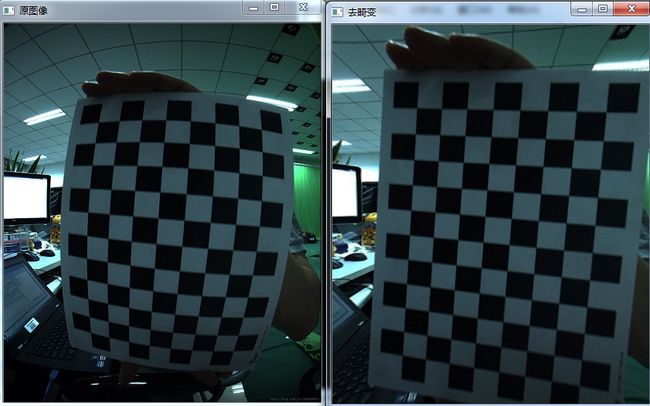
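The 3D object points used for calibration must be listed in the same order as these detected corners. Below is a minimal standard-library sketch of how the board-frame coordinates can be generated row by row, so that the k-th object point matches the k-th image corner (k = row × width + column). `Point3`, `boardCorners` and `cornerIndex` are hypothetical names (`Point3` stands in for `cv::Point3f`). It assumes, as the OpenCV documentation describes for `findChessboardCorners`, that corners come back row by row, left to right; which physical corner comes first still depends on how the board is oriented in the image.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Point3f
struct Point3 { float x, y, z; };

// Board-frame coordinates of the inner corners, generated row by row
// (i = row, j = column), in the same order as the detected image corners.
// The unit is one square; multiply by the real square size for metric units.
std::vector<Point3> boardCorners(int width, int height) {
    std::vector<Point3> pts;
    pts.reserve(static_cast<std::size_t>(width) * static_cast<std::size_t>(height));
    for (int i = 0; i < height; ++i)
        for (int j = 0; j < width; ++j)
            pts.push_back({static_cast<float>(i), static_cast<float>(j), 0.0f});
    return pts;
}

// Position of the corner at (row, col) in the corner vector
int cornerIndex(int row, int col, int width) { return row * width + col; }
```

With `Size boardSize(8, 11)`, the vector holds 88 corners and the corner in row 1, column 1 is element 9.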

2. Experiment 2: Removing lens distortion from the chessboard image to obtain a proper perspective image

Source code example

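```cpp
#include <iostream>
#include <string>
#include <vector>
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <opencv2/calib3d/calib3d.hpp>

using namespace std;
using namespace cv;

class CameraCalibrator{
    // Input points:
    // points in world (board) coordinates, one vector per view
    vector<vector<Point3f> > objectPoints;
    // the corresponding points in pixel coordinates
    vector<vector<Point2f> > imagePoints;
    // Output matrices
    Mat cameraMatrix;
    Mat distCoeffs;
    // Flag specifying how the calibration is done
    int flag;
    // Maps used for undistortion
    Mat map1, map2;
    bool mustInitUndistort;

public:
    CameraCalibrator() : flag(0), mustInitUndistort(true) {}

    // Open the chessboard images and extract their corners
    int addChessboardPoints(const vector<string>& filelist, const Size& boardSize);
    // Add the scene points and the corresponding image points of one view
    void addPoints(const vector<Point2f>& imageCorners, const vector<Point3f>& objectCorners);
    // Calibrate and return the re-projection error
    double calibrate(const Size& imageSize);
    // Remove the distortion from an image (after calibration)
    Mat remap(const Mat& image);
};

int CameraCalibrator::addChessboardPoints(const vector<string>& filelist, const Size& boardSize){
    // The two kinds of coordinates of the board points
    vector<Point2f> imageCorners;
    vector<Point3f> objectCorners;
    // 3D scene points: the board corners in the board frame, at (x,y,z) = (i,j,0)
    for (int i = 0; i < boardSize.height; i++)
        for (int j = 0; j < boardSize.width; j++)
            objectCorners.push_back(Point3f(i, j, 0.0f));

    // 2D image points
    Mat image; // holds the chessboard image
    int success = 0;
    // for all viewpoints
    for (size_t i = 0; i < filelist.size(); i++) {
        // Open the image in grayscale (cornerSubPix needs a single-channel image)
        image = imread(filelist[i], 0);
        if (image.empty())
            continue;
        // Get the chessboard corners
        bool found = findChessboardCorners(image, boardSize, imageCorners);
        // Keep the view only if the complete board was detected
        if (!found || imageCorners.size() != (size_t)boardSize.area())
            continue;
        // Refine the corners to sub-pixel accuracy
        cornerSubPix(image, imageCorners,
                     Size(5, 5), Size(-1, -1),
                     TermCriteria(TermCriteria::MAX_ITER + TermCriteria::EPS,
                                  30,    // maximum number of iterations
                                  0.1)); // minimum accuracy
        // Add the image points and scene points of this view
        addPoints(imageCorners, objectCorners);
        success++;
    }
    return success;
}

void CameraCalibrator::addPoints(const vector<Point2f>& imageCorners, const vector<Point3f>& objectCorners){
    // 2D image points of one view
    imagePoints.push_back(imageCorners);
    // the corresponding 3D scene points
    objectPoints.push_back(objectCorners);
}

double CameraCalibrator::calibrate(const Size& imageSize){
    // The undistortion maps must be recomputed
    mustInitUndistort = true;
    // Output rotations and translations, one per view
    vector<Mat> rvecs, tvecs;
    // Start calibration
    return calibrateCamera(objectPoints, // 3D points
                           imagePoints,  // image points
                           imageSize,    // image size
                           cameraMatrix, // output camera matrix
                           distCoeffs,   // output distortion coefficients
                           rvecs, tvecs, // rotations and translations
                           flag);        // additional options
}

Mat CameraCalibrator::remap(const Mat& image){
    Mat undistorted;
    if (mustInitUndistort) { // computed only once per calibration
        initUndistortRectifyMap(cameraMatrix, // camera matrix from calibration
                                distCoeffs,   // distortion coefficients from calibration
                                Mat(),        // optional rectification matrix (none here)
                                Mat(),        // camera matrix of the undistorted image (empty: OpenCV derives a default)
                                image.size(), // size of the undistorted image
                                CV_32FC1,     // type of the output maps
                                map1, map2);  // x and y mapping functions
        mustInitUndistort = false;
    }
    // Apply the mapping functions
    cv::remap(image, undistorted, map1, map2, INTER_LINEAR); // interpolation type
    return undistorted;
}

int main(){
    CameraCalibrator Cc;
    vector<string> filelist;
    // One view keeps the example short; a reliable calibration needs
    // several views of the board taken from different angles
    filelist.push_back("chessboard.jpg");
    Mat image = imread(filelist[0]);
    if (image.empty())
        return -1;
    // Camera calibration
    Size boardSize(8, 11);
    if (Cc.addChessboardPoints(filelist, boardSize) == 0) {
        cerr << "No complete chessboard found" << endl;
        return -1;
    }
    double error = Cc.calibrate(image.size());
    cout << "Re-projection error: " << error << endl;
    // Undistortion
    Mat uImage = Cc.remap(image);
    imshow("Original image", image);
    imshow("Undistorted image", uImage);
    waitKey(0);
    return 0;
}
```

The calibration process: detect a set of corners and add them to the point vectors --> compute the calibration parameters --> describe the distortion with a mathematical model and build its inverse mapping to undo the distortion in the image.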

Original image and undistorted (perspective) image
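To make the calibration steps more concrete, here is a small standard-library sketch of the kind of model `calibrateCamera` fits. As a simplifying assumption it keeps only the pinhole projection and the two radial coefficients k1 and k2 (OpenCV's full model also has tangential terms p1, p2 and a third radial term k3). `project` maps a point in camera coordinates to a pixel, `rmsError` computes a root-mean-square re-projection error of the kind `calibrate()` returns, and `undistortNormalized` inverts the radial model for a single pixel by fixed-point iteration. All struct and function names are hypothetical. For whole images, `initUndistortRectifyMap` sidesteps this inversion: for each output pixel it applies the forward model to find where to sample the distorted input, which is why `remap` only needs the two precomputed maps.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Pinhole intrinsics plus radial distortion only (p1, p2, k3 assumed zero)
struct Intrinsics {
    double fx, fy, cx, cy;  // focal lengths and principal point, in pixels
    double k1, k2;          // radial distortion coefficients
};
struct Pixel { double u, v; };
struct Normalized { double x, y; };

// Project a point given in camera coordinates (Z > 0) to a distorted pixel
Pixel project(const Intrinsics& K, double X, double Y, double Z) {
    double x = X / Z, y = Y / Z;                  // normalized image coordinates
    double r2 = x * x + y * y;
    double f = 1.0 + K.k1 * r2 + K.k2 * r2 * r2;  // radial distortion factor
    return {K.fx * x * f + K.cx, K.fy * y * f + K.cy};
}

// Invert the radial model for one pixel: iterate x = x_d / f(r(x)) until it settles
Normalized undistortNormalized(const Intrinsics& K, Pixel p, int iters = 20) {
    double xd = (p.u - K.cx) / K.fx, yd = (p.v - K.cy) / K.fy;
    double x = xd, y = yd;
    for (int i = 0; i < iters; ++i) {
        double r2 = x * x + y * y;
        double f = 1.0 + K.k1 * r2 + K.k2 * r2 * r2;
        x = xd / f;
        y = yd / f;
    }
    return {x, y};
}

// RMS re-projection error: square root of the mean squared pixel distance
// (assumes both vectors have the same length)
double rmsError(const std::vector<Pixel>& observed, const std::vector<Pixel>& projected) {
    if (observed.empty()) return 0.0;
    double sum = 0.0;
    for (std::size_t i = 0; i < observed.size(); ++i) {
        double du = observed[i].u - projected[i].u;
        double dv = observed[i].v - projected[i].v;
        sum += du * du + dv * dv;
    }
    return std::sqrt(sum / observed.size());
}
```

The fixed-point iteration converges quickly for the moderate distortion typical of ordinary lenses; strongly distorting lenses may need more iterations or a different solver.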

II. Computing the Fundamental Matrix of an Image Pair
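The fundamental matrix F relates corresponding pixels x1 in image 1 and x2 in image 2 through the epipolar constraint x2ᵀ F x1 = 0 (homogeneous coordinates), which is the convention OpenCV uses. For a point x1, the product F x1 = (a, b, c) is its epipolar line a·u + b·v + c = 0 in image 2; `computeCorrespondEpilines` returns this line normalized so that a² + b² = 1, and the drawing loop in the code intersects it with the first and last image columns. A standard-library sketch with hypothetical helper names; the test matrix is an assumption chosen for clarity, the F of a pure horizontal translation with identity intrinsics (F = [t]×), whose epipolar lines are simply image rows.

```cpp
#include <array>

using Mat3 = std::array<std::array<double, 3>, 3>;
using Vec3 = std::array<double, 3>;

// Epipolar line in image 2 of pixel (x, y) in image 1: l = F * (x, y, 1)^T,
// i.e. the line l[0]*u + l[1]*v + l[2] = 0
Vec3 epipolarLine(const Mat3& F, double x, double y) {
    Vec3 p{x, y, 1.0};
    Vec3 l{};
    for (int r = 0; r < 3; ++r)
        for (int k = 0; k < 3; ++k)
            l[r] += F[r][k] * p[k];
    return l;
}

// Algebraic residual x2^T F x1; zero for a perfect correspondence
double epipolarResidual(const Mat3& F, double x1, double y1, double x2, double y2) {
    Vec3 l = epipolarLine(F, x1, y1);
    return l[0] * x2 + l[1] * y2 + l[2];
}

// Row v where the line crosses column u (assumes l[1] != 0), as in the drawing loop
double lineRowAt(const Vec3& l, double u) { return -(l[2] + l[0] * u) / l[1]; }
```

For the translation-only F, a point in row 5 of image 1 yields the horizontal line v = 5 in image 2, and any match off that row gets a non-zero residual.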

Source code example

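```cpp
#include <iostream>
#include <vector>
#include <algorithm>
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/features2d/features2d.hpp>
#include <opencv2/calib3d/calib3d.hpp>
#include "opencv2/nonfree/nonfree.hpp"
#include <opencv2/legacy/legacy.hpp> // BruteForceMatcher

using namespace std;
using namespace cv;

int main(){
    // Load the two views in grayscale
    Mat image1 = imread("church01.jpg", 0);
    Mat image2 = imread("church03.jpg", 0);
    if (image1.empty() || image2.empty())
        return -1;
    // Vectors of keypoints
    vector<KeyPoint> keypoints1, keypoints2;
    // Construct the SURF feature detector
    SurfFeatureDetector surf(2500.); // Hessian threshold
    // Detect the SURF features
    surf.detect(image1, keypoints1);
    surf.detect(image2, keypoints2);
    // Construct the SURF descriptor extractor
    SurfDescriptorExtractor surfDesc;
    // Extract the SURF descriptors
    Mat descriptors1, descriptors2;
    surfDesc.compute(image1, keypoints1, descriptors1);
    surfDesc.compute(image2, keypoints2, descriptors2);
    // Construct the matcher
    BruteForceMatcher<L2<float> > matcher;
    // Match the descriptors of the two images
    vector<DMatch> matches;
    matcher.match(descriptors1, descriptors2, matches);
    // The 7-point method needs exactly 7 correspondences
    if (matches.size() < 7)
        return -1;
    // Move the 7 best matches (smallest distance) to the front
    nth_element(matches.begin(),     // initial position
                matches.begin() + 6, // position of the sorted element
                matches.end());      // end position
    // Remove all elements after the 7th
    matches.erase(matches.begin() + 7, matches.end());

    Mat imageMatches;
    drawMatches(image1, keypoints1,     // first image and its keypoints
                image2, keypoints2,     // second image and its keypoints
                matches,                // the matches
                imageMatches,           // the image produced
                Scalar(255, 255, 255)); // color of the lines
    namedWindow("Matches");
    imshow("Matches", imageMatches);

    // Convert the selected KeyPoints to Point2f
    vector<Point2f> selPoints1, selPoints2;
    vector<int> pointIndexes1, pointIndexes2;
    for (vector<DMatch>::const_iterator it = matches.begin(); it != matches.end(); ++it) {
        // Get the indexes of the selected matched keypoints
        pointIndexes1.push_back(it->queryIdx);
        pointIndexes2.push_back(it->trainIdx);
    }
    KeyPoint::convert(keypoints1, selPoints1, pointIndexes1);
    KeyPoint::convert(keypoints2, selPoints2, pointIndexes2);

    // Compute the F matrix from the 7 matches
    Mat fundemental = findFundamentalMat(
        Mat(selPoints1), // points in image 1
        Mat(selPoints2), // points in image 2
        CV_FM_7POINT);   // 7-point method
    if (fundemental.empty())
        return -1;
    // The 7-point method can return up to 3 solutions stacked in a 9x3 matrix; keep the first
    fundemental = fundemental.rowRange(0, 3);

    // Epipolar lines in the right image for the points of the left image
    vector<Vec3f> lines1;
    computeCorrespondEpilines(
        Mat(selPoints1), // image points
        1,               // they belong to image 1 (can also be 2)
        fundemental,     // F matrix
        lines1);         // vector of epipolar lines
    // For every epipolar line a*x + b*y + c = 0
    for (vector<Vec3f>::const_iterator it = lines1.begin(); it != lines1.end(); ++it) {
        // Draw the segment between the first and the last column
        line(image2,
             Point(0, -(*it)[2] / (*it)[1]),
             Point(image2.cols, -((*it)[2] + (*it)[0] * image2.cols) / (*it)[1]),
             Scalar(255, 255, 255));
    }
    namedWindow("Left Image Epilines");
    imshow("Left Image Epilines", image2);
    waitKey(0);
    return 0;
}
```

III. Matching Image Features Using the Epipolar Constraint of Two Views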
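The robust matcher in this section filters the raw k-nearest-neighbor matches in two stages before RANSAC: a ratio test (keep a match only if its best distance is clearly smaller than the second best) and a symmetry test (keep i→j only if j→i is also the best match in the reverse direction). A standard-library sketch of both, with a hypothetical `Match` struct standing in for `cv::DMatch` and hypothetical function names. Instead of the nested loops of `symmetryTest`, it looks up reverse matches in a table indexed by keypoint; that gives the same result as long as each query keypoint has at most one surviving match, which holds for `knnMatch` output.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::DMatch
struct Match { int queryIdx, trainIdx; float distance; };

// Ratio test: keep the best neighbor only if best/second-best <= ratio
std::vector<Match> ratioFilter(const std::vector<std::vector<Match>>& knn, float ratio) {
    std::vector<Match> kept;
    for (const std::vector<Match>& m : knn)
        if (m.size() >= 2 && m[0].distance / m[1].distance <= ratio)
            kept.push_back(m[0]);
    return kept;
}

// Symmetry test: keep (q -> t) from image 1 only if image 2's keypoint t maps back to q.
// n2 is the number of keypoints in image 2 (all indices are assumed to be in range).
std::vector<Match> symmetricMatches(const std::vector<Match>& m12,
                                    const std::vector<Match>& m21, int n2) {
    std::vector<int> back(static_cast<std::size_t>(n2), -1);  // back[j]: best image-1 match of j
    for (const Match& m : m21) back[m.queryIdx] = m.trainIdx;
    std::vector<Match> out;
    for (const Match& m : m12)
        if (back[m.trainIdx] == m.queryIdx) out.push_back(m);
    return out;
}
```

The table lookup makes the symmetry test linear in the number of matches, whereas the nested loops scale with the product of the two match counts; for a few thousand SURF keypoints either is fast enough.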

Experiment

Source code example

```cpp
#include <iostream>
#include <vector>
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/features2d/features2d.hpp>
#include <opencv2/calib3d/calib3d.hpp>
#include <opencv2/nonfree/nonfree.hpp>
#include <opencv2/legacy/legacy.hpp> // BruteForceMatcher

using namespace std;
using namespace cv;

class RobustMatcher{
private:
    // Smart pointer to the feature detector
    Ptr<FeatureDetector> detector;
    // Smart pointer to the descriptor extractor
    Ptr<DescriptorExtractor> extractor;
    float ratio;       // maximum ratio between the 1st and 2nd nearest neighbor
    bool refineF;      // whether to refine the F matrix
    double distance;   // distance threshold to the epipolar line (RANSAC inlier test)
    double confidence; // confidence level (probability)

public:
    RobustMatcher() : ratio(0.65f), refineF(true), distance(3.0), confidence(0.99) {
        // SURF is the default feature
        detector = new SurfFeatureDetector();
        extractor = new SurfDescriptorExtractor();
    }
    // Set the feature detector
    void setFeatureDetector(const Ptr<FeatureDetector>& detect) { detector = detect; }
    // Set the descriptor extractor
    void setDescriptorExtractor(const Ptr<DescriptorExtractor>& desc) { extractor = desc; }
    // Set the RANSAC distance threshold to the epipolar line
    void setMinDistanceToEpipolar(double d) { distance = d; }
    // Set the RANSAC confidence level
    void setConfidenceLevel(double c) { confidence = c; }
    // Set the nearest-neighbor ratio threshold
    void setRatio(float r) { ratio = r; }
    // Whether F is recomputed from all inliers
    void refineFundamental(bool flag) { refineF = flag; }

    // Match feature points using the ratio test, the symmetry test and RANSAC.
    // Returns the fundamental matrix (empty if there are too few matches).
    Mat match(Mat& image1, Mat& image2,         // input images
              vector<DMatch>& matches,          // output matches
              vector<KeyPoint>& keypoints1,     // output keypoints
              vector<KeyPoint>& keypoints2) {
        // 1a. Detect the features
        detector->detect(image1, keypoints1);
        detector->detect(image2, keypoints2);
        // 1b. Extract the descriptors
        Mat descriptions1, descriptions2;
        extractor->compute(image1, keypoints1, descriptions1);
        extractor->compute(image2, keypoints2, descriptions2);
        // 2. Match the descriptors of the two images
        BruteForceMatcher<L2<float> > matcher;
        // k nearest neighbors (k=2) from image 1 to image 2
        vector<vector<DMatch> > matches1;
        matcher.knnMatch(descriptions1, descriptions2,
                         matches1, // vector of matches (two per entry)
                         2);       // return the two nearest neighbors
        // k nearest neighbors (k=2) from image 2 to image 1
        vector<vector<DMatch> > matches2;
        matcher.knnMatch(descriptions2, descriptions1, matches2, 2);
        // 3. Remove matches whose NN ratio exceeds the threshold
        ratioTest(matches1); // clean the image 1 -> image 2 matches
        ratioTest(matches2); // clean the image 2 -> image 1 matches
        // 4. Remove non-symmetric matches
        vector<DMatch> symMatches;
        symmetryTest(matches1, matches2, symMatches);
        // 5. Final validation with RANSAC; return the fundamental matrix found
        return ransacTest(symMatches, keypoints1, keypoints2, matches);
    }

    // Remove matches whose NN ratio exceeds the threshold. Removed entries are
    // cleared (their size becomes 0). Returns the number of removed matches.
    int ratioTest(vector<vector<DMatch> >& matches) {
        int removed = 0;
        // for all matches
        for (vector<vector<DMatch> >::iterator matchIterator = matches.begin();
             matchIterator != matches.end(); ++matchIterator) {
            if (matchIterator->size() > 1) { // two nearest neighbors were found
                // check the distance ratio
                if ((*matchIterator)[0].distance / (*matchIterator)[1].distance > ratio) {
                    matchIterator->clear(); // remove the match
                    removed++;
                }
            } else { // fewer than two nearest neighbors
                matchIterator->clear(); // remove the match
                removed++;
            }
        }
        return removed;
    }

    // Insert the symmetric matches into symMatches
    void symmetryTest(const vector<vector<DMatch> >& matches1,
                      const vector<vector<DMatch> >& matches2,
                      vector<DMatch>& symMatches) {
        // for all image 1 -> image 2 matches
        for (vector<vector<DMatch> >::const_iterator matchIterator1 = matches1.begin();
             matchIterator1 != matches1.end(); ++matchIterator1) {
            if (matchIterator1->size() < 2) // ignore deleted matches
                continue;
            // for all image 2 -> image 1 matches
            for (vector<vector<DMatch> >::const_iterator matchIterator2 = matches2.begin();
                 matchIterator2 != matches2.end(); ++matchIterator2) {
                if (matchIterator2->size() < 2) // ignore deleted matches
                    continue;
                // symmetry test
                if ((*matchIterator1)[0].queryIdx == (*matchIterator2)[0].trainIdx &&
                    (*matchIterator2)[0].queryIdx == (*matchIterator1)[0].trainIdx) {
                    // add the symmetric match
                    symMatches.push_back(DMatch((*matchIterator1)[0].queryIdx,
                                                (*matchIterator1)[0].trainIdx,
                                                (*matchIterator1)[0].distance));
                    break; // next image 1 -> image 2 match
                }
            }
        }
    }

    // Identify good matches using RANSAC. Returns the fundamental matrix.
    Mat ransacTest(const vector<DMatch>& matches,
                   const vector<KeyPoint>& keypoints1,
                   const vector<KeyPoint>& keypoints2,
                   vector<DMatch>& outMatches) {
        outMatches.clear();
        // Convert KeyPoints to Point2f
        vector<Point2f> points1, points2;
        for (vector<DMatch>::const_iterator it = matches.begin(); it != matches.end(); ++it) {
            points1.push_back(keypoints1[it->queryIdx].pt); // left keypoint
            points2.push_back(keypoints2[it->trainIdx].pt); // right keypoint
        }
        // Too few correspondences to estimate F robustly
        if (points1.size() < 8)
            return Mat();
        // Compute F with RANSAC
        vector<uchar> inliers(points1.size(), 0);
        Mat fundemental = findFundamentalMat(
            Mat(points1), Mat(points2), // matching points
            inliers,                    // match status (inlier or outlier)
            CV_FM_RANSAC,               // RANSAC method
            distance,                   // distance to the epipolar line
            confidence);                // confidence probability
        // Extract the surviving (inlier) matches
        vector<uchar>::const_iterator itIn = inliers.begin();
        vector<DMatch>::const_iterator itM = matches.begin();
        for (; itIn != inliers.end(); ++itIn, ++itM) {
            if (*itIn) // valid match
                outMatches.push_back(*itM);
        }
        if (refineF && outMatches.size() >= 8) {
            // Recompute F from all accepted matches:
            // convert the keypoints to Point2f for the final F computation
            points1.clear();
            points2.clear();
            for (vector<DMatch>::const_iterator it = outMatches.begin(); it != outMatches.end(); ++it) {
                points1.push_back(keypoints1[it->queryIdx].pt); // left keypoint
                points2.push_back(keypoints2[it->trainIdx].pt); // right keypoint
            }
            // 8-point F from all accepted matches
            fundemental = findFundamentalMat(
                Mat(points1), Mat(points2), // matches
                CV_FM_8POINT);              // 8-point method
        }
        return fundemental;
    }
};

int main(){
    Mat image1 = imread("canal1.jpg", 0);
    Mat image2 = imread("canal2.jpg", 0);
    if (image1.empty() || image2.empty())
        return -1;
    // Prepare the matcher
    RobustMatcher rmatcher;
    rmatcher.setConfidenceLevel(0.98);
    rmatcher.setMinDistanceToEpipolar(1.0);
    rmatcher.setRatio(0.65f);
    Ptr<FeatureDetector> pfd = new SurfFeatureDetector(10);
    rmatcher.setFeatureDetector(pfd);
    // Match the two images
    vector<DMatch> matches;
    vector<KeyPoint> keypoints1, keypoints2;
    Mat fundemental = rmatcher.match(image1, image2, matches, keypoints1, keypoints2);
    if (fundemental.empty())
        return -1;
    // Draw the matches
    Mat imageMatches;
    drawMatches(image1, keypoints1,     // first image and its keypoints
                image2, keypoints2,     // second image and its keypoints
                matches,                // the matches
                imageMatches,           // the image produced
                Scalar(255, 255, 255)); // color of the lines
    namedWindow("Matches");
    imshow("Matches", imageMatches);
    // Convert KeyPoints to Point2f and mark the matched points
    vector<Point2f> points1, points2;
    for (vector<DMatch>::const_iterator it = matches.begin(); it != matches.end(); ++it) {
        // keypoint in the left image
        Point2f p1 = keypoints1[it->queryIdx].pt;
        points1.push_back(p1);
        circle(image1, p1, 3, Scalar(255, 255, 255), 3);
        // keypoint in the right image
        Point2f p2 = keypoints2[it->trainIdx].pt;
        points2.push_back(p2);
        circle(image2, p2, 3, Scalar(255, 255, 255), 3);
    }
    // Draw the epipolar lines: points of image 1 give lines in image 2, and vice versa
    vector<Vec3f> lines1;
    computeCorrespondEpilines(Mat(points1), 1, fundemental, lines1);
    for (vector<Vec3f>::const_iterator it = lines1.begin(); it != lines1.end(); ++it) {
        line(image2, Point(0, -(*it)[2] / (*it)[1]),
             Point(image2.cols, -((*it)[2] + (*it)[0] * image2.cols) / (*it)[1]),
             Scalar(255, 255, 255));
    }
    vector<Vec3f> lines2;
    computeCorrespondEpilines(Mat(points2), 2, fundemental, lines2);
    for (vector<Vec3f>::const_iterator it = lines2.begin(); it != lines2.end(); ++it) {
        line(image1, Point(0, -(*it)[2] / (*it)[1]),
             Point(image1.cols, -((*it)[2] + (*it)[0] * image1.cols) / (*it)[1]),
             Scalar(255, 255, 255));
    }
    // Show the images with their epipolar lines
    namedWindow("Right Image Epilines (RANSAC)");
    imshow("Right Image Epilines (RANSAC)", image1);
    namedWindow("Left Image Epilines (RANSAC)");
    imshow("Left Image Epilines (RANSAC)", image2);
    waitKey();
    return 0;
}
```

Results

Matched feature points
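The `confidence` parameter (0.98 in the `main` above) controls how many random samples RANSAC draws. The usual estimate is N = log(1 - p) / log(1 - w^s), where p is the confidence, w the fraction of inliers and s the number of correspondences per sample. As far as I understand it, OpenCV's RANSAC for the fundamental matrix draws 7-point samples and updates N adaptively as its inlier estimate improves, so treat this as an approximation of its behavior rather than its exact code. A standard-library sketch with a hypothetical function name:

```cpp
#include <cmath>

// Number of samples needed so that, with probability `confidence`, at least one
// sample of `sampleSize` correspondences contains only inliers.
// Assumes 0 < confidence < 1 and 0 < inlierRatio < 1.
int ransacIterations(double confidence, double inlierRatio, int sampleSize) {
    double pGood = std::pow(inlierRatio, sampleSize);  // P(one sample is all inliers)
    return static_cast<int>(std::ceil(std::log(1.0 - confidence) / std::log(1.0 - pGood)));
}
```

With p = 0.99, w = 0.5 and s = 7 this gives 588 samples; lowering p to 0.98 reduces the count, while 8-point samples need noticeably more. This is one reason the matcher prunes outliers with the ratio and symmetry tests first: a higher inlier ratio w shrinks N dramatically.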

IV. The Homography Between Two Images

Source code example

```cpp
// This main() replaces the one of section III: it reuses the RobustMatcher
// class and the includes from that listing.
int main()
{
    // Read the images (assumed to have the same size)
    cv::Mat image1 = cv::imread("parliament1.bmp", 0);
    cv::Mat image2 = cv::imread("parliament2.bmp", 0);
    if (image1.empty() || image2.empty())
        return -1;
    // Prepare the matcher
    RobustMatcher rmatcher;
    rmatcher.setConfidenceLevel(0.98);
    rmatcher.setMinDistanceToEpipolar(1.0);
    rmatcher.setRatio(0.65f);
    cv::Ptr<cv::FeatureDetector> pfd = new cv::SurfFeatureDetector(10);
    rmatcher.setFeatureDetector(pfd);
    // Match the two images (the returned F is not needed here, only the filtered matches)
    std::vector<cv::DMatch> matches;
    std::vector<cv::KeyPoint> keypoints1, keypoints2;
    rmatcher.match(image1, image2, matches, keypoints1, keypoints2);
    // Draw the matches
    cv::Mat imageMatches;
    cv::drawMatches(image1, keypoints1,         // first image and its keypoints
                    image2, keypoints2,         // second image and its keypoints
                    matches,                    // the matches
                    imageMatches,               // the image produced
                    cv::Scalar(255, 255, 255)); // color of the lines
    cv::namedWindow("Matches");
    cv::imshow("Matches", imageMatches);
    // Convert KeyPoints to Point2f
    std::vector<cv::Point2f> points1, points2;
    for (std::vector<cv::DMatch>::const_iterator it = matches.begin(); it != matches.end(); ++it) {
        points1.push_back(keypoints1[it->queryIdx].pt); // keypoint in the left image
        points2.push_back(keypoints2[it->trainIdx].pt); // keypoint in the right image
    }
    // A homography needs at least 4 correspondences
    if (points1.size() < 4)
        return -1;
    // Find the homography between the two images
    std::vector<uchar> inliers(points1.size(), 0);
    cv::Mat homography = cv::findHomography(
        cv::Mat(points1), cv::Mat(points2), // corresponding points
        inliers,   // output inlier mask
        CV_RANSAC, // RANSAC method
        1.);       // maximum reprojection distance (pixels)
    // Draw the inlier points
    std::vector<cv::Point2f>::const_iterator itPts = points1.begin();
    std::vector<uchar>::const_iterator itIn = inliers.begin();
    while (itPts != points1.end()) {
        // draw a circle at each inlier location
        if (*itIn)
            cv::circle(image1, *itPts, 3, cv::Scalar(255, 255, 255), 2);
        ++itPts;
        ++itIn;
    }
    itPts = points2.begin();
    itIn = inliers.begin();
    while (itPts != points2.end()) {
        // draw a circle at each inlier location
        if (*itIn)
            cv::circle(image2, *itPts, 3, cv::Scalar(255, 255, 255), 2);
        ++itPts;
        ++itIn;
    }
    cv::namedWindow("Image 1 Homography Points");
    cv::imshow("Image 1 Homography Points", image1);
    cv::namedWindow("Image 2 Homography Points");
    cv::imshow("Image 2 Homography Points", image2);
    // Warp image1 into the frame of image2
    cv::Mat result;
    cv::warpPerspective(image1,     // input image
                        result,     // output image
                        homography, // homography
                        cv::Size(2 * image1.cols, image1.rows)); // size of the output image
    // Copy image 2 into the left half of the full image
    cv::Mat half(result, cv::Rect(0, 0, image2.cols, image2.rows));
    image2.copyTo(half); // copy image 2 into this ROI
    cv::namedWindow("After warping");
    cv::imshow("After warping", result);
    cv::waitKey();
    return 0;
}
```

Results
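`warpPerspective` relates pixels through the homography in homogeneous coordinates: (x', y', w') = H (x, y, 1), and the mapped pixel is (x'/w', y'/w'). (By default it inverts H and, for each destination pixel, samples the source image.) The code above fixes the output canvas at 2 × image1.cols by image1.rows; one way to check whether the warped image1 actually fits is to map its four corners through H and take their bounding box, since anything landing at negative coordinates or beyond the canvas is cropped. A standard-library sketch with hypothetical names, assuming w' stays positive over the whole image; the nine values could be copied out of the `CV_64F` matrix returned by `findHomography` with `homography.at<double>(r, c)`.

```cpp
#include <algorithm>
#include <array>

using Mat3 = std::array<std::array<double, 3>, 3>;

struct Pt { double x, y; };

// Map a pixel through H: (x', y', w') = H * (x, y, 1), result (x'/w', y'/w')
Pt applyHomography(const Mat3& H, Pt p) {
    double xs = H[0][0] * p.x + H[0][1] * p.y + H[0][2];
    double ys = H[1][0] * p.x + H[1][1] * p.y + H[1][2];
    double w  = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    return {xs / w, ys / w};
}

// Bounding box {minX, maxX, minY, maxY} of a cols x rows image after warping by H
std::array<double, 4> warpedBounds(const Mat3& H, int cols, int rows) {
    const Pt corners[4] = {{0.0, 0.0}, {double(cols), 0.0},
                           {0.0, double(rows)}, {double(cols), double(rows)}};
    double minX = 1e300, maxX = -1e300, minY = 1e300, maxY = -1e300;
    for (const Pt& c : corners) {
        Pt q = applyHomography(H, c);
        minX = std::min(minX, q.x); maxX = std::max(maxX, q.x);
        minY = std::min(minY, q.y); maxY = std::max(maxY, q.y);
    }
    return {{minX, maxX, minY, maxY}};
}
```

If the box extends past 2 × image1.cols or below zero, a larger canvas (or a translation composed into H) would be needed to keep the whole warped image.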
