I. Installing and configuring Hadoop

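- Note: Hadoop has real memory requirements, so give every host enough RAM!

- Install Hadoop and the JDK (the JDK is required to run Hadoop)

- Work as the hadoop user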

[root@server1 ~]# ls

hadoop-2.7.3.tar.gz jdk-7u79-linux-x64.tar.gz

[root@server1 ~]# useradd -u 800 hadoop

[root@server1 ~]# mv * /home/hadoop/

[root@server1 ~]# su - hadoop

[hadoop@server1 ~]$ ls

hadoop-2.7.3.tar.gz jdk-7u79-linux-x64.tar.gz

[hadoop@server1 ~]$ tar zxf hadoop-2.7.3.tar.gz

[hadoop@server1 ~]$ tar zxf jdk-7u79-linux-x64.tar.gz

[hadoop@server1 ~]$ ln -s jdk1.7.0_79/ java

[hadoop@server1 ~]$ ln -s hadoop-2.7.3 hadoop

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ cd etc/hadoop/

[hadoop@server1 hadoop]$ vim hadoop-env.sh

export JAVA_HOME=/home/hadoop/java
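You can make the same JAVA_HOME edit without an editor, which helps when it has to be repeated. The sketch below assumes the stock hadoop-env.sh contains exactly one line starting with `export JAVA_HOME=`. The name set_java_home is hypothetical.

```sh
# set_java_home ENV_FILE JAVA_DIR
# Hypothetical helper: replace the "export JAVA_HOME=..." line in hadoop-env.sh.
set_java_home() {
    env_file=$1
    java_dir=$2
    # '|' is the sed delimiter because the path contains '/'.
    # Writing to a temporary file avoids the GNU/BSD differences of sed -i.
    sed "s|^export JAVA_HOME=.*|export JAVA_HOME=$java_dir|" "$env_file" \
        > "$env_file.tmp" && mv "$env_file.tmp" "$env_file"
}
```

Usage: `set_java_home /home/hadoop/hadoop/etc/hadoop/hadoop-env.sh /home/hadoop/java`.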

[hadoop@server1 hadoop]$ pwd

/home/hadoop/hadoop

[hadoop@server1 hadoop]$ mkdir input

[hadoop@server1 hadoop]$ cp etc/hadoop/* input/

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar grep input output 'dfs[a-z.]+'

[hadoop@server1 hadoop]$ cat output/*

6 dfs.audit.logger

4 dfs.class

3 dfs.server.namenode.

2 dfs.period

2 dfs.audit.log.maxfilesize

2 dfs.audit.log.maxbackupindex

1 dfsmetrics.log

1 dfsadmin

1 dfs.servers

1 dfs.file
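The grep example counts every match of the regular expression across the input files and sorts the results by frequency. As a rough local cross-check, standard tools can produce the same counts. This sketch assumes a grep with -o and -E support and input files in a single directory. The name local_grep_count is hypothetical.

```sh
# local_grep_count DIR REGEX
# Hypothetical helper: count every match of REGEX in the files under DIR and
# print "count<TAB>match", most frequent first (the layout of output/* above).
local_grep_count() {
    dir=$1
    regex=$2
    # -h: omit file names, -o: print only the matched text, -E: extended regex
    grep -ohE "$regex" "$dir"/* |
        sort |          # group identical matches together
        uniq -c |       # prefix each distinct match with its count
        sort -rn |      # highest count first
        awk '{ print $1 "\t" $2 }'
}
```

Usage, from /home/hadoop/hadoop: `local_grep_count input 'dfs[a-z.]+'`. Matches with equal counts may come out in a different order than in Hadoop's output.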

II. Data operations

1. Configuring Hadoop

[hadoop@server1 hadoop]$ cd etc/hadoop/

[hadoop@server1 hadoop]$ vim core-site.xml

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://172.25.120.1:9000</value>
    </property>
</configuration>

[hadoop@server1 hadoop]$ vim slaves

172.25.120.1

[hadoop@server1 hadoop]$ vim hdfs-site.xml

<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
</configuration>
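These files can also be written non-interactively, which is handy when the same values must be repeated on several machines. This minimal sketch assumes the configuration directory is passed as an argument and uses port 9000 as in the text. The name write_pseudo_conf is hypothetical.

```sh
# write_pseudo_conf CONF_DIR NAMENODE_HOST REPLICATION
# Hypothetical helper: write core-site.xml, hdfs-site.xml and slaves for a
# single-node (pseudo-distributed) HDFS, mirroring the edits above.
write_pseudo_conf() {
    conf_dir=$1
    nn_host=$2
    replication=$3

    # fs.defaultFS tells clients and daemons where the NameNode listens.
    cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://${nn_host}:9000</value>
    </property>
</configuration>
EOF

    # With a single DataNode, a replication factor above 1 cannot be met.
    cat > "$conf_dir/hdfs-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
    <property>
        <name>dfs.replication</name>
        <value>${replication}</value>
    </property>
</configuration>
EOF

    # slaves lists the hosts on which start-dfs.sh launches DataNodes.
    echo "$nn_host" > "$conf_dir/slaves"
}
```

Usage: `write_pseudo_conf /home/hadoop/hadoop/etc/hadoop 172.25.120.1 1`. Note that it overwrites the three files.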

2. Configuring SSH

[hadoop@server1 hadoop]$ ssh-keygen

[hadoop@server1 hadoop]$ cd

[hadoop@server1 ~]$ cd .ssh/

[hadoop@server1 .ssh]$ cp id_rsa.pub authorized_keys

[hadoop@server1 .ssh]$ ssh localhost

[hadoop@server1 ~]$ logout

[hadoop@server1 .ssh]$ ssh 172.25.120.1

[hadoop@server1 ~]$ logout

[hadoop@server1 .ssh]$ ssh server1

[hadoop@server1 ~]$ logout

[hadoop@server1 .ssh]$ ssh 0.0.0.0

[hadoop@server1 ~]$ logout
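Each login above is done once so that the host key for every name the scripts use (localhost, the IP, the hostname and 0.0.0.0) is recorded and no password is requested. This sketch checks all of them in one go and assumes OpenSSH. The check_ssh helper and its SSH_CMD override are hypothetical. StrictHostKeyChecking=no accepts unknown host keys without asking, which is only reasonable on a trusted lab network.

```sh
# check_ssh HOST...
# Hypothetical helper: try a non-interactive login to every HOST and report
# which ones would still prompt (for a password or an unknown host key).
check_ssh() {
    ssh_cmd=${SSH_CMD:-ssh}   # overridable, e.g. for a dry run
    failed=0
    for host in "$@"; do
        # BatchMode=yes: fail instead of prompting for a password.
        # StrictHostKeyChecking=no: record unknown host keys automatically.
        if $ssh_cmd -o BatchMode=yes -o StrictHostKeyChecking=no "$host" true \
            >/dev/null 2>&1; then
            echo "ok   $host"
        else
            echo "FAIL $host"
            failed=1
        fi
    done
    return $failed
}
```

Usage: `check_ssh localhost 172.25.120.1 server1 0.0.0.0`.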

3. Starting DFS

[hadoop@server1 ~]$ pwd

/home/hadoop

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ bin/hdfs namenode -format

[hadoop@server1 hadoop]$ ls /tmp/

hadoop-hadoop hsperfdata_hadoop

[hadoop@server1 hadoop]$ sbin/start-dfs.sh

Starting namenodes on [server1]

server1: starting namenode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-namenode-server1.out

172.25.120.1: starting datanode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-server1.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-secondarynamenode-server1.out

[hadoop@server1 ~]$ vim .bash_profile

PATH=$PATH:$HOME/bin:~/java/bin

[hadoop@server1 ~]$ logout

[root@server1 ~]# su - hadoop

[hadoop@server1 ~]$ jps

1896 SecondaryNameNode

1713 DataNode

1620 NameNode

2031 Jps

[hadoop@server1 hadoop]$ pwd

/home/hadoop/hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -usage

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user/hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -put input/

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount input output

III. Distributed file storage

1. NameNode (172.25.120.1)

[root@server1 ~]

[root@server1 ~]

Starting rpcbind: [ OK ]

[root@server1 ~]

/home/hadoop *(rw,anonuid=800,anongid=800)

[root@server1 ~]

Starting NFS services: [ OK ]

Starting NFS mountd: [ OK ]

Starting NFS daemon: [ OK ]

Starting RPC idmapd: [ OK ]

[root@server1 ~]

/home/hadoop (rw,wdelay,root_squash,no_subtree_check,anonuid=800,anongid=800)

[root@server1 ~]

exporting *:/home/hadoop

2. DataNodes (172.25.120.2 and 172.25.120.3, same steps on both)

[root@server2 ~]

[root@server2 ~]

Starting rpcbind: [ OK ]

[root@server2 ~]

[root@server2 ~]

Export list for 172.25.120.1:

/home/hadoop *

[root@server2 ~]

[root@server2 ~]

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 936680 17225672 6% /

tmpfs 510200 0 510200 0% /dev/shm

/dev/vda1 495844 33480 436764 8% /boot

172.25.120.1:/home/hadoop/ 19134336 1968384 16193920 11% /home/hadoop
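Several root commands in this NFS step are missing from the transcript above; only their output remains. The following is a hedged reconstruction, not the author's exact commands. It assumes CentOS/RHEL 6 style `service` scripts, yum, and a hadoop user with uid 800 on every client. With DRY_RUN=1 it only prints the commands. The helpers run, nfs_server_setup and nfs_client_setup are hypothetical.

```sh
# run CMD...: execute CMD, or just print it when DRY_RUN=1 (hypothetical helper).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# On the NameNode host (172.25.120.1), as root: share /home/hadoop.
nfs_server_setup() {
    run yum install -y nfs-utils
    run service rpcbind start
    # Same export line as above; anonuid/anongid map to the hadoop user (uid 800).
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "append to /etc/exports: /home/hadoop *(rw,anonuid=800,anongid=800)"
    else
        echo '/home/hadoop *(rw,anonuid=800,anongid=800)' >> /etc/exports
    fi
    run service nfs start
    run exportfs -rv          # re-export everything and list what is exported
}

# On each DataNode, as root: same hadoop user, then mount the shared home.
nfs_client_setup() {
    server=$1
    run yum install -y nfs-utils
    run service rpcbind start
    run useradd -u 800 hadoop
    run showmount -e "$server"   # should list /home/hadoop
    run mount "$server:/home/hadoop" /home/hadoop
}
```

Usage: `DRY_RUN=1 nfs_client_setup 172.25.120.1` prints the five client commands without running them.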

3. Configuration

[root@server1 ~]# su - hadoop

[hadoop@server1 ~]$ cd hadoop/etc/

[hadoop@server1 etc]$ vim hadoop/slaves

172.25.120.2

172.25.120.3

[hadoop@server1 etc]$ vim hadoop/hdfs-site.xml

<property>
    <name>dfs.replication</name>
    <value>2</value>
</property>

[hadoop@server1 etc]$ cd /tmp/

[hadoop@server1 tmp]$ rm -fr *

[hadoop@server1 tmp]$ ssh server2

[hadoop@server2 ~]$ logout

[hadoop@server1 tmp]$ ssh server3

[hadoop@server3 ~]$ logout

[hadoop@server1 tmp]$ ssh 172.25.120.2

[hadoop@server2 ~]$ logout

[hadoop@server1 tmp]$ ssh 172.25.120.3

[hadoop@server3 ~]$ logout

[hadoop@server1 hadoop]$ bin/hdfs namenode -format

[hadoop@server1 hadoop]$ ls /tmp/

hadoop-hadoop hsperfdata_hadoop

- Start DFS: the NameNode and the DataNodes now run on separate hosts

[hadoop@server1 hadoop]$ sbin/start-dfs.sh

Starting namenodes on [server1]

server1: starting namenode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-namenode-server1.out

172.25.120.2: starting datanode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-server2.out

172.25.120.3: starting datanode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-server3.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-secondarynamenode-server1.out

[hadoop@server1 hadoop]$ jps

4303 SecondaryNameNode

4115 NameNode

4412 Jps

[root@server2 ~]# su - hadoop

[hadoop@server2 ~]$ jps

1261 Jps

1167 DataNode

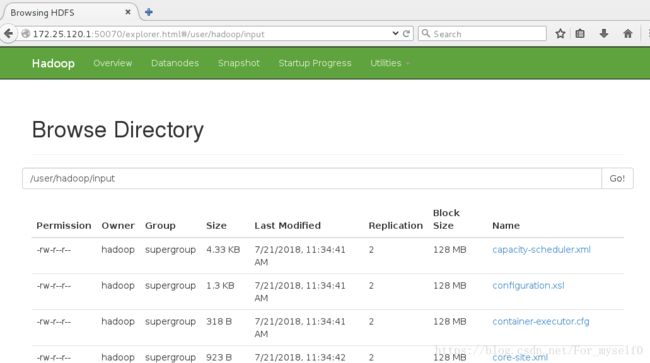

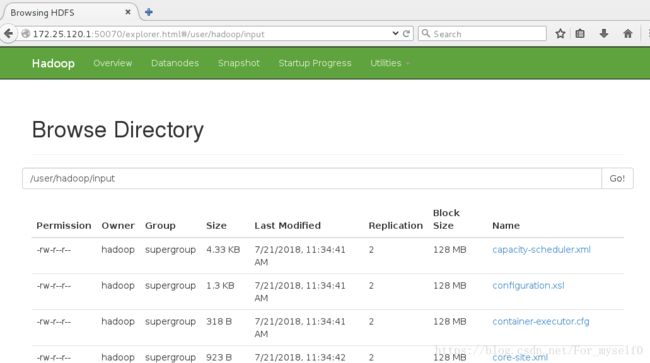
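The jps checks above can be scripted. This small sketch assumes jps output of the form "PID Name" on stdin. The name expect_daemons is hypothetical.

```sh
# expect_daemons NAME...
# Hypothetical helper: read `jps` output ("PID Name" per line) on stdin and
# report whether each expected daemon is running; returns 1 if any is missing.
expect_daemons() {
    running=$(awk '{ print $2 }')   # keep only the class names
    missing=0
    for name in "$@"; do
        # -x: whole-line match, so NameNode does not match SecondaryNameNode
        if printf '%s\n' "$running" | grep -qx "$name"; then
            echo "running $name"
        else
            echo "MISSING $name"
            missing=1
        fi
    done
    return $missing
}
```

Usage: `jps | expect_daemons NameNode SecondaryNameNode` on server1, and `ssh server2 /home/hadoop/java/bin/jps | expect_daemons DataNode` for a DataNode. The full path is used because a non-interactive ssh command does not read .bash_profile.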

4. Working with files (synchronized to the DataNodes in real time)

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user/hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -put etc/hadoop/ input

- Browse http://172.25.120.1:50070/explorer.html#/user/hadoop to check the uploaded files

IV. Adding and removing nodes

1. Adding server4 (172.25.120.4) online

[root@server4 ~]

[root@server4 ~]

[root@server4 ~]

[root@server4 ~]# su - hadoop

[hadoop@server4 ~]$ vim hadoop/etc/hadoop/slaves

172.25.120.2

172.25.120.3

172.25.120.4

[hadoop@server1 ~]$ ssh server4

[hadoop@server4 ~]$ logout

[hadoop@server1 ~]$ ssh 172.25.120.4

[hadoop@server4 ~]$ logout

[hadoop@server4 ~]$ cd hadoop

[hadoop@server4 hadoop]$ sbin/hadoop-daemon.sh start datanode

starting datanode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-server4.out

[hadoop@server4 hadoop]$ jps

1250 Jps

1177 DataNode

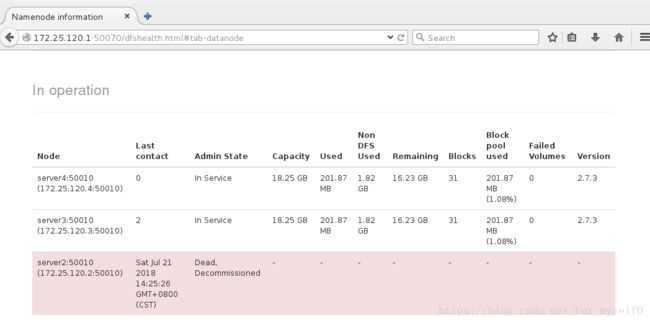
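Adding a DataNode online comes down to two steps. First, list the host in slaves so later start-dfs.sh runs include it. Second, start the daemon on the new host. This sketch shows an idempotent slaves update and assumes the configuration directory shared over NFS. The name add_slave is hypothetical.

```sh
# add_slave SLAVES_FILE HOST
# Hypothetical helper: append HOST to the slaves file unless it is already listed.
add_slave() {
    file=$1
    host=$2
    touch "$file"
    # -x: whole-line match, -F: fixed string (the dots in an IP are literal)
    if ! grep -qxF "$host" "$file"; then
        echo "$host" >> "$file"
    fi
}
```

Usage: `add_slave /home/hadoop/hadoop/etc/hadoop/slaves 172.25.120.4`, then run `sbin/hadoop-daemon.sh start datanode` on server4 as above.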

2. Removing server2 (172.25.120.2) online

[hadoop@server1 hadoop]$ pwd

/home/hadoop/hadoop/etc/hadoop

[hadoop@server1 hadoop]$ vim hdfs-site.xml

<property>
    <name>dfs.hosts.exclude</name>
    <value>/home/hadoop/hadoop/etc/hadoop/hosts-exclude</value>
</property>

[hadoop@server1 hadoop]$ vim hosts-exclude

172.25.120.2

[hadoop@server1 hadoop]$ dd if=/dev/zero of=bigfile bs=1M count=200

[hadoop@server1 hadoop]$ ../../bin/hdfs dfs -put bigfile

[hadoop@server1 hadoop]$ vim slaves

172.25.120.3

172.25.120.4

[hadoop@server1 hadoop]$ ../../bin/hdfs dfsadmin -refreshNodes

Refresh nodes successful
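The file edits behind decommissioning server2 can be collected in one place. This sketch assumes dfs.hosts.exclude already points at hosts-exclude in the configuration directory, as shown above. The name decommission_host is hypothetical. Running `hdfs dfsadmin -refreshNodes` afterwards makes the NameNode re-read the exclude file.

```sh
# decommission_host CONF_DIR HOST
# Hypothetical helper: add HOST to hosts-exclude and drop it from slaves.
decommission_host() {
    conf_dir=$1
    host=$2
    exclude="$conf_dir/hosts-exclude"
    slaves="$conf_dir/slaves"

    touch "$exclude"
    # List the host once in the exclude file read by the NameNode.
    grep -qxF "$host" "$exclude" || echo "$host" >> "$exclude"

    # Remove it from slaves so a later start-dfs.sh does not start it again.
    # grep -v exits 1 when nothing is left, which is not an error here.
    grep -vxF "$host" "$slaves" > "$slaves.tmp" || true
    mv "$slaves.tmp" "$slaves"
}
```

Usage: `decommission_host /home/hadoop/hadoop/etc/hadoop 172.25.120.2`, followed by `bin/hdfs dfsadmin -refreshNodes`.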

[hadoop@server1 hadoop]$ ../../bin/hdfs dfsadmin -report

Name: 172.25.120.2:50010 (server2)

Hostname: server2

Decommission Status : Decommission in progress

Configured Capacity: 19593555968 (18.25 GB)

DFS Used: 135581696 (129.30 MB)

Non DFS Used: 1954566144 (1.82 GB)

DFS Remaining: 17503408128 (16.30 GB)

DFS Used%: 0.69%

DFS Remaining%: 89.33%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Sat Jul 21 14:23:43 CST 2018

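Once the blocks on server2 have been copied to the other DataNodes, running the report again shows:

Name: 172.25.120.2:50010 (server2)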

Hostname: server2

Decommission Status : Decommissioned
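Decommissioning runs in the background. The status moves from "Decommission in progress" to "Decommissioned" once the node's blocks have been copied elsewhere, and only then should the DataNode be stopped. This sketch extracts the status of one node from the report and assumes the Name:/Decommission Status layout shown above. The name decommission_status is hypothetical.

```sh
# decommission_status HOST
# Hypothetical helper: read `hdfs dfsadmin -report` on stdin and print the
# "Decommission Status" of the DataNode whose Name line starts with HOST:.
decommission_status() {
    awk -v host="$1" '
        # A "Name:" line starts a new DataNode section; remember if it is ours.
        /^Name: / { current = (index($2, host ":") == 1) }
        current && /^Decommission Status/ {
            sub(/^Decommission Status *: */, "")
            print
            exit
        }'
}
```

Usage, from /home/hadoop/hadoop: `until [ "$(bin/hdfs dfsadmin -report | decommission_status 172.25.120.2)" = Decommissioned ]; do sleep 10; done`, then stop the DataNode on server2.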

[hadoop@server2 hadoop]$ pwd

/home/hadoop/hadoop

[hadoop@server2 hadoop]$ sbin/hadoop-daemon.sh stop datanode

stopping datanode

[hadoop@server2 hadoop]$ jps

1497 Jps

- Check the result in the Hadoop web UI

3. YARN mode

[hadoop@server1 hadoop]$ pwd

/home/hadoop/hadoop/etc/hadoop

[hadoop@server1 hadoop]$ cp mapred-site.xml.template mapred-site.xml

[hadoop@server1 hadoop]$ vim mapred-site.xml

<property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
</property>

[hadoop@server1 hadoop]$ vim yarn-site.xml

<property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
</property>

[hadoop@server1 hadoop]$ ../../sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /home/hadoop/hadoop-2.7.3/logs/yarn-hadoop-resourcemanager-server1.out

172.25.120.3: starting nodemanager, logging to /home/hadoop/hadoop-2.7.3/logs/yarn-hadoop-nodemanager-server3.out

172.25.120.4: starting nodemanager, logging to /home/hadoop/hadoop-2.7.3/logs/yarn-hadoop-nodemanager-server4.out

[hadoop@server1 hadoop]$ jps

5434 ResourceManager

4303 SecondaryNameNode

4115 NameNode

5691 Jps

[hadoop@server3 ~]$ jps

1596 Jps

1165 DataNode

1498 NodeManager

V. Setting up a ZooKeeper cluster

1. Host server5

[root@server5 ~]

[root@server5 ~]

Starting rpcbind: [ OK ]

[root@server5 ~]

[root@server5 ~]

[root@server5 ~]# su - hadoop

[hadoop@server5 ~]$ ls

hadoop java zookeeper-3.4.9.tar.gz

hadoop-2.7.3 jdk1.7.0_79

hadoop-2.7.3.tar.gz jdk-7u79-linux-x64.tar.gz

2. Host server1

[hadoop@server1 hadoop]$ ../../sbin/stop-all.sh

This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh

Stopping namenodes on [server1]

server1: stopping namenode

172.25.120.3: stopping datanode

172.25.120.4: stopping datanode

Stopping secondary namenodes [0.0.0.0]

0.0.0.0: stopping secondarynamenode

stopping yarn daemons

stopping resourcemanager

172.25.120.4: stopping nodemanager

172.25.120.3: stopping nodemanager

172.25.120.4: nodemanager did not stop gracefully after 5 seconds: killing with kill -9

172.25.120.3: nodemanager did not stop gracefully after 5 seconds: killing with kill -9

no proxyserver to stop

[hadoop@server1 ~]$ ssh server5

[hadoop@server5 ~]$ logout

[hadoop@server1 ~]$ ssh 172.25.120.5

[hadoop@server5 ~]$ logout

[hadoop@server1 ~]$ tar zxf zookeeper-3.4.9.tar.gz

[hadoop@server1 ~]$ cd zookeeper-3.4.9

[hadoop@server1 zookeeper-3.4.9]$ cd conf/

[hadoop@server1 conf]$ cp zoo_sample.cfg zoo.cfg

[hadoop@server1 conf]$ vim zoo.cfg

server.1=172.25.120.2:2888:3888

server.2=172.25.120.3:2888:3888

server.3=172.25.120.4:2888:3888
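zoo.cfg keeps the zoo_sample.cfg defaults and adds one server.N line per ensemble member. N must match the number stored in that member's myid file. Port 2888 is where followers connect to the leader, and 3888 is used for leader election. This sketch writes such a file. It assumes the ZooKeeper 3.4.x zoo_sample.cfg defaults (tickTime=2000, initLimit=10, syncLimit=5, dataDir=/tmp/zookeeper, clientPort=2181); that dataDir is where the myid files of the next step go. The name write_zoo_cfg is hypothetical.

```sh
# write_zoo_cfg CFG_FILE HOST...
# Hypothetical helper: write a zoo.cfg with one server.N entry per HOST,
# numbered from 1 in the order given.
write_zoo_cfg() {
    cfg=$1
    shift
    {
        # Values copied from the ZooKeeper 3.4 zoo_sample.cfg defaults.
        echo "tickTime=2000"
        echo "initLimit=10"
        echo "syncLimit=5"
        echo "dataDir=/tmp/zookeeper"   # each member's myid file lives here
        echo "clientPort=2181"
        n=1
        for host in "$@"; do
            # 2888: followers connect to the leader; 3888: leader election
            echo "server.$n=$host:2888:3888"
            n=$((n + 1))
        done
    } > "$cfg"
}
```

Usage: `write_zoo_cfg ~/zookeeper-3.4.9/conf/zoo.cfg 172.25.120.2 172.25.120.3 172.25.120.4`.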

3. Configuring server2, server3 and server4

[hadoop@server2 hadoop]$ cd /tmp/

[hadoop@server2 tmp]$ mkdir zookeeper

[hadoop@server2 tmp]$ echo 1 > zookeeper/myid

[hadoop@server2 tmp]$ cat zookeeper/myid

1

[hadoop@server3 tmp]$ cat zookeeper/myid

2

[hadoop@server4 tmp]$ cat zookeeper/myid

3
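Each member's myid must contain the N from its own server.N line. This sketch derives the number from zoo.cfg instead of typing it by hand. It assumes the server.N=HOST:2888:3888 form used above. The names myid_for and write_myid are hypothetical.

```sh
# myid_for CFG_FILE HOST
# Hypothetical helper: print N from the "server.N=HOST:..." line of zoo.cfg.
myid_for() {
    awk -v host="$2" 'index($0, "server.") == 1 {
        split($0, kv, "=")        # kv[1] = "server.N", kv[2] = "HOST:2888:3888"
        split(kv[2], addr, ":")   # addr[1] = HOST
        if (addr[1] == host) { print substr(kv[1], 8); exit }
    }' "$1"
}

# write_myid CFG_FILE HOST DATA_DIR
# Hypothetical helper: store the id that zoo.cfg assigns to HOST in DATA_DIR/myid.
write_myid() {
    id=$(myid_for "$1" "$2")
    if [ -z "$id" ]; then
        echo "no server.N entry for $2 in $1" >&2
        return 1
    fi
    mkdir -p "$3"
    echo "$id" > "$3/myid"
}
```

Usage on server3: `write_myid ~/zookeeper-3.4.9/conf/zoo.cfg 172.25.120.3 /tmp/zookeeper`.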

[hadoop@server4 tmp]$ cd ~/zookeeper-3.4.9

[hadoop@server4 zookeeper-3.4.9]$ bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper-3.4.9/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

4. Checking the status of all nodes

[hadoop@server2 zookeeper-3.4.9]$ bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper-3.4.9/bin/../conf/zoo.cfg

Mode: follower

[hadoop@server3 zookeeper-3.4.9]$ bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper-3.4.9/bin/../conf/zoo.cfg

Mode: follower

[hadoop@server4 zookeeper-3.4.9]$ bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper-3.4.9/bin/../conf/zoo.cfg

Mode: leader
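A healthy three-node ensemble has exactly one leader and two followers. This sketch checks that from the zkServer.sh status output of all members and assumes the "Mode:" line format shown above. The name one_leader is hypothetical.

```sh
# one_leader
# Hypothetical helper: read the zkServer.sh status output of all members on
# stdin and succeed only if exactly one of them reports "Mode: leader".
one_leader() {
    status=$(cat)
    # grep -c prints 0 but exits 1 when nothing matches; that is not an error.
    leaders=$(printf '%s\n' "$status" | grep -c '^Mode: leader$' || true)
    followers=$(printf '%s\n' "$status" | grep -c '^Mode: follower$' || true)
    echo "leaders=$leaders followers=$followers"
    [ "$leaders" -eq 1 ]
}
```

Usage: save each node's status output to a file (for example status-server2.txt) and run `cat status-*.txt | one_leader`.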

5. Testing on the leader (server4)

[hadoop@server4 zookeeper-3.4.9]$ bin/zkCli.sh

Connecting to localhost:2181

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0] ls

[zk: localhost:2181(CONNECTED) 1] ls /

[zookeeper]

[zk: localhost:2181(CONNECTED) 2] ls /zookeeper

[quota]

[zk: localhost:2181(CONNECTED) 3] ls /zookeeper/quota

[]

[zk: localhost:2181(CONNECTED) 5] quit

Quitting...

VI. High availability with ZooKeeper

1. Configuring Hadoop

[hadoop@server1 ~]$ cd hadoop/etc/

[hadoop@server1 etc]$ vim hadoop/slaves

172.25.120.2

172.25.120.3

172.25.120.4

[hadoop@server1 etc]$ vim hadoop/core-site.xml

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://masters</value>
    </property>
    <property>
        <name>ha.zookeeper.quorum</name>
        <value>172.25.120.2:2181,172.25.120.3:2181,172.25.120.4:2181</value>
    </property>
</configuration>

[hadoop@server1 etc]$ vim hadoop/hdfs-site.xml

<configuration>
    <property>
        <name>dfs.replication</name>
        <value>3</value>
    </property>
    <property>
        <name>dfs.nameservices</name>
        <value>masters</value>
    </property>
    <property>
        <name>dfs.ha.namenodes.masters</name>
        <value>h1,h2</value>
    </property>
    <property>
        <name>dfs.namenode.rpc-address.masters.h1</name>
        <value>172.25.120.1:9000</value>
    </property>
    <property>
        <name>dfs.namenode.http-address.masters.h1</name>
        <value>172.25.120.1:50070</value>
    </property>
    <property>
        <name>dfs.namenode.rpc-address.masters.h2</name>
        <value>172.25.120.5:9000</value>
    </property>
    <property>
        <name>dfs.namenode.http-address.masters.h2</name>
        <value>172.25.120.5:50070</value>
    </property>
    <property>
        <name>dfs.namenode.shared.edits.dir</name>
        <value>qjournal://172.25.120.2:8485;172.25.120.3:8485;172.25.120.4:8485/masters</value>
    </property>
    <property>
        <name>dfs.journalnode.edits.dir</name>
        <value>/tmp/journaldata</value>
    </property>
    <property>
        <name>dfs.ha.automatic-failover.enabled</name>
        <value>true</value>
    </property>
    <property>
        <name>dfs.client.failover.proxy.provider.masters</name>
        <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
    </property>
    <property>
        <name>dfs.ha.fencing.methods</name>
        <value>
            sshfence
            shell(/bin/true)
        </value>
    </property>
    <property>
        <name>dfs.ha.fencing.ssh.private-key-files</name>
        <value>/home/hadoop/.ssh/id_rsa</value>
    </property>
    <property>
        <name>dfs.ha.fencing.ssh.connect-timeout</name>
        <value>30000</value>
    </property>
</configuration>

[hadoop@server1 etc]$ cd ~/hadoop

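- The JournalNodes (started in step 2 below) must already be running at this point. With a qjournal:// shared edits directory, formatting the NameNode also formats the journal on them, so the command fails if they cannot be reached.

[hadoop@server1 hadoop]$ bin/hdfs namenode -format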

[hadoop@server1 hadoop]$ scp -r /tmp/hadoop-hadoop 172.25.120.5:/tmp/

[root@server5 ~]

hadoop-hadoop

2. Starting the JournalNode on the three DataNode hosts

[hadoop@server3 zookeeper-3.4.9]$ cd ~/hadoop

[hadoop@server3 hadoop]$ sbin/hadoop-daemon.sh start journalnode

starting journalnode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-journalnode-server3.out

Check the processes on the three DataNode hosts (QuorumPeerMain is the ZooKeeper server):

[hadoop@server3 hadoop]$ jps

1881 DataNode

1698 QuorumPeerMain

1983 Jps

1790 JournalNode

3. Formatting the HA state in ZooKeeper from the NameNode host

[hadoop@server1 hadoop]$ bin/hdfs zkfc -formatZK

[hadoop@server1 hadoop]$ sbin/start-dfs.sh

Starting namenodes on [server1 server5]

server5: starting namenode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-namenode-server5.out

server1: starting namenode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-namenode-server1.out

172.25.120.4: starting datanode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-server4.out

172.25.120.2: starting datanode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-server2.out

172.25.120.3: starting datanode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-server3.out

Starting journal nodes [172.25.120.2 172.25.120.3 172.25.120.4]

172.25.120.3: journalnode running as process 1790. Stop it first.

172.25.120.4: journalnode running as process 1706. Stop it first.

172.25.120.2: journalnode running as process 1626. Stop it first.

Starting ZK Failover Controllers on NN hosts [server1 server5]

server1: starting zkfc, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-zkfc-server1.out

server5: starting zkfc, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-zkfc-server5.out

[hadoop@server1 hadoop]$ jps

6694 Jps

6646 DFSZKFailoverController

6352 NameNode

[hadoop@server5 ~]$ jps

1396 DFSZKFailoverController

1298 NameNode

1484 Jps
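For a first start of this HA setup, the Apache HDFS HA (QJM) documentation starts the JournalNodes before formatting the NameNode, because formatting also initializes the shared edits on them. The sketch below runs this section's sequence in that order as a dry run. The hosts and paths are the ones used above. The helpers run and ha_first_start are hypothetical.

```sh
# run CMD...: execute CMD, or only print it when DRY_RUN=1 (hypothetical helper).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# ha_first_start: first start of the HDFS HA cluster, executed on server1.
ha_first_start() {
    hh=/home/hadoop/hadoop
    # 1. JournalNodes on the three DataNode hosts (ZooKeeper already runs there).
    for jn in 172.25.120.2 172.25.120.3 172.25.120.4; do
        run ssh "$jn" "$hh/sbin/hadoop-daemon.sh" start journalnode
    done
    # 2. Format the first NameNode; this also formats the shared edits.
    run "$hh/bin/hdfs" namenode -format
    # 3. Give the standby (server5) the same metadata, as done with scp above.
    run scp -r /tmp/hadoop-hadoop 172.25.120.5:/tmp/
    # 4. Create the HA znode in ZooKeeper used by the failover controllers.
    run "$hh/bin/hdfs" zkfc -formatZK
    # 5. Start NameNodes, DataNodes, JournalNodes (already up) and ZKFCs.
    run "$hh/sbin/start-dfs.sh"
}
```

Usage: `DRY_RUN=1 ha_first_start` lists the commands in order. Without DRY_RUN it executes them.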

4. Testing high availability

- Browse http://172.25.120.1:50070/dfshealth.html#tab-overview to see which NameNode is active (master)

- At this point server5 is active (master) and server1 is standby

[hadoop@server5 ~]$ jps

1396 DFSZKFailoverController

1298 NameNode

1484 Jps

[hadoop@server5 ~]$ kill -9 1298

[hadoop@server5 ~]$ jps

1396 DFSZKFailoverController

1515 Jps

- server1 takes over as active (master)

- When the NameNode on server5 is started again, it comes back as standby

[hadoop@server5 hadoop]$ sbin/hadoop-daemon.sh start namenode

starting namenode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-namenode-server5.out
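You can also check which NameNode is active from the command line with `hdfs haadmin -getServiceState`. The ResourceManagers of the next section have `yarn rmadmin -getServiceState` for the same purpose. This sketch returns the first active id and assumes the ids h1/h2 (or rm1/rm2) from the configuration. The name active_id is hypothetical.

```sh
# active_id ADMIN_CMD ID...
# Hypothetical helper: print the first ID whose service state is "active".
# ADMIN_CMD is e.g. "bin/hdfs haadmin" or "bin/yarn rmadmin" (split on purpose).
active_id() {
    admin=$1
    shift
    for id in "$@"; do
        # A stopped daemon makes the query fail; treat that as "not active".
        state=$($admin -getServiceState "$id" 2>/dev/null || true)
        if [ "$state" = active ]; then
            echo "$id"
            return 0
        fi
    done
    return 1
}
```

Usage, from /home/hadoop/hadoop: `active_id "bin/hdfs haadmin" h1 h2` should print h1 after the failover above. Likewise, `active_id "bin/yarn rmadmin" rm1 rm2` reports the active ResourceManager.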

5. Checking from a DataNode host

[hadoop@server2 hadoop]$ cd ~/zookeeper-3.4.9

[hadoop@server2 zookeeper-3.4.9]$ bin/zkCli.sh

Connecting to localhost:2181

[zk: localhost:2181(CONNECTED) 3] ls /hadoop-ha/masters

[ActiveBreadCrumb, ActiveStandbyElectorLock]

[zk: localhost:2181(CONNECTED) 4] get /hadoop-ha/masters/Active

ActiveBreadCrumb ActiveStandbyElectorLock

[zk: localhost:2181(CONNECTED) 4] get /hadoop-ha/masters/ActiveBreadCrumb

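masters h1 server1   (binary znode data with the non-printable bytes dropped; it records the NameNode that last became active: h1 on server1)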

cZxid = 0x10000000a

ctime = Sat Jul 21 16:32:30 CST 2018

mZxid = 0x10000000e

mtime = Sat Jul 21 16:34:02 CST 2018

pZxid = 0x10000000a

cversion = 0

dataVersion = 1

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 28

numChildren = 0

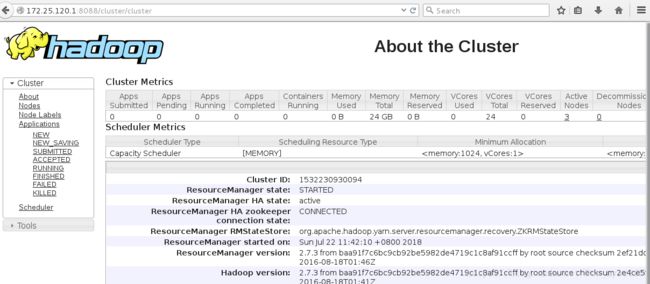

VII. YARN high availability

1. Host server1

[hadoop@server1 hadoop]$ pwd

/home/hadoop/hadoop/etc/hadoop

[hadoop@server1 hadoop]$ vim mapred-site.xml

<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>

[hadoop@server1 hadoop]$ vim yarn-site.xml

<configuration>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.resourcemanager.ha.enabled</name>
        <value>true</value>
    </property>
    <property>
        <name>yarn.resourcemanager.cluster-id</name>
        <value>RM_CLUSTER</value>
    </property>
    <property>
        <name>yarn.resourcemanager.ha.rm-ids</name>
        <value>rm1,rm2</value>
    </property>
    <property>
        <name>yarn.resourcemanager.hostname.rm1</name>
        <value>172.25.120.1</value>
    </property>
    <property>
        <name>yarn.resourcemanager.hostname.rm2</name>
        <value>172.25.120.5</value>
    </property>
    <property>
        <name>yarn.resourcemanager.recovery.enabled</name>
        <value>true</value>
    </property>
    <property>
        <name>yarn.resourcemanager.store.class</name>
        <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
    </property>
    <property>
        <name>yarn.resourcemanager.zk-address</name>
        <value>172.25.120.2:2181,172.25.120.3:2181,172.25.120.4:2181</value>
    </property>
</configuration>

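- Preparation for the HBase deployment below (run it after extracting hbase-1.2.4): list the RegionServers.

[hadoop@server1 ~]$ cd hbase-1.2.4/conf/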

[hadoop@server1 conf]$ vim regionservers

172.25.120.2

172.25.120.3

172.25.120.4

[hadoop@server1 hadoop]$ ../../sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /home/hadoop/hadoop-2.7.3/logs/yarn-hadoop-resourcemanager-server1.out

172.25.120.3: starting nodemanager, logging to /home/hadoop/hadoop-2.7.3/logs/yarn-hadoop-nodemanager-server3.out

172.25.120.4: starting nodemanager, logging to /home/hadoop/hadoop-2.7.3/logs/yarn-hadoop-nodemanager-server4.out

172.25.120.2: starting nodemanager, logging to /home/hadoop/hadoop-2.7.3/logs/yarn-hadoop-nodemanager-server2.out

[hadoop@server1 hadoop]$ jps

1927 Jps

1837 ResourceManager

1325 NameNode

1602 DFSZKFailoverController

2. Host server5

[hadoop@server5 ~]$ cd hadoop

[hadoop@server5 hadoop]$ sbin/yarn-daemon.sh start resourcemanager

starting resourcemanager, logging to /home/hadoop/hadoop-2.7.3/logs/yarn-hadoop-resourcemanager-server5.out

[hadoop@server5 logs]$ jps

3250 Jps

1694 ResourceManager

1209 NameNode

3184 DFSZKFailoverController

[hadoop@server3 sbin]$ jps

1549 NodeManager

1186 QuorumPeerMain

1293 JournalNode

1869 Jps

1381 DataNode

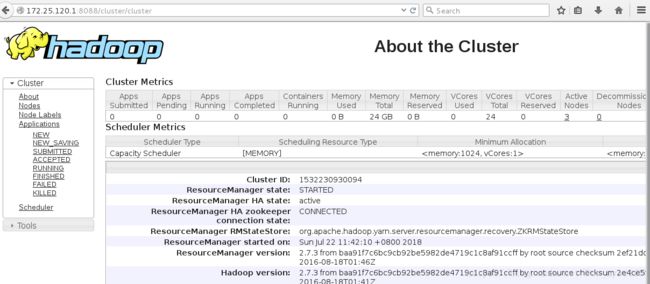

3. Browse http://172.25.120.1:8088/cluster/cluster to check the status

VIII. Distributed HBase deployment

1. Configuring HBase

[hadoop@server1 ~]$ tar zxf hbase-1.2.4-bin.tar.gz

[hadoop@server1 ~]$ cd hbase-1.2.4

[hadoop@server1 hbase-1.2.4]$ ls

bin conf hbase-webapps lib NOTICE.txt

CHANGES.txt docs LEGAL LICENSE.txt README.txt

[hadoop@server1 hbase-1.2.4]$ cd conf/

[hadoop@server1 conf]$ vim hbase-env.sh

[hadoop@server1 conf]$ vim regionservers

[hadoop@server1 conf]$ vim hbase-site.xml
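The contents of these three files are not shown above, so the following is only a plausible sketch for this cluster, not the author's files. It assumes three things. HBase stores its data under the HDFS nameservice masters. It reuses the existing ZooKeeper ensemble, so HBASE_MANAGES_ZK=false. It finds the HDFS client settings through HBASE_CLASSPATH, which points at Hadoop's configuration directory. The name write_hbase_conf is hypothetical.

```sh
# write_hbase_conf CONF_DIR
# Hypothetical helper: one possible hbase-env.sh / regionservers / hbase-site.xml
# for the cluster in this article (all values are assumptions).
write_hbase_conf() {
    conf_dir=$1

    # Append overrides to hbase-env.sh (later assignments win when it is sourced).
    cat >> "$conf_dir/hbase-env.sh" <<'EOF'
export JAVA_HOME=/home/hadoop/java
# Put hdfs-site.xml on the classpath so hdfs://masters resolves to the HA pair.
export HBASE_CLASSPATH=/home/hadoop/hadoop/etc/hadoop
# Use the existing ZooKeeper ensemble instead of one started by HBase.
export HBASE_MANAGES_ZK=false
EOF

    # Hosts on which start-hbase.sh launches RegionServers.
    printf '%s\n' 172.25.120.2 172.25.120.3 172.25.120.4 > "$conf_dir/regionservers"

    cat > "$conf_dir/hbase-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
    <property>
        <name>hbase.rootdir</name>
        <value>hdfs://masters/hbase</value>
    </property>
    <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
    </property>
    <property>
        <name>hbase.zookeeper.quorum</name>
        <value>172.25.120.2,172.25.120.3,172.25.120.4</value>
    </property>
</configuration>
EOF
}
```

Usage: `write_hbase_conf /home/hadoop/hbase-1.2.4/conf`. The /hbase directory that later appears in `hdfs dfs -ls /` is consistent with this hbase.rootdir.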

2. Starting HBase

[hadoop@server1 hbase-1.2.4]$ bin/start-hbase.sh

starting master, logging to /home/hadoop/hbase-1.2.4/bin/../logs/hbase-hadoop-master-server1.out

172.25.120.3: starting regionserver, logging to /home/hadoop/hbase-1.2.4/bin/../logs/hbase-hadoop-regionserver-server3.out

172.25.120.4: starting regionserver, logging to /home/hadoop/hbase-1.2.4/bin/../logs/hbase-hadoop-regionserver-server4.out

172.25.120.2: starting regionserver, logging to /home/hadoop/hbase-1.2.4/bin/../logs/hbase-hadoop-regionserver-server2.out

[hadoop@server1 hbase-1.2.4]$ jps

1837 ResourceManager

2567 HMaster

1325 NameNode

1602 DFSZKFailoverController

2634 Jps

[hadoop@server5 hbase-1.2.4]$ bin/hbase-daemon.sh start master

starting master, logging to /home/hadoop/hbase-1.2.4/bin/../logs/hbase-hadoop-master-server1.out

[hadoop@server5 hbase-1.2.4]$ jps

2253 HMaster

1694 ResourceManager

1209 NameNode

2317 Jps

3. Testing

[hadoop@server1 hbase]$ bin/hbase shell

hbase(main):003:0> create 'test', 'cf'

0 row(s) in 1.2200 seconds

hbase(main):003:0> list 'test'

TABLE

test

1 row(s) in 0.2150 seconds

=> ["test"]

hbase(main):004:0> put 'test', 'row1', 'cf:a', 'value1'

0 row(s) in 0.0560 seconds

hbase(main):005:0> put 'test', 'row2', 'cf:b', 'value2'

0 row(s) in 0.0370 seconds

hbase(main):006:0> put 'test', 'row3', 'cf:c', 'value3'

0 row(s) in 0.0450 seconds

hbase(main):007:0> scan 'test'

ROW                   COLUMN+CELL
 row1                 column=cf:a, timestamp=1488879391939, value=value1
 row2                 column=cf:b, timestamp=1488879402796, value=value2
 row3                 column=cf:c, timestamp=1488879410863, value=value3

3 row(s) in 0.2770 seconds

[hadoop@server5 hadoop]$ bin/hdfs dfs -ls /

Found 3 items

drwxr-xr-x - hadoop supergroup 0 2017-03-07 23:56 /hbase

drwx------ - hadoop supergroup 0 2017-03-04 17:50 /tmp

drwxr-xr-x - hadoop supergroup 0 2017-03-04 17:38 /user

- If you kill the active HMaster, the backup HMaster takes over; you can watch this in the web UI:

- http://172.25.120.1:16010