HBase

This cluster is built on:

VMware Workstation12 Pro

SecureCRT 7.3

Xftp 5

CentOS-7-x86_64-Everything-1611.iso

hadoop-2.8.0.tar.gz

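jdk-8u121-linux-x64.tar.gz

Below are the problems I ran into while using IntelliJ IDEA to call the HBase Java API and create a pre-split table. I'm writing them down for future reference.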

1.Pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>com.hbase</groupId>
    <artifactId>HbaseOperation</artifactId>
    <version>1.0-SNAPSHOT</version>
    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <configuration>
                    <source>1.6</source>
                    <target>1.6</target>
                </configuration>
            </plugin>
        </plugins>
    </build>
    <dependencies>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-common</artifactId>
            <version>1.1.2</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>2.8.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-client</artifactId>
            <version>1.1.2</version>
        </dependency>
    </dependencies>

</project>

I created a package and a few classes. Everything looked fine, but when I ran it, damn, it threw an exception:

[main] WARN org.apache.hadoop.hbase.util.DynamicClassLoader - Failed to identify the fs of dir hdfs://192.168.195.131:9000/hbase/lib, ignored
java.io.IOException: No FileSystem for scheme: hdfs
    at org.apache.hadoop.fs.FileSystem.getFileSystemClass(FileSystem.java:2798)
    at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2809)
    at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:100)
    at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2848)
    at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2830)
    at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:389)
    at org.apache.hadoop.fs.Path.getFileSystem(Path.java:356)
    at org.apache.hadoop.hbase.util.DynamicClassLoader.<init>(DynamicClassLoader.java:104)
    at org.apache.hadoop.hbase.protobuf.ProtobufUtil.<clinit>(ProtobufUtil.java:241)
    at org.apache.hadoop.hbase.ClusterId.parseFrom(ClusterId.java:64)
    at org.apache.hadoop.hbase.zookeeper.ZKClusterId.readClusterIdZNode(ZKClusterId.java:75)
    at org.apache.hadoop.hbase.client.ZooKeeperRegistry.getClusterId(ZooKeeperRegistry.java:105)
    at org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation.retrieveClusterId(ConnectionManager.java:879)
    at org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation.<init>(ConnectionManager.java:635)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
    at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:238)
    at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:218)
    at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:119)
    at neu.HBaseHelper.<init>(HBaseHelper.java:30)
    at neu.HBaseHelper.getHelper(HBaseHelper.java:35)
    at neu.HBaseOprations.main(HBaseOprations.java:22)

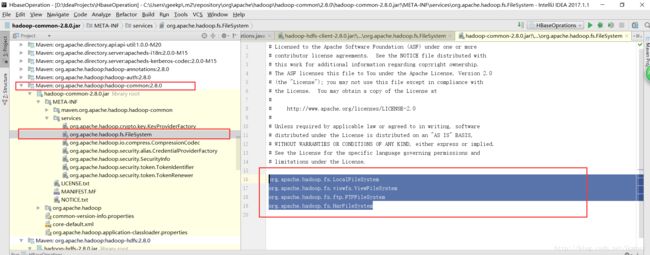

The cause: Hadoop could not find a FileSystem implementation for the hdfs scheme.

But the hadoop-common jar does ship this file (META-INF/services/org.apache.hadoop.fs.FileSystem).

Its contents are:

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

org.apache.hadoop.fs.LocalFileSystem

org.apache.hadoop.fs.viewfs.ViewFileSystem

org.apache.hadoop.fs.ftp.FTPFileSystem

org.apache.hadoop.fs.HarFileSystem

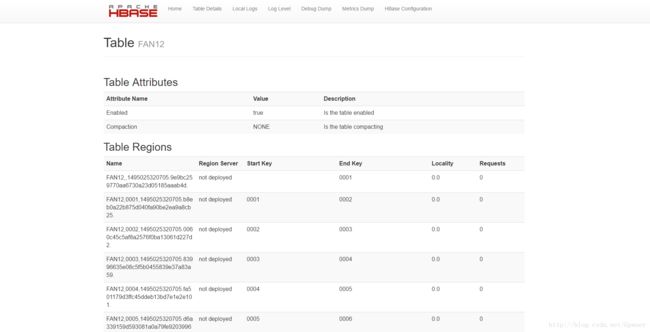

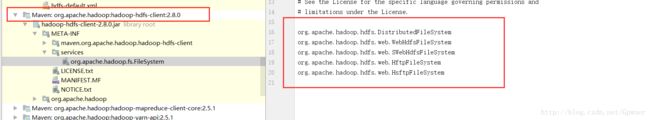

Later I found that the FileSystem implementation needed here lives in a different artifact, org.apache.hadoop:hadoop-hdfs:2.8.0. After adding it, the exception went away:

<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-hdfs</artifactId>
    <version>2.8.0</version>
</dependency>
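You may want to confirm that the hdfs:// implementation really is on the client classpath before opening an HBase connection. One option is to try loading the class by name. The sketch below is a hypothetical helper: ClasspathCheck is my own name, not a Hadoop or HBase API. It uses only the JDK and sticks to Java 6 syntax, so it would also compile under the pom's 1.6 source level. It checks two classes: org.apache.hadoop.fs.FileSystem from hadoop-common, and org.apache.hadoop.hdfs.DistributedFileSystem, which is the hdfs:// implementation.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical diagnostic helper (not part of Hadoop/HBase): reports whether
// classes are visible on the current classpath without initializing them.
class ClasspathCheck {

    // True if the class can be located by this class's loader.
    static boolean isOnClasspath(String className) {
        try {
            // initialize = false: only load the class, do not run its static initializers
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        } catch (LinkageError e) {
            // e.g. NoClassDefFoundError when a dependency of the class is missing
            return false;
        }
    }

    // Checks several class names, keeping the order in which they were given.
    static Map<String, Boolean> check(String... classNames) {
        Map<String, Boolean> result = new LinkedHashMap<String, Boolean>();
        for (String name : classNames) {
            result.put(name, isOnClasspath(name));
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Boolean> r = check(
                "org.apache.hadoop.fs.FileSystem",
                "org.apache.hadoop.hdfs.DistributedFileSystem");
        for (Map.Entry<String, Boolean> e : r.entrySet()) {
            System.out.println(e.getKey() + " -> " + e.getValue());
        }
    }
}
```

Run inside the IDEA project, the DistributedFileSystem line should only report true once hadoop-hdfs (and, through it, hadoop-hdfs-client) is in the pom.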

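Looking at the resulting dependencies, I found that adding this artifact also pulls in org.apache.hadoop:hadoop-hdfs-client:2.8.0. Its FileSystem services file contains: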

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

org.apache.hadoop.hdfs.DistributedFileSystem

org.apache.hadoop.hdfs.web.WebHdfsFileSystem

org.apache.hadoop.hdfs.web.SWebHdfsFileSystem

org.apache.hadoop.hdfs.web.HftpFileSystem

org.apache.hadoop.hdfs.web.HsftpFileSystem

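Comparing the two files shows that they register different classes. Only the one in hadoop-hdfs-client lists org.apache.hadoop.hdfs.DistributedFileSystem, which is the implementation behind the hdfs:// scheme.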
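Hadoop 2.x builds its scheme-to-class table in two steps. First, it loads org.apache.hadoop.fs.FileSystem implementations through java.util.ServiceLoader, which reads exactly these META-INF/services files. Then it asks each class for its scheme. A fs.hdfs.impl entry in the configuration is checked first; if neither source yields a class, you get "No FileSystem for scheme". The sketch below uses a hypothetical ServiceFileSketch class. It only reproduces the parsing rules that ServiceLoader documents for provider files: '#' starts a comment, and surrounding whitespace and blank lines are ignored. It then checks which of the two files registers DistributedFileSystem. It does not load any Hadoop classes.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of how a ServiceLoader provider-configuration file
// (e.g. META-INF/services/org.apache.hadoop.fs.FileSystem) is read.
class ServiceFileSketch {

    // Provider class names listed in the file text: everything after '#' is a comment,
    // surrounding whitespace is trimmed, and empty lines are skipped.
    static List<String> providers(String fileText) {
        List<String> names = new ArrayList<String>();
        for (String line : fileText.split("\n")) {
            int hash = line.indexOf('#');
            if (hash >= 0) {
                line = line.substring(0, hash);
            }
            line = line.trim();
            if (!line.isEmpty()) {
                names.add(line);
            }
        }
        return names;
    }

    public static void main(String[] args) {
        String hadoopCommon = "# Licensed to the Apache Software Foundation ...\n"
                + "org.apache.hadoop.fs.LocalFileSystem\n"
                + "org.apache.hadoop.fs.viewfs.ViewFileSystem\n"
                + "org.apache.hadoop.fs.ftp.FTPFileSystem\n"
                + "org.apache.hadoop.fs.HarFileSystem\n";
        String hdfsClient = "# Licensed to the Apache Software Foundation ...\n"
                + "org.apache.hadoop.hdfs.DistributedFileSystem\n"
                + "org.apache.hadoop.hdfs.web.WebHdfsFileSystem\n"
                + "org.apache.hadoop.hdfs.web.SWebHdfsFileSystem\n"
                + "org.apache.hadoop.hdfs.web.HftpFileSystem\n"
                + "org.apache.hadoop.hdfs.web.HsftpFileSystem\n";
        String dfs = "org.apache.hadoop.hdfs.DistributedFileSystem";
        System.out.println("hadoop-common lists it: " + providers(hadoopCommon).contains(dfs));
        System.out.println("hadoop-hdfs-client lists it: " + providers(hdfsClient).contains(dfs));
    }
}
```

The same mechanism explains a related pitfall. Suppose the project is later packaged as a single fat jar and the services files from hadoop-common and hadoop-hdfs-client are not merged. Then only one of them survives, and "No FileSystem for scheme: hdfs" can come back. The maven-shade-plugin's ServicesResourceTransformer exists for this merging.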

2. A new exception

11785 [main] WARN org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper - Unable to create ZooKeeper Connection

java.net.UnknownHostException: master

The hostname master could not be resolved. Checking the hbase-site.xml configuration file, I found the following:

<configuration>
    <property>
        <name>hbase.rootdir</name>
        <value>hdfs://192.168.195.131:9000/hbase</value>
    </property>
    <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
    </property>
    <property>
        <name>hbase.zookeeper.quorum</name>
        <value>master,slave1,slave2</value>
    </property>
    <property>
        <name>hbase.master.info.bindAddress</name>
        <value>0.0.0.0</value>
    </property>
    <property>
        <name>hbase.master.info.port</name>
        <value>16010</value>
    </property>
    <property>
        <name>hbase.master.port</name>
        <value>16000</value>
    </property>
</configuration>

Of course Windows cannot resolve these hostnames. There are two ways to fix this:

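1). Edit the Windows hosts file C:\Windows\System32\drivers\etc\hosts and add the IP address and hostname of each node:

192.168.195.131 master

192.168.195.132 slave1

192.168.195.133 slave2

2). Replace master, slave1 and slave2 in the configuration file with the corresponding IP addresses.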
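After editing the hosts file, you can check from Java that the client now resolves the quorum hosts. InetAddress.getByName throws java.net.UnknownHostException for names it cannot resolve, which is the same exception type as in the HBase log. QuorumResolveCheck below is a hypothetical helper, and the quorum string is the one from hbase-site.xml.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: resolves each entry of a comma-separated quorum from the client JVM.
class QuorumResolveCheck {

    // Maps each host to its resolved IP address, or to null if it cannot be resolved.
    static Map<String, String> resolveAll(String quorum) {
        Map<String, String> result = new LinkedHashMap<String, String>();
        for (String raw : quorum.split(",")) {
            String host = raw.trim();
            if (host.isEmpty()) {
                continue;
            }
            try {
                // IP literals are returned as-is; names go through the hosts file / DNS
                result.put(host, InetAddress.getByName(host).getHostAddress());
            } catch (UnknownHostException e) {
                // same exception type as "java.net.UnknownHostException: master" in the log
                result.put(host, null);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, String> r = resolveAll("master,slave1,slave2");
        for (Map.Entry<String, String> e : r.entrySet()) {
            String ip = e.getValue() == null ? "UNRESOLVED" : e.getValue();
            System.out.println(e.getKey() + " -> " + ip);
        }
    }
}
```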

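When writing these configuration files, I strongly recommend:

a. replacing hostnames with IP addresses;

b. replacing 0.0.0.0 with the actual IP address of the corresponding host.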
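Recommendation a. is mechanical enough to script. The sketch below uses a hypothetical QuorumToIp class. It parses hosts-file style lines like the three entries listed for option 1). It then rewrites a comma-separated quorum to IP addresses, leaving anything it cannot map unchanged. With the cluster's entries it yields 192.168.195.131,192.168.195.132,192.168.195.133, which is the quorum value used in the final hbase-site.xml.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper: rewrites hostname-based quorum strings to IP addresses.
class QuorumToIp {

    // Parses hosts-file lines of the form "IP name [alias ...]"; '#' starts a comment.
    static Map<String, String> parseHosts(String hostsText) {
        Map<String, String> hostToIp = new HashMap<String, String>();
        for (String line : hostsText.split("\n")) {
            int hash = line.indexOf('#');
            if (hash >= 0) {
                line = line.substring(0, hash);
            }
            String[] parts = line.trim().split("\\s+");
            if (parts.length < 2) {
                continue; // blank or malformed line
            }
            for (int i = 1; i < parts.length; i++) { // one IP may carry several names
                hostToIp.put(parts[i], parts[0]);
            }
        }
        return hostToIp;
    }

    // Replaces every known host in the quorum with its IP; unknown entries are kept.
    static String toIpQuorum(String quorum, Map<String, String> hostToIp) {
        StringBuilder sb = new StringBuilder();
        for (String raw : quorum.split(",")) {
            String host = raw.trim();
            String ip = hostToIp.get(host);
            if (sb.length() > 0) {
                sb.append(',');
            }
            sb.append(ip != null ? ip : host);
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        String hosts = "192.168.195.131 master\n"
                + "192.168.195.132 slave1\n"
                + "192.168.195.133 slave2\n";
        System.out.println(toIpQuorum("master,slave1,slave2", parseHosts(hosts)));
    }
}
```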

3. Complete program code and structure:

package neu;

/**

* Created by root on 2017/5/15.

*/

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.*;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.io.compress.Compression.Algorithm;

import org.apache.hadoop.hbase.util.Bytes;

import java.io.Closeable;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

import java.util.Random;

/**

* Used by the book examples to generate tables and fill them with test data.

*/

public class HBaseHelper implements Closeable {

private Configuration configuration = null;

private Connection connection = null;

private Admin admin = null;

protected HBaseHelper(Configuration configuration) throws IOException {

this.configuration = configuration;

this.connection = ConnectionFactory.createConnection(configuration);

this.admin = connection.getAdmin();

}

public static HBaseHelper getHelper(Configuration configuration) throws IOException {

return new HBaseHelper(configuration);

}

@Override

public void close() throws IOException {

admin.close();

connection.close();

}

public Connection getConnection() {

return connection;

}

public Configuration getConfiguration() {

return configuration;

}

public void createNamespace(String namespace) {

try {

NamespaceDescriptor nd = NamespaceDescriptor.create(namespace).build();

admin.createNamespace(nd);

} catch (Exception e) {

System.err.println("Error: " + e.getMessage());

}

}

public void dropNamespace(String namespace, boolean force) {

try {

if (force) {

TableName[] tableNames = admin.listTableNamesByNamespace(namespace);

for (TableName name : tableNames) {

admin.disableTable(name);

admin.deleteTable(name);

}

}

} catch (Exception e) {

// ignore

}

try {

admin.deleteNamespace(namespace);

} catch (IOException e) {

System.err.println("Error: " + e.getMessage());

}

}

public boolean existsTable(String table)

throws IOException {

return existsTable(TableName.valueOf(table));

}

public boolean existsTable(TableName table)

throws IOException {

return admin.tableExists(table);

}

public void createTable(String table, String... colfams)

throws IOException {

createTable(TableName.valueOf(table), 1, null, colfams);

}

public void createTable(TableName table, String... colfams)

throws IOException {

createTable(table, 1, null, colfams);

}

public void createTable(String table, int maxVersions, String... colfams)

throws IOException {

createTable(TableName.valueOf(table), maxVersions, null, colfams);

}

public void createTable(TableName table, int maxVersions, String... colfams)

throws IOException {

createTable(table, maxVersions, null, colfams);

}

public void createTable(String table, byte[][] splitKeys, String... colfams)

throws IOException {

createTable(TableName.valueOf(table), 1, splitKeys, colfams);

}

public void createTable(TableName table, int maxVersions, byte[][] splitKeys,

String... colfams)

throws IOException {

HTableDescriptor desc = new HTableDescriptor(table);

desc.setDurability(Durability.SKIP_WAL);

for (String cf : colfams) {

HColumnDescriptor coldef = new HColumnDescriptor(cf);

coldef.setCompressionType(Algorithm.SNAPPY);

coldef.setMaxVersions(maxVersions);

desc.addFamily(coldef);

}

if (splitKeys != null) {

admin.createTable(desc, splitKeys);

} else {

admin.createTable(desc);

}

}

public void disableTable(String table) throws IOException {

disableTable(TableName.valueOf(table));

}

public void disableTable(TableName table) throws IOException {

admin.disableTable(table);

}

public void dropTable(String table) throws IOException {

dropTable(TableName.valueOf(table));

}

public void dropTable(TableName table) throws IOException {

if (existsTable(table)) {

if (admin.isTableEnabled(table)) disableTable(table);

admin.deleteTable(table);

}

}

public void fillTable(String table, int startRow, int endRow, int numCols,

String... colfams)

throws IOException {

fillTable(TableName.valueOf(table), startRow,endRow, numCols, colfams);

}

public void fillTable(TableName table, int startRow, int endRow, int numCols,

String... colfams)

throws IOException {

fillTable(table, startRow, endRow, numCols, -1, false, colfams);

}

public void fillTable(String table, int startRow, int endRow, int numCols,

boolean setTimestamp, String... colfams)

throws IOException {

fillTable(TableName.valueOf(table), startRow, endRow, numCols, -1,

setTimestamp, colfams);

}

public void fillTable(TableName table, int startRow, int endRow, int numCols,

boolean setTimestamp, String... colfams)

throws IOException {

fillTable(table, startRow, endRow, numCols, -1, setTimestamp, colfams);

}

public void fillTable(String table, int startRow, int endRow, int numCols,

int pad, boolean setTimestamp, String... colfams)

throws IOException {

fillTable(TableName.valueOf(table), startRow, endRow, numCols, pad,

setTimestamp, false, colfams);

}

public void fillTable(TableName table, int startRow, int endRow, int numCols,

int pad, boolean setTimestamp, String... colfams)

throws IOException {

fillTable(table, startRow, endRow, numCols, pad, setTimestamp, false,

colfams);

}

public void fillTable(String table, int startRow, int endRow, int numCols,

int pad, boolean setTimestamp, boolean random,

String... colfams)

throws IOException {

fillTable(TableName.valueOf(table), startRow, endRow, numCols, pad,

setTimestamp, random, colfams);

}

public void fillTable(TableName table, int startRow, int endRow, int numCols,

int pad, boolean setTimestamp, boolean random,

String... colfams)

throws IOException {

Table tbl = connection.getTable(table);

Random rnd = new Random();

for (int row = startRow; row <= endRow; row++) {

for (int col = 1; col <= numCols; col++) {

Put put = new Put(Bytes.toBytes("row-" + padNum(row, pad)));

for (String cf : colfams) {

String colName = "col-" + padNum(col, pad);

String val = "val-" + (random ?

Integer.toString(rnd.nextInt(numCols)) :

padNum(row, pad) + "." + padNum(col, pad));

if (setTimestamp) {

put.addColumn(Bytes.toBytes(cf), Bytes.toBytes(colName), col,

Bytes.toBytes(val));

} else {

put.addColumn(Bytes.toBytes(cf), Bytes.toBytes(colName),

Bytes.toBytes(val));

}

}

tbl.put(put);

}

}

tbl.close();

}

public void fillTableRandom(String table,

int minRow, int maxRow, int padRow,

int minCol, int maxCol, int padCol,

int minVal, int maxVal, int padVal,

boolean setTimestamp, String... colfams)

throws IOException {

fillTableRandom(TableName.valueOf(table), minRow, maxRow, padRow,

minCol, maxCol, padCol, minVal, maxVal, padVal, setTimestamp, colfams);

}

public void fillTableRandom(TableName table,

int minRow, int maxRow, int padRow,

int minCol, int maxCol, int padCol,

int minVal, int maxVal, int padVal,

boolean setTimestamp, String... colfams)

throws IOException {

Table tbl = connection.getTable(table);

Random rnd = new Random();

int maxRows = minRow + rnd.nextInt(maxRow - minRow);

for (int row = 0; row < maxRows; row++) {

int maxCols = minCol + rnd.nextInt(maxCol - minCol);

for (int col = 0; col < maxCols; col++) {

int rowNum = rnd.nextInt(maxRow - minRow + 1);

Put put = new Put(Bytes.toBytes("row-" + padNum(rowNum, padRow)));

for (String cf : colfams) {

int colNum = rnd.nextInt(maxCol - minCol + 1);

String colName = "col-" + padNum(colNum, padCol);

int valNum = rnd.nextInt(maxVal - minVal + 1);

String val = "val-" + padNum(valNum, padCol);

if (setTimestamp) {

put.addColumn(Bytes.toBytes(cf), Bytes.toBytes(colName), col,

Bytes.toBytes(val));

} else {

put.addColumn(Bytes.toBytes(cf), Bytes.toBytes(colName),

Bytes.toBytes(val));

}

}

tbl.put(put);

}

}

tbl.close();

}

/**

* Splits the given table at the specified split point.

* @param tableName name of the table

* @param splitPoint split point

* @throws IOException

*/

public void splitTable(String tableName, byte[] splitPoint) throws IOException{

TableName table = TableName.valueOf(tableName);

admin.split(table, splitPoint);

}

/**

* Splits a region.

* @param regionName name of the region to split

* @param splitPoint split point (the split only succeeds if it lies between the region's startKey and endKey)

* @throws IOException

*/

public void splitRegion(String regionName, byte[] splitPoint) throws IOException {

admin.splitRegion(Bytes.toBytes(regionName), splitPoint);

}

/**

* Merges two regions.

* @param regionNameA name of region A

* @param regionNameB name of region B

* @throws IOException

*/

public void mergerRegions(String regionNameA, String regionNameB) throws IOException {

admin.mergeRegions(Bytes.toBytes(regionNameA), Bytes.toBytes(regionNameB), true);

}

public String padNum(int num, int pad) {

String res = Integer.toString(num);

if (pad > 0) {

while (res.length() < pad) {

res = "0" + res;

}

}

return res;

}

public void put(String table, String row, String fam, String qual,

String val) throws IOException {

put(TableName.valueOf(table), row, fam, qual, val);

}

public void put(TableName table, String row, String fam, String qual,

String val) throws IOException {

Table tbl = connection.getTable(table);

Put put = new Put(Bytes.toBytes(row));

put.addColumn(Bytes.toBytes(fam), Bytes.toBytes(qual), Bytes.toBytes(val));

tbl.put(put);

tbl.close();

}

public void put(String table, String row, String fam, String qual, long ts,

String val) throws IOException {

put(TableName.valueOf(table), row, fam, qual, ts, val);

}

public void put(TableName table, String row, String fam, String qual, long ts,

String val) throws IOException {

Table tbl = connection.getTable(table);

Put put = new Put(Bytes.toBytes(row));

put.addColumn(Bytes.toBytes(fam), Bytes.toBytes(qual), ts,

Bytes.toBytes(val));

tbl.put(put);

tbl.close();

}

public void put(String table, String[] rows, String[] fams, String[] quals,

long[] ts, String[] vals) throws IOException {

put(TableName.valueOf(table), rows, fams, quals, ts, vals);

}

public void put(TableName table, String[] rows, String[] fams, String[] quals,

long[] ts, String[] vals) throws IOException {

Table tbl = connection.getTable(table);

for (String row : rows) {

Put put = new Put(Bytes.toBytes(row));

for (String fam : fams) {

int v = 0;

for (String qual : quals) {

String val = vals[v < vals.length ? v : vals.length - 1];

long t = ts[v < ts.length ? v : ts.length - 1];

System.out.println("Adding: " + row + " " + fam + " " + qual +

" " + t + " " + val);

put.addColumn(Bytes.toBytes(fam), Bytes.toBytes(qual), t,

Bytes.toBytes(val));

v++;

}

}

tbl.put(put);

}

tbl.close();

}

public void dump(String table, String[] rows, String[] fams, String[] quals)

throws IOException {

dump(TableName.valueOf(table), rows, fams, quals);

}

public void dump(TableName table, String[] rows, String[] fams, String[] quals)

throws IOException {

Table tbl = connection.getTable(table);

List<Get> gets = new ArrayList<Get>();

for (String row : rows) {

Get get = new Get(Bytes.toBytes(row));

get.setMaxVersions();

if (fams != null) {

for (String fam : fams) {

for (String qual : quals) {

get.addColumn(Bytes.toBytes(fam), Bytes.toBytes(qual));

}

}

}

gets.add(get);

}

Result[] results = tbl.get(gets);

for (Result result : results) {

for (Cell cell : result.rawCells()) {

System.out.println("Cell: " + cell +

", Value: " + Bytes.toString(cell.getValueArray(),

cell.getValueOffset(), cell.getValueLength()));

}

}

tbl.close();

}

}
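The fillTable* helpers zero-pad row and column numbers with padNum. They do this because HBase orders row keys by unsigned lexicographic byte comparison, not numerically. Without padding, "row-10" sorts before "row-2". The JDK-only sketch below demonstrates the difference. It uses a hypothetical RowKeyOrder class with a String.format-based equivalent of padNum, valid for non-negative numbers.

```java
import java.nio.charset.Charset;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;
import java.util.Locale;

// Hypothetical demo class: shows why HBaseHelper pads numbers inside row keys.
class RowKeyOrder {
    static final Charset UTF8 = Charset.forName("UTF-8");

    // Unsigned lexicographic byte comparison, the order HBase uses for row keys
    static int compareBytes(byte[] a, byte[] b) {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int x = a[i] & 0xff;
            int y = b[i] & 0xff;
            if (x != y) {
                return x - y;
            }
        }
        return a.length - b.length; // a prefix sorts before the longer key
    }

    // Zero-pads a non-negative number to `pad` digits, like HBaseHelper.padNum
    static String padNum(int num, int pad) {
        return pad > 0 ? String.format(Locale.ROOT, "%0" + pad + "d", num) : Integer.toString(num);
    }

    // Returns a copy of the keys sorted the way HBase would store them
    static List<String> hbaseOrder(List<String> keys) {
        List<String> sorted = new ArrayList<String>(keys);
        Collections.sort(sorted, new Comparator<String>() {
            public int compare(String a, String b) {
                return compareBytes(a.getBytes(UTF8), b.getBytes(UTF8));
            }
        });
        return sorted;
    }

    public static void main(String[] args) {
        List<String> plain = new ArrayList<String>();
        List<String> padded = new ArrayList<String>();
        for (int i = 1; i <= 10; i++) {
            plain.add("row-" + i);
            padded.add("row-" + padNum(i, 2));
        }
        System.out.println(hbaseOrder(plain));  // [row-1, row-10, row-2, ..., row-9]
        System.out.println(hbaseOrder(padded)); // [row-01, row-02, ..., row-10]
    }
}
```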

package neu;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.*;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.util.Bytes;

import org.apache.log4j.BasicConfigurator;

import java.io.IOException;

import java.util.Collection;

import java.util.Map;

import java.util.NavigableMap;

import java.util.Set;

public class HBaseOprations {

public static void main(String[] args) throws IOException {

BasicConfigurator.configure();

Configuration conf = HBaseConfiguration.create();

HBaseHelper helper = HBaseHelper.getHelper(conf);

//helper.splitRegion("24261034a736c06db96172b6f648f0bb", Bytes.toBytes("0120151025"));

//helper.mergerRegions("92e57c211228ae4847dac3a02a51e684", "c059a4fee33246a00c95136319d9215f");

createTable(helper);

getRegionSize(conf);

}

public static void createTable(HBaseHelper helper) throws IOException{

helper.dropTable("FAN12");// drop the table if it exists

RegionSplit rSplit = new RegionSplit();

byte[][] splitKeys = rSplit.split();

TableName tablename = TableName.valueOf("FAN12");// the table to create

helper.createTable(tablename, 1, splitKeys, "INFO");

// helper.createTable(tablename, 1, "INFO");

}

public static void getRegionsInfo(Configuration conf) throws IOException{

Connection connection = ConnectionFactory.createConnection(conf);

TableName tablename = TableName.valueOf(Bytes.toBytes("faninfo8"));

NavigableMap<HRegionInfo, ServerName> regionMap

= MetaScanner.allTableRegions(connection, tablename);

Set<HRegionInfo> set = regionMap.keySet();

RegionLocator regionLoc = connection.getRegionLocator(tablename);

regionLoc.close();

connection.close();

}

public static void getRegionSize(Configuration conf) throws IOException{

Connection connection = ConnectionFactory.createConnection(conf);

Admin admin = connection.getAdmin();

ClusterStatus status = admin.getClusterStatus();

Collection<ServerName> snList = status.getServers();

int totalSize = 0;

for (ServerName sn : snList) {

System.out.println(sn.getServerName());

ServerLoad sl = status.getLoad(sn);

int storeFileSize = sl.getStorefileSizeInMB();// total store file size of this RegionServer

Map<byte[], RegionLoad> rlMap = sl.getRegionsLoad();

Set<byte[]> rlKeys = rlMap.keySet();

for (byte[] bs : rlKeys) {

RegionLoad rl = rlMap.get(bs);

String regionName = rl.getNameAsString();

if(regionName.substring(0, regionName.indexOf(",")).equals("FANPOINTINFO")) {

int regionSize = rl.getStorefileSizeMB();

totalSize += regionSize;

System.out.println(regionSize + "MB");

}

}

}

System.out.println("Total size = " + totalSize + "MB");

admin.close();

connection.close();

}

}
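getRegionSize keeps a region when the text before the first comma of its name equals the table name. In HBase 1.x a region name generally looks like <table>,<startKey>,<regionId>.<encodedName>. Tables outside the default namespace appear as namespace:table. The hypothetical RegionNameParts parser below splits such a name using the first and last comma. It does this because a start key may itself contain commas while a table name cannot. The sample name in main is made up.

```java
// Hypothetical helper (not an HBase class): splits a region name of the form
// "<table>,<startKey>,<regionId>.<encodedName>." into its parts.
class RegionNameParts {
    final String table;
    final String startKey;
    final String regionId;
    final String encodedName;

    private RegionNameParts(String table, String startKey, String regionId, String encodedName) {
        this.table = table;
        this.startKey = startKey;
        this.regionId = regionId;
        this.encodedName = encodedName;
    }

    static RegionNameParts parse(String regionName) {
        int first = regionName.indexOf(',');
        int last = regionName.lastIndexOf(',');
        if (first < 0 || first == last) {
            throw new IllegalArgumentException("Not a region name: " + regionName);
        }
        // Table names cannot contain ',', start keys can: split on the first and last comma
        String table = regionName.substring(0, first);
        String startKey = regionName.substring(first + 1, last);
        // Tail is "<regionId>.<encodedName>." (or just "<regionId>" in the old format)
        String tail = regionName.substring(last + 1);
        String regionId = tail;
        String encoded = "";
        int dot = tail.indexOf('.');
        if (dot >= 0) {
            regionId = tail.substring(0, dot);
            encoded = tail.substring(dot + 1);
            if (encoded.endsWith(".")) {
                encoded = encoded.substring(0, encoded.length() - 1);
            }
        }
        return new RegionNameParts(table, startKey, regionId, encoded);
    }

    public static void main(String[] args) {
        RegionNameParts p = parse("FAN12,0005,1494829312345.0123456789abcdef0123456789abcdef.");
        System.out.println(p.table + " | " + p.startKey + " | " + p.regionId + " | " + p.encodedName);
    }
}
```

Inside getRegionSize the filter could then read RegionNameParts.parse(rl.getNameAsString()).table.equals(...). Note that the code as written filters on FANPOINTINFO, while createTable builds FAN12, so the printed sizes are not those of the table created just before.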

package neu;

import org.apache.hadoop.hbase.util.Bytes;

public class RegionSplit {

private String[] pointInfos1 = {

"JLFC_FJ050_",

"JLFC_FJ100_",

"JLFC_FJ150_",

"JLFC_FJ200_",

"JLFC_FJ250_",

"ZYFC_FJ050_",

"ZYFC_FJ100_",

"ZYFC_FJ150_",

"ZYFC_FJ200_",

"ZYFC_FJ250_",

"WDFC_FJ050_",

"WDFC_FJ100_",

"WDFC_FJ150_",

"WDFC_FJ200_",

"WDFC_FJ250_",

"ZRHFC_FJ050_",

"ZRHFC_FJ100_",

"ZRHFC_FJ150_",

"ZRHFC_FJ200_",

"ZRHFC_FJ250_",

"NXFC_FJ050_",

"NXFC_FJ100_",

"NXFC_FJ150_",

"NXFC_FJ200_",

"NXFC_FJ250_"

};

private String[] pointInfos = {

"0001",

"0002",

"0003",

"0004",

"0005",

"0006",

"0007",

"0008",

"0009",

"0010",

"0011",

"0012",

"0013",

"0014",

"0015",

"0016",

"0017",

"0018",

"0019",

"0020",

"0021",

"0022",

"0023",

"0024",

"0025",

"0026",

"0027",

"0028",

"0029"};

public byte[][] split() {

byte[][] result = new byte[pointInfos.length][];

for (int i = 0; i < pointInfos.length; i++) {

result[i] = Bytes.toBytes(pointInfos[i]);

// System.out.print("'" + pointInfos[i] + "'" + ",");

}

return result;

}

public byte[][] splitByPartition() {

return null;

}

public static void main(String[] args) {

RegionSplit split = new RegionSplit();

split.split();

}

}
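RegionSplit.split() hands 29 split keys ("0001" to "0029") to createTable, which yields 30 regions. The first region covers everything below "0001", and each later region starts, inclusively, at its split key. The sketch below uses a hypothetical PreSplitSketch class and only the JDK. It generates the same keys with a loop instead of a literal array. It then computes which region a row key would fall into, using a binary search over the sorted keys. As far as I know, HBase also rejects empty or duplicate split keys when creating the table.

```java
import java.nio.charset.Charset;
import java.util.Locale;

// Hypothetical sketch (not HBase API): zero-padded split keys and row-to-region lookup.
class PreSplitSketch {
    static final Charset UTF8 = Charset.forName("UTF-8");

    // n split keys 1..n, zero-padded to `width` digits; n split keys give n + 1 regions
    static byte[][] paddedSplitKeys(int n, int width) {
        byte[][] keys = new byte[n][];
        for (int i = 1; i <= n; i++) {
            keys[i - 1] = String.format(Locale.ROOT, "%0" + width + "d", i).getBytes(UTF8);
        }
        return keys;
    }

    // Unsigned lexicographic byte comparison, the ordering HBase uses for row keys
    static int compareBytes(byte[] a, byte[] b) {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int x = a[i] & 0xff;
            int y = b[i] & 0xff;
            if (x != y) {
                return x - y;
            }
        }
        return a.length - b.length;
    }

    // Region i covers [splitKeys[i - 1], splitKeys[i]); region 0 has an empty start key
    // and the last region has no end key. The index equals the number of split keys <= row.
    static int regionIndex(byte[][] sortedSplitKeys, byte[] row) {
        int lo = 0;
        int hi = sortedSplitKeys.length;
        while (lo < hi) {
            int mid = (lo + hi) >>> 1;
            if (compareBytes(sortedSplitKeys[mid], row) <= 0) {
                lo = mid + 1;
            } else {
                hi = mid;
            }
        }
        return lo;
    }

    public static void main(String[] args) {
        byte[][] keys = paddedSplitKeys(29, 4); // the same values as RegionSplit.pointInfos
        System.out.println((keys.length + 1) + " regions");
        // Hypothetical row keys, for illustration only
        String[] rows = {"0000_a", "0001", "0015_abc", "0029_z", "JLFC_FJ050_0001"};
        for (String row : rows) {
            System.out.println(row + " -> region " + regionIndex(keys, row.getBytes(UTF8)));
        }
    }
}
```

With these numeric keys, any row key that starts with a letter sorts after "0029" and lands in the last region. A prefix such as JLFC_FJ050_ from the unused pointInfos1 array is one example. The split keys therefore only spread the load if the real row keys begin with the four-digit prefix.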

core-site.xml

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://master:9000</value>
    </property>
    <property>
        <name>io.file.buffer.size</name>
        <value>131072</value>
    </property>
    <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/usr/local/hadoop/tmp</value>
        <description>A base for other temporary directories.</description>
    </property>
    <property>
        <name>hadoop.proxyuser.root.hosts</name>
        <value>*</value>
    </property>
    <property>
        <name>hadoop.proxyuser.root.groups</name>
        <value>*</value>
    </property>
</configuration>

hbase-site.xml

<configuration>
    <property>
        <name>hbase.rootdir</name>
        <value>hdfs://192.168.195.131:9000/hbase</value>
    </property>
    <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
    </property>
    <property>
        <name>hbase.zookeeper.quorum</name>
        <value>192.168.195.131,192.168.195.132,192.168.195.133</value>
    </property>
    <property>
        <name>hbase.master.info.bindAddress</name>
        <value>192.168.195.131</value>
    </property>
    <property>
        <name>hbase.master.info.port</name>
        <value>16010</value>
    </property>
    <property>
        <name>hbase.master.port</name>
        <value>16000</value>
    </property>
</configuration>

hdfs-site.xml

<configuration>
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/usr/local/hadoop/hdfs/name</value>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/usr/local/hadoop/hdfs/data</value>
    </property>
    <property>
        <name>dfs.replication</name>
        <value>3</value>
    </property>
    <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>192.168.195.131:9001</value>
    </property>
    <property>
        <name>dfs.webhdfs.enabled</name>
        <value>true</value>
    </property>
    <property>
        <name>dfs.datanode.max.xcievers</name>
        <value>4096</value>
    </property>
</configuration>

pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>com.hbase</groupId>
    <artifactId>HbaseOperation</artifactId>
    <version>1.0-SNAPSHOT</version>
    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <configuration>
                    <source>1.6</source>
                    <target>1.6</target>
                </configuration>
            </plugin>
        </plugins>
    </build>
    <dependencies>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-common</artifactId>
            <version>1.1.2</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>2.8.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-client</artifactId>
            <version>1.1.2</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>2.8.0</version>
        </dependency>
    </dependencies>
</project>
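HBaseConfiguration.create() in HBaseOprations loads hbase-default.xml and hbase-site.xml from the classpath. Whatever hbase-site.xml the IDEA project ships is therefore what the client uses; it is presumably under src/main/resources, the usual Maven location. The hypothetical SiteXmlCheck below parses such a file with the JDK's DOM parser. It lists quorum entries that are not IPv4 literals, that is, names the Windows client would have to resolve itself. The class name and the check are my own, not part of Hadoop or HBase.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.Charset;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical pre-flight check: reads <property> entries of a *-site.xml file.
class SiteXmlCheck {
    private static final Pattern IPV4 = Pattern.compile("\\d{1,3}(\\.\\d{1,3}){3}");

    // Returns name -> value for every <property> element in the XML text.
    static Map<String, String> properties(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(Charset.forName("UTF-8"))));
            Map<String, String> props = new LinkedHashMap<String, String>();
            NodeList list = doc.getElementsByTagName("property");
            for (int i = 0; i < list.getLength(); i++) {
                Element property = (Element) list.item(i);
                String name = childText(property, "name");
                if (name != null) {
                    props.put(name, childText(property, "value"));
                }
            }
            return props;
        } catch (Exception e) {
            // ParserConfigurationException, SAXException or IOException
            throw new IllegalArgumentException("Cannot parse configuration XML", e);
        }
    }

    private static String childText(Element parent, String tag) {
        NodeList nodes = parent.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }

    // Entries of hbase.zookeeper.quorum that are host names rather than IPv4 literals.
    static List<String> nonIpQuorumHosts(Map<String, String> props) {
        List<String> result = new ArrayList<String>();
        String quorum = props.get("hbase.zookeeper.quorum");
        if (quorum == null) {
            return result;
        }
        for (String raw : quorum.split(",")) {
            String host = raw.trim();
            int colon = host.indexOf(':');
            if (colon >= 0) {
                host = host.substring(0, colon); // drop an optional ":port"
            }
            if (!host.isEmpty() && !IPV4.matcher(host).matches()) {
                result.add(host);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        String xml = "<configuration>"
                + "<property><name>hbase.zookeeper.quorum</name><value>master,slave1,slave2</value></property>"
                + "<property><name>hbase.master.info.bindAddress</name><value>0.0.0.0</value></property>"
                + "</configuration>";
        Map<String, String> props = properties(xml);
        System.out.println("Quorum hosts needing name resolution: " + nonIpQuorumHosts(props));
        System.out.println("hbase.master.info.bindAddress = " + props.get("hbase.master.info.bindAddress"));
    }
}
```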