Hadoop 2.7 Cluster Installation Guide


The cluster runs on CentOS and consists of the following three hosts:

balance01 hadoopmaster

balance02 node1

balance03 node2

Configure the Linux installation account

Create a user with the same privileges as root in three steps.

1) As root, create a group named "app":

[root@daddylinux~]#groupadd app

2) Create the user app, add it to the app group, and set its password:

[root@daddylinux~]#useradd -g app app

[root@daddylinux~]#passwd app

3) Grant app root privileges by editing the sudoers file with visudo:

[root@daddylinux~]# visudo

# Append the following line at the end of the file:

app ALL=(ALL) ALL

Save and exit.
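As a non-interactive alternative to editing with visudo, the same rule can be written to a drop-in file and syntax-checked before it is installed. This is a sketch that assumes /etc/sudoers contains an `#includedir /etc/sudoers.d` line (check before relying on it). The function name `write_sudoers_rule` and the `VISUDO` override are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write "USER ALL=(ALL) ALL" into FILE after validating it.
# VISUDO may be set to another checker; it defaults to visudo.
write_sudoers_rule() {
  local file="$1" user="$2" checker="${VISUDO:-visudo}" tmp
  tmp="$(mktemp)"
  printf '%s ALL=(ALL) ALL\n' "$user" > "$tmp"
  # Check the syntax when the checker exists, so a broken rule is never installed.
  if command -v "$checker" >/dev/null 2>&1 && ! "$checker" -cf "$tmp" >/dev/null; then
    rm -f "$tmp"
    return 1
  fi
  chmod 0440 "$tmp"   # sudoers files are conventionally read-only, mode 0440
  mv "$tmp" "$file"
}
```

Run it as root, for example `write_sudoers_rule /etc/sudoers.d/app app`, then list the user's privileges with `sudo -l -U app`.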

Install the required dependencies

Run the following command on every machine in the cluster:

sudo yum -y install lzo-devel zlib-devel gcc autoconf automake libtool cmake openssl-devel
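Before starting the long native build, it can help to confirm that the build tools from this list are actually on PATH. This minimal sketch only checks executables; `missing_tools` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print each command from the argument list that is not on PATH.
# Returns 1 if at least one command is missing.
missing_tools() {
  local t rc=0
  for t in "$@"; do
    if ! command -v "$t" >/dev/null 2>&1; then
      echo "$t"
      rc=1
    fi
  done
  return $rc
}
```

For example, `missing_tools gcc autoconf automake libtool cmake` prints nothing when all tools are present. The -devel packages are libraries rather than commands. Check them with `rpm -q lzo-devel zlib-devel openssl-devel`, which reports any package that is not installed.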

Install protobuf (protoc 2.5.0)

Download protobuf 2.5.0. Hadoop 2.7 must be built with exactly this version of the protoc compiler, not the latest release:

$ curl -L -O https://github.com/protocolbuffers/protobuf/releases/download/v2.5.0/protobuf-2.5.0.tar.gz

$ tar xvf protobuf-2.5.0.tar.gz

$ cd protobuf-2.5.0

## Build and install

$ ./configure && make && sudo make install
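The Hadoop build stops if protoc is not the expected version, so it is worth checking right after installation. This sketch assumes that `protoc --version` prints `libprotoc 2.5.0` for this release. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# True if a "protoc --version" string is exactly the version Hadoop 2.7 expects.
is_protoc_250() {
  [ "$1" = "libprotoc 2.5.0" ]
}

# Run protoc from PATH and report whether it is usable for the Hadoop build.
check_protoc() {
  local v
  if ! v="$(protoc --version 2>/dev/null)"; then
    echo "protoc not found on PATH (or failed to run)" >&2
    return 1
  fi
  if is_protoc_250 "$v"; then
    echo "ok: $v"
  else
    echo "unexpected protoc version: $v (need libprotoc 2.5.0)" >&2
    return 1
  fi
}
```

Run `check_protoc` on each build machine before invoking mvn. Any other version, including a newer protoc installed by the package manager, should be removed from PATH first.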

Install Maven

Download the latest Maven binary package (at the time of writing, apache-maven-3.3.9-bin.tar.gz) and extract it into the target directory. The commands are:

$ ls

apache-maven-3.3.9-bin.tar.gz

$pwd

/opt/h2

$ tar xvf apache-maven-3.3.9-bin.tar.gz

$ mv apache-maven-3.3.9 maven

Configure Maven

$sudo vi /etc/profile

export JAVA_HOME=/usr/lib/jvm/jdk1.8.0_77

export M2HOME=/opt/h2/maven

export PATH=$JAVA_HOME/bin:$M2HOME/bin:$PATH

# Save and exit

$source /etc/profile

$ mvn -version

Apache Maven 3.3.9 (bb52d8502b132ec0a5a3f4c09453c07478323dc5; 2015-11-11T00:41:47+08:00)

Maven home: /opt/h2/maven

Java version: 1.8.0_77, vendor: Oracle Corporation

Java home: /usr/lib/jvm/jdk1.8.0_77/jre

Default locale: en_US, platform encoding: UTF-8

OS name: "linux", version: "2.6.32-279.el6.x86_64", arch: "amd64", family: "unix"
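The important line in this output is `Java version`, because Maven must run on the JDK configured in /etc/profile. This sketch extracts that field so the check can be scripted. It assumes the `Java version: X, vendor: ...` layout shown above. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Read "mvn -version" output on stdin and print the Java version Maven runs on.
mvn_java_version() {
  awk -F': ' '/^Java version/ { split($2, a, ","); print a[1]; exit }'
}

# Fail unless Maven runs on the expected JDK (1.8.0_77 in this guide).
check_mvn_java() {
  local want="${1:-1.8.0_77}" got
  got="$(mvn -version 2>/dev/null | mvn_java_version)"
  if [ "$got" = "$want" ]; then
    echo "ok: Maven uses Java $got"
  else
    echo "Maven uses Java '${got}', expected ${want}; check JAVA_HOME in /etc/profile" >&2
    return 1
  fi
}
```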

Build Hadoop from source

Download the latest stable Hadoop source release (currently hadoop-2.7.3-src.tar.gz):

$ tar -xvf hadoop-2.7.3-src.tar.gz

$ mv hadoop-2.7.3-src hadoop

$cd hadoop

$mvn clean package -Pdist,native -DskipTests -Dtar

After a successful build, the binary distribution is written to:

hadoop-dist/target/hadoop-2.7.3.tar.gz

Extract it into /opt/h2.
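The later steps treat /opt/h2 itself as the Hadoop home (HADOOP_INSTALL=/opt/h2, and NOTICE.txt is read from /opt/h2). So this sketch assumes the tarball's top-level `hadoop-2.7.3/` directory is stripped during extraction. `install_hadoop_tarball` is a hypothetical helper, and `--strip-components` is a GNU tar option.

```shell
#!/usr/bin/env bash
# Hypothetical helper: unpack a Hadoop binary tarball so that bin/, sbin/ and etc/
# land directly under DEST (this guide uses DEST=/opt/h2).
install_hadoop_tarball() {
  local tarball="$1" dest="$2"
  mkdir -p "$dest"
  # Drop the leading "hadoop-2.7.3/" path component of every entry.
  tar -xzf "$tarball" -C "$dest" --strip-components=1
}
```

Usage: `install_hadoop_tarball hadoop-dist/target/hadoop-2.7.3.tar.gz /opt/h2`. Afterwards, `/opt/h2/bin/hadoop checknative -a` lists which native libraries (such as zlib and openssl) the build picked up.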

Change the hostname

Changing the hostname takes only two steps:

### Show the current hostname

# hostname

localhost.localdomain

### Change the hostname

#hostname balance02

#vi /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=balance02

### Save and exit

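Repeat the steps above on balance01, balance02, and balance03, giving each host its own name. Finally, add the following entries to the hosts file (/etc/hosts) on every machine: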

192.168.234.155 balance03 node2

192.168.234.33 balance02 node1

192.168.234.154 balance01 hadoopmaster
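Because the same entries go onto every machine, appending them with a script avoids typos and duplicate lines on re-runs. This is a sketch using the IPs and aliases listed above. `add_cluster_hosts` is a hypothetical helper, and the addresses must match your own network.

```shell
#!/usr/bin/env bash
# The cluster's host entries as used in this guide; adjust for your network.
CLUSTER_HOSTS=(
  "192.168.234.154 balance01 hadoopmaster"
  "192.168.234.33 balance02 node1"
  "192.168.234.155 balance03 node2"
)

# Append each entry to FILE (default /etc/hosts) unless that exact line is present.
add_cluster_hosts() {
  local file="${1:-/etc/hosts}" line
  for line in "${CLUSTER_HOSTS[@]}"; do
    grep -qxF "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
  done
}
```

Run `add_cluster_hosts` as root on each host. Afterwards, `getent hosts node1` should resolve to 192.168.234.33.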

Passwordless SSH setup

On every machine, run:

ssh-keygen -t rsa

Press Enter at every prompt, and do not set a passphrase.

cd ~/.ssh

Inside the .ssh directory, run:

cp id_rsa.pub authorized_keys

Run the commands above on every node.

Then run:

# On the master machine

ssh-copy-id -i ~/.ssh/id_rsa.pub node1

ssh-copy-id -i ~/.ssh/id_rsa.pub node2

# On each slave machine

ssh-copy-id -i ~/.ssh/id_rsa.pub hadoopmaster

If hadoopmaster can log in to each node with ssh node1 or ssh node2 without being asked for a password, the setup works.
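The key generation and the final check can be scripted. `ssh-keygen -N ""` creates a key without a passphrase non-interactively, and `ssh -o BatchMode=yes` fails instead of prompting, which makes it a reliable passwordless test. The function names are hypothetical. The chmod calls reflect sshd's usual requirement that ~/.ssh and authorized_keys not be writable by others.

```shell
#!/usr/bin/env bash
# Create an RSA key without a passphrase (if absent) and authorize it locally.
setup_ssh_keys() {
  local dir="${1:-$HOME/.ssh}"
  mkdir -p "$dir" && chmod 700 "$dir"
  if [ ! -f "$dir/id_rsa" ]; then
    ssh-keygen -t rsa -N "" -f "$dir/id_rsa" -q
  fi
  touch "$dir/authorized_keys"
  # Append the public key only once, so the function is safe to re-run.
  grep -qxF "$(cat "$dir/id_rsa.pub")" "$dir/authorized_keys" \
    || cat "$dir/id_rsa.pub" >> "$dir/authorized_keys"
  chmod 600 "$dir/authorized_keys"
}

# Try a non-interactive login to each host; never prompts for a password.
check_passwordless() {
  local h rc=0
  for h in "$@"; do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$h" true 2>/dev/null; then
      echo "ok: $h"
    else
      echo "FAIL: $h"
      rc=1
    fi
  done
  return $rc
}
```

On the master, for example: run `setup_ssh_keys`, then `ssh-copy-id -i ~/.ssh/id_rsa.pub` for node1 and node2, and finally `check_passwordless node1 node2`.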

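Configure Hadoop

Set the Hadoop environment variables

Make sure JDK 1.8.0_77 is installed; the Maven configuration above already points JAVA_HOME at it. If it is missing, run the following as root:

curl -L --cookie "oraclelicense=accept-securebackup-cookie" \
  http://download.oracle.com/otn-pub/java/jdk/8u77-b03/jdk-8u77-linux-x64.tar.gz -o jdk-8-linux-x64.tar.gz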

tar -xvf jdk-8-linux-x64.tar.gz

mkdir -p /usr/lib/jvm

mv ./jdk1.8.* /usr/lib/jvm/

update-alternatives --install "/usr/bin/java" "java" "/usr/lib/jvm/jdk1.8.0_77/bin/java" 1

update-alternatives --install "/usr/bin/javac" "javac" "/usr/lib/jvm/jdk1.8.0_77/bin/javac" 1

update-alternatives --install "/usr/bin/javaws" "javaws" "/usr/lib/jvm/jdk1.8.0_77/bin/javaws" 1

chmod a+x /usr/bin/java

chmod a+x /usr/bin/javac

chmod a+x /usr/bin/javaws

rm jdk-8-linux-x64.tar.gz

java -version
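Because JAVA_HOME is hard-coded to /usr/lib/jvm/jdk1.8.0_77 in several places, a quick check that the directory really contains a working JDK catches a wrong path early. `check_java_home` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Verify that a JDK directory contains executable java and javac binaries.
check_java_home() {
  local jh="${1:-/usr/lib/jvm/jdk1.8.0_77}" b
  for b in java javac; do
    if [ ! -x "$jh/bin/$b" ]; then
      echo "missing or not executable: $jh/bin/$b" >&2
      return 1
    fi
  done
  echo "ok: $jh"
}
```

After `check_java_home` succeeds, `update-alternatives --display java` shows which binary /usr/bin/java currently points to.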

Configure the environment variables

$vi ~/.bashrc

export JAVA_HOME=/usr/lib/jvm/jdk1.8.0_77

export HADOOP_INSTALL=/opt/h2

export PATH=$PATH:$HADOOP_INSTALL/bin

export PATH=$PATH:$HADOOP_INSTALL/sbin

export HADOOP_MAPRED_HOME=$HADOOP_INSTALL

export HADOOP_COMMON_HOME=$HADOOP_INSTALL

export HADOOP_HDFS_HOME=$HADOOP_INSTALL

export HADOOP_CONF_DIR=$HADOOP_INSTALL/etc/hadoop

export YARN_HOME=$HADOOP_INSTALL

$source ~/.bashrc
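After sourcing ~/.bashrc, a short check confirms that the key variables point at real directories and that the hadoop launcher is where PATH expects it. This is a sketch. `check_hadoop_env` is a hypothetical helper and uses bash indirect expansion.

```shell
#!/usr/bin/env bash
# Confirm the environment variables point at a usable Hadoop tree.
check_hadoop_env() {
  local v
  for v in JAVA_HOME HADOOP_INSTALL HADOOP_CONF_DIR; do
    # ${!v} expands to the value of the variable whose name is stored in v.
    if [ -z "${!v}" ] || [ ! -d "${!v}" ]; then
      echo "$v is unset or not a directory: '${!v}'" >&2
      return 1
    fi
  done
  if [ ! -x "$HADOOP_INSTALL/bin/hadoop" ]; then
    echo "no executable $HADOOP_INSTALL/bin/hadoop" >&2
    return 1
  fi
  echo "ok"
}
```

If it prints `ok`, `hadoop version` should then report the 2.7.3 build.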

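hadoop-env.sh

Edit hadoop-env.sh so that the NameNode uses the parallel garbage collector. Including the existing value keeps the NameNode options already defined in that file:

export HADOOP_NAMENODE_OPTS="-XX:+UseParallelGC ${HADOOP_NAMENODE_OPTS}"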
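A small hypothetical helper can apply the hadoop-env.sh change once, without hand-editing. It prepends the GC flag to the existing HADOOP_NAMENODE_OPTS rather than replacing it. As an addition beyond the original step, it also pins JAVA_HOME, because start-dfs.sh launches daemons over ssh, where settings from ~/.bashrc may not be picked up. The marker comment and function name are made up for this sketch.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append JAVA_HOME and the NameNode GC flag to hadoop-env.sh once.
patch_hadoop_env() {
  local file="$1" java_home="${2:-/usr/lib/jvm/jdk1.8.0_77}"
  if ! grep -q '^# added by patch_hadoop_env' "$file"; then
    # ${java_home} is expanded now; \${HADOOP_NAMENODE_OPTS} is written literally.
    cat >> "$file" <<EOF
# added by patch_hadoop_env
export JAVA_HOME=${java_home}
export HADOOP_NAMENODE_OPTS="-XX:+UseParallelGC \${HADOOP_NAMENODE_OPTS}"
EOF
  fi
}
```

Usage: `patch_hadoop_env "$HADOOP_CONF_DIR/hadoop-env.sh"`, then copy the file to the other nodes.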

core-site.xml

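Set the NameNode URI:

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hadoopmaster:9000</value>
    <description>NameNode URI</description>
  </property>
</configuration>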

hdfs-site.xml

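<configuration>
  <property>
    <name>dfs.permissions.enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/opt/h2/hdfs/namenode</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/opt/h2/hdfs/datanode</value>
  </property>
</configuration>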

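mapred-site.xml

Hadoop 2.7 ships only mapred-site.xml.template, so copy it to mapred-site.xml first:

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
    <description>Execution framework.</description>
  </property>
  <property>
    <name>mapreduce.jobtracker.address</name>
    <value>hadoopmaster:9001</value>
  </property>
</configuration>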

yarn-site.xml

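<configuration>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>hadoopmaster</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>hadoopmaster:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>hadoopmaster:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>hadoopmaster:8031</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-pmem-ratio</name>
    <value>3</value>
  </property>
</configuration>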
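A single mistyped host name in these files is easy to miss. An underscore, for instance, is not valid in a host name. A quick check can read the values back out. `conf_value` and `check_master_refs` are hypothetical helpers written for this sketch. They assume each `<name>` and `<value>` element fits on one line, and they are no substitute for a real XML parser.

```shell
#!/usr/bin/env bash
# Print the <value> of property KEY from a Hadoop *-site.xml file.
conf_value() {
  local file="$1" key="$2"
  awk -v key="$key" '
    /<name>/ { n = $0; sub(/.*<name>[ \t]*/, "", n); sub(/[ \t]*<\/name>.*/, "", n) }
    /<value>/ && n == key {
      v = $0; sub(/.*<value>[ \t]*/, "", v); sub(/[ \t]*<\/value>.*/, "", v)
      print v; exit
    }
  ' "$file"
}

# Check that the NameNode URI and ResourceManager host both use "hadoopmaster".
check_master_refs() {
  local conf="${1:-$HADOOP_CONF_DIR}"
  case "$(conf_value "$conf/core-site.xml" fs.defaultFS)" in
    hdfs://hadoopmaster:*) ;;
    *) echo "fs.defaultFS does not point at hadoopmaster" >&2; return 1 ;;
  esac
  if [ "$(conf_value "$conf/yarn-site.xml" yarn.resourcemanager.hostname)" != hadoopmaster ]; then
    echo "yarn.resourcemanager.hostname is not hadoopmaster" >&2
    return 1
  fi
  echo "ok"
}
```

To check well-formedness, run `xmllint --noout "$HADOOP_CONF_DIR"/*-site.xml`. Once Hadoop is on PATH, `hdfs getconf -confKey fs.defaultFS` shows the value Hadoop itself resolves.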

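masters and slaves

Add the cluster hosts to the masters and slaves files in $HADOOP_CONF_DIR. Hostnames are used here; IP addresses also work.

masters file: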

hadoopmaster

slaves file:

node1

node2
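The two files can be written by a script. This sketch also assumes something the steps above do not spell out: the workers need the same /opt/h2 tree and configuration as the master, copied here with rsync over the passwordless SSH set up earlier. Both function names are hypothetical, and rsync must be installed on every host.

```shell
#!/usr/bin/env bash
# Write the masters file (one host) and the slaves file (one host per line).
write_cluster_files() {
  local conf="$1" master="$2"
  shift 2
  printf '%s\n' "$master" > "$conf/masters"
  printf '%s\n' "$@" > "$conf/slaves"
}

# Assumption: mirror the local Hadoop directory to the same path on each worker.
sync_to_workers() {
  local src="${1%/}" h
  shift
  for h in "$@"; do
    rsync -a "$src/" "$h:$src/" || return 1
  done
}
```

On hadoopmaster, for example: `write_cluster_files "$HADOOP_CONF_DIR" hadoopmaster node1 node2`, then `sync_to_workers /opt/h2 node1 node2`.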

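Verify the installation

Start HDFS

On the first start only, format the NameNode on hadoopmaster with hdfs namenode -format. Then run start-dfs.sh to start HDFS. Once it is up, verify it with the following commands: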

[app@balance01 h2]$ hdfs dfs -mkdir /tmp

[app@balance01 h2]$ hdfs dfs -put NOTICE.txt /tmp/

[app@balance01 h2]$ hdfs dfs -ls /tmp

Found 1 items

-rw-r--r-- 2 app supergroup 14978 2016-10-24 11:27 /tmp/NOTICE.txt

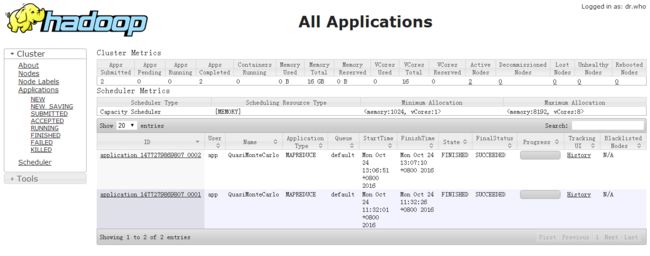
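Besides the put/ls test, the DataNode count is a direct sign that both workers joined. This sketch parses `hdfs dfsadmin -report`. It assumes that Hadoop 2.7's report contains a line like `Live datanodes (2):`; treat that format as an assumption and adjust the pattern if your output differs. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Read "hdfs dfsadmin -report" output on stdin and print the live DataNode count.
live_datanodes() {
  sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p' | head -n 1
}

# Compare the live DataNode count with the expected number of workers.
check_hdfs() {
  local want="${1:-2}" got
  got="$(hdfs dfsadmin -report 2>/dev/null | live_datanodes)"
  if [ "$got" = "$want" ]; then
    echo "ok: $got live DataNodes"
  else
    echo "expected $want live DataNodes, found '${got:-none}'" >&2
    return 1
  fi
}
```

With node1 and node2 as workers, `check_hdfs 2` should succeed. `jps` on each host is another quick check: a DataNode process on the workers, and the NameNode process on hadoopmaster.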

Start YARN

Run start-yarn.sh to start YARN. Once it is up, verify it by running the bundled Pi example from /opt/h2:

hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar pi 2 5

……

Job Finished in 28.235 seconds

Estimated value of Pi is 3.60000000000000000000

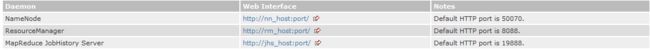
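The two arguments of the Pi example are the number of map tasks and the number of samples per map, so `pi 2 5` uses 2 × 5 = 10 points. Assuming the example uses the usual quasi-Monte Carlo ratio (its class is named QuasiMonteCarlo), the printed value can be checked by hand:

```latex
\hat{\pi} = 4 \cdot \frac{N_{\text{inside}}}{N_{\text{maps}} \cdot N_{\text{samples}}},
\qquad
3.6 = 4 \cdot \frac{N_{\text{inside}}}{2 \cdot 5}
\;\Longrightarrow\;
N_{\text{inside}} = 9
```

So 9 of the 10 points fell inside the circle. With this few samples the estimate is coarse. It shows that the job ran end to end, not that π was computed accurately; larger arguments give a closer value at the cost of a longer job.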

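Browse the web UIs

NameNode web UI: http://{hadoopmaster}:50070/

MapReduce JobHistory web UI:

http://{jms_address}:19888/jobhistory

This page requires the JobHistory server process. Start it with:

mr-jobhistory-daemon.sh start historyserver

YARN ResourceManager UI (for MapReduce and other YARN applications):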

http://{hadoopmaster}:8088/cluster
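To check all three pages without a browser, the URLs can be probed with curl. The ports in this sketch are the Hadoop 2.x defaults of dfs.namenode.http-address, yarn.resourcemanager.webapp.address, and mapreduce.jobhistory.webapp.address; adjust them if you override those properties. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Print the default Hadoop 2.x web UI URLs; the JobHistory host defaults to the master.
ui_urls() {
  local master="${1:-hadoopmaster}" jhs="${2:-${1:-hadoopmaster}}"
  echo "http://$master:50070/"          # NameNode
  echo "http://$master:8088/cluster"    # YARN ResourceManager
  echo "http://$jhs:19888/jobhistory"   # MapReduce JobHistory server
}

# Fetch each URL (following redirects) and print "<HTTP status> <url>".
probe_uis() {
  local url code rc=0
  while read -r url; do
    code="$(curl -L -s -o /dev/null -m 5 -w '%{http_code}' "$url")"
    echo "$code $url"
    [ "$code" = 200 ] || rc=1
  done < <(ui_urls "$@")
  return $rc
}
```

Usage: `probe_uis hadoopmaster` from any host that can resolve hadoopmaster. A status of `000` means the connection itself failed, for example because the daemon is not running.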
