Learning TensorFlow (9): Recurrent Neural Networks, Saving Models and Loading Models

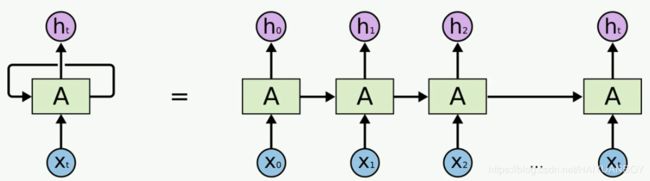

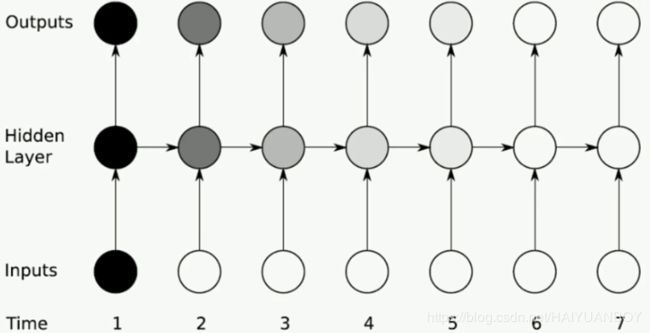

RNN

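Both RNNs and BP (backpropagation-trained feedforward) networks suffer from the vanishing gradient problem: the signal gradually weakens as it is propagated back through many layers or time steps.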
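A rough way to see why the signal fades: the gradient that reaches an early layer (or an early time step of an RNN) is a product of one local derivative per layer or step. The sketch below assumes sigmoid activations, whose derivative is at most 0.25, and treats every weight as 1, so it only illustrates the trend rather than the gradients of a real network.

```python
import math


def sigmoid_derivative(z: float) -> float:
    """Derivative of the logistic sigmoid, s(z) * (1 - s(z)); its maximum is 0.25 at z = 0."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)


def gradient_factor(n_steps: int, z: float = 0.0) -> float:
    """Fraction of the gradient left after flowing back through n_steps sigmoid units.

    Simplifying assumption: every weight is 1, so only the activation derivatives count.
    """
    factor = 1.0
    for _ in range(n_steps):
        factor *= sigmoid_derivative(z)
    return factor


for n in (1, 5, 10, 28):
    print(n, gradient_factor(n))
```

Even in this best case (z = 0), 28 steps, the sequence length used in the MNIST example in this post, leave a factor of 0.25**28, roughly 1.4e-17.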

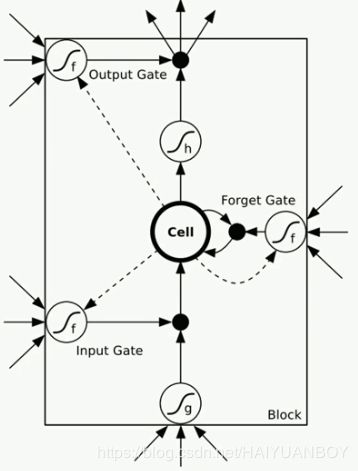

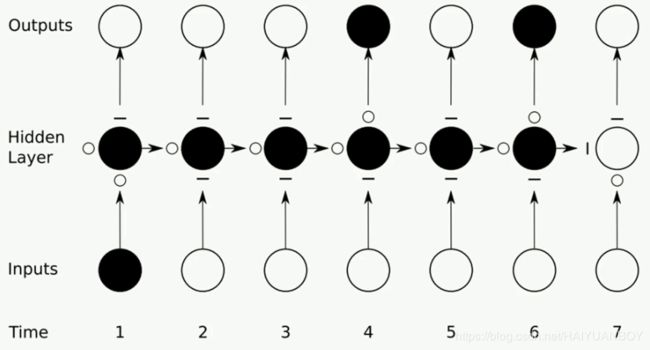

LSTM

LSTM is mainly used for sequence problems such as text and speech.

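The units in an LSTM hidden layer are not simple neurons like those of a BP network. Each one is a more complex block built from an input gate, a forget gate, an output gate and a cell state.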

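The input at the first time step still affects the outputs at the 4th and 6th time steps: because the gates can keep information in the cell state, an LSTM can carry it across many time steps instead of letting it fade.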
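To make the block concrete, here is a minimal NumPy sketch of one LSTM time step. It assumes the common textbook formulation: input, forget and output gates plus a candidate value, all computed from the concatenation of the current input x_t and the previous hidden state h_{t-1}. The fused weight matrix, its split order and all names are illustrative assumptions, not TensorFlow's internal variables.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step (illustrative layout, not TensorFlow's).

    x_t:    [batch, n_inputs]              input at this time step
    h_prev: [batch, units]                 previous hidden state h_{t-1}
    c_prev: [batch, units]                 previous cell state c_{t-1}
    W:      [n_inputs + units, 4 * units]  fused weights for the four parts
    b:      [4 * units]
    """
    z = np.concatenate([x_t, h_prev], axis=1) @ W + b
    i, f, g, o = np.split(z, 4, axis=1)  # input gate, forget gate, candidate, output gate
    i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)
    g = np.tanh(g)
    c_t = f * c_prev + i * g  # keep part of the old memory, add part of the new candidate
    h_t = o * np.tanh(c_t)    # the block's output
    return h_t, c_t


rng = np.random.default_rng(0)
batch, n_inputs, units = 2, 28, 100
W = rng.normal(scale=0.1, size=(n_inputs + units, 4 * units))
b = np.zeros(4 * units)
h0 = np.zeros((batch, units))
c0 = np.zeros((batch, units))
x_t = rng.normal(size=(batch, n_inputs))

h1, c1 = lstm_step(x_t, h0, c0, W, b)
print(h1.shape, c1.shape)  # (2, 100) (2, 100)
```

If the forget gate saturates near 1 and the input gate near 0, c_t is essentially a copy of c_{t-1}; this is the mechanism that lets an early input survive until later outputs.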

Example: handwritten digit recognition with a recurrent (LSTM) neural network
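The script treats each 28×28 MNIST image as a sequence: `tf.reshape(X, [-1, max_time, n_inputs])` turns a flat 784-value vector into 28 time steps of 28 values, so time step t is pixel row t. The NumPy sketch below checks that correspondence on made-up data standing in for MNIST; `np.reshape` uses the same row-major order as `tf.reshape`.

```python
import numpy as np

max_time, n_inputs = 28, 28

# Two fake "images"; made-up values in place of real MNIST digits.
images = np.arange(2 * 28 * 28, dtype=np.float32).reshape(2, 28, 28)  # [batch, rows, cols]
flat = images.reshape(2, 784)  # flat layout, like mnist.train.images: [batch, 784]

# Same reshape as in RNN(): [-1, 784] -> [-1, max_time, n_inputs]
sequences = flat.reshape(-1, max_time, n_inputs)

print(sequences.shape)  # (2, 28, 28)
# Time step t of sample k is exactly row t of image k.
print(np.array_equal(sequences[0, 5], images[0, 5]))  # True
```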

import os

import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets('MNIST_data', one_hot=True)

# Input images are 28*28
n_inputs = 28    # each time step feeds one row of the image, which has 28 values
max_time = 28    # there are 28 rows, so the sequence has 28 time steps
lstm_size = 100  # number of hidden units in the LSTM
n_classes = 10   # 10 classes
batch_size = 50  # 50 samples per batch
n_batch = mnist.train.num_examples // batch_size  # number of batches per epoch

x = tf.placeholder(tf.float32, [None, 784])  # [batch_size, 784], e.g. [50, 784]
y = tf.placeholder(tf.float32, [None, 10])

# Initialize the output-layer weights and biases
weights = tf.Variable(tf.truncated_normal([lstm_size, n_classes], stddev=0.1))  # [100, 10]
biases = tf.Variable(tf.constant(0.1, shape=[n_classes]))

# Define the RNN; it returns logits (softmax is applied outside)
def RNN(X, weights, biases):
    inputs = tf.reshape(X, [-1, max_time, n_inputs])  # [batch_size, 28, 28]
    # Basic LSTM cell, i.e. the block in the hidden layer
    lstm_cell = tf.nn.rnn_cell.BasicLSTMCell(lstm_size)
    # inputs must have the shape [batch_size, max_time, n_inputs]
    # final_state is an LSTMStateTuple (c, h) holding the state after the last time step;
    # each part has shape [batch_size, lstm_size]
    # final_state[0] is the cell_state (c)
    # final_state[1] is the hidden_state (h)
    # outputs holds the block output at every time step, shape [batch_size, max_time, lstm_size];
    # its last time step, outputs[:, -1, :], equals final_state[1]
    outputs, final_state = tf.nn.dynamic_rnn(lstm_cell, inputs, dtype=tf.float32)
    return tf.matmul(final_state[1], weights) + biases

# Compute the logits and the predicted class probabilities
logits = RNN(x, weights, biases)
prediction = tf.nn.softmax(logits)
# Cross-entropy loss; it applies softmax internally, so it must receive logits, not prediction
cross_entropy = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=logits))
# Optimizer
train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)
# Per-sample results, stored as booleans
correct_prediction = tf.equal(tf.argmax(prediction, 1), tf.argmax(y, 1))
# Compute the accuracy
accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

# Used to save the model
saver = tf.train.Saver()

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    for epoch in range(6):
        for batch in range(n_batch):
            batch_xs, batch_ys = mnist.train.next_batch(batch_size)
            sess.run(train_step, feed_dict={x: batch_xs, y: batch_ys})
        acc = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels})
        print('Iter: ' + str(epoch) + ', Testing Accuracy= ' + str(acc))
    # Save the model (Saver.save fails if the parent directory does not exist)
    os.makedirs('net', exist_ok=True)
    saver.save(sess, 'net/rnn_mnist.ckpt')

hidden_state is the output of the whole block, i.e. h_t in the LSTM block diagram, and cell_state is c_t.
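In `RNN()`, `tf.nn.dynamic_rnn` returns `outputs` (the block output h_t at every time step) and `final_state` (c and h after the last step), and only `final_state[1]` is fed to the classifier. The NumPy sketch below unrolls a textbook LSTM over 28 time steps to show why the last entry of `outputs` and `final_state[1]` coincide. It assumes random weights, no `sequence_length` argument, and an illustrative fused-weight layout; none of the names are TensorFlow's variables.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def run_lstm(inputs, W, b, units):
    """Unroll an LSTM over inputs of shape [batch, max_time, n_inputs].

    Returns (outputs, (c, h)), mirroring dynamic_rnn: outputs stacks h_t for every
    time step, and the final state is the cell and hidden state after the last step.
    """
    batch, max_time, _ = inputs.shape
    h = np.zeros((batch, units))
    c = np.zeros((batch, units))
    outputs = []
    for t in range(max_time):
        z = np.concatenate([inputs[:, t, :], h], axis=1) @ W + b
        i, f, g, o = np.split(z, 4, axis=1)  # input gate, forget gate, candidate, output gate
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
        outputs.append(h)
    return np.stack(outputs, axis=1), (c, h)


rng = np.random.default_rng(1)
batch, max_time, n_inputs, units, n_classes = 3, 28, 28, 100, 10
inputs = rng.normal(size=(batch, max_time, n_inputs))
W = rng.normal(scale=0.1, size=(n_inputs + units, 4 * units))
b = np.zeros(4 * units)

outputs, final_state = run_lstm(inputs, W, b, units)
print(outputs.shape)  # (3, 28, 100)
print(np.array_equal(outputs[:, -1, :], final_state[1]))  # True

# Hypothetical output layer with the same shapes as weights/biases in the script.
weights = rng.normal(scale=0.1, size=(units, n_classes))
biases = np.full(n_classes, 0.1)
logits = final_state[1] @ weights + biases
print(logits.shape)  # (3, 10)
```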

The script above saves the model; the script below loads it and evaluates it on the test set.

import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets('MNIST_data', one_hot=True)

# Input images are 28*28
n_inputs = 28    # each time step feeds one row of the image, which has 28 values
max_time = 28    # there are 28 rows, so the sequence has 28 time steps
lstm_size = 100  # number of hidden units in the LSTM
n_classes = 10   # 10 classes
batch_size = 50  # 50 samples per batch
n_batch = mnist.train.num_examples // batch_size  # number of batches per epoch

x = tf.placeholder(tf.float32, [None, 784])  # [batch_size, 784], e.g. [50, 784]
y = tf.placeholder(tf.float32, [None, 10])

# Initialize the output-layer weights and biases
# (the graph must match the saved one so that the checkpoint variables line up)
weights = tf.Variable(tf.truncated_normal([lstm_size, n_classes], stddev=0.1))  # [100, 10]
biases = tf.Variable(tf.constant(0.1, shape=[n_classes]))

# Define the RNN; it returns logits (softmax is applied outside)
def RNN(X, weights, biases):
    inputs = tf.reshape(X, [-1, max_time, n_inputs])  # [batch_size, 28, 28]
    # Basic LSTM cell, i.e. the block in the hidden layer
    lstm_cell = tf.nn.rnn_cell.BasicLSTMCell(lstm_size)
    # inputs must have the shape [batch_size, max_time, n_inputs]
    # final_state is an LSTMStateTuple (c, h) holding the state after the last time step;
    # each part has shape [batch_size, lstm_size]
    # final_state[0] is the cell_state (c)
    # final_state[1] is the hidden_state (h)
    # outputs holds the block output at every time step, shape [batch_size, max_time, lstm_size];
    # its last time step, outputs[:, -1, :], equals final_state[1]
    outputs, final_state = tf.nn.dynamic_rnn(lstm_cell, inputs, dtype=tf.float32)
    return tf.matmul(final_state[1], weights) + biases

# Compute the logits and the predicted class probabilities
logits = RNN(x, weights, biases)
prediction = tf.nn.softmax(logits)
# Cross-entropy loss; it applies softmax internally, so it must receive logits, not prediction
cross_entropy = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=logits))
# Optimizer
train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)
# Per-sample results, stored as booleans
correct_prediction = tf.equal(tf.argmax(prediction, 1), tf.argmax(y, 1))
# Compute the accuracy
accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

saver = tf.train.Saver()

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    # Accuracy with freshly initialized (untrained) parameters
    print(sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels}))
    # Load the model
    saver.restore(sess, 'net/rnn_mnist.ckpt')
    # Accuracy with the restored, trained parameters
    print(sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels}))

After loading the model the accuracy rises sharply, which shows that the network is now using the trained parameters restored from the checkpoint.
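The jump in accuracy comes from `saver.restore` overwriting the freshly initialized variable values with the saved ones, looked up by variable name. The sketch below shows the same idea without TensorFlow. It uses NumPy's `np.savez`/`np.load` with an in-memory buffer as a stand-in for `tf.train.Saver`; this is not TensorFlow's checkpoint format, and the "trained" values are made up.

```python
import io

import numpy as np

rng = np.random.default_rng(0)

# "Trained" parameters: made-up values standing in for the result of training.
trained = {
    "weights": rng.normal(size=(100, 10)),
    "biases": np.full(10, 0.1),
}

# Save: store each array under its variable name (in memory instead of net/rnn_mnist.ckpt).
buffer = io.BytesIO()
np.savez(buffer, **trained)

# A "new session": the same structure is rebuilt and freshly initialized.
params = {
    "weights": rng.normal(scale=0.1, size=(100, 10)),
    "biases": np.zeros(10),
}

# Restore: look each variable up by name and overwrite its initial value.
buffer.seek(0)
with np.load(buffer) as checkpoint:
    for name in params:
        params[name] = checkpoint[name]

print(all(np.array_equal(params[k], trained[k]) for k in trained))  # True
```

Matching by name is also why the loading script rebuilds exactly the same graph before calling `restore`.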