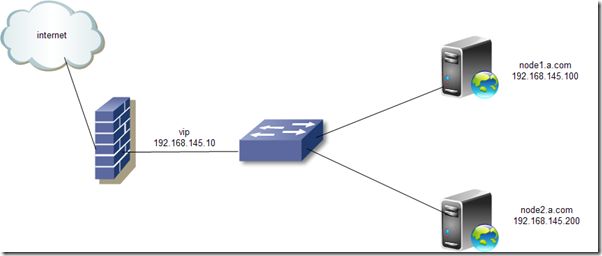

Topology diagram

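Prerequisites:

1. Each node's name must be identical to the output of uname -n, and the two hosts must be able to reach each other by host name, preferably without depending on DNS. The clocks of the two nodes must also be kept in sync.

2. The two nodes must be able to communicate over SSH without being prompted for a password (key-based authentication).

3. The nodes can reach each other through their own IP addresses, but those are only used for cluster communication; a virtual IP (VIP) is needed to provide the service to clients.

4. I use virtual machines for this setup, running RHEL 5.4 on x86.

5. The two nodes' IP addresses are 192.168.145.100 (node1.a.com) and 192.168.145.200 (node2.a.com).

6. The clustered service is Apache httpd.

7. The address that serves the web site (the VIP) is 192.168.145.10.

8. Prepare a 2 GB partition on each node to be shared through DRBD (this article uses a new partition, /dev/sda5).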

Configuring the nodes (node1.a.com and node2.a.com)

1. Preparation

Set the host name on node1:

[root@localhost ~]# vim /etc/sysconfig/network

HOSTNAME=node1.a.com

[root@localhost ~]# hostname node1.a.com

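Log out and log back in so the new host name takes effect, then set the host name on node2 the same way: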

[root@localhost ~]# vim /etc/sysconfig/network

HOSTNAME=node2.a.com

[root@localhost ~]# hostname node2.a.com

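First, make sure the clocks on the two nodes differ by no more than one second. Here both nodes set their system time from the hardware clock: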

[root@node1 ~]# hwclock -s

[root@node2 ~]# hwclock -s
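Setting each system clock from its own hardware clock does not by itself guarantee that the two nodes agree. Below is a minimal sketch for checking the one-second limit by comparing epoch seconds from both nodes. The helper name clock_skew_ok is made up, and the usage line assumes the passwordless SSH that is set up later.

```shell
#!/bin/bash
# clock_skew_ok LOCAL REMOTE [MAX]
# Hypothetical helper: succeeds if two epoch timestamps (in seconds)
# differ by at most MAX seconds (default 1).
clock_skew_ok() {
    local a=$1 b=$2 max=${3:-1}
    local diff=$(( a - b ))
    # Take the absolute value of the difference.
    if [ "$diff" -lt 0 ]; then
        diff=$(( -diff ))
    fi
    [ "$diff" -le "$max" ]
}

# Usage on node1 (assumes ssh to node2 works without a password):
#   clock_skew_ok "$(date +%s)" "$(ssh node2 date +%s)" || echo "clocks differ by more than 1s"
```

The SSH round trip adds a little delay, so this check is only approximate. It is still enough to catch clocks that are minutes apart.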

On both nodes, add the following entries:

# vim /etc/hosts

192.168.145.100 node1.a.com node1

192.168.145.200 node2.a.com node2
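A truncated name in /etc/hosts (such as node2.a.c instead of node2.a.com) is easy to miss, and the nodes then fail to resolve each other. Below is a minimal sketch for checking that a given name appears in the hosts file. The function name hosts_has_name is hypothetical.

```shell
#!/bin/bash
# hosts_has_name FILE NAME
# Hypothetical helper: succeeds if NAME appears as a host name or alias
# (any field after the IP address) on a non-comment line of FILE.
hosts_has_name() {
    local file=$1 name=$2
    awk -v n="$name" '
        /^[ \t]*#/ { next }                   # skip comment lines
        {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break          # stop at a trailing comment
                if ($i == n) found = 1
            }
        }
        END { exit !found }
    ' "$file"
}

# Usage on each node: the local name (uname -n) and the peer must both be listed.
#   hosts_has_name /etc/hosts "$(uname -n)" || echo "$(uname -n) missing from /etc/hosts"
#   hosts_has_name /etc/hosts node1.a.com   || echo "node1.a.com missing"
#   hosts_has_name /etc/hosts node2.a.com   || echo "node2.a.com missing"
```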

Generate SSH keys so the nodes can log in to each other without a password.

On node1:

[root@node1 ~]# ssh-keygen -t rsa

[root@node1 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub node2

On node2:

[root@node2 ~]# ssh-keygen -t rsa

[root@node2 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub node1

Because the packages have dependencies, configure yum on node1 to use the installation DVD mounted at /mnt/cdrom:

# vim /etc/yum.repos.d/rhel-debuginfo.repo

[rhel-server]
name=Red Hat Enterprise Linux server
baseurl=file:///mnt/cdrom/Server
enabled=1
gpgcheck=1
gpgkey=file:///mnt/cdrom/RPM-GPG-KEY-redhat-release

[rhel-cluster]
name=Red Hat Enterprise Linux cluster
baseurl=file:///mnt/cdrom/Cluster
enabled=1
gpgcheck=1
gpgkey=file:///mnt/cdrom/RPM-GPG-KEY-redhat-release

[rhel-vt]
name=Red Hat Enterprise Linux vt
baseurl=file:///mnt/cdrom/VT
enabled=1
gpgcheck=1
gpgkey=file:///mnt/cdrom/RPM-GPG-KEY-redhat-release

[rhel-clusterstorage]
name=Red Hat Enterprise Linux clusterstorage
baseurl=file:///mnt/cdrom/ClusterStorage
enabled=1
gpgcheck=1
gpgkey=file:///mnt/cdrom/RPM-GPG-KEY-redhat-release
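The four stanzas differ only in the DVD directory, so the file can also be generated with a loop. This is a sketch assuming the DVD is mounted at /mnt/cdrom as above. The function name gen_rhel_repos is hypothetical.

```shell
#!/bin/bash
# gen_rhel_repos [MOUNTPOINT]
# Hypothetical helper: prints one yum stanza per RHEL 5 DVD directory,
# matching the hand-written repo file (default mount point /mnt/cdrom).
gen_rhel_repos() {
    local mnt=${1:-/mnt/cdrom} dir id
    for dir in Server Cluster VT ClusterStorage; do
        id=$(echo "$dir" | tr '[:upper:]' '[:lower:]')
        printf '[rhel-%s]\n' "$id"
        printf 'name=Red Hat Enterprise Linux %s\n' "$id"
        printf 'baseurl=file://%s/%s\n' "$mnt" "$dir"
        printf 'enabled=1\ngpgcheck=1\n'
        printf 'gpgkey=file://%s/RPM-GPG-KEY-redhat-release\n\n' "$mnt"
    done
}

# Usage on node1:
#   gen_rhel_repos > /etc/yum.repos.d/rhel-debuginfo.repo
```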

[root@node2 ~]# scp node1:/etc/yum.repos.d/rhel-debuginfo.repo /etc/yum.repos.d/

Install Apache on both node1 and node2 with yum. For testing, create an index.html containing 'node1.a.com' on node1 and one containing 'node2.a.com' on node2, and make sure the service starts:

# mount /dev/cdrom /mnt/cdrom

# yum install -y httpd

[root@node1 ~]# echo "node1.a.com" > /var/www/html/index.html    # on node1

[root@node2 ~]# echo "node2.a.com" > /var/www/html/index.html    # on node2

# service httpd start

# chkconfig httpd on

2. Install the required packages

On node1 (install the same packages on node2 as well):

[root@node1 ~]# ll

total 3416

-rw------- 1 root root 1287 08-11 23:13 anaconda-ks.cfg

-rw-r--r-- 1 root root 271360 10-20 12:10 cluster-glue-1.0.6-1.6.el5.i386.rpm

-rw-r--r-- 1 root root 133254 10-20 12:10 cluster-glue-libs-1.0.6-1.6.el5.i386.rpm

-rw-r--r-- 1 root root 170052 10-20 12:10 corosync-1.2.7-1.1.el5.i386.rpm

-rw-r--r-- 1 root root 158502 10-20 12:10 corosynclib-1.2.7-1.1.el5.i386.rpm

drwxr-xr-x 2 root root 4096 08-11 15:20 Desktop

-rw-r--r-- 1 root root 165591 10-20 12:10 heartbeat-3.0.3-2.3.el5.i386.rpm

-rw-r--r-- 1 root root 289600 10-20 12:10 heartbeat-libs-3.0.3-2.3.el5.i386.rpm

-rw-r--r-- 1 root root 35369 08-11 23:13 install.log

-rw-r--r-- 1 root root 3995 08-11 23:11 install.log.syslog

-rw-r--r-- 1 root root 60458 10-20 12:10 libesmtp-1.0.4-5.el5.i386.rpm

-rw-r--r-- 1 root root 796813 10-20 12:10 pacemaker-1.1.5-1.1.el5.i386.rpm

-rw-r--r-- 1 root root 207925 10-20 12:10 pacemaker-cts-1.1.5-1.1.el5.i386.rpm

-rw-r--r-- 1 root root 332026 10-20 12:10 pacemaker-libs-1.1.5-1.1.el5.i386.rpm

-rw-r--r-- 1 root root 32818 10-20 12:10 perl-TimeDate-1.16-5.el5.noarch.rpm

-rw-r--r-- 1 root root 388632 10-20 12:10 resource-agents-1.0.4-1.1.el5.i386.rpm

# yum localinstall -y *.rpm --nogpgcheck

[root@node1 ~]# cd /etc/corosync/

[root@node1 corosync]# cp -p corosync.conf.example corosync.conf

[root@node1 corosync]# vim corosync.conf

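In the interface block of the totem section, set bindnetaddr to the network address of the cluster network:

bindnetaddr: 192.168.145.0

Then append the following at the end of the file:

service {
        ver: 0
        name: pacemaker
}

aisexec {
        user: root
        group: root
}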
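bindnetaddr takes the network address of the interface corosync should bind to, not the host address: 192.168.145.100 with netmask 255.255.255.0 gives 192.168.145.0. Below is a small sketch that computes it, assuming a dotted-quad netmask. The function name net_address is made up.

```shell
#!/bin/bash
# net_address IP NETMASK
# Hypothetical helper: prints IP AND NETMASK octet by octet, i.e. the
# network address to put in bindnetaddr.
net_address() {
    _old_ifs=$IFS
    IFS=.
    # Split both dotted quads into $1..$8 (four IP octets, then four mask octets).
    set -- $1 $2
    IFS=$_old_ifs
    echo "$(( $1 & $5 )).$(( $2 & $6 )).$(( $3 & $7 )).$(( $4 & $8 ))"
}

# Usage on node1:
#   net_address 192.168.145.100 255.255.255.0    # prints 192.168.145.0
```

Because both nodes are on the same subnet, the result is the same on node1 and node2. That is why the same corosync.conf can be copied to node2 unchanged.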

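Create the log directory used by corosync's logging configuration:

# mkdir /var/log/cluster

To keep other hosts from joining the cluster, the nodes must authenticate each other; generate an authkey: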

[root@node1 corosync]# corosync-keygen

Corosync Cluster Engine Authentication key generator.

Gathering 1024 bits for key from /dev/random.

Press keys on your keyboard to generate entropy.

Writing corosync key to /etc/corosync/authkey.

[root@node1 corosync]# ll

total 28

-rw-r--r-- 1 root root 5384 2010-07-28 amf.conf.example

-r-------- 1 root root 128 10-20 13:02 authkey

-rw-r--r-- 1 root root 540 10-20 12:59 corosync.conf

-rw-r--r-- 1 root root 436 2010-07-28 corosync.conf.example

drwxr-xr-x 2 root root 4096 2010-07-28 service.d

drwxr-xr-x 2 root root 4096 2010-07-28 uidgid.d

Copy the key and the configuration file to node2 (-p preserves the permissions):

[root@node1 corosync]# scp -p authkey corosync.conf node2:/etc/corosync/

Create the log directory on the other node as well:

[root@node1 corosync]# ssh node2 'mkdir /var/log/cluster'

Start the service on both nodes:

[root@node1 corosync]# service corosync start

[root@node1 corosync]# ssh node2 'service corosync start'

Verify that corosync started correctly.

Check that the corosync engine started normally:

[root@node1 corosync]# grep -i -e "corosync cluster engine" -e "configuration file" /var/log/messages

Oct 20 13:08:39 localhost corosync[32303]: [MAIN ] Corosync Cluster Engine ('1.2.7'): started and ready to provide service.

Oct 20 13:08:39 localhost corosync[32303]: [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

Check that the initial membership notifications were sent:

[root@node1 corosync]# grep -i totem /var/log/messages

Oct 20 13:08:39 localhost corosync[32303]: [TOTEM ] Initializing transport (UDP/IP).

Oct 20 13:08:39 localhost corosync[32303]: [TOTEM ] Initializing transmit/receive security: libtomcrypt SOBER128/SHA1HMAC (mode 0).

Oct 20 13:08:39 localhost corosync[32303]: [TOTEM ] The network interface [192.168.145.100] is now up.

Oct 20 13:08:40 localhost corosync[32303]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

Oct 20 13:09:18 localhost corosync[32303]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

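Check whether any errors occurred during startup (STONITH messages from unpack_resources are expected at this stage and are filtered out):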

[root@node1 corosync]# grep -i error: /var/log/messages |grep -v unpack_resources

Check that pacemaker started normally:

[root@node1 corosync]# grep -i pcmk_startup /var/log/messages

Oct 20 13:08:39 localhost corosync[32303]: [pcmk ] info: pcmk_startup: CRM: Initialized

Oct 20 13:08:39 localhost corosync[32303]: [pcmk ] Logging: Initialized pcmk_startup

Oct 20 13:08:39 localhost corosync[32303]: [pcmk ] info: pcmk_startup: Maximum core file size is: 4294967295

Oct 20 13:08:40 localhost corosync[32303]: [pcmk ] info: pcmk_startup: Service: 9

Oct 20 13:08:40 localhost corosync[32303]: [pcmk ] info: pcmk_startup: Local hostname: node1.a.com

Repeat the checks above on the other node.
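Because the same greps have to be run on both nodes, they can be bundled into one function. This is a sketch that reuses the patterns shown above. The function name check_cluster_log is hypothetical, and the log location is assumed to be /var/log/messages as in this setup.

```shell
#!/bin/bash
# check_cluster_log [LOGFILE]
# Hypothetical helper bundling the checks above. Returns 0 only if corosync
# reports a normal start, read its configuration file, loaded the pacemaker
# plugin, and no "error:" lines remain once the expected unpack_resources
# (STONITH) messages are filtered out.
check_cluster_log() {
    local log=${1:-/var/log/messages} rc=0
    grep -qi "corosync cluster engine.*started and ready" "$log" \
        || { echo "corosync engine did not start"; rc=1; }
    grep -qi "Successfully read main configuration file" "$log" \
        || { echo "corosync.conf was not read"; rc=1; }
    grep -qi "pcmk_startup" "$log" \
        || { echo "pacemaker plugin did not start"; rc=1; }
    if grep -i "error:" "$log" | grep -qv unpack_resources; then
        echo "errors found in $log"; rc=1
    fi
    return $rc
}

# Usage from node1 (assumes passwordless SSH and the same function defined on node2):
#   check_cluster_log && echo "node1 OK"
```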

On either node, check the cluster membership:

[root@node1 corosync]# crm status

============

Last updated: Sat Oct 20 13:17:52 2012

Stack: openais

Current DC: node1.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

0 Resources configured.

============

Online: [ node1.a.com node2.a.com ]

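Providing a highly available service

With corosync, resources can be defined through two interfaces:

1. A graphical interface (hb_gui)

2. crm, a command shell provided by pacemaker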

[root@node1 corosync]# crm configure show

node node1.a.com

node node2.a.com

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2"

crm configure show displays the configuration held in the CIB.

To check the configuration for errors:

[root@node1 corosync]# crm_verify -L

crm_verify[32397]: 2012/10/20_13:24:12 ERROR: unpack_resources: Resource start-up disabled since no STONITH resources have been defined

crm_verify[32397]: 2012/10/20_13:24:12 ERROR: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

crm_verify[32397]: 2012/10/20_13:24:12 ERROR: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

Errors found during check: config not valid

-V may provide more details

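The check reports STONITH errors: by default, a high-availability cluster refuses to start any resources until STONITH is configured.

Since this test setup has no STONITH device, disable STONITH: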

[root@node1 corosync]# crm

crm(live)# configure

crm(live)configure# property stonith-enabled=false

crm(live)configure# commit

crm(live)configure# show

node node1.a.com

node node2.a.com

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false"

Check again:

[root@node1 corosync]# crm_verify -L

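No errors are reported now.

The system also provides a dedicated stonith command: stonith -L lists the supported STONITH device types.

crm can be used interactively, and help is available at every level.

The configuration is stored in the CIB in XML format.

There are four kinds of cluster resources:

primitive: a basic resource that runs on only one node at a time

group: several resources bundled together so they can be managed as one unit

clone: a resource that runs on several nodes at the same time, with no primary/secondary distinction (e.g. ocfs2, stonith)

master: a resource with primary/secondary roles, e.g. drbd

The web cluster here needs three resources: an IP address, the httpd service, and shared storage.

Resources are configured through resource agents, mainly of the ocf and lsb classes.

The available classes can be listed at the ra level: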

crm(live)# ra

crm(live)ra# classes

heartbeat

lsb

ocf / heartbeat pacemaker

stonith

Use list lsb to see the LSB resource agents (init scripts):

crm(live)ra# list lsb

NetworkManager acpid anacron apmd

atd auditd autofs avahi-daemon

avahi-dnsconfd bluetooth capi conman

corosync cpuspeed crond cups

cups-config-daemon dnsmasq dund firstboot

functions gpm haldaemon halt

heartbeat hidd hplip ip6tables

ipmi iptables irda irqbalance

isdn kdump killall krb524

kudzu lm_sensors logd lvm2-monitor

mcstrans mdmonitor mdmpd messagebus

microcode_ctl multipathd netconsole netfs

netplugd network nfs nfslock

nscd ntpd openais openibd

pacemaker pand pcscd portmap

psacct rawdevices rdisc readahead_early

readahead_later restorecond rhnsd rpcgssd

rpcidmapd rpcsvcgssd saslauthd sendmail

setroubleshoot single smartd sshd

syslog vncserver wdaemon winbind

wpa_supplicant xfs xinetd ypbind

(These are the scripts under /etc/init.d.)

List the OCF agents from the heartbeat provider:

crm(live)ra# list ocf heartbeat

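Use info or meta to show the details of a resource agent, for example meta ocf:heartbeat:IPaddr (class, provider and agent name are separated by colons).

Resources are defined at the configure level.

1. Define the resource, starting with its name:

crm(live)configure# primitive webIP ocf:heartbeat:IPaddr params ip=192.168.145.10

2. Review it: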

crm(live)configure# show

node node1.a.com

node node2.a.com

primitive webIP ocf:heartbeat:IPaddr \

params ip="192.168.145.10"

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false"

3. Commit the change:

crm(live)configure# commit

4. Check the status:

crm(live)# status

============

Last updated: Mon May 7 19:39:37 2012

Stack: openais

Current DC: node1.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node1.a.com node2.a.com ]

webIP (ocf::heartbeat:IPaddr): Started node1.a.com

The resource has been started on node1.

5. Confirm with ifconfig on node1:

[root@node1 corosync]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:F1:15:00

inet addr:192.168.145.100 Bcast:192.168.145.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fef1:1500/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:22162 errors:0 dropped:0 overruns:0 frame:0

TX packets:27450 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:6012312 (5.7 MiB) TX bytes:3548456 (3.3 MiB)

Interrupt:67 Base address:0x2000

eth0:0 Link encap:Ethernet HWaddr 00:0C:29:F1:15:00

inet addr:192.168.145.10 Bcast:192.168.145.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

Interrupt:67 Base address:0x2000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:444 errors:0 dropped:0 overruns:0 frame:0

TX packets:444 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:42139 (41.1 KiB) TX bytes:42139 (41.1 KiB)

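6. Define the web service resource

httpd must be installed on both nodes (this was done earlier).

Once it is installed, the httpd LSB script shows up in the list: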

[root@node2 corosync]# crm ra list lsb

or

crm(live)ra# list lsb

Show the details of lsb:httpd:

crm(live)ra# meta lsb:httpd

lsb:httpd

Apache is a World Wide Web server. It is used to serve \

HTML files and CGI.

Operations' defaults (advisory minimum):

start timeout=15

stop timeout=15

status timeout=15

restart timeout=15

force-reload timeout=15

monitor interval=15 timeout=15 start-delay=15
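For lsb: resources, pacemaker has to rely on the init script's exit codes. It can therefore be worth checking that service httpd status follows the LSB convention, where 0 means running and 3 means not running. Below is a minimal lookup sketch. The function name lsb_status_meaning is hypothetical, and only the standard LSB status codes are mapped.

```shell
#!/bin/bash
# lsb_status_meaning CODE
# Hypothetical helper: maps an init script "status" exit code to its LSB meaning.
# A script that returns 0 while the service is stopped would mislead the cluster.
lsb_status_meaning() {
    case "$1" in
        0) echo "running" ;;
        1) echo "dead, pid file exists" ;;
        2) echo "dead, lock file exists" ;;
        3) echo "not running" ;;
        *) echo "unknown" ;;
    esac
}

# Usage on a node where httpd is currently stopped (expect "not running"):
#   service httpd status >/dev/null 2>&1; lsb_status_meaning $?
```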

Define the httpd resource and commit it:

crm(live)configure# primitive webserver lsb:httpd

crm(live)configure# show

node node1.a.com

node node2.a.com

primitive webIP ocf:heartbeat:IPaddr \

params ip="192.168.145.10"

primitive webserver lsb:httpd

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false"

crm(live)# status

============

Last updated: Mon May 7 20:01:12 2012

Stack: openais

Current DC: node1.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

2 Resources configured.

============

Online: [ node1.a.com node2.a.com ]

webIP (ocf::heartbeat:IPaddr): Started node1.a.com

webserver (lsb:httpd): Started node2.a.com

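httpd has started, but on node2.

(By default the cluster spreads resources across the nodes to balance the load.)

The IP address and httpd must run on the same node, so put them in a group. help group shows how to configure one: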

crm(live)configure# help group

The `group` command creates a group of resources.

Usage:

...............

group

[meta attr_list]

[params attr_list]

attr_list :: [$id=

...............

Example:

...............

group internal_www disk0 fs0 internal_ip apache \

meta target_role=stopped

...............

Define the group (and commit it):

crm(live)configure# group web webIP webserver

crm(live)configure# show

node node1.a.com

node node2.a.com

primitive webIP ocf:heartbeat:IPaddr \

params ip="192.168.145.10"

primitive webserver lsb:httpd

group web webIP webserver

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false"

Check the cluster status again:

crm(live)# status

============

Last updated: Mon May 7 20:09:06 2012

Stack: openais

Current DC: node1.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node1.a.com node2.a.com ]

Resource Group: web

webIP (ocf::heartbeat:IPaddr): Started node1.a.com

webserver (lsb:httpd): Started node1.a.com

crm(live)#

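(Both the IP address and httpd are now on node1.)

Services managed as lsb resources must not be started automatically at boot, because the cluster has to control them. On both nodes, undo the earlier chkconfig httpd on:

# chkconfig httpd off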
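To confirm that the change took effect on both nodes, you can inspect the chkconfig --list httpd output, which shows one N:on or N:off field per runlevel. This is a sketch with a hypothetical function name, autostart_disabled. The loop in the usage comment assumes the passwordless SSH configured earlier.

```shell
#!/bin/bash
# autostart_disabled "CHKCONFIG_LINE"
# Hypothetical helper: succeeds if a line of `chkconfig --list SERVICE` output
# shows the service off in every runlevel (no "N:on" field).
autostart_disabled() {
    case "$1" in
        *[0-6]:on*) return 1 ;;
        *) return 0 ;;
    esac
}

# Usage from node1:
#   for n in node1.a.com node2.a.com; do
#       ssh "$n" 'chkconfig httpd off'
#       autostart_disabled "$(ssh "$n" 'chkconfig --list httpd')" \
#           || echo "$n: httpd is still enabled at boot"
#   done
```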

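1. Test: put a web page on each node

Create a page on node1 and on node2 (the index.html files created earlier, containing node1.a.com and node2.a.com).

Browse to the cluster IP, http://192.168.145.10: the page is served by the first node.

2. Stop corosync on node1: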

[root@node1 corosync]# service corosync stop

3. Watch from node2 (the first crm status may still show the old state):

[root@node2 corosync]# crm status

============

Last updated: Mon May 7 20:16:26 2012

Stack: openais

Current DC: node1.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node1.a.com node2.a.com ]

Resource Group: web

webIP (ocf::heartbeat:IPaddr): Started node1.a.com

webserver (lsb:httpd): Started node1.a.com

[root@node2 corosync]# crm status

============

Last updated: Mon May 7 20:16:58 2012

Stack: openais

Current DC: node2.a.com - partition WITHOUT quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node2.a.com ]

OFFLINE: [ node1.a.com ]

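As shown, node2 alone has no quorum (it holds 1 of the 2 expected votes), so with the default policy it does not run the resources.

4. Change the no-quorum policy

The no-quorum-policy property accepts the following values:

ignore: ignore the loss of quorum and keep managing resources

freeze: resources that are already running keep running, but stopped resources cannot be started

stop (default): stop all resources in the partition that has lost quorum

suicide: fence all nodes in the partition that has lost quorum

Start corosync on node1 again, then change the policy:

crm(live)configure# property no-quorum-policy=ignore

crm(live)configure# commit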

crm(live)# show    (check the quorum property again; show is only valid at the configure level, hence the error)

ERROR: syntax: show

crm(live)# configure

crm(live)configure# show

node node1.a.com

node node2.a.com

primitive webIP ocf:heartbeat:IPaddr \

params ip="192.168.145.10"

primitive webserver lsb:httpd

group web webIP webserver

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"    (loss of quorum is now ignored)
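The properties and resources built interactively so far can also be kept in a file and applied in one step, for example when rebuilding the cluster. Below is a sketch that writes them in crm shell syntax. The function name write_web_crm and the file path are hypothetical. The usage assumes that the crm shell in this version supports configure load update (check with crm configure help load).

```shell
#!/bin/bash
# write_web_crm FILE
# Hypothetical helper: writes the cluster configuration built in this article
# (properties, the two primitives and the group) to FILE in crm shell syntax.
write_web_crm() {
    cat > "$1" <<'EOF'
property stonith-enabled=false
property no-quorum-policy=ignore
primitive webIP ocf:heartbeat:IPaddr params ip=192.168.145.10
primitive webserver lsb:httpd
group web webIP webserver
EOF
}

# Usage on one node (assumes "configure load update" exists in this crm version):
#   write_web_crm /root/web.crm
#   crm configure load update /root/web.crm
#   crm_verify -L
```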

Stop corosync on node1:

[root@node1 corosync]# service corosync stop

Check from node2:

[root@node2 corosync]# crm status

Resource Group: web

webIP (ocf::heartbeat:IPaddr): Started node2.a.com

webserver (lsb:httpd): Started node2.a.com    (the resources have failed over to node2)

Start node1 again:

[root@node1 corosync]# service corosync start

Observe from node2:

[root@node2 corosync]# crm status

Online: [ node1.a.com node2.a.com ]

Resource Group: web

webIP (ocf::heartbeat:IPaddr): Started node2.a.com

webserver (lsb:httpd): Started node2.a.com

DRBD

Add a partition:

[root@node2 ~]# fdisk /dev/sda

The number of cylinders for this disk is set to 2610.

There is nothing wrong with that, but this is larger than 1024,

and could in certain setups cause problems with:

1) software that runs at boot time (e.g., old versions of LILO)

2) booting and partitioning software from other OSs

(e.g., DOS FDISK, OS/2 FDISK)

Command (m for help): p

Disk /dev/sda: 21.4 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 13 104391 83 Linux

/dev/sda2 14 1288 10241437+ 83 Linux

/dev/sda3 1289 1386 787185 82 Linux swap / Solaris

Command (m for help): n

Command action

e extended

p primary partition (1-4)

e

Selected partition 4

First cylinder (1387-2610, default 1387):

Using default value 1387

Last cylinder or +size or +sizeM or +sizeK (1387-2610, default 2610):

Using default value 2610

Command (m for help): p

Disk /dev/sda: 21.4 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 13 104391 83 Linux

/dev/sda2 14 1288 10241437+ 83 Linux

/dev/sda3 1289 1386 787185 82 Linux swap / Solaris

/dev/sda4 1387 2610 9831780 5 Extended

Command (m for help): n

First cylinder (1387-2610, default 1387):

Using default value 1387

Last cylinder or +size or +sizeM or +sizeK (1387-2610, default 2610): +2g

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.

The kernel still uses the old table.

The new table will be used at the next reboot.

Syncing disks.

[root@node2 ~]# partprobe /dev/sda

[root@node2 ~]# cat /proc/partitions

major minor #blocks name

8 0 20971520 sda

8 1 104391 sda1

8 2 10241437 sda2

8 3 787185 sda3

8 4 0 sda4

8 5 1959898 sda5
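It is convenient to compare the size of sda5 on both nodes before configuring DRBD, because DRBD will only use as much space as the smaller of the two backing devices. Below is a sketch that reads the size (in 1 KiB blocks) from /proc/partitions. The function name partition_kb is hypothetical.

```shell
#!/bin/bash
# partition_kb NAME [FILE]
# Hypothetical helper: prints the size in 1 KiB blocks of partition NAME as
# listed in /proc/partitions (or FILE; "-" reads standard input).
# Fails if the partition is not listed.
partition_kb() {
    awk -v n="$1" '$4 == n { print $3; found = 1 } END { exit !found }' "${2:-/proc/partitions}"
}

# Usage from node1 (assumes passwordless SSH):
#   echo "node1: $(partition_kb sda5) KiB"
#   echo "node2: $(ssh node2 'cat /proc/partitions' | partition_kb sda5 -) KiB"
```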

Create the same partition on node1.

Install DRBD, which provides the replicated storage.

Pick the package versions that match your system; I used:

drbd83-8.3.8-1.el5.centos.i386.rpm

kmod-drbd83-8.3.8-1.el5.centos.i686.rpm

[root@node1 ~]# yum localinstall -y drbd83-8.3.8-1.el5.centos.i386.rpm --nogpgcheck

[root@node1 ~]# yum localinstall -y kmod-drbd83-8.3.8-1.el5.centos.i686.rpm --nogpgcheck

Do the same on node2.

[root@node1 ~]# cp /usr/share/doc/drbd83-8.3.8/drbd.conf /etc/

cp: overwrite `/etc/drbd.conf'? Y    # answer yes to overwrite

[root@node1 ~]# scp /etc/drbd.conf node2:/etc/

[root@node1 ~]# vim /etc/drbd.d/global_common.conf

global {
        usage-count no;
        # minor-count dialog-refresh disable-ip-verification
}

common {
        protocol C;

        startup {
                wfc-timeout 120;
                degr-wfc-timeout 120;
        }
        disk {
                on-io-error detach;
                fencing resource-only;
        }
        net {
                cram-hmac-alg "sha1";
                shared-secret "mydrbdlab";
        }
        syncer {
                rate 100M;
        }
}

[root@node1 ~]# vim /etc/drbd.d/web.res

resource web {
        on node1.a.com {
                device    /dev/drbd0;
                disk      /dev/sda5;
                address   192.168.145.100:7789;
                meta-disk internal;
        }

        on node2.a.com {
                device    /dev/drbd0;
                disk      /dev/sda5;
                address   192.168.145.200:7789;
                meta-disk internal;
        }
}
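DRBD picks the on section whose name matches the node's uname -n, so these names must be exactly node1.a.com and node2.a.com. Below is a sketch for checking that before running drbdadm. The function name drbd_on_hosts is hypothetical.

```shell
#!/bin/bash
# drbd_on_hosts FILE
# Hypothetical helper: prints the host name that follows each "on" keyword
# in a DRBD resource file, one per line.
drbd_on_hosts() {
    awk '$1 == "on" { print $2 }' "$1"
}

# Usage on each node:
#   drbd_on_hosts /etc/drbd.d/web.res | grep -qxF "$(uname -n)" \
#       || echo "no 'on $(uname -n)' section in web.res"
```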

[root@node1 ~]# scp /etc/drbd.d/* node2:/etc/drbd.d/

Initialize the metadata for the defined resource on both nodes, then start the service:

[root@node1 ~]# drbdadm create-md web

[root@node2 ~]# drbdadm create-md web

Start the service:

[root@node1 ~]# service drbd start

[root@node2 ~]# service drbd start

On the node that is to become primary (node1 here), run:

[root@node1 ~]# drbdadm -- --overwrite-data-of-peer primary web

Then watch the synchronization progress:

[root@node1 ~]# cat /proc/drbd

version: 8.3.8 (api:88/proto:86-94)

GIT-hash: d78846e52224fd00562f7c225bcc25b2d422321d build by [email protected], 2010-06-04 08:04:16

0: cs:SyncSource ro:Primary/Secondary ds:UpToDate/Inconsistent C r----

ns:456904 nr:0 dw:0 dr:465088 al:0 bm:27 lo:1 pe:14 ua:256 ap:0 ep:1 wo:b oos:1503320

[===>................] sync'ed: 23.4% (1503320/1959800)K delay_probe: 44

finish: 0:00:57 speed: 26,044 (25,360) K/sec
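If you prefer to wait for the initial synchronization to finish before continuing, you can poll the ds: field until both sides are UpToDate. This is a sketch assuming a single DRBD resource (only one device line in /proc/drbd). The function name drbd_uptodate is hypothetical.

```shell
#!/bin/bash
# drbd_uptodate [FILE]
# Hypothetical helper: succeeds when /proc/drbd (or FILE) shows
# ds:UpToDate/UpToDate, i.e. the initial synchronization has finished.
# Assumes a single DRBD resource.
drbd_uptodate() {
    grep -q 'ds:UpToDate/UpToDate' "${1:-/proc/drbd}"
}

# Usage on the primary, polling every 5 seconds:
#   until drbd_uptodate; do sleep 5; done; echo "sync finished"
```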

Create a file system (on the primary node only):

[root@node1 ~]# mkfs -t ext3 -L drbdweb /dev/drbd0

[root@node1 ~]# mkdir /web

[root@node1 ~]# mount /dev/drbd0 /web/

[root@node1 ~]# echo "hello" > /web/index.html

Test: demote node1 to secondary and promote node2 to primary.

[root@node1 ~]# umount /web

[root@node1 ~]# drbdadm secondary web

[root@node1 ~]# drbdadm role web

Secondary/Secondary

On node2:

[root@node2 ~]# mkdir /web

[root@node2 ~]# drbdadm primary web

[root@node2 ~]# drbd-overview

0:web Connected Primary/Secondary UpToDate/UpToDate C r----

[root@node2 ~]# drbdadm role web

Primary/Secondary

[root@node2 ~]# mount /dev/drbd0 /web

[root@node2 ~]# ll /web

total 20

-rw-r--r-- 1 root root 6 May 7 00:46 index.html

drwx------ 2 root root 16384 May 7 00:45 lost+found

[root@node2 ~]# cd /web

[root@node2 web]# vim test.html

[root@node2 ~]# umount /web

[root@node2 ~]# drbdadm secondary web

Switch back to node1:

[root@node1 ~]# drbdadm primary web

[root@node1 ~]# mount /dev/drbd0 /web

[root@node1 ~]# ll /web

total 24

-rw-r--r-- 1 root root 6 May 7 00:46 index.html

drwx------ 2 root root 16384 May 7 00:45 lost+found

-rw-r--r-- 1 root root 13 May 7 00:58 test.html

Set one of the nodes as the primary node.