1. What is RAID

2. How RAID is implemented

3. RAID levels and their characteristics

4. Implementing software RAID

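1. What is RAID

Full name: Redundant Array of Inexpensive (Independent) Disks

How it works: several disks are combined into a single "array" to provide better performance, redundancy, or both.

RAID levels: the levels are distinguished by how the member disks are organized to work together. Common levels include RAID 0, RAID 1, RAID 4, RAID 5, RAID 6, RAID 10, RAID 01, and RAID 50.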

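2. How RAID is implemented

Hardware implementations:

External disk array: adapter capability is provided by a PCI or PCI-E expansion card.

Internal disk array: a RAID controller integrated on the motherboard.

Software implementation (Linux):

Implemented in software with mdadm.

Setting up RAID:

Before installing the operating system, through the BIOS. This approach is mainly used to install the operating system itself on the RAID.

After installing the operating system, through the BIOS or software. The main purpose of this approach is to keep the operating system separate from the data area stored on the RAID.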

3. RAID levels and their characteristics

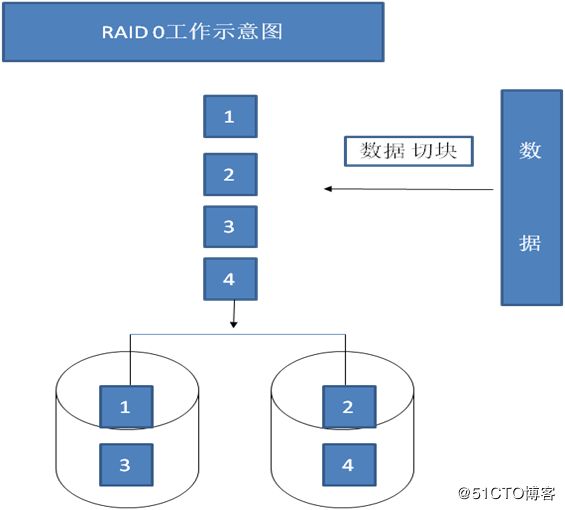

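RAID 0: also called a striped volume (stripe)

This mode works best when it is built from disks of the same model and capacity.

Characteristics:

Read and write performance improves.

Usable space: the capacity of the smallest disk multiplied by the number of disks.

No fault tolerance: if any one disk fails, data is lost.

Minimum number of disks: 2

How it works:

Each disk is first divided into equal-sized chunks. When a file is written, its data is cut into pieces of the chunk size and the pieces are written to the disks in turn, interleaved. For example, with two disks, a 100 MB file puts 50 MB on each disk.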

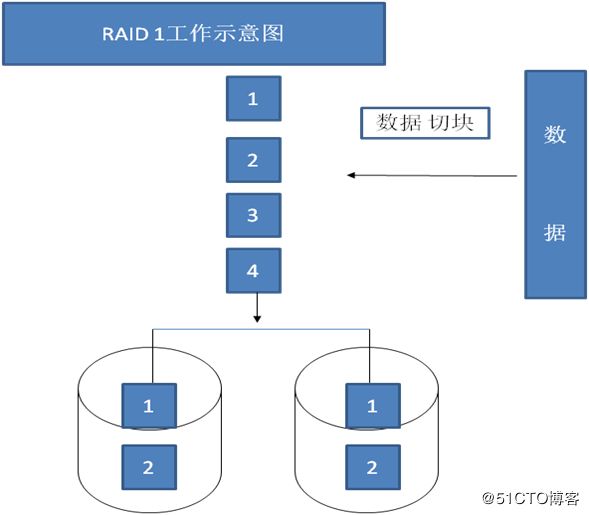
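The interleaved layout can be sketched in a few lines of shell. This is only an illustration: it assumes chunks are numbered from 0 and handed to the disks round-robin, and `stripe_disk` is a made-up helper name, not an mdadm command.

```shell
# stripe_disk CHUNK_INDEX NDISKS
# Print the 0-based index of the disk that would hold the given chunk
# under simple round-robin striping (hypothetical helper, illustration only).
stripe_disk() {
    echo $(( $1 % $2 ))
}

# Show where the first six chunks land on a two-disk array.
i=0
while [ "$i" -lt 6 ]; do
    echo "chunk $i -> disk $(stripe_disk "$i" 2)"
    i=$(( i + 1 ))
done
```

Even-numbered chunks go to disk 0 and odd-numbered chunks to disk 1, which is why each disk ends up with half of the file.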

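RAID 1: mirrored volume (mirror)

Characteristics:

Read performance improves; write performance drops slightly.

Usable space: 1 × the capacity of the smallest disk

Fault tolerant.

Minimum number of disks: 2

How it works:

Each disk is first divided into equal-sized chunks. When a file is written, its data is cut into pieces of the chunk size and a copy of every piece is written to each disk.

For example, when one file is saved, both disks hold a complete copy of it.

The figure below shows how it works: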

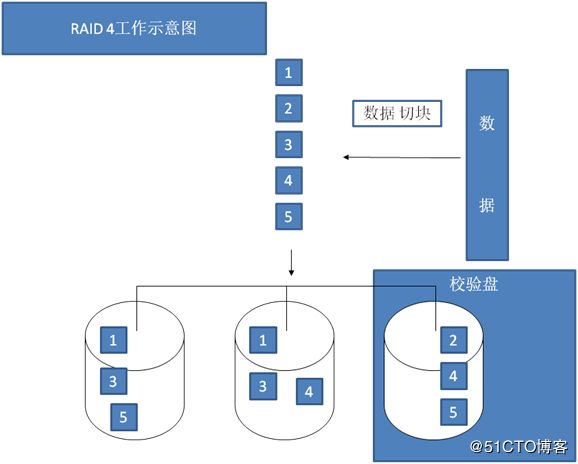

RAID 4

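Characteristics:

At least three disks are required.

With three disks, two hold data and one is a dedicated parity disk. Every write has to update the parity disk, so it carries a heavy load.

Fault tolerant: one disk may fail. With one disk failed, the array runs in degraded mode. It can still be read and written, but running this way is not recommended.

Usable space: (N-1) × the capacity of the smallest disk

How it works:

Two disks hold data and a third disk is dedicated to parity. When data is stored, the parity is computed with XOR.

For example, if disk 1 stores 1011 and disk 2 stores 1100, disk 3 stores 1011 XOR 1100 = 0111.

The figure below shows how it works: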

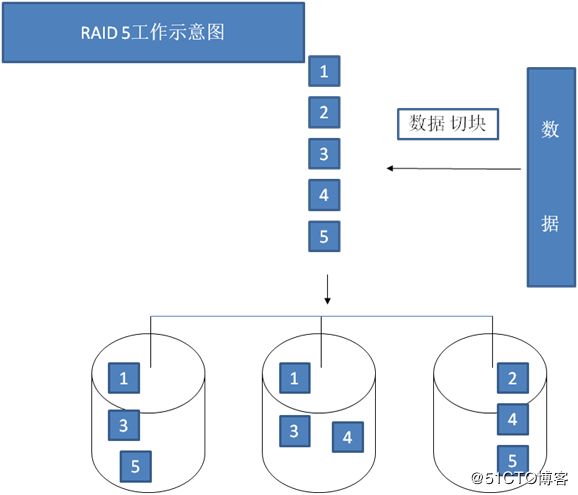
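The XOR example can be checked, and the recovery of a lost disk demonstrated, with a short shell sketch. The helper names `bin2dec`, `dec2bin`, and `xor_bits` are made up for this illustration, and the sketch works on short bit strings rather than real disk blocks.

```shell
# bin2dec BITS: convert a string of 0/1 characters to a decimal number.
bin2dec() {
    bits=$1
    val=0
    while [ -n "$bits" ]; do
        rest=${bits#?}             # everything after the first character
        first=${bits%"$rest"}      # the first character
        val=$(( val * 2 + first ))
        bits=$rest
    done
    echo "$val"
}

# dec2bin VALUE WIDTH: print VALUE as a binary string padded to WIDTH digits.
dec2bin() {
    n=$1
    width=$2
    out=
    while [ "$width" -gt 0 ]; do
        out=$(( n % 2 ))$out
        n=$(( n / 2 ))
        width=$(( width - 1 ))
    done
    echo "$out"
}

# xor_bits A B: XOR two equal-length bit strings.
xor_bits() {
    a=$(bin2dec "$1")
    b=$(bin2dec "$2")
    dec2bin $(( a ^ b )) "${#1}"
}

parity=$(xor_bits 1011 1100)
echo "parity on disk 3: $parity"                        # prints 0111
# If disk 1 is lost, XOR the parity with the surviving data disk.
echo "rebuilt disk 1:   $(xor_bits "$parity" 1100)"     # prints 1011
```

Because XOR is its own inverse, the same operation that produces the parity also rebuilds whichever single block is missing.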

RAID 5

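Characteristics:

At least three disks are required.

The disks take turns holding the parity, so there is no dedicated parity disk.

Fault tolerant: one disk may fail. With one disk failed, the array runs in degraded mode. It can still be read and written, but running this way is not recommended.

Usable space: (N-1) × the capacity of the smallest disk

How it works:

Same as RAID 4, except that the disks take turns holding the parity.

The figure below shows how it works: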

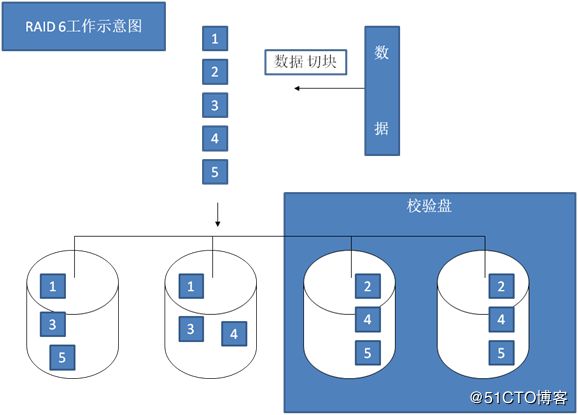
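How the parity position rotates can be sketched as follows. The sketch assumes the parity block starts on the last disk and moves one disk to the left with each stripe, which is how the left-symmetric layout that mdadm -D reports for RAID 5 later in this article is usually described. `parity_disk` is a hypothetical helper, and disks and stripes are numbered from 0.

```shell
# parity_disk STRIPE NDISKS
# Print the 0-based disk index holding the parity block of the given stripe,
# assuming parity starts on the last disk and shifts left by one per stripe.
parity_disk() {
    echo $(( $2 - 1 - $1 % $2 ))
}

# Walk through the first four stripes of a three-disk array.
s=0
while [ "$s" -lt 4 ]; do
    echo "stripe $s: parity on disk $(parity_disk "$s" 3)"
    s=$(( s + 1 ))
done
```

With three disks the parity lands on disks 2, 1, 0 and then wraps back to 2, so no single disk absorbs every parity update.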

RAID 6

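Characteristics:

At least 4 disks are required.

Read performance improves; writes pay the cost of maintaining two sets of parity.

Fault tolerant: up to 2 disks may fail without losing data.

Usable space: (N-2) × the capacity of the smallest disk

How it works:

Built from at least four disks. With four disks, each stripe holds two data blocks and two parity blocks, and the parity blocks rotate across the disks.

The figure below shows how it works: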

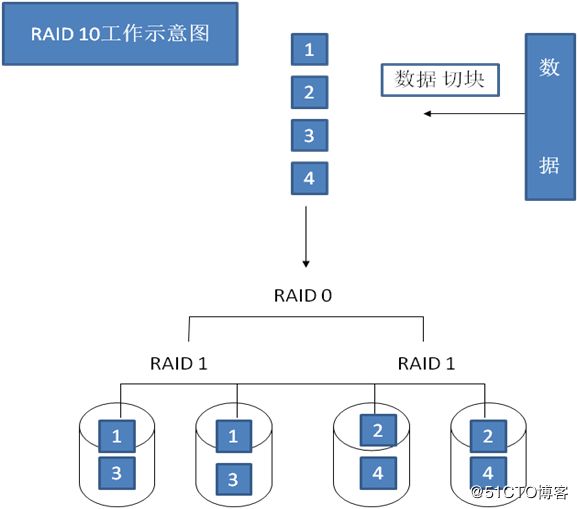

RAID 10

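Characteristics:

Read and write performance improves.

Fault tolerant: each RAID 1 group can lose one disk without affecting data integrity.

Usable space: N × the capacity of the smallest disk × 50%

At least 4 disks are required.

How it is built:

Suppose there are four disks, numbered 1, 2, 3, and 4. Disks 1 and 2 form one RAID 1 group and disks 3 and 4 form another. The two RAID 1 groups are then combined into a RAID 0.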

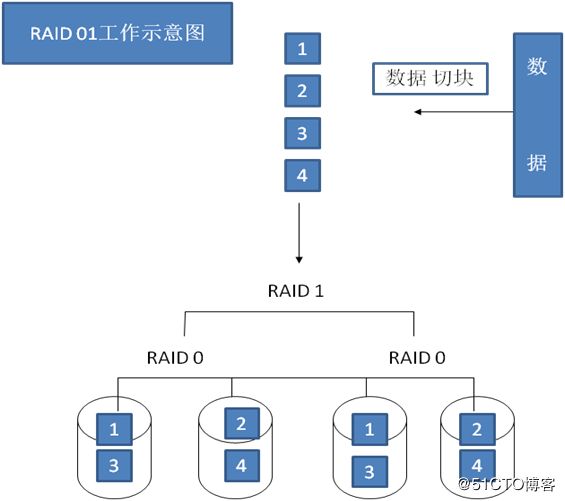

RAID 01

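Characteristics:

Read and write performance improves.

Fault tolerant: one entire RAID 0 group can fail without affecting data integrity.

Usable space: N × the capacity of the smallest disk × 50%

At least 4 disks are required.

How it is built:

Suppose there are four disks, numbered 1, 2, 3, and 4. Disks 1 and 2 form one RAID 0 group and disks 3 and 4 form another. The two RAID 0 groups are then combined into a RAID 1.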
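The usable-space rules listed for each level can be collected into one small function. This is a sketch rather than anything mdadm provides: `raid_usable` is a hypothetical helper, sizes are plain integers in whatever unit you pass in, and metadata overhead is ignored.

```shell
# raid_usable LEVEL NDISKS SMALLEST
# Print the usable capacity of an array, given the RAID level, the number of
# member disks, and the capacity of the smallest member.
raid_usable() {
    level=$1
    n=$2
    size=$3
    case $level in
        0)     echo $(( n * size )) ;;
        1)     echo "$size" ;;
        4|5)   echo $(( (n - 1) * size )) ;;
        6)     echo $(( (n - 2) * size )) ;;
        10|01) echo $(( n * size / 2 )) ;;
        *)     echo "unknown level: $level" >&2; return 1 ;;
    esac
}

# Four 1535 MiB members in RAID 10, and three in RAID 5.
raid_usable 10 4 1535    # prints 3070
raid_usable 5 3 1535     # prints 3070
```

The 1535 MiB figure is the member size that mdadm -D reports in the examples later in this article, and 3070 MiB matches the 3.00 GiB array size shown there.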

4. Implementing software RAID (using mdadm)

mdadm - manage MD devices aka Linux Software RAID

SYNOPSIS

mdadm [mode] <raiddevice> [options] <component-devices>

Supported RAID levels: Linux supports LINEAR md devices, RAID0 (striping), RAID1 (mirroring), RAID4, RAID5, RAID6, RAID10, MULTIPATH, FAULTY, and CONTAINER.

MODES:

Create: -C Create a new array

Assemble: -A Assemble a pre-existing array

Monitor: -F Select Monitor mode

Manage: -f, -r, -a

Grow: -G Change the size or shape of an active array

For Misc mode:

-S, --stop deactivate array, releasing all resources.

-D, --detail Print details of one or more md devices.

--zero-superblock

If the device contains a valid md superblock, the block is overwritten with zeros. With --force the block where the superblock would be is overwritten even if it doesn't appear to be valid.

For Manage mode:

-a, --add hot-add listed devices.

-r, --remove remove listed devices. They must not be active. i.e. they should be failed or spare devices.

-f, --fail Mark listed devices as faulty.

OPTIONS:

For create, grow:

-c, --chunk=

Specify chunk size of kilobytes. The default when creating an array is 512KB. RAID4, RAID5, RAID6, and RAID10 require the chunk size to be a power of 2. In any case it must be a multiple of 4KB.

A suffix of 'K', 'M' or 'G' can be given to indicate Kilobytes, Megabytes or Gigabytes respectively.

-n, --raid-devices=

Specify the number of active devices in the array.

This, plus the number of spare devices (see below) must equal the number of component-devices (including "missing" devices) that are listed on the command line for --create.

Setting a value of 1 is probably a mistake and so requires that --force be specified first. A value of 1 will then be allowed for linear, multipath, RAID0 and RAID1. It is never allowed for RAID4, RAID5 or RAID6.

This number can only be changed using --grow for RAID1, RAID4, RAID5 and RAID6 arrays, and only on kernels which provide the necessary support.

-l, --level=

Set RAID level. When used with --create, options are: linear, raid0, 0, stripe, raid1, 1, mirror, raid4, 4, raid5, 5, raid6, 6, raid10, 10, multipath, mp, faulty, container. Obviously some of these are synonymous.

Can be used with --grow to change the RAID level in some cases. See LEVEL CHANGES below.

-a, --auto{=yes,md,mdp,part,p}{NN}

Instruct mdadm how to create the device file if needed, possibly allocating an unused minor number.

If --auto is not given on the command line or in the config file, then the default will be --auto=yes.

-a, --add

This option can be used in Grow mode in two cases.

-x, --spare-devices=

Specify the number of spare (eXtra) devices in the initial array. Spares can also be added and removed later.

The number of component devices listed on the command line must equal the number of RAID devices plus the number of spare devices.
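The counting rule shared by -n and -x can be checked before running mdadm. The function below is a sketch of that check only. `check_device_count` is a hypothetical helper, it never touches the devices, and the device names are placeholders taken from the first example that follows.

```shell
# check_device_count RAID_DEVICES SPARE_DEVICES DEVICE...
# Succeed only if the number of listed devices equals the number of active
# devices (-n) plus the number of spares (-x).
check_device_count() {
    raid=$1
    spare=$2
    shift 2
    if [ "$#" -eq $(( raid + spare )) ]; then
        echo "ok: $# devices listed"
    else
        echo "mismatch: expected $(( raid + spare )) devices, got $#"
        return 1
    fi
}

# Mirrors "-n 2 -x 1" with three component devices.
check_device_count 2 1 /dev/sdc /dev/sdd /dev/sde    # prints ok: 3 devices listed
```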

Options that are not mode-specific:

-s, --scan

Scan config file or /proc/mdstat for missing information.

Example 1: Create a RAID 1 device with 1.5 GB of usable space, a 128 KB chunk size, an ext4 filesystem, and one spare disk, mounted automatically on /backup at boot.

[root@localhost ~]# mdadm -C /dev/md0 -l 1 -n 2 -x 1 -c 128K /dev/sd{c,d,e}

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md0 started.

[root@localhost ~]# mdadm -D /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Sun Feb 25 20:07:03 2018

Raid Level : raid1

Array Size : 1571840 (1535.00 MiB 1609.56 MB)

Used Dev Size : 1571840 (1535.00 MiB 1609.56 MB)

Raid Devices : 2

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sun Feb 25 20:07:11 2018

State : clean

Active Devices : 2

Working Devices : 3

Failed Devices : 0

Spare Devices : 1

Consistency Policy : resync

Name : localhost.localdomain:0 (local to host localhost.localdomain)

UUID : c3bdff1b:81d61bc6:2fc3c3a1:7bc1b94d

Events : 17

Number Major Minor RaidDevice State

0 8 32 0 active sync /dev/sdc

1 8 48 1 active sync /dev/sdd

2 8 64 - spare /dev/sde

[root@localhost ~]# mkfs.ext4 /dev/md0

mke2fs 1.42.9 (28-Dec-2013)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=0 blocks, Stripe width=0 blocks

98304 inodes, 392960 blocks

19648 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=402653184

12 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912

Allocating group tables: done

Writing inode tables: done

Creating journal (8192 blocks): done

Writing superblocks and filesystem accounting information: done

[root@localhost ~]# mkdir -v /backup

mkdir: created directory ‘/backup’

[root@localhost ~]# blkid /dev/md0

/dev/md0: UUID="0eed7df3-35e2-4bd1-8c05-9a9a8081d8da" TYPE="ext4"

Edit /etc/fstab and add the following line:

UUID=0eed7df3-35e2-4bd1-8c05-9a9a8081d8da /backup ext4 defaults 0 0
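The step from the blkid output to the fstab entry can be scripted. The following is a minimal sketch under two assumptions: the blkid line has the UUID field directly after the device name, as in the output above, and the entry uses the same `defaults 0 0` options as this article. `blkid_uuid` and `fstab_line` are hypothetical helper names, and the sketch only prints the line, it does not edit /etc/fstab.

```shell
# blkid_uuid: read one line of blkid output on stdin and print the value of
# the UUID field that directly follows the device name.
blkid_uuid() {
    sed -n 's/^[^ ]* UUID="\([^"]*\)".*/\1/p'
}

# fstab_line UUID MOUNTPOINT FSTYPE: print an fstab entry with default options.
fstab_line() {
    printf 'UUID=%s %s %s defaults 0 0\n' "$1" "$2" "$3"
}

# Sample line copied from the blkid output above.
sample='/dev/md0: UUID="0eed7df3-35e2-4bd1-8c05-9a9a8081d8da" TYPE="ext4"'
uuid=$(printf '%s\n' "$sample" | blkid_uuid)
fstab_line "$uuid" /backup ext4
```

Mounting by UUID rather than by /dev/mdN keeps the entry valid even if the array is assembled under a different device name after a reboot.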

[root@localhost ~]# mount -a

# Mount every filesystem listed in /etc/fstab

[root@localhost ~]# df /backup/

# Check the size of /backup

Filesystem 1K-blocks Used Available Use% Mounted on

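/dev/md0 1514352 4608 1414768 1% /backup

Example 2: Create a RAID 10 device with 10 GB of usable space, a 256 KB chunk size, and an ext4 filesystem. (The demo disks used here are about 1.5 GB each, so the array built below comes out at 3 GiB.)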

Method 1: create the RAID 10 directly

[root@localhost ~]# mdadm -C /dev/md1 -n 4 -l 10 -c 256 /dev/sd{c..f}

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1] [raid6] [raid5] [raid4] [raid10]

md1 : active raid10 sdf[3] sde[2] sdd[1] sdc[0]

3143680 blocks super 1.2 256K chunks 2 near-copies [4/4] [UUUU]

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Sun Feb 25 20:21:57 2018

Raid Level : raid10

Array Size : 3143680 (3.00 GiB 3.22 GB)

Used Dev Size : 1571840 (1535.00 MiB 1609.56 MB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Feb 25 20:22:12 2018

State : clean

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Layout : near=2

Chunk Size : 256K

Consistency Policy : resync

Name : localhost.localdomain:1 (local to host localhost.localdomain)

UUID : a573eb7d:018a1c9b:1e65917b:f7c48894

Events : 17

Number Major Minor RaidDevice State

0 8 32 0 active sync set-A /dev/sdc

1 8 48 1 active sync set-B /dev/sdd

2 8 64 2 active sync set-A /dev/sde

3 8 80 3 active sync set-B /dev/sdf

[root@localhost ~]# mke2fs -t ext4 /dev/md1

mke2fs 1.42.9 (28-Dec-2013)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=64 blocks, Stripe width=128 blocks

196608 inodes, 785920 blocks

39296 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=805306368

24 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912

Allocating group tables: done

Writing inode tables: done

Creating journal (16384 blocks): done

Writing superblocks and filesystem accounting information: done
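The `Stride=64 blocks, Stripe width=128 blocks` line in the mke2fs output can be reproduced with simple arithmetic. The sketch below assumes that stride is the chunk size expressed in filesystem blocks and that stripe width is the stride multiplied by the number of data-bearing disks (2 for this four-disk RAID 10 with two copies). That mke2fs derives the values exactly this way is an assumption, and both function names are hypothetical.

```shell
# ext4_stride CHUNK_KIB BLOCK_BYTES
# Print the chunk size expressed in filesystem blocks.
ext4_stride() {
    echo $(( $1 * 1024 / $2 ))
}

# ext4_stripe_width CHUNK_KIB BLOCK_BYTES DATA_DISKS
# Print the stride multiplied by the number of data-bearing disks.
ext4_stripe_width() {
    echo $(( $1 * 1024 / $2 * $3 ))
}

# 256 KiB chunks, 4096-byte blocks, 2 data-bearing disks.
ext4_stride 256 4096            # prints 64
ext4_stripe_width 256 4096 2    # prints 128
```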

[root@localhost ~]# blkid /dev/md1

/dev/md1: UUID="a3e591d6-db9e-40b0-b61a-69c1a69b2db4" TYPE="ext4"

Method 2: create two RAID 1 arrays first, then build a RAID 0 from them

[root@localhost ~]# mdadm -C /dev/md1 -n 2 -l 1 /dev/sdc /dev/sdd

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

[root@localhost ~]# mdadm -C /dev/md2 -n 2 -l 1 /dev/sde /dev/sdf

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md2 started.

[root@localhost ~]# mdadm -C /dev/md3 -n 2 -l 0 /dev/md{1,2}

mdadm: /dev/md1 appears to contain an ext2fs file system

size=3143680K mtime=Thu Jan 1 08:00:00 1970

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md3 started.

Example 3: Assemble an existing array

Method 1: name the array and its member devices

[root@localhost ~]# mdadm -S /dev/md3

mdadm: stopped /dev/md3

[root@localhost ~]# mdadm -A /dev/md3 /dev/md1 /dev/md2

mdadm: /dev/md3 has been started with 2 drives.

[root@localhost ~]# mdadm -D /dev/md3

/dev/md3:

Version : 1.2

Creation Time : Sun Feb 25 20:28:23 2018

Raid Level : raid0

Array Size : 3141632 (3.00 GiB 3.22 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Feb 25 20:28:23 2018

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Consistency Policy : none

Name : localhost.localdomain:3 (local to host localhost.localdomain)

UUID : aac6a917:944b3d60:f901a2cc:a1e3fd13

Events : 0

Number Major Minor RaidDevice State

0 9 1 0 active sync /dev/md1

1 9 2 1 active sync /dev/md2

Method 2: scan with the -s option

[root@localhost ~]# mdadm -S /dev/md0

mdadm: stopped /dev/md0

[root@localhost ~]# mdadm -A -s

mdadm: /dev/md/0 has been started with 4 drives.

[root@localhost ~]# ls /dev/md*

/dev/md0

/dev/md:

0

Method 3: record the arrays in /etc/mdadm.conf, then assemble by name

[root@localhost ~]# mdadm -D -s > /etc/mdadm.conf

# Save the array information to /etc/mdadm.conf

[root@localhost ~]# cat /etc/mdadm.conf

ARRAY /dev/md1 metadata=1.2 name=localhost.localdomain:1 UUID=2c35b0c6:d7a07385:bedeea30:cef62b46

ARRAY /dev/md2 metadata=1.2 name=localhost.localdomain:2 UUID=48d4ad71:6f9f6565:a62447ba:2a7ce6ec

ARRAY /dev/md3 metadata=1.2 name=localhost.localdomain:3 UUID=aac6a917:944b3d60:f901a2cc:a1e3fd13

[root@localhost ~]# mdadm -S /dev/md3

# Stop /dev/md3

mdadm: stopped /dev/md3

[root@localhost ~]# mdadm -A /dev/md3

# Assemble /dev/md3

mdadm: /dev/md3 has been started with 2 drives.

[root@localhost ~]# cat /proc/mdstat

# Check the md status

Personalities : [raid1] [raid6] [raid5] [raid4] [raid10] [raid0]

md3 : active raid0 md1[0] md2[1]

3141632 blocks super 1.2 512k chunks

md2 : active raid1 sdf[1] sde[0]

1571840 blocks super 1.2 [2/2] [UU]

md1 : active raid1 sdd[1] sdc[0]

1571840 blocks super 1.2 [2/2] [UU]

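unused devices: <none>

Example 4: Create a RAID 50 from 7 disks, one of them a hot spare, with a final capacity of 6 GB and a 1 MB chunk size. The hot spare must be shared between the underlying arrays. Format the result as ext4 and mount it automatically on /magedata at boot. (In the run below the second group of disks is only about 1 GB each, so the finished array is roughly 5 GiB.)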

# Create the first RAID 5: 3 active disks and 1 hot spare

[root@localhost ~]# mdadm -C /dev/md1 -n 3 -l 5 -x 1 /dev/sd{c,d,e,f}

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

# Create the second RAID 5: 3 active disks

[root@localhost ~]# mdadm -C /dev/md2 -n 3 -l 5 /dev/sd{g,h,i}

mdadm: largest drive (/dev/sdg) exceeds size (1047552K) by more than 1%

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md2 started.

# Build a RAID 0 from the two RAID 5 arrays, with a 1 MB chunk size

[root@localhost ~]# mdadm -C /dev/md3 -n 2 -l 0 -c 1M /dev/md{1,2}

mdadm: /dev/md1 appears to contain an ext2fs file system

size=3143680K mtime=Thu Jan 1 08:00:00 1970

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md3 started.

# Check the current md status

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1] [raid6] [raid5] [raid4] [raid10] [raid0]

md3 : active raid0 md2[1] md1[0]

5234688 blocks super 1.2 1024k chunks

md2 : active raid5 sdi[3] sdh[1] sdg[0]

2095104 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

md1 : active raid5 sde[4] sdf[3](S) sdd[1] sdc[0]

3143680 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

unused devices: <none>

# Create a filesystem on md3

[root@localhost ~]# mkfs.ext4 /dev/md3

mke2fs 1.42.9 (28-Dec-2013)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=256 blocks, Stripe width=512 blocks

327680 inodes, 1308672 blocks

65433 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=1340080128

40 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736

Allocating group tables: done

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

# Look up the UUID of /dev/md3

[root@localhost ~]# blkid /dev/md3

/dev/md3: UUID="22add5f6-89fe-47c9-a867-a86d952ecf0b" TYPE="ext4"

# Create the mount point

[root@localhost ~]# mkdir -v /magedata

mkdir: created directory ‘/magedata’

# Edit /etc/fstab and add the following line:

UUID=22add5f6-89fe-47c9-a867-a86d952ecf0b /magedata ext4 defaults 0 0

# Save the current md state to /etc/mdadm.conf

[root@localhost ~]# mdadm -D -s > /etc/mdadm.conf

# Show the contents of /etc/mdadm.conf

[root@localhost ~]# cat /etc/mdadm.conf

ARRAY /dev/md1 metadata=1.2 spares=1 name=localhost.localdomain:1 UUID=f9a252f3:c315e0d6:0fa2986a:e195a8ab

ARRAY /dev/md2 metadata=1.2 name=localhost.localdomain:2 UUID=4c5641d3:965a42dd:e7f26d34:9557d42e

ARRAY /dev/md3 metadata=1.2 name=localhost.localdomain:3 UUID=a5ee4d2f:8512c453:0faadd80:23d98b17

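# Edit the file so that it reads as follows. The additions are spares=1 on the md2 line, spare-group=magedu on the md1 and md2 lines, and the MAILADDR line: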

ARRAY /dev/md1 metadata=1.2 spares=1 name=localhost.localdomain:1 UUID=f9a252f3:c315e0d6:0fa2986a:e195a8ab spare-group=magedu

ARRAY /dev/md2 metadata=1.2 spares=1 name=localhost.localdomain:2 UUID=4c5641d3:965a42dd:e7f26d34:9557d42e spare-group=magedu

ARRAY /dev/md3 metadata=1.2 name=localhost.localdomain:3 UUID=a5ee4d2f:8512c453:0faadd80:23d98b17

MAILADDR root@localhost
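The spare-group part of that edit can also be done with a small filter instead of by hand. This is only a sketch: `add_spare_group` is a hypothetical helper that reads mdadm.conf text on standard input and writes the modified text to standard output. It does not add `spares=1` or the MAILADDR line, and it does not modify /etc/mdadm.conf itself.

```shell
# add_spare_group GROUP DEVICE...
# Append "spare-group=GROUP" to every ARRAY line whose device is listed.
add_spare_group() {
    group=$1
    shift
    awk -v group="$group" -v devs="$*" '
        BEGIN {
            # Build a lookup table of the devices that should share spares.
            n = split(devs, d, " ")
            for (i = 1; i <= n; i++) want[d[i]] = 1
        }
        $1 == "ARRAY" && ($2 in want) { $0 = $0 " spare-group=" group }
        { print }
    '
}

# Two shortened sample lines: only md1 is in the list, so only it changes.
printf '%s\n' \
    'ARRAY /dev/md1 metadata=1.2 spares=1' \
    'ARRAY /dev/md3 metadata=1.2' | add_spare_group magedu /dev/md1 /dev/md2
```

Arrays that carry the same spare-group name are the ones allowed to hand a hot spare to each other, which is why md1 and md2 get the tag and md3 does not.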

# Mark /dev/sdg as faulty

[root@localhost ~]# mdadm /dev/md2 -f /dev/sdg

mdadm: set /dev/sdg faulty in /dev/md2

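# Check /dev/md2. It had no hot spare before, but after /dev/sdg was marked faulty the hot spare from /dev/md1 (/dev/sdf) moved over to /dev/md2. Spares are moved between arrays in the same spare-group by mdadm running in monitor mode.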

[root@localhost ~]# mdadm -D /dev/md2

/dev/md2:

Version : 1.2

Creation Time : Sun Feb 25 21:39:04 2018

Raid Level : raid5

Array Size : 2095104 (2046.00 MiB 2145.39 MB)

Used Dev Size : 1047552 (1023.00 MiB 1072.69 MB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Feb 25 22:03:11 2018

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 1

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost.localdomain:2 (local to host localhost.localdomain)

UUID : 4c5641d3:965a42dd:e7f26d34:9557d42e

Events : 39

Number Major Minor RaidDevice State

4 8 80 0 active sync /dev/sdf

1 8 112 1 active sync /dev/sdh

3 8 128 2 active sync /dev/sdi

0 8 96 - faulty /dev/sdg
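Spotting the failed member in that listing can be automated. The filter below is a sketch that assumes the device table format shown above, where the state is the second-to-last column and the device path is the last. `faulty_members` is a hypothetical helper name.

```shell
# faulty_members: read `mdadm -D` output on stdin and print the device path
# of every member whose state column is "faulty".
faulty_members() {
    awk 'NF >= 2 && $(NF - 1) == "faulty" { print $NF }'
}

# Two rows in the same shape as the device table above.
printf '%s\n' \
    '1 8 112 1 active sync /dev/sdh' \
    '0 8 96 - faulty /dev/sdg' | faulty_members    # prints /dev/sdg
```

On a live system the same filter could be fed directly, for example `mdadm -D /dev/md2 | faulty_members`, and each device it prints is a candidate for removal with `mdadm /dev/md2 -r`.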