I. Preparation

1. Offline deployment outline

- MySQL offline deployment

- CM offline deployment

- Offline Parcel repository deployment

2. Planning

Linux version: CentOS 7.2

| Node | MySQL | Offline Parcel repository | CM service processes | Big data components |
|---|---|---|---|---|
| hadoop001 | MySQL | Parcel | Alert Publisher Event Server | NN RM DN NM ZK |
| hadoop002 | | | Alert Publisher Event Server | DN NM ZK |
| hadoop003 | | | Host Monitor Service Monitor | DN NM ZK |

3. Download sources

- CM

cloudera-manager-centos7-cm5.16.1_x86_64.tar.gz

- Parcel

CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel

CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha1

manifest.json

- JDK

JDK 8

Download jdk-8u202-linux-x64.tar.gz

- MySQL

MySQL 5.7

Download mysql-5.7.26-el7-x86_64.tar.gz

- MySQL JDBC jar

mysql-connector-java-5.1.47.jar

After downloading, rename the jar to drop the version number:

mv mysql-connector-java-5.1.47.jar mysql-connector-java.jar

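II. Cluster node initialization

1. Buy three virtual machines on Alibaba Cloud

(minimum configuration: 2 cores, 8 GB RAM), with pay-as-you-go billing

CentOS 7.2

2. hosts file on the local laptop (Windows)

Path: C:\Windows\System32\drivers\etc\hosts

39.97.188.249 hadoop001 hadoop001

39.97.225.112 hadoop002 hadoop002

39.97.224.68 hadoop003 hadoop003

Note: these IPs are the public IPs of your virtual machines.

3. Configure the hosts file on all nodes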

echo '172.17.144.104 hadoop001' >> /etc/hosts

echo '172.17.144.103 hadoop002' >> /etc/hosts

echo '172.17.144.105 hadoop003' >> /etc/hosts

# Check

cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.17.144.104 hadoop001

172.17.144.103 hadoop002

172.17.144.105 hadoop003

Note: these IPs are the private (internal) IPs.
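
Running the echo commands above a second time appends duplicate entries. A minimal sketch of an idempotent variant follows; add_host_entry is a hypothetical helper name, not part of any tool used in this guide, and the demo writes to a scratch file rather than the live /etc/hosts.

```shell
# Append "IP hostname" to a hosts file only if that hostname is not mapped yet.
# add_host_entry is a hypothetical helper (an assumption for illustration).
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # Look for the hostname as a whole field on any non-comment line.
  if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file" 2>/dev/null; then
    echo "skip: $name already present in $file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
    echo "added: $ip $name"
  fi
}

# Demo against a scratch copy instead of /etc/hosts:
hosts_demo="$(mktemp)"
printf '127.0.0.1 localhost\n' > "$hosts_demo"
add_host_entry 172.17.144.104 hadoop001 "$hosts_demo"
add_host_entry 172.17.144.104 hadoop001 "$hosts_demo"   # second call changes nothing
```

On a real node the third argument can be omitted so the helper targets /etc/hosts.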

4.关闭所有节点防火墙及清空规则

云主机

我们使用的云主机,无论阿里云还是腾讯云的防火墙都是关闭的,所以我们不需要关闭服务器的防火墙。但是,我们需要检查下是否自动开启了web访问端口,如果没有,则自己添加

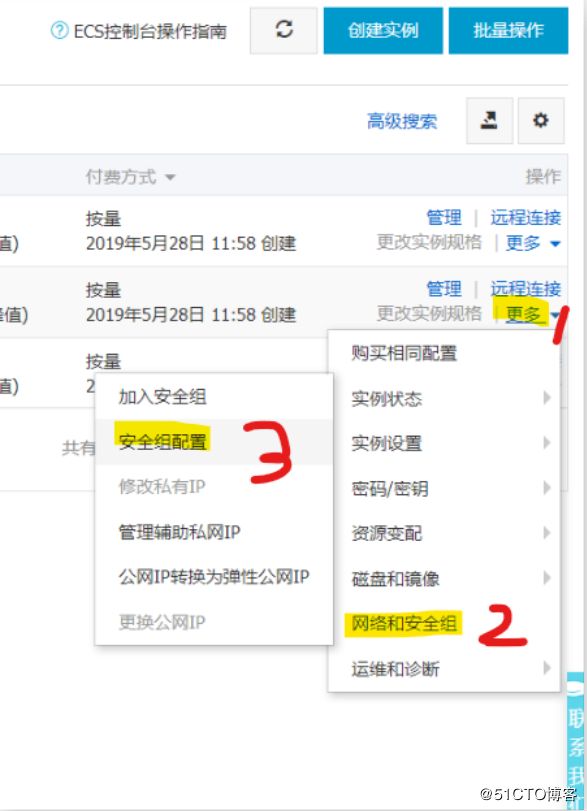

(1)打开安全组配置

进入之后点击配置规则

(2)添加安全组规则

注意:

1.点击蓝色感叹号会有规则说明

2.授权对象如果在公司内需要设置网段,就按照上图,将ip网段规定好。不限制的话就直接0.0.0.0/0内网服务器

最好在内部服务器部署时就将防火墙关闭,如果不行就暂时关闭,等部署成功再开启

systemctl stop firewalldsystemctl disable firewalldiptables -F 5.关闭所有节点selinux

阿里云服务器已经将selinux关闭了,所以不用配置

自己的服务器很可能会开启selinux,这样就需要关闭了

将SELINUX=disabled设置进去,之后重启才会生效
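
The same edit can be made without opening an editor. The sketch below assumes the stock layout of /etc/selinux/config (one active SELINUX= line); disable_selinux_in is a hypothetical helper name, and the demo runs on a scratch file.

```shell
# Set SELINUX=disabled in an SELinux config file (default: /etc/selinux/config).
# disable_selinux_in is a hypothetical helper (an assumption for illustration).
disable_selinux_in() {
  local file="${1:-/etc/selinux/config}"
  # Only the active SELINUX= line is rewritten; comment lines and
  # SELINUXTYPE= do not match the pattern and stay untouched.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
  grep '^SELINUX=' "$file"
}

# Dry run on a scratch file that mimics the stock layout:
selinux_demo="$(mktemp)"
printf '# SELINUX= can take one of these three values:\nSELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$selinux_demo"
disable_selinux_in "$selinux_demo"
```

Until the reboot, setenforce 0 switches a running system to permissive mode, which is usually enough to continue the installation.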

vim /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

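SELINUXTYPE=targeted

6. Set the same time zone and synchronize clocks on all nodes

Alibaba Cloud already keeps the time zone and time of its nodes in sync.

Below we walk through time zone and clock synchronization as it would be done in a company environment.

6.1 Time zone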

[root@hadoop001 ~]# timedatectl

Local time: Tue 2019-05-28 15:37:53 CST

Universal time: Tue 2019-05-28 07:37:53 UTC

RTC time: Tue 2019-05-28 15:37:53

Time zone: Asia/Shanghai (CST, +0800)

NTP enabled: yes

NTP synchronized: yes

RTC in local TZ: yes

DST active: n/a

# Read the command's built-in help; learning to use it matters, and it saves a web search

[root@hadoop001 ~]# timedatectl --help

timedatectl [OPTIONS...] COMMAND ...

Query or change system time and date settings.

-h --help Show this help message

--version Show package version

--no-pager Do not pipe output into a pager

--no-ask-password Do not prompt for password

-H --host=[USER@]HOST Operate on remote host

-M --machine=CONTAINER Operate on local container

--adjust-system-clock Adjust system clock when changing local RTC mode

Commands:

status Show current time settings

set-time TIME Set system time

set-timezone ZONE Set system time zone

list-timezones Show known time zones

set-local-rtc BOOL Control whether RTC is in local time

set-ntp BOOL Control whether NTP is enabled

# List the available time zones

[root@hadoop001 ~]# timedatectl list-timezones

Africa/Abidjan

Africa/Accra

Africa/Addis_Ababa

Africa/Algiers

Africa/Asmara

Africa/Bamako

# Set the Asia/Shanghai time zone on all nodes

[root@hadoop001 ~]# timedatectl set-timezone Asia/Shanghai

[root@hadoop002 ~]# timedatectl set-timezone Asia/Shanghai

[root@hadoop003 ~]# timedatectl set-timezone Asia/Shanghai

6.2 Time

# Install ntp on all nodes

[root@hadoop001 ~]# yum install -y ntp

# Use hadoop001 as the NTP master node

[root@hadoop001 ~]# vi /etc/ntp.conf

#time

server 0.asia.pool.ntp.org

server 1.asia.pool.ntp.org

server 2.asia.pool.ntp.org

server 3.asia.pool.ntp.org

# Fall back to the local hardware clock when external time sources are unavailable

server 127.127.1.0 iburst local clock

# Which subnet is allowed to sync time from this node; change it to your own private subnet

restrict 172.17.144.0 mask 255.255.255.0 nomodify notrap

# Start ntpd and check its status

[root@hadoop001 ~]# systemctl start ntpd

[root@hadoop001 ~]# systemctl status ntpd

● ntpd.service - Network Time Service

Loaded: loaded (/usr/lib/systemd/system/ntpd.service; enabled; vendor preset: disabled)

Active: active (running) since Sat 2019-05-11 10:15:00 CST; 11min ago

Main PID: 18518 (ntpd)

CGroup: /system.slice/ntpd.service

└─18518 /usr/sbin/ntpd -u ntp:ntp -g

May 11 10:15:00 hadoop001 systemd[1]: Starting Network Time Service...

May 11 10:15:00 hadoop001 ntpd[18518]: proto: precision = 0.088 usec

May 11 10:15:00 hadoop001 ntpd[18518]: 0.0.0.0 c01d 0d kern kernel time sync enabled

May 11 10:15:00 hadoop001 systemd[1]: Started Network Time Service.

# Verify

[root@hadoop001 ~]# ntpq -p

remote refid st t when poll reach delay offset jitter

==============================================================================

LOCAL(0) .LOCL. 10 l 726 64 0 0.000 0.000 0.000
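
In the sample output above, the reach column for LOCAL(0) is 0. reach is an octal shift register of recent poll results, so 0 means no poll has succeeded yet (often simply because ntpd has just started). A small sketch for spotting such peers is shown here; unreachable_peers is a hypothetical helper name and it assumes the ten-column layout printed above.

```shell
# Print peers from `ntpq -p` output (read on stdin) whose reach register is 0.
# unreachable_peers is a hypothetical helper (an assumption for illustration).
unreachable_peers() {
  # Skip the two header lines; column 7 is "reach" in the layout shown above.
  awk 'NR > 2 && $7 == 0 { print $1 }'
}

# Fed with the sample output from this section:
printf '%s\n' \
  '     remote           refid      st t when poll reach   delay   offset  jitter' \
  '==============================================================================' \
  ' LOCAL(0)        .LOCL.          10 l  726   64    0    0.000    0.000   0.000' \
  | unreachable_peers
```

On the master node this would be run as ntpq -p | unreachable_peers; an empty result means every listed source has answered at least once recently.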

# On the other (slave) nodes, stop and disable the ntpd service

[root@hadoop002 ~]# systemctl stop ntpd

[root@hadoop002 ~]# systemctl disable ntpd

Removed symlink /etc/systemd/system/multi-user.target.wants/ntpd.service.

[root@hadoop002 ~]# /usr/sbin/ntpdate hadoop001

11 May 10:29:22 ntpdate[9370]: adjust time server 172.19.7.96 offset 0.000867 sec

# Sync time from hadoop001 every day at midnight

[root@hadoop002 ~]# crontab -e

00 00 * * * /usr/sbin/ntpdate hadoop001

[root@hadoop003 ~]# systemctl stop ntpd

[root@hadoop003 ~]# systemctl disable ntpd

Removed symlink /etc/systemd/system/multi-user.target.wants/ntpd.service.

[root@hadoop003 ~]# /usr/sbin/ntpdate hadoop001

11 May 10:29:22 ntpdate[9370]: adjust time server 172.19.7.96 offset 0.000867 sec

# Sync time from hadoop001 every day at midnight

[root@hadoop003 ~]# crontab -e

00 00 * * * /usr/sbin/ntpdate hadoop001

7. JDK deployment

mkdir /usr/java

tar -xzvf jdk-8u45-linux-x64.tar.gz -C /usr/java/

# Be sure to fix the owner and group

chown -R root:root /usr/java/jdk1.8.0_45

[root@hadoop001 cdh5.16.1]# vim /etc/profile

export JAVA_HOME=/usr/java/jdk1.8.0_45

export PATH=${JAVA_HOME}/bin:${PATH}

source /etc/profile

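which java

If there are many nodes, prepare a single image template, finish the base setup on it, and then clone it to the other machines. (Ideally ask the ops team to do this.)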
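
Since every node needs the same JDK layout, a quick check can be run on each one after /etc/profile has been sourced. This is only a sketch: check_java_home is a hypothetical helper name, and its default path is the one used in the steps above.

```shell
# Report whether a directory looks like a usable JDK home.
# check_java_home is a hypothetical helper (an assumption for illustration).
check_java_home() {
  local home="${1:-/usr/java/jdk1.8.0_45}"
  if [ -x "$home/bin/java" ]; then
    echo "ok: $home/bin/java is executable"
  else
    echo "missing: $home/bin/java" >&2
    return 1
  fi
}

# On a node, for example:
#   check_java_home "$JAVA_HOME" && java -version
```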

8. Offline deployment of MySQL 5.7 on hadoop001

(following production standards)

8.1 Extract and create directories

# Extract

[root@hadoop001 cdh5.16.1]# tar xzvf mysql-5.7.11-linux-glibc2.5-x86_64.tar.gz -C /usr/local/

# Change directory

[root@hadoop001 cdh5.16.1]# cd /usr/local/

# Rename the directory to mysql

[root@hadoop001 local]# mv mysql-5.7.11-linux-glibc2.5-x86_64/ mysql

# Create directories

[root@hadoop001 local]# mkdir mysql/arch mysql/data mysql/tmp

8.2 Create my.cnf

rm /etc/my.cnf

vim /etc/my.cnf

[client]

port = 3306

socket = /usr/local/mysql/data/mysql.sock

default-character-set=utf8mb4

[mysqld]

port = 3306

socket = /usr/local/mysql/data/mysql.sock

skip-slave-start

skip-external-locking

key_buffer_size = 256M

sort_buffer_size = 2M

read_buffer_size = 2M

read_rnd_buffer_size = 4M

query_cache_size= 32M

max_allowed_packet = 16M

myisam_sort_buffer_size=128M

tmp_table_size=32M

table_open_cache = 512

thread_cache_size = 8

wait_timeout = 86400

interactive_timeout = 86400

max_connections = 600

# Try number of CPU's*2 for thread_concurrency

#thread_concurrency = 32

#isolation level and default engine

default-storage-engine = INNODB

transaction-isolation = READ-COMMITTED

server-id = 1739

basedir = /usr/local/mysql

datadir = /usr/local/mysql/data

pid-file = /usr/local/mysql/data/hostname.pid

#open performance schema

log-warnings

sysdate-is-now

binlog_format = ROW

log_bin_trust_function_creators=1

log-error = /usr/local/mysql/data/hostname.err

log-bin = /usr/local/mysql/arch/mysql-bin

expire_logs_days = 7

innodb_write_io_threads=16

relay-log = /usr/local/mysql/relay_log/relay-log

relay-log-index = /usr/local/mysql/relay_log/relay-log.index

relay_log_info_file= /usr/local/mysql/relay_log/relay-log.info

log_slave_updates=1

gtid_mode=OFF

enforce_gtid_consistency=OFF

# slave

slave-parallel-type=LOGICAL_CLOCK

slave-parallel-workers=4

master_info_repository=TABLE

relay_log_info_repository=TABLE

relay_log_recovery=ON

#other logs

#general_log =1

#general_log_file = /usr/local/mysql/data/general_log.err

#slow_query_log=1

#slow_query_log_file=/usr/local/mysql/data/slow_log.err

#for replication slave

sync_binlog = 500

#for innodb options

innodb_data_home_dir = /usr/local/mysql/data/

innodb_data_file_path = ibdata1:1G;ibdata2:1G:autoextend

innodb_log_group_home_dir = /usr/local/mysql/arch

innodb_log_files_in_group = 4

innodb_log_file_size = 1G

innodb_log_buffer_size = 200M

# Adjust the pool size to production needs

innodb_buffer_pool_size = 2G

#innodb_additional_mem_pool_size = 50M #deprecated in 5.6

tmpdir = /usr/local/mysql/tmp

innodb_lock_wait_timeout = 1000

#innodb_thread_concurrency = 0

innodb_flush_log_at_trx_commit = 2

innodb_locks_unsafe_for_binlog=1

#innodb io features: add for mysql5.5.8

performance_schema

innodb_read_io_threads=4

innodb-write-io-threads=4

innodb-io-capacity=200

#purge threads change default(0) to 1 for purge

innodb_purge_threads=1

innodb_use_native_aio=on

#case-sensitive file names and separate tablespace

innodb_file_per_table = 1

lower_case_table_names=1

[mysqldump]

quick

max_allowed_packet = 128M

[mysql]

no-auto-rehash

default-character-set=utf8mb4

[mysqlhotcopy]

interactive-timeout

[myisamchk]

key_buffer_size = 256M

sort_buffer_size = 256M

read_buffer = 2M

write_buffer = 2M

8.3 Create the group and user

[root@hadoop001 local]# groupadd -g 101 dba

[root@hadoop001 local]# useradd -u 514 -g dba -G root -d /usr/local/mysql mysqladmin

[root@hadoop001 local]# id mysqladmin

uid=514(mysqladmin) gid=101(dba) groups=101(dba),0(root)

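## Normally no password is set for mysqladmin; switch to it with sudo from root or an LDAP user

8.4 Copy the environment configuration files

Copy the environment configuration files (hidden files) into the mysqladmin user's home directory, so that the personal environment variables can be configured in the next step

cp /etc/skel/.* /usr/local/mysql

8.5 Configure environment variables

[root@hadoop001 local]# vi mysql/.bash_profile

# .bash_profile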

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

export MYSQL_BASE=/usr/local/mysql

export PATH=${MYSQL_BASE}/bin:$PATH

unset USERNAME

#stty erase ^H

# set umask to 022

umask 022

PS1=`uname -n`":"'$USER'":"'$PWD'":>"; export PS1

8.6 Set ownership and permissions, then switch to the mysqladmin user to install

[root@hadoop001 local]# chown mysqladmin:dba /etc/my.cnf

[root@hadoop001 local]# chmod 640 /etc/my.cnf

[root@hadoop001 local]# chown -R mysqladmin:dba /usr/local/mysql

[root@hadoop001 local]# chmod -R 755 /usr/local/mysql

8.7 Configure the service and start on boot

[root@hadoop001 local]# cd /usr/local/mysql

# Copy the service script into init.d and rename it to mysql

[root@hadoop001 mysql]# cp support-files/mysql.server /etc/rc.d/init.d/mysql

# Make it executable

[root@hadoop001 mysql]# chmod +x /etc/rc.d/init.d/mysql

# Remove the service

[root@hadoop001 mysql]# chkconfig --del mysql

# Add the service

[root@hadoop001 mysql]# chkconfig --add mysql

[root@hadoop001 mysql]# chkconfig --level 345 mysql on

8.8 Install libaio and initialize the MySQL database

[root@hadoop001 mysql]# yum -y install libaio

[root@hadoop001 mysql]# su - mysqladmin

Last login: Tue May 28 17:04:49 CST 2019 on pts/0

hadoop001:mysqladmin:/usr/local/mysql:>bin/mysqld \

> --defaults-file=/etc/my.cnf \

> --user=mysqladmin \

> --basedir=/usr/local/mysql/ \

> --datadir=/usr/local/mysql/data/ \

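> --initialize

If --initialize-insecure is used instead, the root@localhost account is created with an empty password. With --initialize, the account is created with a random password, which is written to the log-error file. (In 5.6 it was stored in ~/.mysql_secret, which is better hidden and easy to miss if you are not familiar with it.)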
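
Because the temporary password ends up in the error log, it can be pulled out with a one-liner instead of reading the file by eye. The sketch below assumes the log line format produced by MySQL 5.7 ("A temporary password is generated for root@localhost: ..."); temp_root_password is a hypothetical helper name, and the password in the demo is made up.

```shell
# Extract the temporary root password from a MySQL 5.7 error log.
# temp_root_password is a hypothetical helper (an assumption for illustration);
# the default path is the log-error file configured in my.cnf above.
temp_root_password() {
  local log="${1:-/usr/local/mysql/data/hostname.err}"
  # Keep only the text after "root@localhost: " on the last matching line.
  sed -n 's/.*A temporary password is generated for root@localhost: //p' "$log" | tail -n 1
}

# Demo on a scratch log with a made-up password:
log_demo="$(mktemp)"
printf '%s\n' '2019-05-28T09:28:40.447701Z 1 [Note] A temporary password is generated for root@localhost: Example-Pass1' > "$log_demo"
temp_root_password "$log_demo"
```

Taking the last match matters if the data directory has been initialized more than once and the log holds several such lines.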

8.9 View the temporary password

# View the password

hadoop001:mysqladmin:/usr/local/mysql/data:>cat hostname.err |grep password

2019-05-28T09:28:40.447701Z 1 [Note] A temporary password is generated for root@localhost: J=

8.10 Start

hadoop001:mysqladmin:/usr/local/mysql:>/usr/local/mysql/bin/mysqld_safe --defaults-file=/etc/my.cnf &

[1] 21740

hadoop001:mysqladmin:/usr/local/mysql:>2019-05-28T09:38:16.127060Z mysqld_safe Logging to '/usr/local/mysql/data/hostname.err'.

2019-05-28T09:38:16.196799Z mysqld_safe Starting mysqld daemon with databases from /usr/local/mysql/data

# Press Enter twice

## Exit the mysqladmin user

## Find the mysql process ID

[root@hadoop001 mysql]# ps -ef|grep mysql

mysqlad+ 21740 1 0 17:38 pts/0 00:00:00 /bin/sh /usr/local/mysql/bin/mysqld_safe --defaults-file=/etc/my.cnf

mysqlad+ 22557 21740 0 17:38 pts/0 00:00:00 /usr/local/mysql/bin/mysqld --defaults-file=/etc/my.cnf --basedir=/usr/local/mysql --datadir=/usr/local/mysql/data --plugin-dir=/usr/local/mysql/lib/plugin --log-error=/usr/local/mysql/data/hostname.err --pid-file=/usr/local/mysql/data/hostname.pid --socket=/usr/local/mysql/data/mysql.sock --port=3306

root 22609 9194 0 17:39 pts/0 00:00:00 grep --color=auto mysql

## Look up the mysql port by process ID

[root@hadoop001 mysql]# netstat -nlp|grep 22557

# Switch to mysqladmin

[root@hadoop001 mysql]# su - mysqladmin

Last login: Tue May 28 17:24:45 CST 2019 on pts/0

hadoop001:mysqladmin:/usr/local/mysql:>

## Check whether mysql is running

hadoop001:mysqladmin:/usr/local/mysql:>service mysql status

MySQL running (22557)[ OK ]

8.11 Log in and change the user password

# Initial password

hadoop001:mysqladmin:/usr/local/mysql:>mysql -uroot -p'J=

mysql> alter user root@localhost identified by 'ruozedata123';

mysql> GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY 'ruozedata123' ;

# Flush privileges

mysql> flush privileges;

8.12 Restart

9. Create the CDH metadata database and user, and the amon service database and user

mysql> CREATE DATABASE `cmf` DEFAULT CHARACTER SET utf8;

mysql> GRANT ALL PRIVILEGES ON cmf.* TO 'cmf'@'%' IDENTIFIED BY 'ruozedata123' ;

mysql> create database amon default character set utf8;

mysql> GRANT ALL PRIVILEGES ON amon.* TO 'amon'@'%' IDENTIFIED BY 'ruozedata123' ;

-- Flush privileges

mysql> flush privileges;

10. Deploy the MySQL JDBC jar

[root@hadoop001 cdh5.16.1]# mkdir -p /usr/share/java

[root@hadoop001 cdh5.16.1]# ls -lh

total 3.5G

-rw-r--r-- 1 root root 2.0G May 15 10:01 CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel

-rw-r--r-- 1 root root 41 May 14 20:17 CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha1

-rw-r--r-- 1 root root 803M May 15 09:38 cloudera-manager-centos7-cm5.16.1_x86_64.tar.gz

-rw-r--r-- 1 root root 166M May 14 20:21 jdk-8u45-linux-x64.gz

-rw-r--r-- 1 root root 65K May 14 20:17 manifest.json

-rw-r--r-- 1 root root 523M May 15 09:28 mysql-5.7.11-linux-glibc2.5-x86_64.tar.gz

-rw-r--r-- 1 root root 984K May 15 09:10 mysql-connector-java-5.1.47.jar

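# The version number must be removed from the MySQL jar name, otherwise you will run into problems

[root@hadoop001 cdh5.16.1]# cp mysql-connector-java-5.1.47.jar /usr/share/java/mysql-connector-java.jar

III. CDH offline deployment

1. Deploy the CM Server and Agent

1.1 Create the directory and extract the archive on all nodes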

mkdir /opt/cloudera-manager

tar -zxvf cloudera-manager-centos7-cm5.16.1_x86_64.tar.gz -C /opt/cloudera-manager/

1.2 Modify config.ini on all nodes

On all nodes, point the agent configuration at the server node, hadoop001

sed -i "s/server_host=localhost/server_host=hadoop001/g" /opt/cloudera-manager/cm-5.16.1/etc/cloudera-scm-agent/config.ini

1.3 Modify the server configuration on the master node

vi /opt/cloudera-manager/cm-5.16.1/etc/cloudera-scm-server/db.properties

com.cloudera.cmf.db.type=mysql

com.cloudera.cmf.db.host=hadoop001

com.cloudera.cmf.db.name=cmf

com.cloudera.cmf.db.user=cmf

com.cloudera.cmf.db.password=ruozedata123

com.cloudera.cmf.db.setupType=EXTERNAL

1.4 Create the cloudera-scm user on all nodes

# Create cloudera-scm

useradd --system --home=/opt/cloudera-manager/cm-5.16.1/run/cloudera-scm-server/ --no-create-home --shell=/bin/false --comment "Cloudera SCM User" cloudera-scm

# Change the ownership of cloudera-manager

chown -R cloudera-scm:cloudera-scm /opt/cloudera-manager

1.5 Change the owner and group of cloudera-manager on all nodes

chown -R cloudera-scm:cloudera-scm /opt/cloudera-manager

2. Deploy the offline parcel repository on hadoop001

2.1 Deploy the offline parcel repository

mkdir -p /opt/cloudera/parcel-repo

[root@hadoop001 opt]# cd ~/cdh5.16.1/

[root@hadoop001 cdh5.16.1]# ls -lh

total 3.5G

-rw-r--r-- 1 root root 2.0G May 15 10:01 CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel

-rw-r--r-- 1 root root 41 May 14 20:17 CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha1

-rw-r--r-- 1 root root 803M May 15 09:38 cloudera-manager-centos7-cm5.16.1_x86_64.tar.gz

-rw-r--r-- 1 root root 166M May 14 20:21 jdk-8u45-linux-x64.gz

-rw-r--r-- 1 root root 65K May 14 20:17 manifest.json

-rw-r--r-- 1 root root 523M May 15 09:28 mysql-5.7.11-linux-glibc2.5-x86_64.tar.gz

-rw-r--r-- 1 root root 984K May 15 09:10 mysql-connector-java-5.1.47.jar

[root@hadoop001 cdh5.16.1]# mv CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha1 /opt/cloudera/parcel-repo/CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha

# Remember to drop the trailing 1 when moving the file (.sha1 becomes .sha); otherwise CM treats the parcel as incompletely downloaded during deployment and keeps trying to download it

[root@hadoop001 cdh5.16.1]# mv CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel /opt/cloudera/parcel-repo/

[root@hadoop001 cdh5.16.1]# mv manifest.json /opt/cloudera/parcel-repo/

If the parcel was downloaded over the network, verify CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel against its checksum to make sure the file is not corrupted.

[root@hadoop001 parcel-repo]# cat CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha

703728dfa7690861ecd3a9bcd412b04ac8de7148

# Compute the checksum of the downloaded file and compare

[root@hadoop001 parcel-repo]# sha1sum CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel

703728dfa7690861ecd3a9bcd412b04ac8de7148 CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel

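# The values match, so the file can be used

2.2 Change the owner and group of the directory

chown -R cloudera-scm:cloudera-scm /opt/cloudera/parcel-repo/

3. On all nodes, create the big data software installation directory and set its owner and group

mkdir -p /opt/cloudera/parcels

chown -R cloudera-scm:cloudera-scm /opt/cloudera/

4. Start the Server on hadoop001

4.1 Start the server

/opt/cloudera-manager/cm-5.16.1/etc/init.d/cloudera-scm-server start

4.2 In the Alibaba Cloud web console, open port 7180 in the firewall rules for hadoop001

4.3 Wait about 1 minute, then open http://hadoop001:7180 (username/password: admin/admin)

4.4 If the page does not open, read the server log and troubleshoot carefully based on the errors

The log directory is /opt/cloudera-manager/cm-5.16.1/log/cloudera-scm-server

5. Start the Agent on all nodes

/opt/cloudera-manager/cm-5.16.1/etc/init.d/cloudera-scm-agent start

6. From here on, everything is done in the web UI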

http://hadoop001:7180/

账号密码:admin/admin

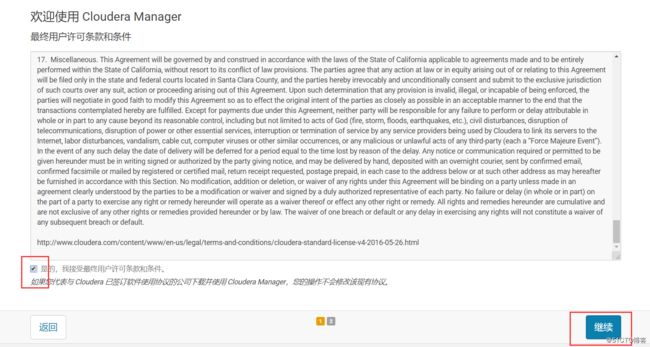

7.欢迎使⽤Cloudera Manager--最终⽤户许可条款与条件。勾选

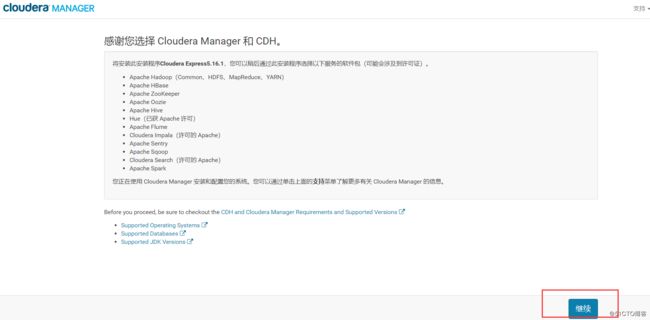

8.欢迎使⽤Cloudera Manager--您想要部署哪个版本?选择Cloudera Express免费版本

9.感谢您选择Cloudera Manager和CDH

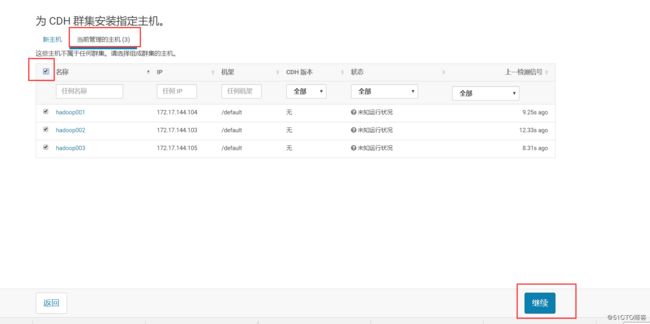

10.为CDH集群安装指导主机。选择[当前管理的主机],全部勾选

11.选择存储库

12.集群安装--正在安装选定Parcel

假如本地parcel离线源配置正确,则"下载"阶段瞬间完成,其余阶段视节点数与内部⽹络情况决定。

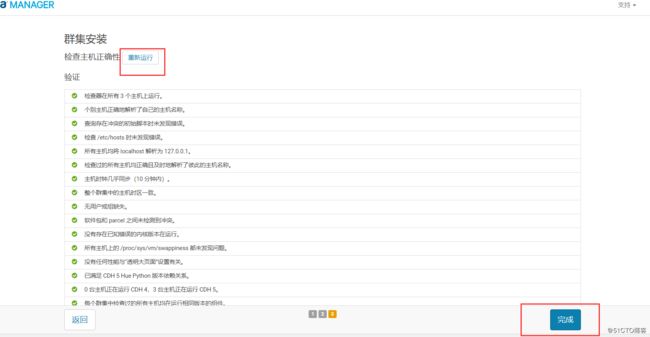

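13. Inspect hosts for correctness

13.1 It is recommended to set /proc/sys/vm/swappiness to at most 10.

The swappiness value controls how aggressively the operating system swaps memory;

swappiness=0: use physical memory as much as possible and only then fall back to swap;

swappiness=100: use the swap partition aggressively and move data from memory to swap early;

On a mixed-use server, fully disabling swap is not recommended; try lowering swappiness instead.

Temporary adjustment:

sysctl vm.swappiness=10

Permanent adjustment: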

cat << EOF >> /etc/sysctl.conf

# Adjust swappiness value

vm.swappiness=10

EOF
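
The heredoc above appends a new line every time it is run, so repeated runs leave duplicate entries in /etc/sysctl.conf. A sketch of an idempotent alternative follows; set_sysctl_line is a hypothetical helper name, and the demo uses a scratch file rather than the real config.

```shell
# Set key=value in a sysctl-style file, replacing an existing line instead of
# appending a duplicate. set_sysctl_line is a hypothetical helper (an assumption).
set_sysctl_line() {
  local key="$1" value="$2" file="${3:-/etc/sysctl.conf}"
  local key_re
  # Escape dots so "vm.swappiness" is matched literally in the regex.
  key_re="$(printf '%s' "$key" | sed 's/\./\\./g')"
  if grep -Eq "^[[:space:]]*${key_re}[[:space:]]*=" "$file" 2>/dev/null; then
    sed -i -E "s|^[[:space:]]*${key_re}[[:space:]]*=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# Demo on a scratch file; the second call rewrites the line in place.
sysctl_demo="$(mktemp)"
set_sysctl_line vm.swappiness 10 "$sysctl_demo"
set_sysctl_line vm.swappiness 10 "$sysctl_demo"
cat "$sysctl_demo"
```

After editing the real file, sysctl -p reloads it without a reboot.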

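13.2 Transparent huge page compaction is enabled, which can cause significant performance problems; it is recommended to disable this setting.

Temporary adjustment: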

echo never > /sys/kernel/mm/transparent_hugepage/defrag

echo never > /sys/kernel/mm/transparent_hugepage/enabled
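
To confirm the change, the kernel marks the active mode with square brackets inside each of these files (for example "always madvise [never]"). A small sketch for reading it back; thp_mode is a hypothetical helper name.

```shell
# Print the active transparent hugepage mode, i.e. the bracketed word in the file.
# thp_mode is a hypothetical helper (an assumption for illustration).
thp_mode() {
  local file="${1:-/sys/kernel/mm/transparent_hugepage/enabled}"
  sed -n 's/.*\[\([a-z]*\)].*/\1/p' "$file"
}

# On a node, for example:
#   thp_mode /sys/kernel/mm/transparent_hugepage/enabled
#   thp_mode /sys/kernel/mm/transparent_hugepage/defrag
```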

Permanent adjustment:

cat << EOF >> /etc/rc.d/rc.local

# Disable transparent_hugepage

echo never > /sys/kernel/mm/transparent_hugepage/defrag

echo never > /sys/kernel/mm/transparent_hugepage/enabled

EOF

# centos7.x系统,需要为"/etc/rc.d/rc.local"⽂件赋予执⾏权限

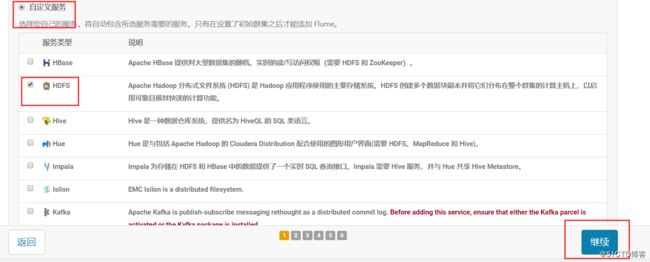

chmod +x /etc/rc.d/rc.local14.自定义服务,选择部署Zookeeper、 HDFS、 Yarn服务

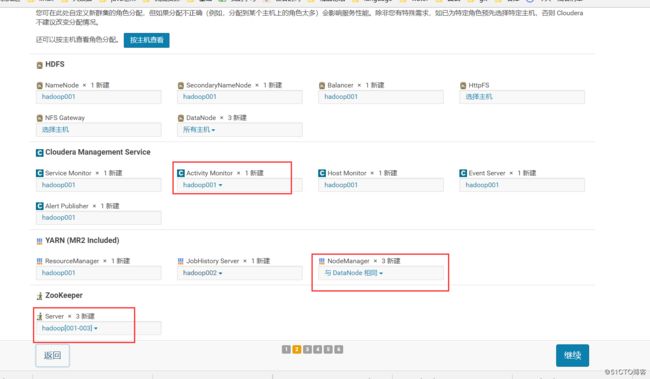

15.自定义角色分配

16.数据库设置

连接测试失败的可能原因:

(1)mysql JDBC jar包没有放到/usr/share/java或jar包没有去掉版本号

(2)建数据库cmf 和amon的时候,没有将权限设置成%

(3)设置完权限之后,没有flush privileges;
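
Cause (1) is easy to check from the shell before retrying the test. A minimal sketch, with check_jdbc_jar as a hypothetical helper name:

```shell
# Check that the connector jar exists under the unversioned name.
# check_jdbc_jar is a hypothetical helper (an assumption for illustration).
check_jdbc_jar() {
  local dir="${1:-/usr/share/java}"
  if [ -f "$dir/mysql-connector-java.jar" ]; then
    echo "ok: $dir/mysql-connector-java.jar"
  else
    echo "missing: $dir/mysql-connector-java.jar" >&2
    # List versioned copies that were never renamed, if any.
    ls "$dir"/mysql-connector-java-*.jar >&2 2>/dev/null || true
    return 1
  fi
}

# On hadoop001, for example:
#   check_jdbc_jar
```

For causes (2) and (3), the accounts can be inspected from the mysql client, for example with SELECT user, host FROM mysql.user WHERE user IN ('cmf', 'amon'); the host column should show %.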

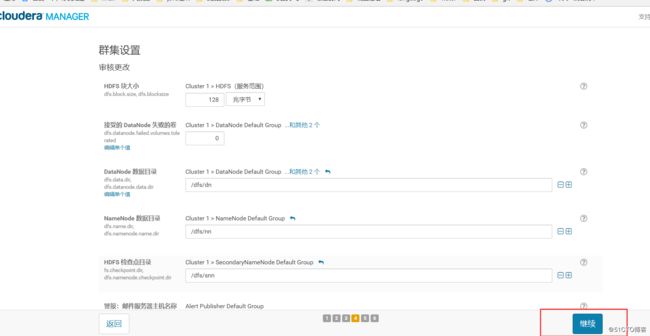

17.审改设置,默认即可

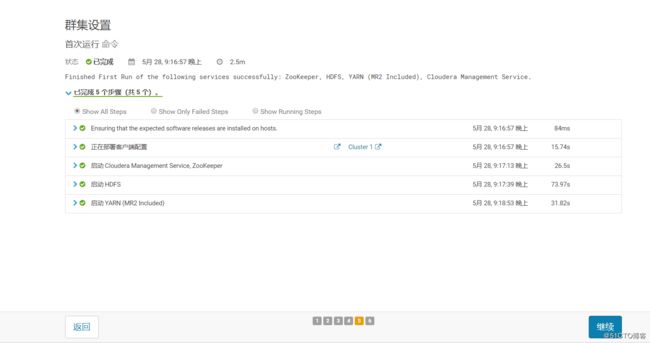

18.⾸次运⾏

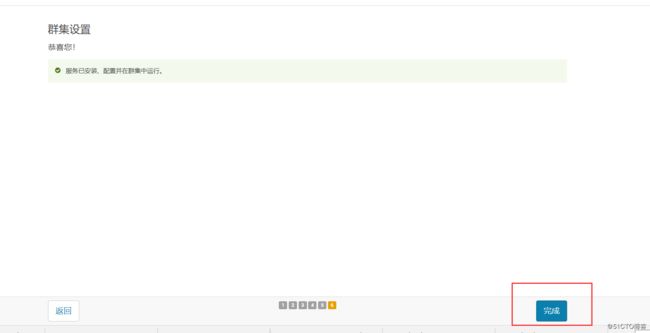

19.恭喜您!

20.主页

四.报错

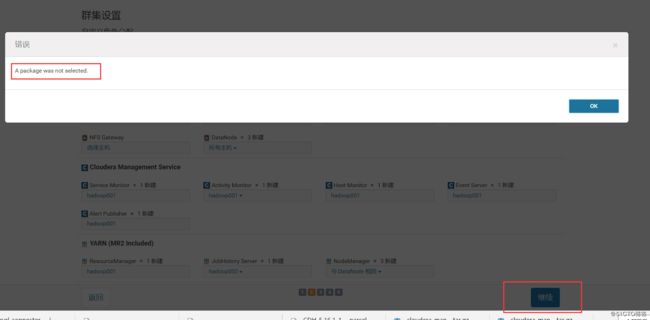

1. Error during the database setup test

Error message

ERROR 226616765@scm-web-17:com.cloudera.server.web.common.JsonResponse:

JsonResponse created with throwable: com.cloudera.server.web.cmf.MessageException:

A package was not selected.

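Cause:

The connection test was taking a long time, so I clicked the browser's back button and reloaded the page, after which the "A package was not selected" exception appeared.

Fix:

Go back to the page where the big data components are selected and redo the steps from there; the test then succeeds.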