Papers on Text Classification

I started following text classification papers in 2018. This post is a short summary to keep a record of them.

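1. Paper: Joint Embedding of Words and Labels for Text Classification

Institution: Duke University

Published: ACL 2018

GitHub: https://github.com/guoyinwang/LEAM

Summary: Labels and the words of the input text are embedded in the same space, and an attention framework is introduced in which the compatibility between the input text sequence and the labels serves as the attention signal. Experiments show the method does well on both accuracy and efficiency.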
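
To make the label-attention idea concrete, here is a minimal sketch in plain Python. It is a simplification under my own assumptions, not the authors' implementation: each word is scored by its best cosine match against any label embedding, the scores go through a softmax, and the document vector is the weighted average of the word vectors. The function name `label_attentive_encoding` and the toy 2-d embeddings are hypothetical, and the paper's extra step over a window of neighbouring words is left out.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def label_attentive_encoding(word_vecs, label_vecs):
    """Weight words by how well they match any label in the shared space."""
    # Compatibility of every word with every label.
    compat = [[cosine(w, c) for c in label_vecs] for w in word_vecs]
    # A word matters if it is close to at least one label.
    scores = [max(row) for row in compat]
    weights = softmax(scores)
    dim = len(word_vecs[0])
    doc_vec = [sum(wt * w[d] for wt, w in zip(weights, word_vecs))
               for d in range(dim)]
    return doc_vec, weights

# Hypothetical toy data: three words and two labels in a shared 2-d space.
words = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
labels = [[1.0, 0.0], [0.0, 1.0]]
doc_vec, attn = label_attentive_encoding(words, labels)
```

In this toy example the first two words each line up with one label and share most of the attention, while the third word points away from both labels and gets the smallest weight.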

2. Paper: Deep Pyramid Convolutional Neural Networks for Text Categorization

Institution: Tencent AI Lab (Tong Zhang)

Published: ACL 2017

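GitHub: authors' implementation (C++): https://github.com/riejohnson/ConText

Community PyTorch implementation: https://github.com/Cheneng/DPCNN

Summary: DPCNN is a low-complexity word-level CNN with a simple structure that represents long-range associations effectively. Layers can be added without a matching increase in computational cost.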
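
A quick back-of-the-envelope sketch of why stacking more blocks stays cheap. It assumes each block halves the sequence length (floor division here; the exact length depends on padding) while the number of feature maps stays fixed, so the cost per block is proportional to its input length. The helper names are hypothetical.

```python
def pyramid_lengths(seq_len):
    """Sequence length seen by each block when every block halves it."""
    lengths = []
    while seq_len >= 1:
        lengths.append(seq_len)
        seq_len //= 2  # assumed stride-2 downsampling between blocks
    return lengths

def relative_cost(seq_len):
    """Total cost of all blocks, measured in units of the first block's cost."""
    lengths = pyramid_lengths(seq_len)
    return sum(lengths) / lengths[0]

print(pyramid_lengths(256))  # [256, 128, 64, 32, 16, 8, 4, 2, 1]
print(relative_cost(256))    # 1.99609375
```

The lengths form a geometric series, so under these assumptions the whole pyramid costs less than twice the first block, no matter how many blocks are stacked.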

3. Paper: Disconnected Recurrent Neural Networks for Text Categorization

Institution: iFLYTEK

Published: ACL 2018

GitHub:

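Summary: DRNN combines CNN and RNN by bringing the position invariance of CNNs into RNNs: recurrent units take the place of convolution filters and run over a fixed-size window at each position. The larger the window, the more the model behaves like an RNN; the smaller the window, the more it behaves like a CNN.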

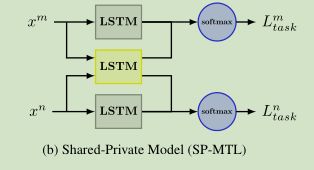
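
The windowed recurrence can be sketched in a few lines. This is a toy version under my own assumptions: a scalar tanh cell with hand-picked weights (`w_h`, `w_x`) stands in for a real recurrent unit such as a GRU, and the function names are hypothetical. At each position the recurrence restarts from a zero state and only reads the last `k` inputs.

```python
import math

def plain_rnn(xs, w_h=0.5, w_x=1.0):
    """Ordinary RNN: the state at step t depends on the whole prefix."""
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w_h * h + w_x * x)
        states.append(h)
    return states

def disconnected_rnn(xs, k, w_h=0.5, w_x=1.0):
    """Windowed RNN: the state at step t depends only on the last k inputs."""
    states = []
    for t in range(len(xs)):
        h = 0.0  # restart from a zero state for every window
        for x in xs[max(0, t - k + 1): t + 1]:
            h = math.tanh(w_h * h + w_x * x)
        states.append(h)
    return states

xs = [0.1, 0.2, 0.3, 2.0, 0.1, 0.2, 0.3]
full = plain_rnn(xs)
windowed = disconnected_rnn(xs, k=3)
```

With `k=3` the same three-token pattern yields the same state wherever it appears (positions 2 and 6 here), which is the position invariance borrowed from CNNs. With `k=len(xs)` the output matches the plain RNN, and with `k=1` each state depends on the current token only.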

4. Paper: Adversarial Multi-task Learning for Text Classification

Institution: Fudan University

Published: ACL 2017

GitHub: https://github.com/FrankWork/fudan_mtl_reviews

Summary: Multi-task learning combined with adversarial training, used to separate shared features from task-specific features.

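5. Paper: A Multi-sentiment-resource Enhanced Attention Network for Sentiment Classification

Institution: Tsinghua University, Peking University, and others

Published: 2018

GitHub:

Summary: The Multi-sentiment-resource Enhanced Attention Network (MEAN) builds three lexicons (sentiment words, negation words, and intensity words) and brings them into the neural network through an attention mechanism. It can be used for sentiment classification.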

A few text classification classics: TextCNN, VDCNN, HAN

SWEM: Baseline Needs More Love: On Simple Word-Embedding-Based Models and Associated Pooling Mechanisms

(Fast and accurate, and both the idea and the implementation are very simple. Recommended.)
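
To show how little machinery is involved, here is a minimal sketch of the pooling variants in plain Python. It assumes a document is already a list of word-embedding vectors; the toy values are made up, and classifier layers on top are left out.

```python
def swem_aver(word_vecs):
    """Average pooling: mean of each embedding dimension over all words."""
    n = len(word_vecs)
    return [sum(col) / n for col in zip(*word_vecs)]

def swem_max(word_vecs):
    """Max pooling: largest value of each embedding dimension over all words."""
    return [max(col) for col in zip(*word_vecs)]

def swem_concat(word_vecs):
    """Concatenation of the average-pooled and max-pooled vectors."""
    return swem_aver(word_vecs) + swem_max(word_vecs)

# Hypothetical two-word document with 2-d embeddings.
doc = [[1.0, -2.0], [3.0, 0.0]]
print(swem_aver(doc))    # [2.0, -1.0]
print(swem_max(doc))     # [3.0, 0.0]
print(swem_concat(doc))  # [2.0, -1.0, 3.0, 0.0]
```

The pooled vector has a fixed size regardless of document length, so it can go straight into a small feed-forward classifier.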

fastText: usable as a baseline.

Transformer: Attention Is All You Need

Neural network + naive Bayes: Weighted Neural Bag-of-n-grams Model: New Baselines for Text Classification

GitHub: https://github.com/zhezhaoa/neural_BOW_toolkit
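
For a feel of what naive Bayes weighting can look like, here is a small sketch of the smoothed log-count ratio, one common way to score how strongly a token leans towards one class. Whether the toolkit above uses exactly this formula is my assumption; the function name `nb_log_count_ratio`, the smoothing value `alpha`, and the toy documents are all hypothetical.

```python
import math
from collections import Counter

def nb_log_count_ratio(pos_docs, neg_docs, alpha=1.0):
    """Log ratio of smoothed, normalized token counts in the two classes."""
    vocab = {tok for doc in pos_docs + neg_docs for tok in doc}
    pos = Counter(tok for doc in pos_docs for tok in doc)
    neg = Counter(tok for doc in neg_docs for tok in doc)
    pos_total = sum(pos[t] + alpha for t in vocab)
    neg_total = sum(neg[t] + alpha for t in vocab)
    return {
        t: math.log(((pos[t] + alpha) / pos_total)
                    / ((neg[t] + alpha) / neg_total))
        for t in vocab
    }

# Hypothetical tokenized documents for a two-class sentiment task.
pos_docs = [["good", "movie"], ["good", "plot"]]
neg_docs = [["bad", "movie"], ["bad", "plot"]]
ratios = nb_log_count_ratio(pos_docs, neg_docs)
```

Tokens that appear mostly in one class get a large positive or negative ratio, while tokens spread evenly across both classes get a ratio near zero, so the ratio can serve as a per-token importance weight.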

Adaptive + attention: Adaptive Learning of Local Semantic and Global Structure Representations for Text Classification

Sandwich network: LSTM + CNN + LSTM captures structural and semantic information, with adaptive learning and an attention mechanism added.

Target-oriented: Transformation Networks for Target-Oriented Sentiment Classification

LSTM + pooling: Text Classification Improved by Integrating Bidirectional LSTM with Two-dimensional Max Pooling

Multi-scale feature attention: Densely Connected CNN with Multi-scale Feature Attention for Text Classification

Stacking CNN blocks enlarges the scale at which features are extracted, and an attention mechanism is added as well.

https://github.com/wangshy31/Densely-Connected-CNN-with-Multiscale-Feature-Attention.git

Task-specific word embedding: Task-oriented Word Embedding for Text Classification

Reinforcement learning (text classification): Learning Structured Representation for Text Classification via Reinforcement Learning

Region embedding: A New Method of Region Embedding for Text Classification

Sentence embedding: A Structured Self-attentive Sentence Embedding

Semantic similarity (not text classification): ABCNN: Attention-Based Convolutional Neural Network for Modeling Sentence Pairs

Pairwise Word Interaction Modeling with Deep Neural Networks for Semantic Similarity Measurement

What the whole NLP community was watching in 2018: ELMo, GPT, ULMFiT, BERT.

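I never used to take notes. I read whatever I came across and forgot it after a while. What's past is prologue. From now on I will write down what I read, in the hope of moving from simply reading papers to forming my own interpretations and ideas, drawing inspiration from them, and putting them to use.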