Kubernetes Series, Part 19: Configuring Kubernetes to Manage Virtual Machines with KubeVirt

1. Introduction

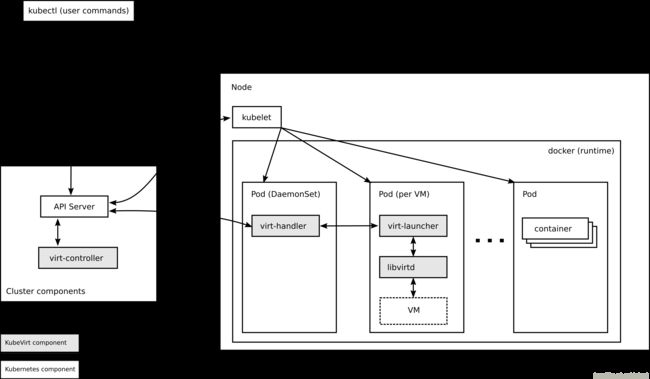

KubeVirt extends Kubernetes so that it can manage virtual machines. Its architecture is described in the following document:

https://github.com/kubevirt/kubevirt/blob/master/docs/architecture.md

Before installing, complete the following preparation steps.

Install the libvirt and QEMU packages:

yum -y install qemu-kvm libvirt virt-install bridge-utils

Label the Kubernetes nodes:

kubectl label node k8s-01 kubevirt.io=virt-controller

kubectl label node k8s-02 kubevirt.io=virt-controller

kubectl label node k8s-03 kubevirt.io=virt-api

kubectl label node k8s-04 kubevirt.io=virt-api

Check the node capacity:

kubectl get nodes -o yaml

allocatable:

cpu: "2"

devices.kubevirt.io/tun: "110"

ephemeral-storage: "16415037823"

hugepages-2Mi: "0"

memory: 1780264Ki

pods: "110"

capacity:

cpu: "2"

devices.kubevirt.io/tun: "110"

ephemeral-storage: 17394Mi

hugepages-2Mi: "0"

memory: 1882664Ki

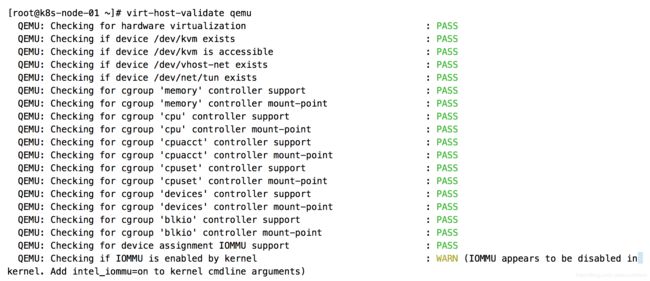

pods: "110"查看节点是否支持kvm硬件辅助虚拟化

ls /dev/kvm

ls: cannot access /dev/kvm: No such file or directory

virt-host-validate qemu

QEMU: Checking for hardware virtualization : FAIL (Only emulated CPUs are available, performance will be significantly limited)

QEMU: Checking if device /dev/vhost-net exists : PASS

QEMU: Checking if device /dev/net/tun exists : PASS

QEMU: Checking for cgroup 'memory' controller support : PASS

QEMU: Checking for cgroup 'memory' controller mount-point : PASS

QEMU: Checking for cgroup 'cpu' controller support : PASS

QEMU: Checking for cgroup 'cpu' controller mount-point : PASS

QEMU: Checking for cgroup 'cpuacct' controller support : PASS

QEMU: Checking for cgroup 'cpuacct' controller mount-point : PASS

QEMU: Checking for cgroup 'cpuset' controller support : PASS

QEMU: Checking for cgroup 'cpuset' controller mount-point : PASS

QEMU: Checking for cgroup 'devices' controller support : PASS

QEMU: Checking for cgroup 'devices' controller mount-point : PASS

QEMU: Checking for cgroup 'blkio' controller support : PASS

QEMU: Checking for cgroup 'blkio' controller mount-point : PASS

WARN (Unknown if this platform has IOMMU support)

If hardware virtualization is not supported, first create a configuration that tells KubeVirt to use software emulation:

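kubectl create configmap -n kube-system kubevirt-config --from-literal debug.useEmulation=true

On a node that does support hardware virtualization, the result looks like this (note the additional devices.kubevirt.io/kvm entry):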

kubectl get nodes -o yaml

allocatable:
  cpu: "48"
  devices.kubevirt.io/kvm: "110"
  devices.kubevirt.io/tun: "110"
  ephemeral-storage: "48294789041"
  hugepages-1Gi: "0"
  hugepages-2Mi: "0"
  memory: 263753532Ki
  pods: "110"
capacity:
  cpu: "48"
  devices.kubevirt.io/kvm: "110"
  devices.kubevirt.io/tun: "110"
  ephemeral-storage: 51175Mi
  hugepages-1Gi: "0"
  hugepages-2Mi: "0"
  memory: 263855932Ki
  pods: "110"
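The decision above (enable software emulation only when /dev/kvm is missing) can be scripted. The following is a minimal sketch, assuming bash and the v0.8.0-era kube-system/kubevirt-config ConfigMap used in this article; needs_emulation and configure_emulation are hypothetical helper names, not part of KubeVirt. needs_emulation only inspects the machine it runs on, so it should be run on a worker node.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of KubeVirt): decide whether a node needs
# KubeVirt's software-emulation fallback and create the ConfigMap if so.

# Prints "true" when the given KVM device (default /dev/kvm) is missing or is
# not a character device, i.e. when software emulation is required.
needs_emulation() {
  local kvm_dev="${1:-/dev/kvm}"
  if [ -c "$kvm_dev" ]; then
    echo false
  else
    echo true
  fi
}

# Creates kube-system/kubevirt-config with debug.useEmulation=true only when
# needs_emulation reports that KVM is unavailable on this machine.
configure_emulation() {
  if [ "$(needs_emulation "${1:-}")" = "true" ]; then
    kubectl create configmap -n kube-system kubevirt-config \
      --from-literal debug.useEmulation=true
  else
    echo "KVM device present; no emulation ConfigMap created"
  fi
}
```

On the first node above (no /dev/kvm), configure_emulation would issue the same kubectl create configmap command shown earlier; on the 48-CPU node it would do nothing. Since the ConfigMap is a single cluster-level setting, a cluster with mixed nodes still has to pick one value.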

Reposted from https://blog.csdn.net/cloudvtech

2. Installing KubeVirt

2.1 Deploy KubeVirt

export VERSION=v0.8.0

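kubectl create -f https://github.com/kubevirt/kubevirt/releases/download/$VERSION/kubevirt.yaml

2.2 Check the deployment

Nine seconds after creation, virt-api and virt-controller are running but not yet ready (0/1), and virt-handler runs one pod on each of the four nodes: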

virt-api-79c6f4d756-2vwqh 0/1 Running 0 9s

virt-controller-559c749968-7lwrl 0/1 Running 0 9s

virt-handler-2grjk 1/1 Running 0 9s

virt-handler-djfbr 1/1 Running 0 9s

virt-handler-r2pls 1/1 Running 0 9s

virt-handler-rb948 1/1 Running 0 9s

2.3 virt-controller logs

level=info timestamp=2018-10-06T14:46:37.788008Z pos=application.go:179 component=virt-controller msg="DataVolume integration disabled"

level=info timestamp=2018-10-06T14:46:37.790568Z pos=application.go:194 component=virt-controller service=http action=listening interface=0.0.0.0 port=8182

level=info timestamp=2018-10-06T14:46:55.398082Z pos=virtinformers.go:117 component=virt-controller service=http msg="STARTING informer vmiInformer"

level=info timestamp=2018-10-06T14:46:55.398243Z pos=virtinformers.go:117 component=virt-controller service=http msg="STARTING informer kubeVirtPodInformer"

level=info timestamp=2018-10-06T14:46:55.398271Z pos=virtinformers.go:117 component=virt-controller service=http msg="STARTING informer vmiPresetInformer"

level=info timestamp=2018-10-06T14:46:55.398300Z pos=virtinformers.go:117 component=virt-controller service=http msg="STARTING informer vmInformer"

level=info timestamp=2018-10-06T14:46:55.398322Z pos=virtinformers.go:117 component=virt-controller service=http msg="STARTING informer limitrangeInformer"

level=info timestamp=2018-10-06T14:46:55.398339Z pos=virtinformers.go:117 component=virt-controller service=http msg="STARTING informer kubeVirtNodeInformer"

level=info timestamp=2018-10-06T14:46:55.398359Z pos=virtinformers.go:117 component=virt-controller service=http msg="STARTING informer vmirsInformer"

level=info timestamp=2018-10-06T14:46:55.398372Z pos=virtinformers.go:117 component=virt-controller service=http msg="STARTING informer configMapInformer"

level=info timestamp=2018-10-06T14:46:55.398385Z pos=virtinformers.go:117 component=virt-controller service=http msg="STARTING informer fakeDataVolumeInformer"

level=info timestamp=2018-10-06T14:46:55.398430Z pos=vm.go:113 component=virt-controller service=http msg="Starting VirtualMachine controller."

level=info timestamp=2018-10-06T14:46:55.399309Z pos=node.go:104 component=virt-controller service=http msg="Starting node controller."

level=info timestamp=2018-10-06T14:46:55.399360Z pos=vmi.go:165 component=virt-controller service=http msg="Starting vmi controller."

level=info timestamp=2018-10-06T14:46:55.399393Z pos=replicaset.go:111 component=virt-controller service=http msg="Starting VirtualMachineInstanceReplicaSet controller."

level=info timestamp=2018-10-06T14:46:55.399416Z pos=preset.go:74 component=virt-controller service=http msg="Starting Virtual Machine Initializer."

2.4 virt-api logs

level=info timestamp=2018-10-06T14:46:49.538030Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url=/ proto=HTTP/2.0 statusCode=404 contentLength=19

level=info timestamp=2018-10-06T14:46:49.550993Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url=/ proto=HTTP/2.0 statusCode=404 contentLength=19

level=info timestamp=2018-10-06T14:46:51.623461Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url="/apis/subresources.kubevirt.io/v1alpha2?timeout=32s" proto=HTTP/2.0 statusCode=200 contentLength=136

level=info timestamp=2018-10-06T14:46:52.758037Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url=/ proto=HTTP/2.0 statusCode=404 contentLength=19

level=info timestamp=2018-10-06T14:46:52.975272Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url=/openapi/v2 proto=HTTP/2.0 statusCode=404 contentLength=19

level=info timestamp=2018-10-06T14:46:52.976837Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url=/swagger.json proto=HTTP/2.0 statusCode=404 contentLength=19

level=info timestamp=2018-10-06T14:46:55.124209Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url="/apis/subresources.kubevirt.io/v1alpha2?timeout=32s" proto=HTTP/2.0 statusCode=200 contentLength=136

level=info timestamp=2018-10-06T14:46:55.278349Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url="/apis/subresources.kubevirt.io/v1alpha2?timeout=32s" proto=HTTP/2.0 statusCode=200 contentLength=136

level=info timestamp=2018-10-06T14:46:58.350208Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url="/apis/subresources.kubevirt.io/v1alpha2?timeout=32s" proto=HTTP/2.0 statusCode=200 contentLength=136

level=info timestamp=2018-10-06T14:47:08.771700Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url="/apis/subresources.kubevirt.io/v1alpha2?timeout=32s" proto=HTTP/2.0 statusCode=200 contentLength=136

level=info timestamp=2018-10-06T14:47:21.831239Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url="/apis/subresources.kubevirt.io/v1alpha2?timeout=32s" proto=HTTP/2.0 statusCode=200 contentLength=136

level=info timestamp=2018-10-06T14:47:22.746803Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url=/ proto=HTTP/2.0 statusCode=404 contentLength=19

level=info timestamp=2018-10-06T14:47:25.637788Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url="/apis/subresources.kubevirt.io/v1alpha2?timeout=32s" proto=HTTP/2.0 statusCode=200 contentLength=136

level=info timestamp=2018-10-06T14:47:28.376151Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url="/apis/subresources.kubevirt.io/v1alpha2?timeout=32s" proto=HTTP/2.0 statusCode=200 contentLength=136

2018/10/06 14:47:28 http: TLS handshake error from 10.0.2.15:45040: EOF

2018/10/06 14:47:38 http: TLS handshake error from 10.0.2.15:45046: EOF

level=info timestamp=2018-10-06T14:47:38.972610Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url="/apis/subresources.kubevirt.io/v1alpha2?timeout=32s" proto=HTTP/2.0 statusCode=200 contentLength=136

2018/10/06 14:47:48 http: TLS handshake error from 10.0.2.15:45052: EOF

level=info timestamp=2018-10-06T14:47:51.847764Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url="/apis/subresources.kubevirt.io/v1alpha2?timeout=32s" proto=HTTP/2.0 statusCode=200 contentLength=136

level=info timestamp=2018-10-06T14:47:52.756364Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url=/ proto=HTTP/2.0 statusCode=404 contentLength=19

level=info timestamp=2018-10-06T14:47:52.979039Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url=/openapi/v2 proto=HTTP/2.0 statusCode=404 contentLength=19

level=info timestamp=2018-10-06T14:47:52.981050Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url=/swagger.json proto=HTTP/2.0 statusCode=404 contentLength=19

level=info timestamp=2018-10-06T14:47:55.842662Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url="/apis/subresources.kubevirt.io/v1alpha2?timeout=32s" proto=HTTP/2.0 statusCode=200 contentLength=136

level=info timestamp=2018-10-06T14:47:58.396208Z pos=filter.go:46 component=virt-api remoteAddress=10.244.11.128 username=- method=GET url="/apis/subresources.kubevirt.io/v1alpha2?timeout=32s" proto=HTTP/2.0 statusCode=200 contentLength=136

2.5 virt-handler logs

level=info timestamp=2018-10-06T14:46:38.210175Z pos=virt-handler.go:87 component=virt-handler hostname=k8s-01

level=info timestamp=2018-10-06T14:46:38.213346Z pos=vm.go:210 component=virt-handler msg="Starting virt-handler controller."

level=info timestamp=2018-10-06T14:46:38.213831Z pos=cache.go:151 component=virt-handler msg="Synchronizing domains"

level=info timestamp=2018-10-06T14:46:38.213918Z pos=cache.go:121 component=virt-handler msg="List domains from sock /var/run/kubevirt/sockets/41ad405c-c96b-11e8-9d0e-08002763f94a_sock"

level=error timestamp=2018-10-06T14:46:38.213995Z pos=cache.go:124 component=virt-handler reason="dial unix /var/run/kubevirt/sockets/41ad405c-c96b-11e8-9d0e-08002763f94a_sock: connect: connection refused" msg="failed to connect to cmd client socket"

level=info timestamp=2018-10-06T14:46:38.314489Z pos=device_controller.go:133 component=virt-handler msg="Starting device plugin controller"

level=info timestamp=2018-10-06T14:46:38.315207Z pos=device_controller.go:113 component=virt-handler msg="kvm device not found. Waiting."

level=info timestamp=2018-10-06T14:46:38.317441Z pos=device_controller.go:127 component=virt-handler msg="tun device plugin started"
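The virt-handler log shows the device plugins at work: the tun plugin starts, while the kvm plugin keeps waiting because this node has no /dev/kvm. To see which nodes end up advertising devices.kubevirt.io/kvm, the node status can be queried with kubectl's JSONPath output. A minimal bash sketch; kubevirt_devices and nodes_without_kvm are hypothetical helper names:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: list the KubeVirt device-plugin resources per node and
# report nodes that cannot run hardware-accelerated (KVM) guests.

# Prints "<node>\t<kvm>\t<tun>" for every node; a missing resource prints as "".
# Dots inside the resource names are escaped for kubectl's JSONPath syntax.
kubevirt_devices() {
  kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.allocatable.devices\.kubevirt\.io/kvm}{"\t"}{.status.allocatable.devices\.kubevirt\.io/tun}{"\n"}{end}'
}

# Reads the tab-separated lines above on stdin and prints the names of nodes
# whose devices.kubevirt.io/kvm allocatable count is empty or zero.
nodes_without_kvm() {
  awk -F '\t' '$2 == "" || $2 == "0" { print $1 }'
}
```

Usage: kubevirt_devices | nodes_without_kvm. For the 2-CPU node shown earlier the kvm column would be empty, so that node would be listed.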

3. Creating a VM

3.1 vm.yaml

apiVersion: kubevirt.io/v1alpha2
kind: VirtualMachine
metadata:
  name: testvm
spec:
  running: false
  selector:
    matchLabels:
      guest: testvm
  template:
    metadata:
      labels:
        guest: testvm
        kubevirt.io/size: small
    spec:
      domain:
        devices:
          disks:
          - name: registrydisk
            volumeName: registryvolume
            disk:
              bus: virtio
          - name: cloudinitdisk
            volumeName: cloudinitvolume
            disk:
              bus: virtio
      volumes:
      - name: registryvolume
        registryDisk:
          image: kubevirt/cirros-registry-disk-demo
      - name: cloudinitvolume
        cloudInitNoCloud:
          userDataBase64: SGkuXG4=

---

apiVersion: kubevirt.io/v1alpha2
kind: VirtualMachineInstancePreset
metadata:
  name: small
spec:
  selector:
    matchLabels:
      kubevirt.io/size: small
  domain:
    resources:
      requests:
        memory: 64M
    devices: {}

3.2 Deploy

kubectl apply -f vm.yaml

virtualmachine.kubevirt.io/testvm created

virtualmachineinstancepreset.kubevirt.io/small created

3.3 Check the result

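Because the VM has not been started yet (running: false), there is no pod and no VMI (VirtualMachineInstance); only the VM resource exists: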

[root@k8s-install-node kubevirt]# kubectl get pods

No resources found.

[root@k8s-install-node kubevirt]# kubectl get vms

NAME AGE

testvm 43s

[root@k8s-install-node kubevirt]# kubectl get vmis

No resources found.
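The two resources can be compared directly: the VirtualMachine object carries the desired state in spec.running, and, as the outputs in this article show, a VirtualMachineInstance (with its virt-launcher pod) appears only after the VM is started. A small bash sketch; vm_state is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: summarise the desired state (spec.running) of a
# VirtualMachine and whether a matching VirtualMachineInstance exists.
vm_state() {
  local vm="$1" running vmi
  running="$(kubectl get vm "$vm" -o jsonpath='{.spec.running}')" || return 1
  if kubectl get vmi "$vm" >/dev/null 2>&1; then
    vmi=present
  else
    vmi=absent
  fi
  printf 'running=%s vmi=%s\n' "$running" "$vmi"
}
```

At this stage vm_state testvm would be expected to print running=false vmi=absent, and running=true vmi=present once the VM has been started.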

kubectl describe vms

Name: testvm

Namespace: default

Labels:

Annotations: kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"kubevirt.io/v1alpha2","kind":"VirtualMachine","metadata":{"annotations":{},"name":"testvm","namespace":"default"},"spec":{"running":fals...

API Version: kubevirt.io/v1alpha2

Kind: VirtualMachine

Metadata:

Creation Timestamp: 2018-10-06T14:54:30Z

Generation: 1

Resource Version: 630320

Self Link: /apis/kubevirt.io/v1alpha2/namespaces/default/virtualmachines/testvm

UID: baf248ad-c977-11e8-9d0e-08002763f94a

Spec:

Running: false

Selector:

Match Labels:

Guest: testvm

Template:

Metadata:

Labels:

Guest: testvm

Kubevirt . Io / Size: small

Spec:

Domain:

Devices:

Disks:

Disk:

Bus: virtio

Name: registrydisk

Volume Name: registryvolume

Disk:

Bus: virtio

Name: cloudinitdisk

Volume Name: cloudinitvolume

Volumes:

Name: registryvolume

Registry Disk:

Image: kubevirt/cirros-registry-disk-demo

Cloud Init No Cloud:

User Data Base 64: SGkuXG4=

Name: cloudinitvolume

Events:
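The cloudinitvolume in vm.yaml passes its user data base64-encoded. SGkuXG4= decodes to the five characters Hi.\n (a literal backslash followed by n), so it is placeholder data rather than a #cloud-config document. A minimal sketch for producing and checking such values, assuming GNU coreutils base64 (where -w0 disables line wrapping; BSD/macOS base64 uses different flags); encode_userdata and decode_userdata are hypothetical names:

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the cloudInitNoCloud.userDataBase64 field.
# Assumes GNU coreutils base64; -w0 keeps the output on a single line so it
# can be pasted into YAML unchanged.

# Reads raw user data on stdin and prints it base64-encoded.
encode_userdata() {
  base64 -w0
}

# Reads a base64 string on stdin and prints the decoded user data.
decode_userdata() {
  base64 -d
}

# Example: reproduce the value used in vm.yaml.
#   printf 'Hi.\\n' | encode_userdata    # prints SGkuXG4=
```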

4. Starting the Virtual Machine

4.1 Start the VM

Download virtctl:

curl -L -o virtctl https://github.com/kubevirt/kubevirt/releases/download/$VERSION/virtctl-$VERSION-linux-amd64

chmod +x virtctl

Start the VM:

./virtctl start testvm

VM testvm was scheduled to start

4.2 Check the status

kubectl get pods

NAME READY STATUS RESTARTS AGE

virt-launcher-testvm-jv6tk 2/2 Running 0 1m

kubectl get vmis

NAME AGE

testvm 1m

kubectl get vms -o yaml testvm

apiVersion: kubevirt.io/v1alpha2
kind: VirtualMachine
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"kubevirt.io/v1alpha2","kind":"VirtualMachine","metadata":{"annotations":{},"name":"testvm","namespace":"default"},"spec":{"running":false,"selector":{"matchLabels":{"guest":"testvm"}},"template":{"metadata":{"labels":{"guest":"testvm","kubevirt.io/size":"small"}},"spec":{"domain":{"devices":{"disks":[{"disk":{"bus":"virtio"},"name":"registrydisk","volumeName":"registryvolume"},{"disk":{"bus":"virtio"},"name":"cloudinitdisk","volumeName":"cloudinitvolume"}]}},"volumes":[{"name":"registryvolume","registryDisk":{"image":"kubevirt/cirros-registry-disk-demo"}},{"cloudInitNoCloud":{"userDataBase64":"SGkuXG4="},"name":"cloudinitvolume"}]}}}}
  creationTimestamp: 2018-10-06T14:54:30Z
  generation: 1
  name: testvm
  namespace: default
  resourceVersion: "630920"
  selfLink: /apis/kubevirt.io/v1alpha2/namespaces/default/virtualmachines/testvm
  uid: baf248ad-c977-11e8-9d0e-08002763f94a
spec:
  running: true
  template:
    metadata:
      creationTimestamp: null
      labels:
        guest: testvm
        kubevirt.io/size: small
    spec:
      domain:
        devices:
          disks:
          - disk:
              bus: virtio
            name: registrydisk
            volumeName: registryvolume
          - disk:
              bus: virtio
            name: cloudinitdisk
            volumeName: cloudinitvolume
        machine:
          type: ""
        resources: {}
      volumes:
      - name: registryvolume
        registryDisk:
          image: kubevirt/cirros-registry-disk-demo
      - cloudInitNoCloud:
          userDataBase64: SGkuXG4=
        name: cloudinitvolume
status:
  created: true
  ready: true
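Compared with the describe output in section 3.3, spec.running has changed from false to true, which suggests that virtctl start works by setting this field. If so, the same toggle can be made with plain kubectl by patching the VirtualMachine. A minimal sketch under that assumption; set_vm_running is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: start or stop a KubeVirt VirtualMachine by patching
# spec.running with a JSON merge patch, instead of calling virtctl.
set_vm_running() {
  local vm="$1" running="$2"
  case "$running" in
    true|false) ;;
    *) echo "usage: set_vm_running <vm> true|false" >&2; return 2 ;;
  esac
  kubectl patch virtualmachine "$vm" --type merge \
    -p "{\"spec\":{\"running\":${running}}}"
}
```

Usage: set_vm_running testvm true (intended as a rough equivalent of virtctl start testvm) and set_vm_running testvm false to stop it again.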

kubectl get vmis -o yaml

apiVersion: v1
items:
- apiVersion: kubevirt.io/v1alpha2
  kind: VirtualMachineInstance
  metadata:
    annotations:
      presets.virtualmachines.kubevirt.io/presets-applied: kubevirt.io/v1alpha2
      virtualmachinepreset.kubevirt.io/small: kubevirt.io/v1alpha2
    creationTimestamp: 2018-10-06T14:58:39Z
    finalizers:
    - foregroundDeleteVirtualMachine
    generateName: testvm
    generation: 1
    labels:
      guest: testvm
      kubevirt.io/nodeName: k8s-01
      kubevirt.io/size: small
    name: testvm
    namespace: default
    ownerReferences:
    - apiVersion: kubevirt.io/v1alpha2
      blockOwnerDeletion: true
      controller: true
      kind: VirtualMachine
      name: testvm
      uid: baf248ad-c977-11e8-9d0e-08002763f94a
    resourceVersion: "630919"
    selfLink: /apis/kubevirt.io/v1alpha2/namespaces/default/virtualmachineinstances/testvm
    uid: 4f2f4897-c978-11e8-9d0e-08002763f94a
  spec:
    domain:
      devices:
        disks:
        - disk:
            bus: virtio
          name: registrydisk
          volumeName: registryvolume
        - disk:
            bus: virtio
          name: cloudinitdisk
          volumeName: cloudinitvolume
        interfaces:
        - bridge: {}
          name: default
      features:
        acpi:
          enabled: true
      firmware:
        uuid: 5a9fc181-957e-5c32-9e5a-2de5e9673531
      machine:
        type: q35
      resources:
        requests:
          memory: 64M
    networks:
    - name: default
      pod: {}
    volumes:
    - name: registryvolume
      registryDisk:
        image: kubevirt/cirros-registry-disk-demo
    - cloudInitNoCloud:
        userDataBase64: SGkuXG4=
      name: cloudinitvolume
  status:
    interfaces:
    - ipAddress: 10.244.61.218
    nodeName: k8s-01
    phase: Running
kind: List
metadata:
  resourceVersion: ""
  selfLink: ""

4.3 Logs

virt-controller logs

level=info timestamp=2018-10-06T14:58:46.837085Z pos=preset.go:142 component=virt-controller service=http namespace=default name=testvm kind= uid=4f2f4897-c978-11e8-9d0e-08002763f94a msg="Initializing VirtualMachineInstance"

level=info timestamp=2018-10-06T14:58:46.837188Z pos=preset.go:255 component=virt-controller service=http namespace=default name=testvm kind= uid=4f2f4897-c978-11e8-9d0e-08002763f94a msg="VirtualMachineInstancePreset small matches VirtualMachineInstance"

level=info timestamp=2018-10-06T14:58:46.837223Z pos=preset.go:171 component=virt-controller service=http namespace=default name=testvm kind= uid=4f2f4897-c978-11e8-9d0e-08002763f94a msg="Marking VirtualMachineInstance as initialized"

virt-handler logs

level=info timestamp=2018-10-06T14:58:59.921866Z pos=vm.go:319 component=virt-handler msg="Processing vmi testvm, existing: true\n"

level=info timestamp=2018-10-06T14:58:59.921906Z pos=vm.go:321 component=virt-handler msg="vmi is in phase: Scheduled\n"

level=info timestamp=2018-10-06T14:58:59.921920Z pos=vm.go:339 component=virt-handler msg="Domain: existing: false\n"

level=info timestamp=2018-10-06T14:58:59.921967Z pos=vm.go:426 component=virt-handler namespace=default name=testvm kind= uid=4f2f4897-c978-11e8-9d0e-08002763f94a msg="Processing vmi update"

level=info timestamp=2018-10-06T14:59:00.401528Z pos=server.go:75 component=virt-handler msg="Received Domain Event of type ADDED"

level=info timestamp=2018-10-06T14:59:00.401800Z pos=vm.go:731 component=virt-handler namespace=default name=testvm kind=Domain uid=4f2f4897-c978-11e8-9d0e-08002763f94a msg="Domain is in state Paused reason StartingUp"

level=info timestamp=2018-10-06T14:59:00.484518Z pos=server.go:75 component=virt-handler msg="Received Domain Event of type MODIFIED"

level=info timestamp=2018-10-06T14:59:00.486456Z pos=vm.go:762 component=virt-handler namespace=default name=testvm kind=Domain uid=4f2f4897-c978-11e8-9d0e-08002763f94a msg="Domain is in state Running reason Unknown"

level=info timestamp=2018-10-06T14:59:00.488657Z pos=vm.go:450 component=virt-handler namespace=default name=testvm kind= uid=4f2f4897-c978-11e8-9d0e-08002763f94a msg="Synchronization loop succeeded."

level=info timestamp=2018-10-06T14:59:00.489459Z pos=vm.go:319 component=virt-handler msg="Processing vmi testvm, existing: true\n"

level=info timestamp=2018-10-06T14:59:00.489483Z pos=vm.go:321 component=virt-handler msg="vmi is in phase: Scheduled\n"

level=info timestamp=2018-10-06T14:59:00.489497Z pos=vm.go:339 component=virt-handler msg="Domain: existing: true\n"

level=info timestamp=2018-10-06T14:59:00.489506Z pos=vm.go:341 component=virt-handler msg="Domain status: Running, reason: Unknown\n"

level=info timestamp=2018-10-06T14:59:00.489534Z pos=vm.go:429 component=virt-handler namespace=default name=testvm kind= uid=4f2f4897-c978-11e8-9d0e-08002763f94a msg="No update processing required"

level=info timestamp=2018-10-06T14:59:00.500284Z pos=server.go:75 component=virt-handler msg="Received Domain Event of type MODIFIED"

level=info timestamp=2018-10-06T14:59:00.507488Z pos=vm.go:450 component=virt-handler namespace=default name=testvm kind= uid=4f2f4897-c978-11e8-9d0e-08002763f94a msg="Synchronization loop succeeded."

level=info timestamp=2018-10-06T14:59:00.507577Z pos=vm.go:319 component=virt-handler msg="Processing vmi testvm, existing: true\n"

level=info timestamp=2018-10-06T14:59:00.507590Z pos=vm.go:321 component=virt-handler msg="vmi is in phase: Running\n"

level=info timestamp=2018-10-06T14:59:00.507602Z pos=vm.go:339 component=virt-handler msg="Domain: existing: true\n"

level=info timestamp=2018-10-06T14:59:00.507611Z pos=vm.go:341 component=virt-handler msg="Domain status: Running, reason: Unknown\n"

level=info timestamp=2018-10-06T14:59:00.507651Z pos=vm.go:426 component=virt-handler namespace=default name=testvm kind= uid=4f2f4897-c978-11e8-9d0e-08002763f94a msg="Processing vmi update"

4.4 Access the virt-launcher pod

Pod information:

kubectl get pod

NAME READY STATUS RESTARTS AGE

virt-launcher-testvm-jv6tk 2/2 Running 0 11m

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 12m default-scheduler Successfully assigned default/virt-launcher-testvm-jv6tk to k8s-01

Normal Pulled 11m kubelet, k8s-01 Container image "kubevirt/cirros-registry-disk-demo" already present on machine

Normal Created 11m kubelet, k8s-01 Created container

Normal Started 11m kubelet, k8s-01 Started container

Normal Pulled 11m kubelet, k8s-01 Container image "docker.io/kubevirt/virt-launcher:v0.8.0" already present on machine

Normal Created 11m kubelet, k8s-01 Created container

Normal Started 11m kubelet, k8s-01 Started container

Pod logs:

2018-10-06 14:58:49.197+0000: 52: error : virPCIDeviceConfigOpen:312 : Failed to open config space file '/sys/bus/pci/devices/0000:00:07.0/config': Read-only file system

2018-10-06 14:58:49.197+0000: 52: error : virPCIDeviceConfigOpen:312 : Failed to open config space file '/sys/bus/pci/devices/0000:00:08.0/config': Read-only file system

2018-10-06 14:58:49.197+0000: 52: error : virPCIDeviceConfigOpen:312 : Failed to open config space file '/sys/bus/pci/devices/0000:00:0d.0/config': Read-only file system

2018-10-06 14:58:51.058+0000: 47: error : virCommandWait:2600 : internal error: Child process (/usr/sbin/dmidecode -q -t 0,1,2,3,4,17) unexpected exit status 1: /dev/mem: No such file or directory

2018-10-06 14:58:51.069+0000: 47: error : virNodeSuspendSupportsTarget:336 : internal error: Cannot probe for supported suspend types

2018-10-06 14:58:51.069+0000: 47: warning : virQEMUCapsInit:1229 : Failed to get host power management capabilities

level=info timestamp=2018-10-06T14:58:58.295400Z pos=libvirt.go:276 component=virt-launcher msg="Connected to libvirt daemon"

level=info timestamp=2018-10-06T14:58:58.307021Z pos=virt-launcher.go:143 component=virt-launcher msg="Watchdog file created at /var/run/kubevirt/watchdog-files/default_testvm"

level=info timestamp=2018-10-06T14:58:58.307374Z pos=client.go:152 component=virt-launcher msg="Registered libvirt event notify callback"

level=info timestamp=2018-10-06T14:58:58.307460Z pos=virt-launcher.go:60 component=virt-launcher msg="Marked as ready"

level=info timestamp=2018-10-06T14:58:59.926926Z pos=converter.go:381 component=virt-launcher msg="Hardware emulation device '/dev/kvm' not present. Using software emulation."

level=info timestamp=2018-10-06T14:58:59.935170Z pos=cloud-init.go:254 component=virt-launcher msg="generated nocloud iso file /var/run/libvirt/kubevirt-ephemeral-disk/cloud-init-data/default/testvm/noCloud.iso"

level=info timestamp=2018-10-06T14:58:59.937885Z pos=converter.go:811 component=virt-launcher msg="Found nameservers in /etc/resolv.conf: \n`\u0000\n"

level=info timestamp=2018-10-06T14:58:59.937953Z pos=converter.go:812 component=virt-launcher msg="Found search domains in /etc/resolv.conf: default.svc.cluster.local svc.cluster.local cluster.local"

level=info timestamp=2018-10-06T14:58:59.938724Z pos=dhcp.go:62 component=virt-launcher msg="Starting SingleClientDHCPServer"

level=error timestamp=2018-10-06T14:58:59.940154Z pos=common.go:126 component=virt-launcher msg="updated MAC for interface: eth0 - be:38:5d:23:70:ad"

level=info timestamp=2018-10-06T14:58:59.971683Z pos=manager.go:158 component=virt-launcher namespace=default name=testvm kind= uid=4f2f4897-c978-11e8-9d0e-08002763f94a msg="Domain defined."

level=info timestamp=2018-10-06T14:58:59.972022Z pos=client.go:136 component=virt-launcher msg="Libvirt event 0 with reason 0 received"

2018-10-06 14:59:00.006+0000: 32: error : virDBusGetSystemBus:109 : internal error: Unable to get DBus system bus connection: Failed to connect to socket /run/dbus/system_bus_socket: No such file or directory

2018-10-06 14:59:00.388+0000: 32: error : virCgroupDetect:714 : At least one cgroup controller is required: No such device or address

level=info timestamp=2018-10-06T14:59:00.394568Z pos=virt-launcher.go:214 component=virt-launcher msg="Detected domain with UUID 5a9fc181-957e-5c32-9e5a-2de5e9673531"

level=info timestamp=2018-10-06T14:59:00.394747Z pos=monitor.go:253 component=virt-launcher msg="Monitoring loop: rate 1s start timeout 5m0s"

level=info timestamp=2018-10-06T14:59:00.398183Z pos=client.go:119 component=virt-launcher msg="domain status: 3:11"

level=info timestamp=2018-10-06T14:59:00.402066Z pos=client.go:145 component=virt-launcher msg="processed event"

level=info timestamp=2018-10-06T14:59:00.470313Z pos=client.go:136 component=virt-launcher msg="Libvirt event 4 with reason 0 received"

level=info timestamp=2018-10-06T14:59:00.478060Z pos=client.go:119 component=virt-launcher msg="domain status: 1:1"

level=info timestamp=2018-10-06T14:59:00.484866Z pos=manager.go:189 component=virt-launcher namespace=default name=testvm kind= uid=4f2f4897-c978-11e8-9d0e-08002763f94a msg="Domain started."

level=info timestamp=2018-10-06T14:59:00.485661Z pos=server.go:74 component=virt-launcher namespace=default name=testvm kind= uid=4f2f4897-c978-11e8-9d0e-08002763f94a msg="Synced vmi"

level=info timestamp=2018-10-06T14:59:00.490557Z pos=client.go:145 component=virt-launcher msg="processed event"

level=info timestamp=2018-10-06T14:59:00.490629Z pos=client.go:136 component=virt-launcher msg="Libvirt event 2 with reason 0 received"

level=info timestamp=2018-10-06T14:59:00.499447Z pos=client.go:119 component=virt-launcher msg="domain status: 1:1"

level=info timestamp=2018-10-06T14:59:00.500643Z pos=client.go:145 component=virt-launcher msg="processed event"

Inside the pod:

[root@testvm /]# ps -ef

UID PID PPID C STIME TTY TIME CMD

root 1 0 0 14:58 ? 00:00:00 /bin/bash /usr/share/kubevirt/virt-launcher/entrypoint.sh --qemu-timeout

root 7 1 0 14:58 ? 00:00:00 virt-launcher --qemu-timeout 5m --name testvm --uid 4f2f4897-c978-11e8-9

root 17 7 0 14:58 ? 00:00:00 /bin/bash /usr/share/kubevirt/virt-launcher/libvirtd.sh

root 18 7 0 14:58 ? 00:00:00 /usr/sbin/virtlogd -f /etc/libvirt/virtlogd.conf

root 31 17 0 14:58 ? 00:00:00 /usr/sbin/libvirtd

qemu 156 1 2 14:58 ? 00:00:23 /usr/bin/qemu-system-x86_64 -name guest=default_testvm,debug-threads=on

root 2829 0 0 15:13 ? 00:00:00 bash

root 2842 1 0 15:13 ? 00:00:00 /usr/bin/coreutils --coreutils-prog-shebang=sleep /usr/bin/sleep 1

root 2843 2829 0 15:13 ? 00:00:00 ps -ef
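The commands here were run in a shell inside the virt-launcher pod (the prompt shows the hostname testvm). Such a shell can be opened with kubectl exec. A minimal sketch, assuming the pod name follows the virt-launcher-<vm>-<suffix> pattern seen above and that the container running libvirtd and QEMU is named compute (an assumption; kubectl get pod <pod> -o jsonpath='{.spec.containers[*].name}' lists the real names). launcher_pod_for and exec_into_launcher are hypothetical helpers:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: locate the virt-launcher pod of a VM by its name
# prefix and open a shell in it.

# Prints the first pod named virt-launcher-<vm>-<suffix> in the current namespace.
launcher_pod_for() {
  local vm="$1"
  kubectl get pods -o name \
    | sed -n -E "s#^pods?/(virt-launcher-${vm}-[a-z0-9]+)\$#\1#p" \
    | head -n 1
}

# Opens an interactive bash shell in that pod; the container name "compute"
# is an assumption about the virt-launcher pod layout.
exec_into_launcher() {
  local pod
  pod="$(launcher_pod_for "$1")"
  if [ -z "$pod" ]; then
    echo "no virt-launcher pod found for VM $1" >&2
    return 1
  fi
  kubectl exec -it "$pod" -c compute -- bash
}
```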

[root@testvm /]# ps -ef | grep 156

qemu 156 1 2 14:58 ? 00:00:23 /usr/bin/qemu-system-x86_64 -name guest=default_testvm,debug-threads=on -S -object secret,id=masterKey0,format=raw,file=/var/lib/libvirt/qemu/domain-1-default_testvm/master-key.aes -machine pc-q35-2.12,accel=tcg,usb=off,dump-guest-core=off -cpu EPYC,acpi=on,ss=on,hypervisor=on,erms=on,mpx=on,pcommit=on,clwb=on,pku=on,ospke=on,la57=on,3dnowext=on,3dnow=on,vme=off,fma=off,avx=off,f16c=off,rdrand=off,avx2=off,rdseed=off,sha-ni=off,xsavec=off,fxsr_opt=off,misalignsse=off,3dnowprefetch=off,osvw=off -m 62 -realtime mlock=off -smp 1,sockets=1,cores=1,threads=1 -uuid 5a9fc181-957e-5c32-9e5a-2de5e9673531 -no-user-config -nodefaults -chardev socket,id=charmonitor,path=/var/lib/libvirt/qemu/domain-1-default_testvm/monitor.sock,server,nowait -mon chardev=charmonitor,id=monitor,mode=control -rtc base=utc -no-shutdown -boot strict=on -device pcie-root-port,port=0x10,chassis=1,id=pci.1,bus=pcie.0,multifunction=on,addr=0x2 -device pcie-root-port,port=0x11,chassis=2,id=pci.2,bus=pcie.0,addr=0x2.0x1 -device pcie-root-port,port=0x12,chassis=3,id=pci.3,bus=pcie.0,addr=0x2.0x2 -device pcie-root-port,port=0x13,chassis=4,id=pci.4,bus=pcie.0,addr=0x2.0x3 -drive file=/var/run/libvirt/kubevirt-ephemeral-disk/registry-disk-data/default/testvm/disk_registryvolume/disk-image.raw,format=raw,if=none,id=drive-ua-registrydisk -device virtio-blk-pci,scsi=off,bus=pci.2,addr=0x0,drive=drive-ua-registrydisk,id=ua-registrydisk,bootindex=1 -drive file=/var/run/libvirt/kubevirt-ephemeral-disk/cloud-init-data/default/testvm/noCloud.iso,format=raw,if=none,id=drive-ua-cloudinitdisk -device virtio-blk-pci,scsi=off,bus=pci.3,addr=0x0,drive=drive-ua-cloudinitdisk,id=ua-cloudinitdisk -netdev tap,fd=23,id=hostua-default -device virtio-net-pci,netdev=hostua-default,id=ua-default,mac=be:38:5d:65:04:dd,bus=pci.1,addr=0x0 -chardev socket,id=charserial0,path=/var/run/kubevirt-private/4f2f4897-c978-11e8-9d0e-08002763f94a/virt-serial0,server,nowait -device isa-serial,chardev=charserial0,id=serial0 -vnc vnc=unix:/var/run/kubevirt-private/4f2f4897-c978-11e8-9d0e-08002763f94a/virt-vnc -device VGA,id=video0,vgamem_mb=16,bus=pcie.0,addr=0x1 -msg timestamp=on

root 2885 2829 0 15:13 ? 00:00:00 grep --color=auto 156
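The -machine argument of the QEMU process contains accel=tcg, meaning QEMU is running with TCG software emulation rather than KVM. This matches the virt-launcher log line "Hardware emulation device '/dev/kvm' not present. Using software emulation." On a node with working KVM one would expect accel=kvm instead. A small bash sketch that extracts the accelerator from a QEMU command line; qemu_accel is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the accelerator (e.g. "tcg" or "kvm") from the
# "accel=" option of a QEMU command line read on stdin.
qemu_accel() {
  grep -o 'accel=[a-z]*' | head -n 1 | cut -d= -f2
}

# Example (inside the virt-launcher pod, assuming procps ps is available):
#   ps -C qemu-system-x86_64 -o args= | qemu_accel
```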

[root@testvm /]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: tunl0@NONE: mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

4: eth0@if18: mtu 1500 qdisc noqueue master k6t-eth0 state UP group default

link/ether be:38:5d:23:70:ad brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::bc38:5dff:fe23:70ad/64 scope link

valid_lft forever preferred_lft forever

5: k6t-eth0: mtu 1500 qdisc noqueue state UP group default

link/ether be:38:5d:23:70:ad brd ff:ff:ff:ff:ff:ff

inet 169.254.75.10/32 brd 169.254.75.10 scope global k6t-eth0

valid_lft forever preferred_lft forever

inet6 fe80::bc38:5dff:fe65:4dd/64 scope link

valid_lft forever preferred_lft forever

6: vnet0: mtu 1500 qdisc pfifo_fast master k6t-eth0 state UNKNOWN group default qlen 1000

link/ether fe:38:5d:65:04:dd brd ff:ff:ff:ff:ff:ff

inet6 fe80::fc38:5dff:fe65:4dd/64 scope link

valid_lft forever preferred_lft forever

On the Kubernetes node:

root 19315 19298 0 10:58 ? 00:00:00 /bin/bash /usr/share/kubevirt/virt-launcher/entrypoint.sh --qemu-timeout 5m --name testvm --uid 4f2f4897-c978-11e8-9d0e-08002763f94a --namespace default --kubevirt-share-dir /var/run/kubevirt --readiness-file /tmp/healthy --grace-period-seconds 45 --hook-sidecars 0 --use-emulation

root 19334 19315 0 10:58 ? 00:00:00 virt-launcher --qemu-timeout 5m --name testvm --uid 4f2f4897-c978-11e8-9d0e-08002763f94a --namespace default --kubevirt-share-dir /var/run/kubevirt --readiness-file /tmp/healthy --grace-period-seconds 45 --hook-sidecars 0 --use-emulation

root 19354 19334 0 10:58 ? 00:00:00 /bin/bash /usr/share/kubevirt/virt-launcher/libvirtd.sh

root 19355 19334 0 10:58 ? 00:00:00 /usr/sbin/virtlogd -f /etc/libvirt/virtlogd.conf

root 19368 19354 0 10:58 ? 00:00:00 /usr/sbin/libvirtd

root 19607 2 0 10:58 ? 00:00:00 [kworker/1:4]

qemu 19649 19315 2 10:58 ? 00:00:23 /usr/bin/qemu-system-x86_64 -name guest=default_testvm,debug-threads=on -S -object secret,id=masterKey0,format=raw,file=/var/lib/libvirt/qemu/domain-1-default_testvm/master-key.aes -machine pc-q35-2.12,accel=tcg,usb=off,dump-guest-core=off -cpu EPYC,acpi=on,ss=on,hypervisor=on,erms=on,mpx=on,pcommit=on,clwb=on,pku=on,ospke=on,la57=on,3dnowext=on,3dnow=on,vme=off,fma=off,avx=off,f16c=off,rdrand=off,avx2=off,rdseed=off,sha-ni=off,xsavec=off,fxsr_opt=off,misalignsse=off,3dnowprefetch=off,osvw=off -m 62 -realtime mlock=off -smp 1,sockets=1,cores=1,threads=1 -uuid 5a9fc181-957e-5c32-9e5a-2de5e9673531 -no-user-config -nodefaults -chardev socket,id=charmonitor,path=/var/lib/libvirt/qemu/domain-1-default_testvm/monitor.sock,server,nowait -mon chardev=charmonitor,id=monitor,mode=control -rtc base=utc -no-shutdown -boot strict=on -device pcie-root-port,port=0x10,chassis=1,id=pci.1,bus=pcie.0,multifunction=on,addr=0x2 -device pcie-root-port,port=0x11,chassis=2,id=pci.2,bus=pcie.0,addr=0x2.0x1 -device pcie-root-port,port=0x12,chassis=3,id=pci.3,bus=pcie.0,addr=0x2.0x2 -device pcie-root-port,port=0x13,chassis=4,id=pci.4,bus=pcie.0,addr=0x2.0x3 -drive file=/var/run/libvirt/kubevirt-ephemeral-disk/registry-disk-data/default/testvm/disk_registryvolume/disk-image.raw,format=raw,if=none,id=drive-ua-registrydisk -device virtio-blk-pci,scsi=off,bus=pci.2,addr=0x0,drive=drive-ua-registrydisk,id=ua-registrydisk,bootindex=1 -drive file=/var/run/libvirt/kubevirt-ephemeral-disk/cloud-init-data/default/testvm/noCloud.iso,format=raw,if=none,id=drive-ua-cloudinitdisk -device virtio-blk-pci,scsi=off,bus=pci.3,addr=0x0,drive=drive-ua-cloudinitdisk,id=ua-cloudinitdisk -netdev tap,fd=23,id=hostua-default -device virtio-net-pci,netdev=hostua-default,id=ua-default,mac=be:38:5d:65:04:dd,bus=pci.1,addr=0x0 -chardev socket,id=charserial0,path=/var/run/kubevirt-private/4f2f4897-c978-11e8-9d0e-08002763f94a/virt-serial0,server,nowait -device 
isa-serial,chardev=charserial0,id=serial0 -vnc vnc=unix:/var/run/kubevirt-private/4f2f4897-c978-11e8-9d0e-08002763f94a/virt-vnc -device VGA,id=video0,vgamem_mb=16,bus=pcie.0,addr=0x1 -msg timestamp=on

root 20444 2 0 10:59 ? 00:00:00 [kworker/0:1]

root 24979 2 0 11:04 ? 00:00:00 [kworker/1:0]

root 25450 665 0 09:46 ? 00:00:00 /sbin/dhclient -d -q -sf /usr/libexec/nm-dhcp-helper -pf /var/run/dhclient-enp0s3.pid -lf /var/lib/NetworkManager/dhclient-d5c415a1-73dc-47da-afdd-9e44d451e97f-enp0s3.lease -cf /var/lib/NetworkManager/dhclient-enp0s3.conf enp0s3

4.5 Access the VM

virtctl console testvm

Successfully connected to testvm console. The escape sequence is ^]

login as 'cirros' user. default password: 'gocubsgo'. use 'sudo' for root.

testvm login: cirros

Password:

$ ip a

1: lo: mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast qlen 1000

link/ether be:38:5d:65:04:dd brd ff:ff:ff:ff:ff:ff

inet 10.244.61.218/32 brd 10.255.255.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::bc38:5dff:fe65:4dd/64 scope link tentative flags 08

valid_lft forever preferred_lft forever

$ ps -ef

PID USER COMMAND

1 root init

2 root [kthreadd]

3 root [ksoftirqd/0]

4 root [kworker/0:0]

5 root [kworker/0:0H]

7 root [rcu_sched]

8 root [rcu_bh]

9 root [migration/0]

10 root [watchdog/0]

11 root [kdevtmpfs]

12 root [netns]

13 root [perf]

14 root [khungtaskd]

15 root [writeback]

16 root [ksmd]

17 root [crypto]

18 root [kintegrityd]

19 root [bioset]

20 root [kblockd]

21 root [ata_sff]

22 root [md]

23 root [devfreq_wq]

24 root [kworker/u2:1]

25 root [kworker/0:1]

27 root [kswapd0]

28 root [vmstat]

29 root [fsnotify_mark]

30 root [ecryptfs-kthrea]

46 root [kthrotld]

47 root [acpi_thermal_pm]

48 root [bioset]

49 root [bioset]

50 root [bioset]

51 root [bioset]

52 root [bioset]

53 root [bioset]

54 root [bioset]

55 root [bioset]

56 root [bioset]

57 root [bioset]

58 root [bioset]

59 root [bioset]

60 root [bioset]

61 root [bioset]

62 root [bioset]

63 root [bioset]

64 root [bioset]

65 root [bioset]

66 root [bioset]

67 root [bioset]

68 root [bioset]

69 root [bioset]

70 root [bioset]

71 root [bioset]

72 root [bioset]

73 root [bioset]

77 root [ipv6_addrconf]

79 root [kworker/u2:2]

91 root [deferwq]

92 root [charger_manager]

123 root [jbd2/vda1-8]

124 root [ext4-rsv-conver]

125 root [kworker/0:1H]

152 root /sbin/syslogd -n

154 root /sbin/klogd -n

181 root /sbin/acpid

252 root udhcpc -p /var/run/udhcpc.eth0.pid -R -n -T 60 -i eth0 -s /sbin/

280 root /usr/sbin/dropbear -R

372 cirros -sh

373 root /sbin/getty 115200 tty1

378 cirros ps -ef

Reposted from https://blog.csdn.net/cloudvtech
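The qemu-system-x86_64 command line listed on the k8s node for testvm records how the guest was actually started: `accel=tcg` in the `-machine` option means QEMU's TCG software emulation (consistent with the `--use-emulation` flag passed to virt-launcher, since this node has no /dev/kvm), and the `mac=` of the `virtio-net-pci` device is the MAC that the guest's eth0 reports. As a small sketch, the hypothetical helpers below (not part of KubeVirt or QEMU) pull those values out of a trimmed copy of that command line:

```python
import shlex


def parse_opts(value):
    """Split a qemu option string like 'type,a=1,b=2' into a dict; bare words map to None.

    Simplification: qemu's ',,' escape for literal commas is not handled.
    """
    opts = {}
    for part in value.split(","):
        key, sep, val = part.partition("=")
        opts[key] = val if sep else None
    return opts


def options_for(cmdline, flag):
    """Yield the parsed argument of every occurrence of `flag` (e.g. '-device')."""
    args = shlex.split(cmdline)
    for i, arg in enumerate(args[:-1]):
        if arg == flag:
            yield parse_opts(args[i + 1])


# Trimmed excerpt of the testvm qemu command line from the node's process list.
cmd = ("/usr/bin/qemu-system-x86_64 -name guest=default_testvm,debug-threads=on "
       "-machine pc-q35-2.12,accel=tcg,usb=off,dump-guest-core=off -m 62 "
       "-netdev tap,fd=23,id=hostua-default "
       "-device virtio-net-pci,netdev=hostua-default,id=ua-default,"
       "mac=be:38:5d:65:04:dd,bus=pci.1,addr=0x0")

machine = next(options_for(cmd, "-machine"))
nic = next(d for d in options_for(cmd, "-device") if "virtio-net-pci" in d)
print("accel:", machine["accel"])   # accel: tcg
print("nic mac:", nic["mac"])       # nic mac: be:38:5d:65:04:dd
```

On a node where /dev/kvm is available and emulation is not forced, the same check would be expected to report `kvm` instead of `tcg`.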

V. Start another virtual machine

5.1 vm1.yaml

apiVersion: kubevirt.io/v1alpha2
kind: VirtualMachine
metadata:
  name: testvm1
spec:
  running: true
  selector:
    matchLabels:
      guest: testvm1
  template:
    metadata:
      labels:
        guest: testvm1
        kubevirt.io/size: big
    spec:
      domain:
        devices:
          disks:
            - name: registrydisk
              volumeName: registryvolume
              disk:
                bus: virtio
            - name: cloudinitdisk
              volumeName: cloudinitvolume
              disk:
                bus: virtio
      volumes:
        - name: registryvolume
          registryDisk:
            image: kubevirt/cirros-registry-disk-demo
        - name: cloudinitvolume
          cloudInitNoCloud:
            userDataBase64: SGkuXG4=
---
apiVersion: kubevirt.io/v1alpha2
kind: VirtualMachineInstancePreset
metadata:
  name: big
spec:
  selector:
    matchLabels:
      kubevirt.io/size: big
  domain:
    resources:
      requests:
        memory: 128M
    devices: {}

5.2 Check the status

[root@k8s-install-node kubevirt]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

virt-launcher-testvm-jv6tk 2/2 Running 0 33m 10.244.61.218 k8s-01

virt-launcher-testvm1-dtxfl 2/2 Running 0 3m 10.244.179.39 k8s-02
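Each VM runs inside its own `virt-launcher-<vm>-<suffix>` pod, and the guest uses that pod's IP as its own address (10.244.179.39 for testvm1), which is why ssh to the pod IP reaches the guest. A minimal sketch of a hypothetical helper that turns the table above into a VM name → (IP, node) map, assuming no column value contains spaces:

```python
# Columns of `kubectl get pod -o wide` as printed above; NOMINATED NODE is empty here.
COLUMNS = ["NAME", "READY", "STATUS", "RESTARTS", "AGE", "IP", "NODE"]
PREFIX = "virt-launcher-"


def vm_addresses(table):
    """Map VM name -> (pod IP, node) using the virt-launcher rows of a kubectl table."""
    result = {}
    for line in table.strip().splitlines()[1:]:   # skip the header line
        fields = line.split()
        if not fields or not fields[0].startswith(PREFIX):
            continue
        row = dict(zip(COLUMNS, fields))          # zip ignores the missing trailing column
        # virt-launcher-<vm>-<random suffix>: drop the prefix and the last dash segment
        vm = row["NAME"][len(PREFIX):].rsplit("-", 1)[0]
        result[vm] = (row["IP"], row["NODE"])
    return result


table = """NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE
virt-launcher-testvm-jv6tk 2/2 Running 0 33m 10.244.61.218 k8s-01
virt-launcher-testvm1-dtxfl 2/2 Running 0 3m 10.244.179.39 k8s-02"""

for vm, (ip, node) in vm_addresses(table).items():
    print(f"{vm}: {ip} on {node}")
# testvm: 10.244.61.218 on k8s-01
# testvm1: 10.244.179.39 on k8s-02
```

Against a live cluster, `kubectl get pod <pod-name> -o jsonpath='{.status.podIP}'` returns the same address without any text parsing.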

[root@k8s-install-node ~]# ssh cirros@10.244.179.39

The authenticity of host '10.244.179.39 (10.244.179.39)' can't be established.

ECDSA key fingerprint is SHA256:OgAtlTnwN/EYYwCPFxWBrDMBaZeoZzDS+P7pEZ6CEwM.

ECDSA key fingerprint is MD5:c3:97:7f:e3:73:11:5d:8a:cd:dc:7e:27:a2:1b:c7:3e.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '10.244.179.39' (ECDSA) to the list of known hosts.

cirros@10.244.179.39's password:

$ ip a

1: lo: mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast qlen 1000

link/ether b6:91:13:f1:b2:0f brd ff:ff:ff:ff:ff:ff

inet 10.244.179.39/32 brd 10.255.255.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::b491:13ff:fef1:b20f/64 scope link tentative flags 08

valid_lft forever preferred_lft forever
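The guest's IPv6 link-local address is not random: it is derived from the interface MAC with the modified EUI-64 rule (flip the universal/local bit of the first octet and insert ff:fe between the third and fourth octets). A worked sketch using only Python's standard `ipaddress` module reproduces the address shown above for testvm1's eth0:

```python
import ipaddress


def link_local_from_mac(mac):
    """Return the fe80::/64 address built from a MAC via modified EUI-64."""
    octets = [int(b, 16) for b in mac.split(":")]
    octets[0] ^= 0x02                                # flip the universal/local bit
    eui64 = octets[:3] + [0xFF, 0xFE] + octets[3:]   # insert ff:fe in the middle
    iid = int.from_bytes(bytes(eui64), "big")        # 64-bit interface identifier
    return ipaddress.IPv6Address((0xFE80 << 112) | iid)


print(link_local_from_mac("b6:91:13:f1:b2:0f"))  # fe80::b491:13ff:fef1:b20f
```

The same function maps be:38:5d:65:04:dd (testvm's guest eth0, and the `mac=` of its virtio-net device) to fe80::bc38:5dff:fe65:4dd, which is also the address listed on the k6t-eth0 bridge inside testvm's virt-launcher pod.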