Setting up an ELK logging system on CentOS 7

Installing Elasticsearch 6.0.0

1. Download and unpack Elasticsearch

# Change into /opt: cd /opt

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.0.0.zip

unzip elasticsearch-6.0.0.zip

cd elasticsearch-6.0.0/

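2. Add a non-root user

# On Linux, Elasticsearch refuses to run as root, so create a dedicated elk user

groupadd elk # create the elk group

useradd elk -g elk -s /sbin/nologin # create the user elk; -g elk sets its group to elk, -s /sbin/nologin disables interactive login

chown -R elk:elk /opt # change the owner and group of /opt and everything inside it to elk:elk

# Edit the config file: vim config/elasticsearch.yml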

network.host: 0.0.0.0

http.port: 9200

path.logs: /opt/elasticsearch-6.0.0/logs

path.data: /opt/elasticsearch-6.0.0/data

action.destructive_requires_name: true

# Allow cross-origin (CORS) requests

http.cors.enabled: true

http.cors.allow-origin: "*"
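Once Elasticsearch is running with the settings above, its HTTP API on port 9200 answers a plain GET on the root path with a JSON document that includes the version number. The following is a minimal sketch, using only the Python standard library, of how that check could be scripted. The function names are hypothetical, and the host passed in is whatever address your node listens on.

```python
import json
import urllib.request


def es_root_url(host: str, port: int = 9200) -> str:
    """Build the root URL of the Elasticsearch HTTP API."""
    return f"http://{host}:{port}/"


def parse_version(body: bytes) -> str:
    """Extract version.number from the JSON returned by the root endpoint."""
    return json.loads(body)["version"]["number"]


def es_version(host: str, port: int = 9200, timeout: float = 5.0) -> str:
    """Query a running node and return the version it reports."""
    with urllib.request.urlopen(es_root_url(host, port), timeout=timeout) as resp:
        return parse_version(resp.read())
```

For the installation in this post, `es_version("192.168.18.133")` should return `"6.0.0"` if the node is up and reachable; a connection error usually means the service is not started or `network.host` is not what you expect.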

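3. Adjust the JVM heap size, change the system settings, and start the service

See my earlier post, "Installing an elasticsearch-7.0.1 pseudo-cluster on CentOS 7".

Installing Logstash 6.0.0

1. Download and configure Logstash

# Change into /opt: cd /opt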

wget https://artifacts.elastic.co/downloads/logstash/logstash-6.0.0.tar.gz

tar -xzvf logstash-6.0.0.tar.gz

# Change into the logstash-6.0.0 directory: cd /opt/logstash-6.0.0

# Create a new config file

touch config/python-logstash.conf

# Edit the config file

vim config/python-logstash.conf

# The config file contains the following

input {
  udp {
    port => 5959    # UDP port to listen on
    codec => json   # incoming events are JSON
  }
}

output {
  elasticsearch {
    hosts => ["192.168.18.133:9200"]          # Elasticsearch address
    index => "python-message-%{+YYYY.MM.dd}"  # index the events are written to
  }
  stdout {
    codec => rubydebug
    #codec => json
  }
  # Output to Kafka
  kafka {
    codec => json
    topic_id => "mytopic"                     # Kafka topic to publish to
    bootstrap_servers => "10.211.55.3:29092"  # Kafka broker ip:port
  }
}
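The udp input with the json codec expects each datagram to carry one JSON object. As a rough illustration of what a client has to put on the wire, here is a standard-library sketch that sends a single event without any logging library. The helper names `encode_event` and `send_event` are hypothetical, and the only field assumed is `message`.

```python
import json
import socket


def encode_event(message: str, **fields) -> bytes:
    """Serialize one event as a UTF-8 encoded JSON object."""
    event = {"message": message}
    event.update(fields)
    return json.dumps(event).encode("utf-8")


def send_event(host: str, port: int, message: str, **fields) -> int:
    """Send one JSON event in a single UDP datagram; returns the bytes sent."""
    payload = encode_event(message, **fields)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        return sock.sendto(payload, (host, port))
```

With Logstash running on the machine that listens on port 5959, a call such as `send_event("192.168.18.133", 5959, "hello", level="info")` should show up in the rubydebug output on stdout. UDP gives no delivery guarantee, so a successful send does not prove that Logstash received the event.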

2. Validate the config file and start Logstash

# Check that the config file is valid

/opt/logstash-6.0.0/bin/logstash -t -f /opt/logstash-6.0.0/config/python-logstash.conf
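If the config check is part of a deployment script, it can be wrapped in a few lines of Python. This is only a sketch: the function names are hypothetical, the paths are the ones used in this post, and it assumes that Logstash exits with status 0 when the configuration is valid and with a non-zero status otherwise.

```python
import subprocess

LOGSTASH_HOME = "/opt/logstash-6.0.0"  # install location used in this post


def config_test_command(conf_path: str, home: str = LOGSTASH_HOME) -> list:
    """Build the argument list for `logstash -t -f <conf>`."""
    return [f"{home}/bin/logstash", "-t", "-f", conf_path]


def config_is_valid(conf_path: str, home: str = LOGSTASH_HOME) -> bool:
    """Run the config test; assumes exit status 0 means the config is valid."""
    result = subprocess.run(config_test_command(conf_path, home))
    return result.returncode == 0
```

Passing the command as a list avoids shell quoting issues if the config path ever contains spaces.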

Start:

/opt/logstash-6.0.0/bin/logstash -f /opt/logstash-6.0.0/config/python-logstash.conf

Start with every .conf file in the config directory loaded:

/opt/logstash-6.0.0/bin/logstash -f "/opt/logstash-6.0.0/config/*.conf"

Or start in the background:

nohup /opt/logstash-6.0.0/bin/logstash -f /opt/logstash-6.0.0/config/python-logstash.conf &

Installing Kibana

# Change into /opt: cd /opt

wget https://artifacts.elastic.co/downloads/kibana/kibana-6.0.0-x86_64.rpm

yum install -y kibana-6.0.0-x86_64.rpm

# Edit the config file

vim /etc/kibana/kibana.yml

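server.port: 5601 # listening port

# server.host: "0.0.0.0"

server.host: "10.211.55.3" # IP address to listen on; an internal IP is recommended

elasticsearch.url: "http://10.211.55.3:9200" # URL Kibana uses to connect to Elasticsearch; any node of the same cluster can be given here

# Start the service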

systemctl enable kibana

systemctl start kibana

# Verify that Kibana is listening

ss -antlup | grep 5601
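The same check can be done from another machine, where `ss` on the Kibana host is not available, by trying to open a TCP connection to the port. A minimal standard-library sketch follows; the function name `port_is_open` is hypothetical.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise
        return sock.connect_ex((host, port)) == 0
```

For the setup above, `port_is_open("10.211.55.3", 5601)` should return `True` once Kibana has finished starting. A `False` result from a remote machine can also mean that a firewall is blocking the port.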

# -*- coding: utf-8 -*-
# @Time : 2019/3/18 9:45
# @Author :
"""
Send Python log records to Logstash over UDP using python-logstash.
"""
import logging
import sys

import logstash  # third-party package: pip install python-logstash


class Logstash(object):

    def __init__(self, host='192.168.18.133', console_name='article', port=5959, version=1):
        self.host = host
        # Name of the logger that emits the records
        self.console_name = console_name
        self.port = port
        self.version = version
        self.spider_logger = self.init()

    def init(self):
        spider_logger = logging.getLogger(self.console_name)
        spider_logger.setLevel(logging.INFO)
        # Attach the handler only once, so that a second instance with the
        # same logger name does not send every record twice
        if not spider_logger.handlers:
            spider_logger.addHandler(logstash.LogstashHandler(self.host, self.port, version=self.version))
        return spider_logger

    def level(self, message='', level='info'):
        """Log message at the given level; anything other than 'error' or 'info' is logged as a warning."""
        if not message:
            raise ValueError("please input your message")
        if level == 'error':
            self.spider_logger.error(message)
        elif level == 'info':
            self.spider_logger.info(message)
        else:
            self.spider_logger.warning(message)

    def extra(self, extra=None):
        """Log a test message with extra fields attached."""
        # add extra fields to the logstash message
        if not extra:
            extra = {
                'test_string': 'python version: ' + repr(sys.version_info),
                'test_boolean': True,
                'test_dict': {'a': 1, 'b': 'c'},
                'test_float': 1.23,
                'test_integer': 123,
                'test_list': [1, 2, '3'],
            }
        self.spider_logger.info('python-logstash: test extra fields', extra=extra)


if __name__ == '__main__':
    lgs = Logstash()
    lgs.level(message='hello')
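To confirm that the message reached Elasticsearch, it helps to know which index it landed in. The `index` setting in the Logstash output produces one index per day, named from the event timestamp (which Logstash keeps in UTC). The sketch below computes that name and builds a URI-search request against it; `index_for` and `search_url` are hypothetical helpers, and the host is the Elasticsearch address used in this post.

```python
from datetime import date
from urllib.parse import urlencode


def index_for(day: date, prefix: str = "python-message-") -> str:
    """Name of the daily index written by index => "python-message-%{+YYYY.MM.dd}"."""
    return prefix + day.strftime("%Y.%m.%d")


def search_url(es_host: str, index: str, query: str, size: int = 5) -> str:
    """Build a URI-search URL for one index (or a wildcard pattern)."""
    params = urlencode({"q": query, "size": size})
    return f"http://{es_host}/{index}/_search?{params}"


# Index for the date in the file header above
print(index_for(date(2019, 3, 18)))
# Search every daily index for the test message
print(search_url("192.168.18.133:9200", "python-message-*", "message:hello"))
```

Opening the second URL in a browser or with curl returns the matching documents as JSON. In Kibana, the corresponding index pattern is `python-message-*`.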

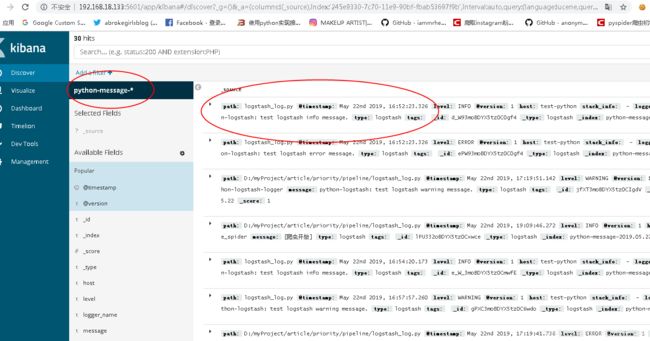

In Kibana

In Logstash

Reference: https://www.jianshu.com/p/cb510f55e318

Reference: https://www.jianshu.com/p/1bffca69ac1a

Reference: https://blog.51cto.com/kexiaoke/1952969

Reference: https://www.cnblogs.com/lovelinux199075/p/9101631.html