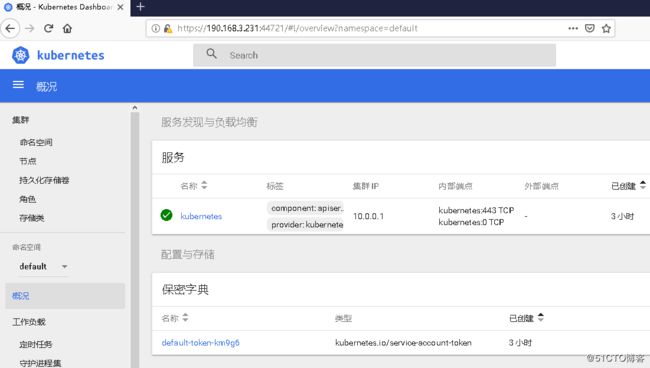

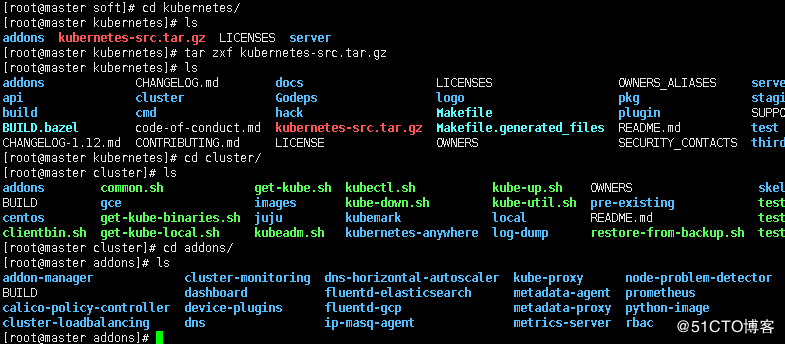

I. Deploying the Web UI (Dashboard)

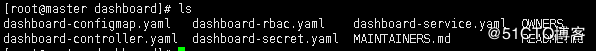

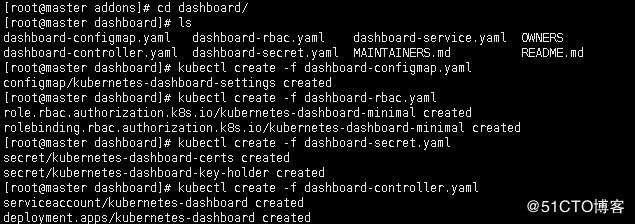

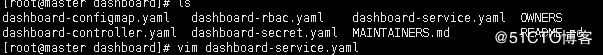

1. Extract the package and enter its directory

The package is the one used earlier when deploying the master components: the files are inside kubernetes-server-linux-amd64.tar.gz.

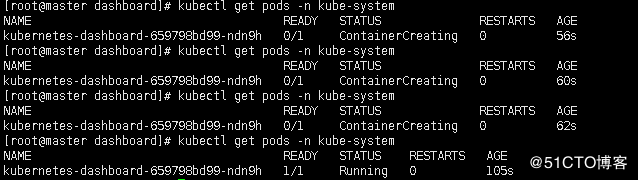

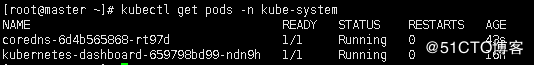

Check the pods that were started. They are not in the default namespace but in kube-system.

Note: the dashboard image referenced in dashboard-controller.yaml is hosted outside China. If pulling it is slow, switch to a mirror inside China: image: registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.0

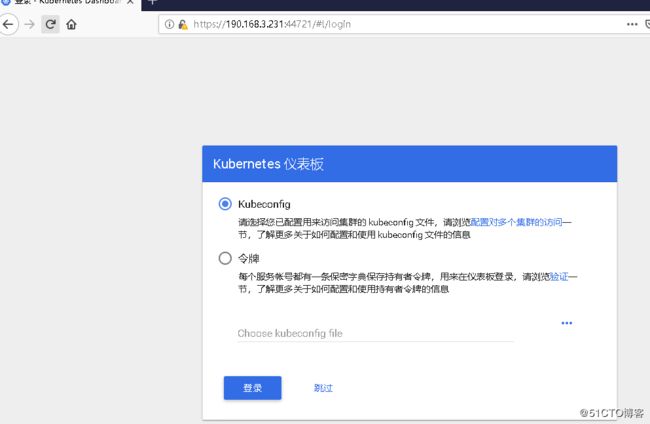

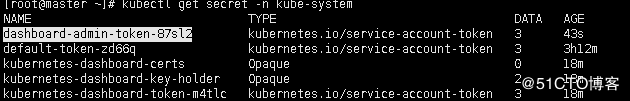

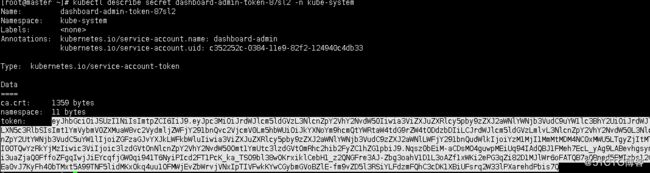

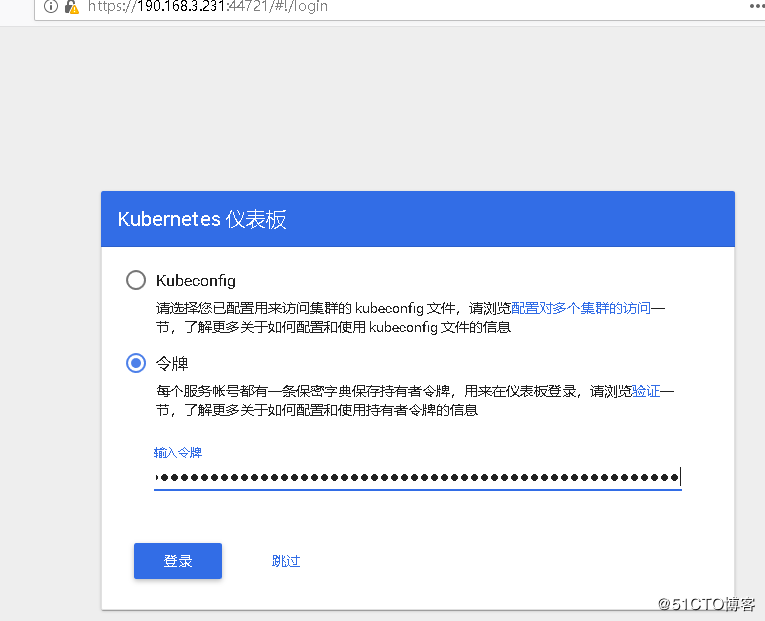

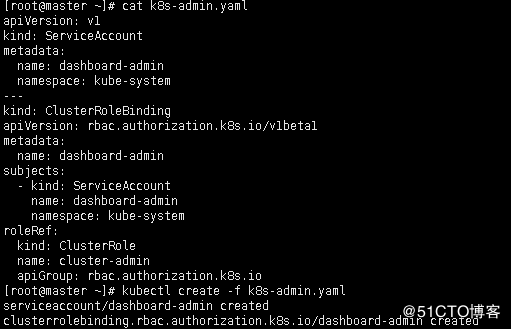

5. Set up a login token and access the web UI

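Create a user (service account) for access, bind it to the cluster-admin role, and log in with the token stored in the secret generated for it.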

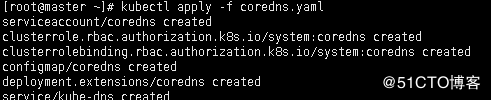
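The exact commands are only visible in the screenshot. As a hedged sketch, assuming a cluster older than Kubernetes 1.24 (where a token Secret is still created automatically for every service account) and a hypothetical service account name dashboard-admin, the same flow can be scripted. Only find_token_secret is pure text processing; create_dashboard_admin needs kubectl and access to the cluster.

```shell
# ASSUMPTION: "dashboard-admin" is a hypothetical service account name, and the
# cluster auto-creates "<name>-token-xxxxx" Secrets (Kubernetes < 1.24).

# Read `kubectl -n kube-system get secret` output on stdin and print the first
# Secret whose name starts with "<sa>-token-" (the header line is skipped).
find_token_secret() {
  local sa="$1"
  awk -v prefix="${sa}-token-" 'NR > 1 && index($1, prefix) == 1 { print $1; exit }'
}

# Cluster-side steps: create the account, bind it to cluster-admin, and print
# the Secret (its "token:" field is what the dashboard login page asks for).
create_dashboard_admin() {
  local sa="${1:-dashboard-admin}" secret
  kubectl -n kube-system create serviceaccount "$sa"
  kubectl create clusterrolebinding "$sa" \
    --clusterrole=cluster-admin \
    --serviceaccount="kube-system:${sa}"
  secret="$(kubectl -n kube-system get secret | find_token_secret "$sa")"
  kubectl -n kube-system describe secret "$secret"
}
```

Binding to cluster-admin gives the dashboard full control of the cluster, which is convenient for a lab setup like this one but broader than most production setups would allow.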

II. Installing CoreDNS

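The YAML for installing CoreDNS can be found in the Kubernetes GitHub repository: https://github.com/kubernetes/kubernetes/blob/master/cluster/addons/dns/coredns/coredns.yaml.sed. It is a template; in the version below its placeholders have been filled in with this cluster's values: cluster domain cluster.local, DNS service IP 10.0.0.2 (this must match the cluster DNS address configured for the kubelets), and a 170Mi memory limit.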

[root@master ~]# vim coredns.yaml

    # Warning: This is a file generated from the base underscore template file: coredns.yaml.base
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
        addonmanager.kubernetes.io/mode: Reconcile
      name: system:coredns
    rules:
    - apiGroups:
      - ""
      resources:
      - endpoints
      - services
      - pods
      - namespaces
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
        addonmanager.kubernetes.io/mode: EnsureExists
      name: system:coredns
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:coredns
    subjects:
    - kind: ServiceAccount
      name: coredns
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        addonmanager.kubernetes.io/mode: EnsureExists
    data:
      Corefile: |
        .:53 {
            errors
            health
            kubernetes cluster.local in-addr.arpa ip6.arpa {
              pods insecure
              upstream
              fallthrough in-addr.arpa ip6.arpa
            }
            prometheus :9153
            proxy . /etc/resolv.conf
            cache 30
            loop
            reload
            loadbalance
        }
    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "CoreDNS"
    spec:
      # replicas: not specified here:
      # 1. In order to make Addon Manager do not reconcile this replicas parameter.
      # 2. Default is 1.
      # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
      selector:
        matchLabels:
          k8s-app: kube-dns
      template:
        metadata:
          labels:
            k8s-app: kube-dns
          annotations:
            seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
        spec:
          serviceAccountName: coredns
          tolerations:
            - key: "CriticalAddonsOnly"
              operator: "Exists"
          containers:
          - name: coredns
            image: coredns/coredns:1.2.6
            imagePullPolicy: IfNotPresent
            resources:
              limits:
                memory: 170Mi
              requests:
                cpu: 100m
                memory: 70Mi
            args: [ "-conf", "/etc/coredns/Corefile" ]
            volumeMounts:
            - name: config-volume
              mountPath: /etc/coredns
              readOnly: true
            ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            - containerPort: 9153
              name: metrics
              protocol: TCP
            livenessProbe:
              httpGet:
                path: /health
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            securityContext:
              allowPrivilegeEscalation: false
              capabilities:
                add:
                - NET_BIND_SERVICE
                drop:
                - all
              readOnlyRootFilesystem: true
          dnsPolicy: Default
          volumes:
            - name: config-volume
              configMap:
                name: coredns
                items:
                - key: Corefile
                  path: Corefile
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: kube-dns
      namespace: kube-system
      annotations:
        prometheus.io/port: "9153"
        prometheus.io/scrape: "true"
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "CoreDNS"
    spec:
      selector:
        k8s-app: kube-dns
      clusterIP: 10.0.0.2
      ports:
      - name: dns
        port: 53
        protocol: UDP
      - name: dns-tcp
        port: 53
        protocol: TCP

2. Check the result of the DNS deployment

[root@master ~]# kubectl get pods -n kube-system

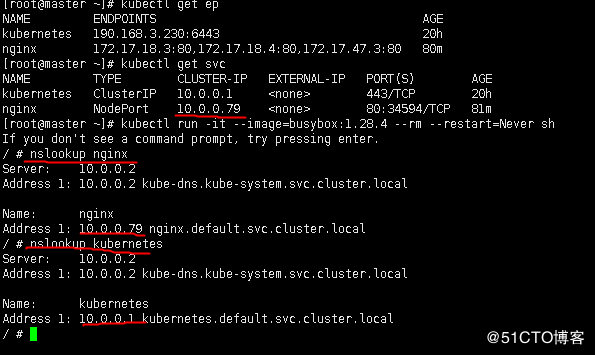

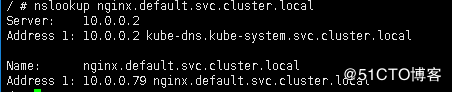
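Instead of reading the table by eye, the check can be scripted. A minimal sketch, assuming the default kubectl get pods columns (NAME READY STATUS RESTARTS AGE) and that the CoreDNS pod names start with coredns-, as they do for the Deployment above; coredns_ready is a hypothetical helper name.

```shell
# Read `kubectl get pods -n kube-system` output on stdin. Succeed only if at
# least one coredns pod exists and every coredns pod is Running with all of
# its containers ready (READY column "n/n").
coredns_ready() {
  awk '
    NR > 1 && index($1, "coredns-") == 1 {
      found++
      split($2, r, "/")                   # READY column, e.g. "1/1"
      if ($3 != "Running" || r[1] != r[2]) bad++
    }
    END { if (found > 0 && bad == 0) exit 0; exit 1 }
  '
}
```

Usage: kubectl get pods -n kube-system | coredns_ready && echo "CoreDNS is up"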

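Resolving names across namespaces

To resolve a service in another namespace, append the namespace and .svc.cluster.local to the service name, i.e. SERVICE.NAMESPACE.svc.cluster.local.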

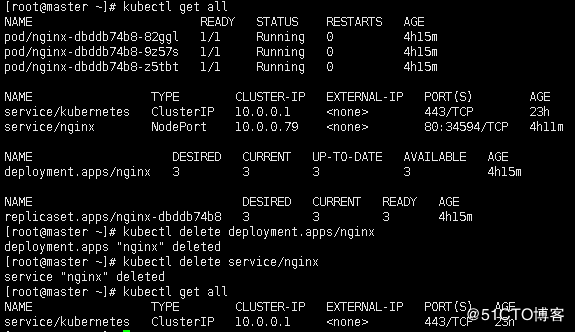
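The naming rule fits in a one-line shell helper. The default domain cluster.local is taken from the Corefile above; a cluster configured with another domain would pass it as the third argument. The name svc_fqdn is hypothetical.

```shell
# Build the in-cluster DNS name of a Service: SERVICE.NAMESPACE.svc.DOMAIN
# ASSUMPTION: cluster domain defaults to cluster.local, namespace to default.
svc_fqdn() {
  local service="$1" namespace="${2:-default}" domain="${3:-cluster.local}"
  printf '%s.%s.svc.%s\n' "$service" "$namespace" "$domain"
}
```

To try a name from inside the cluster, a throwaway pod works, for example (the pod name dns-test is arbitrary): kubectl run -it --rm dns-test --image=busybox:1.28 --restart=Never -- nslookup kube-dns.kube-system.svc.cluster.local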

[root@master ~]# kubectl delete deployment.apps/nginx

[root@master ~]# kubectl delete service/nginx

2. Create nginx containers in the default namespace

[root@master ~]# kubectl run nginx --image=nginx:1.10 --replicas=3 --labels="app=nginx-example" --port=80

Check the pods:

[root@master ~]# kubectl get all

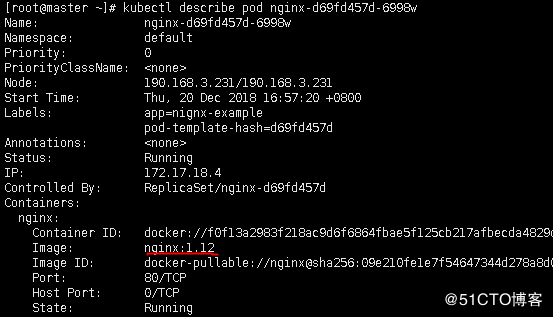

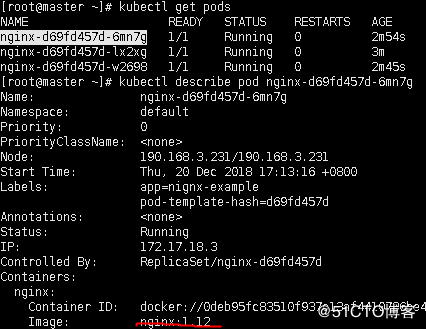

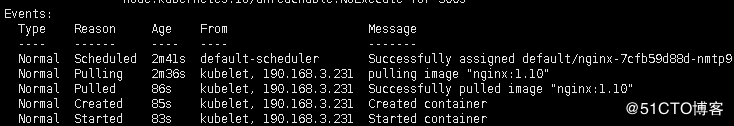

3. View pod details with describe

[root@master ~]# kubectl describe pod/nginx-7cfb59d88d-nmtp9

The Events section at the end of the output is useful for troubleshooting.

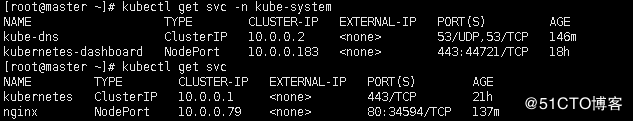

4. View pods and services

[root@master ~]# kubectl get pod

[root@master ~]# kubectl get svc

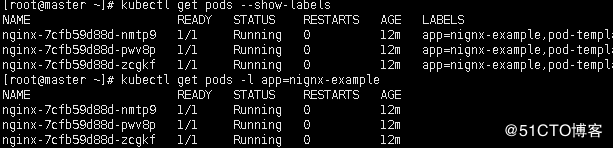

5. Show labels

[root@master ~]# kubectl get pods --show-labels

Note: the benefit of setting labels is that once there are many pods, you can find the ones you need by their labels.

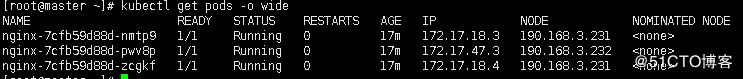
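On the server side, label lookups are done with a selector such as kubectl get pods -l key=value. The sketch below only illustrates how an equality-based selector matches the comma-separated LABELS column printed by --show-labels; has_label is a hypothetical helper and not a substitute for -l.

```shell
# Return success if the comma-separated label list in $1 (as printed in the
# LABELS column) contains the exact key=value pair in $2.
has_label() {
  local labels="$1" wanted="$2"
  # Wrap both sides in commas so "app=nginx" does not match "app=nginx-x".
  case ",${labels}," in
    *",${wanted},"*) return 0 ;;
    *) return 1 ;;
  esac
}
```

The comma wrapping is the design choice that matters here: it forces whole key=value matches instead of substring matches, which is how equality-based selectors behave.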

6. View pods with additional details (pod IP and node)

[root@master ~]# kubectl get pods -o wide

7. See which images the deployment (controller) uses

[root@master ~]# kubectl get deployment -o wide

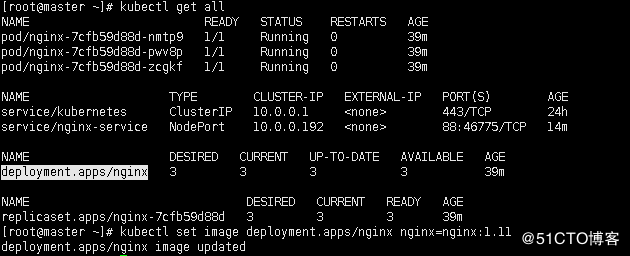

8. Publish the service

[root@master ~]# kubectl expose deployment nginx --port=88 --type=NodePort --target-port=80 --name=nginx-service

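expose: expose the deployment as a service

--port=88: the port the service listens on at its cluster IP

--type=NodePort: also expose the application on a port of every node

--target-port=80: the container port that traffic is forwarded to

--name=nginx-service: the service name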

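From any node in the cluster, the application is reachable at the service's cluster IP plus port 88.

Alternatively, it can be reached through any node's address plus the NodePort, which Kubernetes assigns from the 30000-32767 range by default and shows in the PORT(S) column of kubectl get svc (for example 88:3xxxx/TCP).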
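To find which NodePort was assigned, one option is to parse the PORT(S) value. A minimal sketch, assuming the usual kubectl get svc column layout where PORT(S) is the fifth field; node_port is a hypothetical helper name.

```shell
# Print the NodePort from a PORT(S) value such as "88:31234/TCP".
# Only the first entry of a comma-separated list is considered.
node_port() {
  local first="${1%%,*}"          # e.g. "88:31234/TCP"
  case "$first" in
    *:*) ;;                       # NodePort entries look like "port:nodePort/proto"
    *) return 1 ;;                # ClusterIP entries look like "53/UDP"
  esac
  local rest="${first#*:}"        # "31234/TCP"
  printf '%s\n' "${rest%%/*}"     # "31234"
}
```

Usage: node_port "$(kubectl get svc nginx-service --no-headers | awk '{print $5}')". Reading the field directly with kubectl get svc nginx-service -o jsonpath='{.spec.ports[0].nodePort}' avoids depending on the table layout and is the more robust choice.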

IV. Troubleshooting

1. View pod details

kubectl describe pod POD_NAME

2. View pod logs

kubectl logs POD_NAME

3. Open a shell inside the pod to inspect it

kubectl exec -it POD_NAME -- bash
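With many pods, it helps to first list the ones worth inspecting. A rough sketch, assuming the default kubectl get pods columns (STATUS is the third field); unhealthy_pods is a hypothetical helper, and a pod can be Running yet not ready, which this filter does not catch.

```shell
# Read `kubectl get pods` output on stdin and print the names of pods whose
# STATUS is neither Running nor Completed (e.g. CrashLoopBackOff, Pending).
unhealthy_pods() {
  awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1 }'
}
```

Usage: for p in $(kubectl get pods | unhealthy_pods); do kubectl describe pod "$p"; kubectl logs "$p"; done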

V. Updating the image

kubectl set --help lists the subcommands for changing settings of existing resources, such as a container's image.

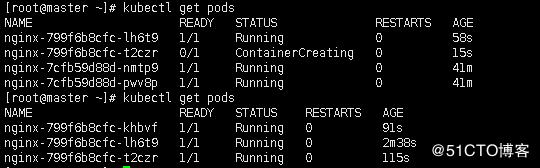

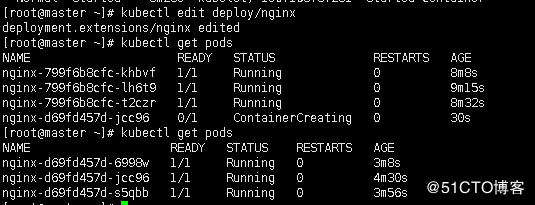

1. Update the image version

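This replaces the three nginx:1.10 pods with three nginx:1.11 pods. The deployment performs a rolling update: it creates new pods and terminates old ones gradually rather than deleting all three first.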

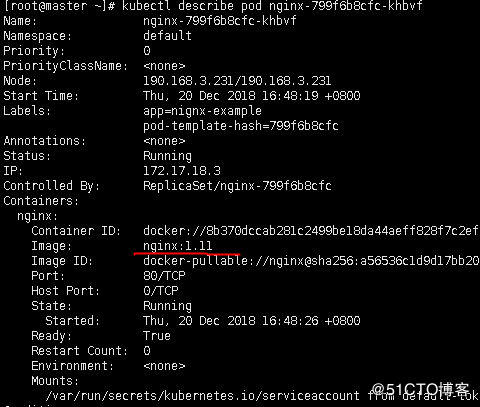
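How many pods are swapped at a time depends on the deployment's rolling-update settings. A sketch of the arithmetic, assuming the apps/v1 defaults of maxSurge=25% and maxUnavailable=25% (kubectl run does not override them): a percentage maxSurge is rounded up, a percentage maxUnavailable is rounded down, and if both come out as 0, maxUnavailable becomes 1. With 3 replicas this allows at most 4 pods and keeps at least 3 available, so the old pods are replaced one at a time. The image change itself is typically made with kubectl set image deployment/nginx nginx=nginx:1.11, assuming the container is named nginx (kubectl run names it after the deployment). The helper name rollout_bounds is hypothetical.

```shell
# Compute rolling-update bounds for a Deployment with percentage settings.
# Args: replicas [maxSurge %] [maxUnavailable %]  (defaults 25 and 25)
rollout_bounds() {
  local replicas="$1" surge_pct="${2:-25}" unavail_pct="${3:-25}"
  local surge=$(( (replicas * surge_pct + 99) / 100 ))   # round up
  local unavail=$(( replicas * unavail_pct / 100 ))      # round down
  # Both zero would block progress, so Kubernetes falls back to maxUnavailable=1.
  if [ "$surge" -eq 0 ] && [ "$unavail" -eq 0 ]; then unavail=1; fi
  echo "max_pods=$(( replicas + surge )) min_available=$(( replicas - unavail ))"
}
```

For example, rollout_bounds 3 prints max_pods=4 min_available=3, and rollout_bounds 5 prints max_pods=7 min_available=4.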

Describe one of the newly created pods: its image is now nginx:1.11.

[root@master ~]# kubectl describe pod nginx-799f6b8cfc-khbvf

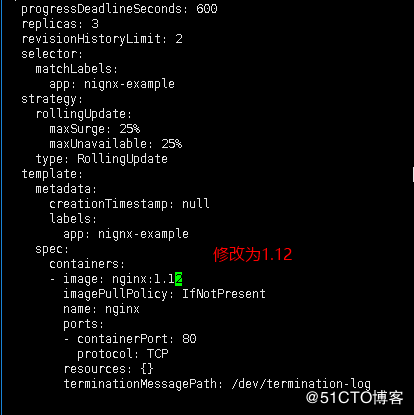

2. Another way to update the image is to edit the deployment's resource definition directly:

[root@master ~]# kubectl edit deploy/nginx

3. View the rollout status and revision history

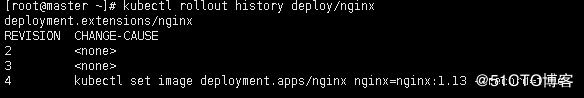

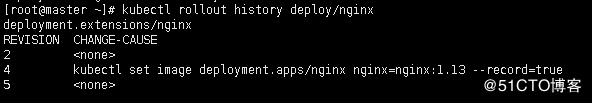

[root@master ~]# kubectl rollout status deploy/nginx

[root@master ~]# kubectl rollout history deploy/nginx

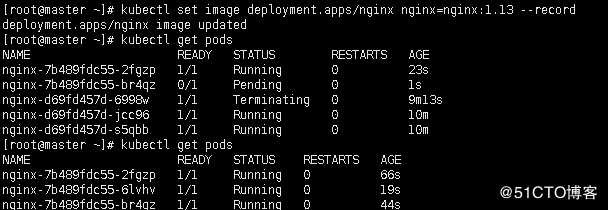

Upgrade again, this time to nginx:1.13.

4. Roll back

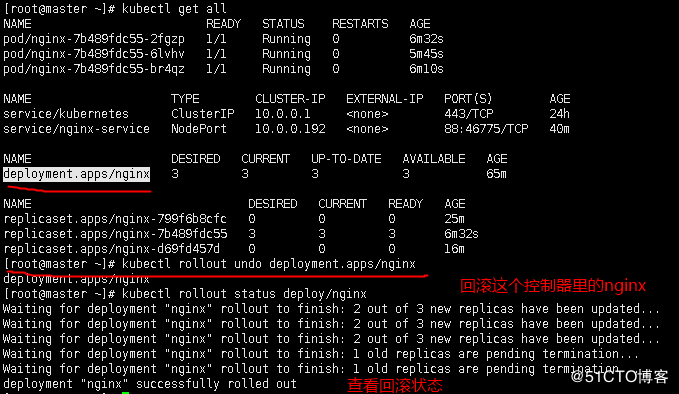

The current version is nginx:1.13. Suppose this version has a problem and we need to roll back to the previous version recorded in the history:

[root@master ~]# kubectl rollout undo deployment.apps/nginx

[root@master ~]# kubectl rollout status deploy/nginx

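Check the revision history again: the deployment is back on nginx:1.12, and revision 3 no longer appears in the list because the rollback re-records it as the newest revision.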

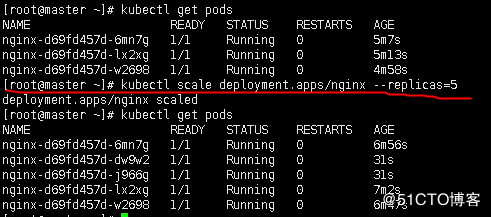

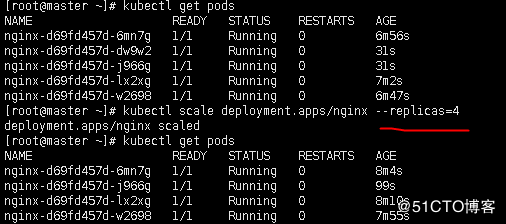
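The disappearing revision can be illustrated with a toy shell function. This is only a model of the bookkeeping visible in kubectl rollout history, not the controller's code: the revision being rolled back to is removed from its old position and recorded again under a new, highest number. A plain kubectl rollout undo targets the previous revision (3 here); --to-revision=N selects a different one. The name undo_to is hypothetical.

```shell
# Model: given a target revision and the current revision list, print the
# list as it would look after rolling back to the target.
undo_to() {
  local target="$1" max=0 out="" r
  shift
  for r in "$@"; do
    if [ "$r" -gt "$max" ]; then max=$r; fi
    if [ "$r" -ne "$target" ]; then out="$out$r "; fi   # drop the old entry
  done
  echo "$out$(( max + 1 ))"                             # re-add it as newest
}
```

For the history in this walkthrough, undo_to 3 1 2 3 4 prints 1 2 4 5, matching the observation that the third revision is gone.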

VI. Scaling replicas (scale)

To handle high-concurrency traffic, scale the backend pods out from the current 3 to 5:

[root@master ~]# kubectl scale deployment.apps/nginx --replicas=5