使用VGG19的参数测试图片

本文使用网上下载的VGG19卷积层参数测试一张图片,只使用了VGG19的Conv,Relu,Max-pooling层,没有用到最后三个FC层。参数文件是网上下载的imagenet-vgg-verydeep-19.mat。本文中经过的层包含如下:

'conv1_1', 'relu1_1', 'conv1_2', 'relu1_2', 'pool1',

'conv2_1', 'relu2_1', 'conv2_2', 'relu2_2', 'pool2',

'conv3_1', 'relu3_1', 'conv3_2', 'relu3_2', 'conv3_3',

'relu3_3', 'conv3_4', 'relu3_4', 'pool3',

'conv4_1', 'relu4_1', 'conv4_2', 'relu4_2', 'conv4_3',

'relu4_3', 'conv4_4', 'relu4_4', 'pool4',

'conv5_1', 'relu5_1', 'conv5_2', 'relu5_2', 'conv5_3',

'relu5_3', 'conv5_4', 'relu5_4'第一步是搞清楚参数在该文件中的位置,在我的另一篇博客中有讲,这里只是简单的贴代码,方法比较笨拙,嘿嘿。

# -*- coding:utf-8 -*

import scipy.io

import numpy as np

import os

import scipy.misc

cwd = os.getcwd()

VGG_PATH = cwd + "/data/imagenet-vgg-verydeep-19.mat"

vgg = scipy.io.loadmat(VGG_PATH)

#先显示一下数据类型,发现是dict

print(type(vgg))

#字典就可以打印出键值dict_keys(['__header__', '__version__', '__globals__', 'layers', 'classes', 'normalization'])

print(vgg.keys())

#进入layers字段,我们要的权重和偏置参数应该就在这个字段下

layers = vgg['layers']

#打印下layers发现输出一大堆括号,好复杂的样子:[[ array([[ (array([[ array([[[[ ,顶级array有两个[[

#所以顶层是两维,每一个维数的元素是array,array内部还有维数

#print(layers)

#输出一下大小,发现是(1, 43),说明虽然有两维,但是第一维是”虚的”,也就是只有一个元素

#根据模型可以知道,这43个元素其实就是对应模型的43层信息(conv1_1,relu,conv1_2…),Vgg-19没有包含Relu和Pool,那么看一层就足以,

#而且我们现在得到了一个有用的index,那就是layer,layers[layer]

print("layers.shape:",layers.shape)

layer = layers[0]

#输出的尾部有dtype=[('weights', 'O'), ('pad', 'O'), ('type', 'O'), ('name', 'O'), ('stride', 'O')])

#可以看出顶层的array有5个元素,分别是weight(含有bias), pad(填充元素,无用), type, name, stride信息,

#然后继续看一下shape信息,

print("layer.shape:",layer.shape)

#print(layer)输出是(1, 1),只有一个元素

print("layer[0].shape:",layer[0].shape)

#layer[0][0].shape: (1,),说明只有一个元素

print("layer[0][0].shape:",layer[0][0].shape)

#layer[0][0][0].shape: (1,),说明只有一个元素

print("layer[0][0][0].shape:",layer[0][0][0].shape)

#len(layer[0][0]):5,即weight(含有bias), pad(填充元素,无用), type, name, stride信息

print("len(layer[0][0][0]):",len(layer[0][0][0]))

#所以应该能按照如下方式拿到信息,比如说name,输出为['conv1_1']

print("name:",layer[0][0][0][3])

#查看一下weights的权重,输出(1,2),再次说明第一维是虚的,weights中包含了weight和bias

print("layer[0][0][0][0].shape",layer[0][0][0][0].shape)

print("layer[0][0][0][0].len",len(layer[0][0][0][0]))

#weights[0].shape: (2,),weights[0].len: 2说明两个元素就是weight和bias

print("layer[0][0][0][0][0].shape:",layer[0][0][0][0][0].shape)

print("layer[0][0][0][0].len:",len(layer[0][0][0][0][0]))

weights = layer[0][0][0][0][0]

#解析出weight和bias

weight,bias = weights

#weight.shape: (3, 3, 3, 64)

print("weight.shape:",weight.shape)

#bias.shape: (1, 64)

print("bias.shape:",bias.shape)

dict_keys(['__header__', '__version__', '__globals__', 'layers', 'classes', 'normalization'])

layers.shape: (1, 43)

layer.shape: (43,)

layer[0].shape: (1, 1)

layer[0][0].shape: (1,)

layer[0][0][0].shape: ()

len(layer[0][0][0]): 5

name: ['conv1_1']

layer[0][0][0][0].shape (1, 2)

layer[0][0][0][0].len 1

layer[0][0][0][0][0].shape: (2,)

layer[0][0][0][0].len: 2

weight.shape: (3, 3, 3, 64)

bias.shape: (1, 64) 第二步就是用代码加载网络中现成的权重和偏置参数用于测试我们的图片:

import scipy.io

import numpy as np

import os

import scipy.misc

import matplotlib.pyplot as plt

import tensorflow as tf

def _conv_layer(input, weights, bias):

#由于此处使用的是已经训练好的VGG-19参数,所有weights可以定义为常量

conv = tf.nn.conv2d(input, tf.constant(weights), strides=(1, 1, 1, 1),

padding='SAME')

return tf.nn.bias_add(conv, bias)

def _pool_layer(input):

return tf.nn.max_pool(input, ksize=(1, 2, 2, 1), strides=(1, 2, 2, 1),

padding='SAME')

def preprocess(image, mean_pixel):

return image - mean_pixel

def unprocess(image, mean_pixel):

return image + mean_pixel

def imread(path):

return scipy.misc.imread(path).astype(np.float)

def imsave(path, img):

img = np.clip(img, 0, 255).astype(np.uint8)

scipy.misc.imsave(path, img)

#加载现成的VGG参数,使每一层的名字和对应的参数对应起来

def net(data_path, input_image):

layers = (

'conv1_1', 'relu1_1', 'conv1_2', 'relu1_2', 'pool1',

'conv2_1', 'relu2_1', 'conv2_2', 'relu2_2', 'pool2',

'conv3_1', 'relu3_1', 'conv3_2', 'relu3_2', 'conv3_3',

'relu3_3', 'conv3_4', 'relu3_4', 'pool3',

'conv4_1', 'relu4_1', 'conv4_2', 'relu4_2', 'conv4_3',

'relu4_3', 'conv4_4', 'relu4_4', 'pool4',

'conv5_1', 'relu5_1', 'conv5_2', 'relu5_2', 'conv5_3',

'relu5_3', 'conv5_4', 'relu5_4'

)

data = scipy.io.loadmat(data_path)

#原始VGG中,对输入的数据进行了减均值的操作,借别人的参数使用时也需要进行此步骤

#获取每个通道的均值,打印输出每个通道的均值为[ 123.68 116.779 103.939]

mean = data['normalization'][0][0][0]

mean_pixel = np.mean(mean, axis=(0, 1))

weights = data['layers'][0]

#定义net字典结构,key为层的名字,value保存每一层使用VGG参数运算后的结果

net = {}

current = input_image

for i, name in enumerate(layers):

kind = name[:4]

if kind == 'conv':

kernels, bias = weights[i][0][0][0][0]

#注意:Mat中的weights参数和tensorflow中不同

# matconvnet: weights are [width, height, in_channels, out_channels]

# mat weight.shape: (3, 3, 3, 64)

# tensorflow: weights are [height, width, in_channels, out_channels]

kernels = np.transpose(kernels, (1, 0, 2, 3))

#扁平化

bias = bias.reshape(-1)

current = _conv_layer(current, kernels, bias)

elif kind == 'relu':

current = tf.nn.relu(current)

elif kind == 'pool':

current = _pool_layer(current)

net[name] = current

assert len(net) == len(layers)

return net, mean_pixel, layers

#致次完成一次前向传播

cwd = os.getcwd()

VGG_PATH = cwd + "/data/imagenet-vgg-verydeep-19.mat"

IMG_PATH = cwd + "/data/dog.png"

input_image = imread(IMG_PATH)

shape = (1,input_image.shape[0],input_image.shape[1],input_image.shape[2])

with tf.Session() as sess:

image = tf.placeholder('float', shape=shape)

nets, mean_pixel, all_layers = net(VGG_PATH, image)

input_image_pre = np.array([preprocess(input_image, mean_pixel)])

layers = all_layers # For all layers

# layers = ('relu2_1', 'relu3_1', 'relu4_1')

for i, layer in enumerate(layers):

print ("[%d/%d] %s" % (i+1, len(layers), layer))

#数据预处理

#feature:[batch数,H ,W ,深度]

features = nets[layer].eval(feed_dict={image: input_image_pre})

print (" Type of 'features' is ", type(features))

print (" Shape of 'features' is %s" % (features.shape,))

# Plot response

if 1:

plt.figure(i+1, figsize=(10, 5))

plt.matshow(features[0, :, :, 0], cmap=plt.cm.gray, fignum=i+1)

plt.title("" + layer)

plt.colorbar()

plt.show()

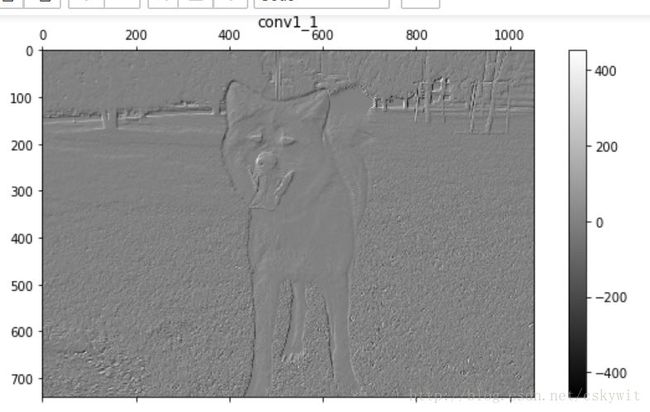

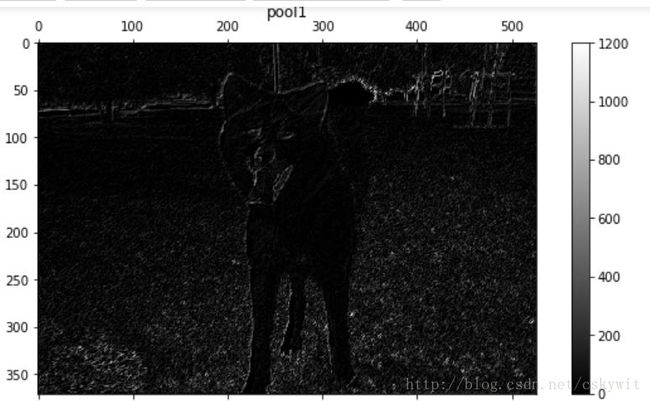

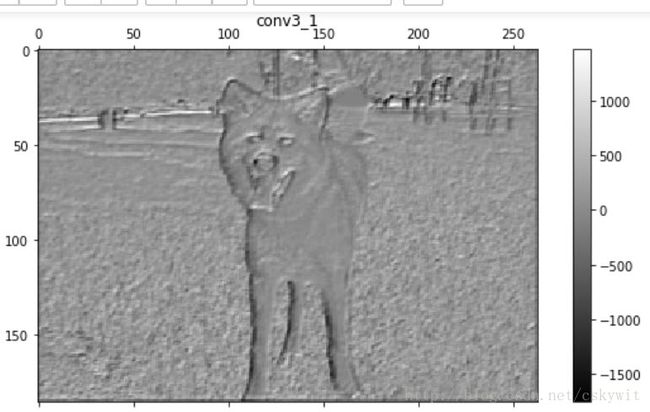

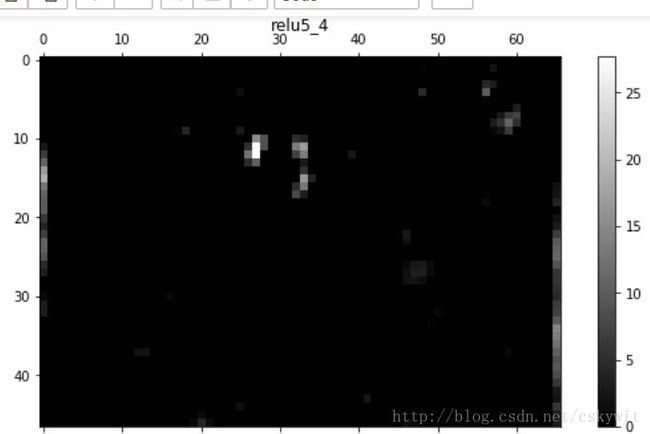

下面选几层看一下经过卷积和池化的效果: