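This post picks up where the previous one, "Exporting Large Data Reports with Hadoop HDFS and the Open-Source Excel Library POI (Part 1)", left off, and works through the optimizations it left open.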

Optimizing the export flow

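At first, once I had the orders, I looped over them and, for each order, looked up the user by user id and the address by address id, one query at a time. For 10,000 orders that is 10,000 × 2 database queries, which is far too expensive; most of the export time was spent on these queries.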

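Later I optimized this: the user ids and address ids are collected into separate lists and queried in batches of 500. With 10,000 orders that is 10000 / 500 = 20 queries per id list instead of 10,000. In practice the number is usually even smaller, because user ids repeat (one user places many orders), so a Set is a much better choice than a List and cuts the number of queries further.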
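A minimal sketch of the batching idea, assuming the ids are compared with equals() (true for Long). IdBatcher and partition are hypothetical names, not part of the project. The sketch deduplicates with a LinkedHashSet, which keeps the first-seen order, and then cuts the unique ids into consecutive batches, one per IN (...) query:

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.LinkedHashSet;
import java.util.List;

/** Sketch: deduplicate ids, then cut them into batches for one IN (...) query each. */
class IdBatcher {

    /**
     * Removes duplicate ids (keeping first-seen order) and splits the rest into
     * consecutive batches of at most batchSize ids.
     */
    static <T> List<List<T>> partition(Collection<T> ids, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        // LinkedHashSet drops repeated ids: a user with many orders is queried only once
        List<T> unique = new ArrayList<>(new LinkedHashSet<>(ids));
        List<List<T>> batches = new ArrayList<>();
        for (int from = 0; from < unique.size(); from += batchSize) {
            int to = Math.min(from + batchSize, unique.size());
            // copy so each batch is independent of the backing list
            batches.add(new ArrayList<>(unique.subList(from, to)));
        }
        return batches;
    }
}
```

For example, 10,000 orders placed by 3,000 distinct users produce 6 batches of 500 instead of 20 batches.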

@Component("userService")
@Path("user")
@Produces({ContentType.APPLICATION_JSON_UTF_8})
public class UserServiceImpl implements UserService {

    private static final int PER_TIMES = 500; // ids per query

    @Resource
    private UserRepo repo;

    @Override
    @GET
    @Path("/users")
    public Map getUsersByUids(List uids) {
        if (uids == null || uids.isEmpty()) {
            return null;
        }
        Map map = new HashMap<>();
        // number of batches, rounded up: 10,000 ids -> 20 queries
        int times = (uids.size() + PER_TIMES - 1) / PER_TIMES;
        for (int i = 0; i < times; i++) { // one query per batch
            StringBuilder strUids = new StringBuilder();
            // leading placeholder 0 so every real id can be appended as ",id" (assumes no user has id 0)
            strUids.append("(0");
            // batch i covers the ids at positions [i * PER_TIMES, (i + 1) * PER_TIMES)
            for (int j = i * PER_TIMES; j < (i + 1) * PER_TIMES && j < uids.size(); j++) {
                strUids.append(",").append(uids.get(j));
            }
            strUids.append(")");
            String uid = strUids.toString(); // e.g. "(0,101,102,...)" for an IN clause
            Map m = repo.getUserByUids(uid);
            if (m != null && !m.isEmpty()) {
                System.out.println("Batch " + i + " returned " + m.size() + " users");
                map.putAll(m);
            }
        }
        return map;
    }

    // ... other business logic
}

While writing the inner for loop I made a basic algorithmic mistake. The original code was:

// ...
// size is declared outside the outer for loop, initialized as size = uids.size();
StringBuffer strUids = new StringBuffer();
strUids.append("(0");
for (int j = i * PER_TIMES; j < PER_TIMES && j < size; j++) {
    strUids.append(",").append(uids.get(j));
}
size = size - (i + 1) * PER_TIMES;
strUids.append(")");
String uid = strUids.toString();
// ...

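Yes, you read that right: I really made this mistake. I'm recording it here as a reminder to avoid such basic errors in the future. Whatever the outer loop does, the inner loop's upper bound PER_TIMES never changes, while size keeps shrinking.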

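Suppose PER_TIMES = 500 and i = 2. The inner loop starts at j = 2 * 500 = 1000 and requires j < 500, which is false immediately, so its body never runs. The same holds for every i ≥ 1: only the first batch (i = 0) is ever built. On top of that, size is reduced by (i + 1) * PER_TIMES on every pass, a growing amount, so it shrinks much faster than the batches advance and j < size fails as well.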

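The direct consequence was missing data: only the first 500 ids were ever queried, because from the second batch on the inner loop's condition was already false before its first iteration.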
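To make the failure concrete, here is a small replay of both loop versions that only counts how many ids each one visits. LoopBoundsReplay is a hypothetical helper; no database is involved, and it assumes the same PER_TIMES = 500:

```java
/** Replays the inner-loop bounds of the buggy and the fixed batching code and counts visited ids. */
class LoopBoundsReplay {
    static final int PER_TIMES = 500;

    /** Buggy version: the upper bound PER_TIMES never moves, and size shrinks by a growing amount. */
    static int visitedBuggy(int total) {
        int times = (total + PER_TIMES - 1) / PER_TIMES;
        int size = total; // declared outside the outer loop, as in the original
        int visited = 0;
        for (int i = 0; i < times; i++) {
            for (int j = i * PER_TIMES; j < PER_TIMES && j < size; j++) {
                visited++;
            }
            size = size - (i + 1) * PER_TIMES;
        }
        return visited;
    }

    /** Fixed version: batch i covers [i * PER_TIMES, min((i + 1) * PER_TIMES, total)). */
    static int visitedFixed(int total) {
        int times = (total + PER_TIMES - 1) / PER_TIMES;
        int visited = 0;
        for (int i = 0; i < times; i++) {
            for (int j = i * PER_TIMES; j < (i + 1) * PER_TIMES && j < total; j++) {
                visited++;
            }
        }
        return visited;
    }
}
```

With 10,000 ids the buggy version visits only the 500 ids of the first batch, while the fixed version visits all 10,000. With 500 ids or fewer both behave the same, which is why the bug is easy to miss on small test data.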

Dropping reflection

The request arrives as JSON like this:

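{
    "params": {
        "starttm": 1469980800000
    },
    "filename": "2016-08-28-orders.xlsx",
    "header": {
        "crtm": "Order time",
        "paytm": "Payment time",
        "oid": "Order ID",
        "iid": "Item ID",
        "title": "Item title",
        "type": "Item type",
        "quantity": "Quantity",
        "user": "Buyer username",
        "uid": "Buyer ID",
        "pro": "Province/City",
        "city": "District/County",
        "addr": "Buyer address",
        "status": "Order status",
        "refund": "Refund status",
        "pri": "Unit price",
        "realpay": "Amount paid",
        "tel": "Phone",
        "rec": "Recipient name",
        "sex": "Gender",
        "comment": "Notes"
    }
}

The data columns are generated from the keys of header. At first I used reflection to fetch the value for each key from the result objects, but that turned out to be slow: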

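/**
 * Reads a field value directly, ignoring private/protected modifiers and bypassing the getter.
 *
 * @param obj       target object
 * @param fieldName field name
 * @return the field value
 */
public static Object getFieldValue(final Object obj, final String fieldName) {
    Field field = getAccessibleField(obj, fieldName);
    if (null == field) {
        throw new IllegalArgumentException("Could not find field [" + fieldName + "] on target [" + obj + "]");
    }
    Object result = null;
    try {
        result = field.get(obj);
    } catch (IllegalAccessException e) {
        // should not happen: the field was made accessible in getAccessibleField
        LOGGER.error("Unexpected exception", e);
    }
    return result;
}

/**
 * Walks up the class hierarchy to find the object's declared field and forces it accessible.
 * Returns null if the field is still not found once Object is reached.
 *
 * @param obj       target object
 * @param fieldName field name
 * @return the field, or null
 */
public static Field getAccessibleField(final Object obj, final String fieldName) {
    Assert.notNull(obj, "OBJECT must not be null");
    Assert.hasText(fieldName, "fieldName");
    for (Class<?> superClass = obj.getClass(); superClass != Object.class; superClass = superClass.getSuperclass()) {
        try {
            Field field = superClass.getDeclaredField(fieldName);
            field.setAccessible(true);
            return field;
        } catch (NoSuchFieldException e) { //NOSONAR
            // the field is not declared on this class; keep walking up
        }
    }
    return null;
}

Because these fields come from several different objects, the lookup fails for some of them, and when it does the code tries the next object. I think that is where most of the time went: every miss walks up the class hierarchy, calling getDeclaredField and throwing and catching a NoSuchFieldException at each level. After switching to calling the getters directly, the export got noticeably faster.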
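A minimal sketch of the getter-based approach: build a map from header key to getter once and reuse it for every row, so no reflective lookups or exceptions happen per cell. OrderRow and ColumnExtractor are hypothetical stand-ins (the post does not show the real entity classes), and only three of the header keys are wired up:

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical order entity with plain getters. */
class OrderRow {
    private final long oid;
    private final String title;
    private final int quantity;

    OrderRow(long oid, String title, int quantity) {
        this.oid = oid;
        this.title = title;
        this.quantity = quantity;
    }

    long getOid() { return oid; }
    String getTitle() { return title; }
    int getQuantity() { return quantity; }
}

/** Maps header keys to getters once, then builds Excel rows without reflection. */
class ColumnExtractor {
    // built once; primitive return values are boxed by the method references
    private static final Map<String, Function<OrderRow, Object>> GETTERS = new LinkedHashMap<>();

    static {
        GETTERS.put("oid", OrderRow::getOid);
        GETTERS.put("title", OrderRow::getTitle);
        GETTERS.put("quantity", OrderRow::getQuantity);
    }

    /** Returns the cell values in the order of headerKeys; keys without a getter become "". */
    static List<Object> toRow(OrderRow order, List<String> headerKeys) {
        List<Object> row = new ArrayList<>(headerKeys.size());
        for (String key : headerKeys) {
            Function<OrderRow, Object> getter = GETTERS.get(key);
            row.add(getter == null ? "" : getter.apply(order));
        }
        return row;
    }
}
```

Keys the map does not know produce an empty cell here; one such map per source object (order, user, address) would cover keys that live on other objects without any trial-and-error lookups.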

Resolving the jar conflict

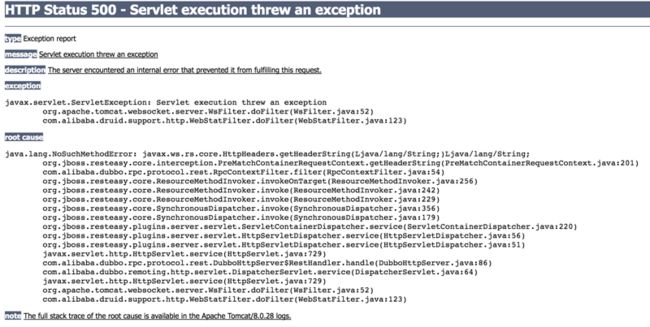

Error:

javax.servlet.ServletException: Servlet execution threw an exception
org.apache.tomcat.websocket.server.WsFilter.doFilter(WsFilter.java:52)
com.alibaba.druid.support.http.WebStatFilter.doFilter(WebStatFilter.java:123)

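The test and dev environments were fine, but production threw this error. After a lot of back and forth I finally found the cause: a jar conflict between RESTEasy and Jersey. Jersey had entered the project through Hadoop.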

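After digging into it, I found that Hadoop does not actually need Jersey for what this project uses it for (HDFS client access), so I excluded it: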

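// Note: module: 'jersey' only matches a module named exactly "jersey". Hadoop 2.7.x depends on
// Jersey 1.x as com.sun.jersey:jersey-core / jersey-server / jersey-json, so excluding by group
// (exclude(group: 'com.sun.jersey')) may be needed to remove all of them.
compile ('org.apache.hadoop:hadoop-common:2.7.2') {
    exclude(module: 'jersey')
    exclude(module: 'contribs')
}
compile ('org.apache.hadoop:hadoop-hdfs:2.7.2') {
    exclude(module: 'jersey')
    exclude(module: 'contribs')
}
compile ('org.apache.hadoop:hadoop-client:2.7.2') {
    exclude(module: 'jersey')
    exclude(module: 'contribs')
}

Even though the REST endpoints failed, the application itself started without errors and the MQ consumers kept working normally. The reason, as you can see, was the jar conflict: requests never reached the REST routing at all, because the web filter chain dropped them first.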
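When a conflict like this only shows up in one environment, it can help to check which jar a class was actually loaded from. A small JDK-only diagnostic sketch; ClassOrigin is a hypothetical helper, not something from the project:

```java
import java.net.URL;
import java.security.CodeSource;

/** Diagnostic sketch: reports which jar (or directory) a class is loaded from. */
class ClassOrigin {

    static String whereIs(String className) {
        try {
            // initialize = false: only locate the class, do not run its static initializers
            Class<?> cls = Class.forName(className, false, ClassOrigin.class.getClassLoader());
            CodeSource source = cls.getProtectionDomain().getCodeSource();
            if (source == null || source.getLocation() == null) {
                return "bootstrap or unknown"; // JDK classes have no code source
            }
            URL location = source.getLocation();
            return location.toString();
        } catch (ClassNotFoundException e) {
            return "not found";
        }
    }
}
```

For example, logging ClassOrigin.whereIs("javax.ws.rs.core.Application") in each environment shows whether the JAX-RS API classes come from the RESTEasy dependencies or from a Jersey jar. Jersey 1.x's jersey-core jar is commonly reported to bundle its own copy of the javax.ws.rs classes, which is one plausible source of this kind of clash.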

The optimized HDFS wrapper

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.fs.RemoteIterator;
import org.apache.hadoop.io.IOUtils;
import org.apache.poi.ss.usermodel.Workbook;

import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.URISyntaxException;
import java.nio.charset.StandardCharsets;

public class HDFSUtils {

    private static final int BUFFER_SIZE = 4096;

    /**
     * Returns the FileSystem for the given configuration.
     * FileSystem.get already caches one instance per scheme, authority and user and shares it
     * across the JVM, so callers must not close it; only the streams opened from it are closed.
     */
    public static FileSystem getFileSystem(Configuration conf)
            throws IOException, URISyntaxException {
        return FileSystem.get(conf);
    }

    /**
     * Checks whether a path exists.
     *
     * @param conf Hadoop configuration
     * @param path HDFS path
     * @return true if the path exists
     * @throws IOException
     */
    public static boolean exits(Configuration conf, String path) throws IOException,
            URISyntaxException {
        return getFileSystem(conf).exists(new Path(path));
    }

    /**
     * Creates a file (an existing file is overwritten) and writes the given bytes to it.
     *
     * @param conf     Hadoop configuration
     * @param filePath HDFS file path
     * @param contents file contents
     * @throws IOException
     */
    public static void createFile(Configuration conf, String filePath, byte[] contents)
            throws IOException, URISyntaxException {
        FileSystem fs = getFileSystem(conf); // shared instance: not closed here
        try (FSDataOutputStream outputStream = createFromFileSystem(fs, filePath)) {
            outputStream.write(contents, 0, contents.length);
            outputStream.hflush();
        }
    }

    private static FSDataOutputStream createFromFileSystem(FileSystem fs, String filePath)
            throws IOException {
        return fs.create(new Path(filePath));
    }

    private static FSDataInputStream openFromFileSystem(FileSystem fs, String filePath)
            throws IOException {
        return fs.open(new Path(filePath));
    }

    /**
     * Creates a file containing an Excel workbook.
     *
     * @param conf     Hadoop configuration
     * @param filePath HDFS file path
     * @param workbook Excel workbook to write
     * @throws IOException
     */
    public static void createFile(Configuration conf, String filePath, Workbook workbook)
            throws IOException, URISyntaxException {
        FileSystem fs = getFileSystem(conf);
        try (FSDataOutputStream outputStream = createFromFileSystem(fs, filePath)) {
            ByteArrayOutputStream os = new ByteArrayOutputStream();
            workbook.write(os); // serialize to the binary .xls/.xlsx format
            outputStream.write(os.toByteArray());
            outputStream.hflush();
        }
    }

    /**
     * Writes an already serialized workbook (for example the bytes produced by Workbook.write).
     * Behaves exactly like createFile(conf, filePath, contents).
     *
     * @param conf     Hadoop configuration
     * @param filePath HDFS file path
     * @param contents file contents
     * @throws IOException
     */
    public static void uploadWorkbook(Configuration conf, String filePath, byte[] contents)
            throws IOException, URISyntaxException {
        createFile(conf, filePath, contents);
    }

    /**
     * Creates a UTF-8 text file. Note the argument order: content first, then path.
     *
     * @param conf        Hadoop configuration
     * @param fileContent file contents
     * @param filePath    HDFS file path
     * @throws IOException
     */
    public static void createFile(Configuration conf, String fileContent, String filePath)
            throws IOException, URISyntaxException {
        // explicit UTF-8, matching readFile(conf, filePath) below
        createFile(conf, filePath, fileContent.getBytes(StandardCharsets.UTF_8));
    }

    /**
     * Uploads a local file.
     *
     * @param conf           Hadoop configuration
     * @param localFilePath  local file path
     * @param remoteFilePath HDFS file path
     * @throws IOException
     */
    public static void copyFromLocalFile(Configuration conf, String localFilePath, String remoteFilePath)
            throws IOException, URISyntaxException {
        FileSystem fs = getFileSystem(conf);
        // delSrc = true: the local file is deleted after the copy; overwrite = true
        fs.copyFromLocalFile(true, true, new Path(localFilePath), new Path(remoteFilePath));
    }

    /**
     * Deletes a file or directory.
     *
     * @param conf           Hadoop configuration
     * @param remoteFilePath HDFS path
     * @param recursive      whether subdirectories should be deleted recursively
     * @return true on success
     * @throws IOException
     */
    public static boolean deleteFile(Configuration conf, String remoteFilePath, boolean recursive)
            throws IOException, URISyntaxException {
        return getFileSystem(conf).delete(new Path(remoteFilePath), recursive);
    }

    /**
     * Deletes a file or directory, including all subdirectories.
     *
     * @param conf           Hadoop configuration
     * @param remoteFilePath HDFS path
     * @return true on success
     * @throws IOException
     */
    public static boolean deleteFile(Configuration conf, String remoteFilePath)
            throws IOException, URISyntaxException {
        return deleteFile(conf, remoteFilePath, true);
    }

    /**
     * Renames a file.
     *
     * @param conf        Hadoop configuration
     * @param oldFileName original file name
     * @param newFileName new file name
     * @return true on success
     * @throws IOException
     */
    public static boolean renameFile(Configuration conf, String oldFileName, String newFileName)
            throws IOException, URISyntaxException {
        return getFileSystem(conf).rename(new Path(oldFileName), new Path(newFileName));
    }

    /**
     * Creates a directory (and any missing parents).
     *
     * @param conf    Hadoop configuration
     * @param dirName HDFS directory name
     * @return true on success
     * @throws IOException
     */
    public static boolean createDirectory(Configuration conf, String dirName)
            throws IOException, URISyntaxException {
        return getFileSystem(conf).mkdirs(new Path(dirName));
    }

    /**
     * Lists all files under the given path (directories are not included).
     *
     * @param fs        Hadoop file system
     * @param basePath  base path
     * @param recursive whether subdirectories should be traversed recursively
     */
    public static RemoteIterator<LocatedFileStatus> listFiles(FileSystem fs, String basePath,
                                                              boolean recursive)
            throws IOException {
        return fs.listFiles(new Path(basePath), recursive);
    }

    /**
     * Lists the files under the given path (non-recursive).
     * The iterator reads lazily from the FileSystem, one more reason not to close it here.
     *
     * @param conf     Hadoop configuration
     * @param basePath base path
     * @return iterator over the file statuses
     * @throws IOException
     */
    public static RemoteIterator<LocatedFileStatus> listFiles(Configuration conf, String basePath)
            throws IOException, URISyntaxException {
        return getFileSystem(conf).listFiles(new Path(basePath), false);
    }

    /**
     * Lists the files and subdirectories directly under a directory (non-recursive).
     *
     * @param conf    Hadoop configuration
     * @param dirPath directory path
     * @return array of file statuses
     * @throws IOException
     */
    public static FileStatus[] listStatus(Configuration conf, String dirPath) throws IOException,
            URISyntaxException {
        return getFileSystem(conf).listStatus(new Path(dirPath));
    }

    /**
     * Reads a file and writes its contents to os (os is not closed).
     *
     * @param conf     Hadoop configuration
     * @param filePath HDFS file path
     * @param os       output stream
     * @throws IOException
     */
    public static void readFile(Configuration conf, String filePath, OutputStream os) throws IOException,
            URISyntaxException {
        FileSystem fs = getFileSystem(conf);
        try (FSDataInputStream inputStream = openFromFileSystem(fs, filePath)) {
            // buffered copy instead of one read() call per byte
            IOUtils.copyBytes(inputStream, os, BUFFER_SIZE, false);
        }
    }

    /**
     * Reads a text file and returns its contents decoded as UTF-8.
     * Not suitable for binary files such as .xls/.xlsx, which a text decode would corrupt.
     *
     * @param conf     Hadoop configuration
     * @param filePath HDFS file path
     * @return file contents
     * @throws IOException
     * @throws URISyntaxException
     */
    public static String readFile(Configuration conf, String filePath)
            throws IOException, URISyntaxException {
        FileSystem fs = getFileSystem(conf);
        try (InputStream inputStream = openFromFileSystem(fs, filePath);
             ByteArrayOutputStream outputStream = new ByteArrayOutputStream()) {
            IOUtils.copyBytes(inputStream, outputStream, BUFFER_SIZE, false);
            return new String(outputStream.toByteArray(), StandardCharsets.UTF_8);
        }
    }
}

Optimization 1: every try {} finally {} was replaced with try-with-resources, moving the resource declarations into try (...). try-with-resources, added in Java 7, closes the declared resources automatically, so streams and file handles no longer have to be closed by hand in finally blocks; the only requirement is that each resource implements java.lang.AutoCloseable. One caveat: FileSystem.get returns a cached instance that is shared across the JVM, so only the streams belong in try (...), not the FileSystem itself. Closing the FileSystem there would make every later call fail with "Filesystem closed".
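FileSystem.get(conf) hands out a cached instance shared across the JVM, so closing it in try (...) affects every later caller. To illustrate this without a Hadoop cluster, here is a plain-Java sketch in which SharedFs stands in for the cached FileSystem and FsCache for Hadoop's FileSystem cache; all three classes are hypothetical:

```java
import java.io.Closeable;

/** Stand-in for a cached, JVM-wide object such as the FileSystem returned by FileSystem.get(conf). */
class SharedFs implements Closeable {
    private boolean closed;

    String read(String path) {
        if (closed) {
            // mirrors the "Filesystem closed" error an HDFS client reports after close()
            throw new IllegalStateException("Filesystem closed");
        }
        return "contents of " + path;
    }

    boolean isClosed() {
        return closed;
    }

    @Override
    public void close() {
        closed = true;
    }
}

/** Hands every caller the same instance, the way Hadoop's FileSystem cache does. */
class FsCache {
    private final SharedFs instance = new SharedFs();

    SharedFs get() {
        return instance;
    }
}

class SharedCloseDemo {
    /** Anti-pattern: the shared instance is declared in try (...) and therefore closed for everyone. */
    static String readAndClose(FsCache cache, String path) {
        try (SharedFs fs = cache.get()) {
            return fs.read(path);
        }
    }

    /** Safer: look up the shared instance but never close it; in real code only streams go in try (...). */
    static String readKeepOpen(FsCache cache, String path) {
        SharedFs fs = cache.get();
        return fs.read(path);
    }
}
```

The second readAndClose call on the same cache fails because the first call closed the shared instance, while readKeepOpen can be called any number of times.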

I also added a new method:

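public static void createFile(Configuration conf, String filePath, Workbook workbook)
        throws IOException, URISyntaxException {
    FileSystem fs = getFileSystem(conf); // shared, cached instance: not closed here
    try (FSDataOutputStream outputStream = createFromFileSystem(fs, filePath)) {
        ByteArrayOutputStream os = new ByteArrayOutputStream();
        workbook.write(os);
        outputStream.write(os.toByteArray());
        outputStream.hflush();
    }
}

Parameters: the Hadoop configuration, the full file path, and the Workbook. workbook.write serializes the Workbook into a ByteArrayOutputStream, and those bytes are then written to the FSDataOutputStream. Note that hflush() makes the data visible to new readers on the DataNodes but does not guarantee that it has reached disk (that is what hsync() is for); closing the stream at the end of the try block completes the file.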

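The reason for this change: when the file is downloaded, the bytes must be exactly what POI's Workbook.write produced. Passing them through any other conversion, such as decoding them into a String, garbles the file, because .xls and .xlsx are binary formats.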
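A quick way to see why a text conversion garbles the download: .xlsx files are ZIP archives (they start with the bytes "PK", 0x50 0x4B) and contain arbitrary binary data, and bytes that are not valid UTF-8 do not survive a decode/encode round trip. A minimal JDK-only sketch; BinaryRoundTrip is a hypothetical helper:

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

/** Shows what happens to binary data that is passed through a String. */
class BinaryRoundTrip {

    /** Decodes bytes as UTF-8 text and encodes them back, as a String-based download would. */
    static byte[] viaUtf8String(byte[] data) {
        String text = new String(data, StandardCharsets.UTF_8); // invalid sequences become U+FFFD
        return text.getBytes(StandardCharsets.UTF_8);
    }

    /** True only if the round trip returns exactly the original bytes. */
    static boolean survivesTextRoundTrip(byte[] data) {
        return Arrays.equals(data, viaUtf8String(data));
    }
}
```

Plain ASCII such as the ZIP signature survives, but a single 0xFF byte comes back as the three bytes EF BF BD. This is presumably also why HDFSUtils.readFile(conf, filePath), which returns a UTF-8 String, is only safe for text files.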

The optimized POI wrapper

package cn.test.web.utils;

import cn.common.util.Utils;
import org.apache.commons.io.FilenameUtils;
import org.apache.poi.hssf.record.crypto.Biff8EncryptionKey;
import org.apache.poi.hssf.usermodel.HSSFFont;
import org.apache.poi.hssf.usermodel.HSSFFooter;
import org.apache.poi.hssf.usermodel.HSSFHeader;
import org.apache.poi.hssf.usermodel.HSSFWorkbook;
import org.apache.poi.openxml4j.exceptions.InvalidFormatException;
import org.apache.poi.poifs.crypt.Decryptor;
import org.apache.poi.poifs.crypt.EncryptionInfo;
import org.apache.poi.poifs.filesystem.POIFSFileSystem;
import org.apache.poi.ss.usermodel.Cell;
import org.apache.poi.ss.usermodel.CellStyle;
import org.apache.poi.ss.usermodel.Font;
import org.apache.poi.ss.usermodel.Footer;
import org.apache.poi.ss.usermodel.Header;
import org.apache.poi.ss.usermodel.Row;
import org.apache.poi.ss.usermodel.Sheet;
import org.apache.poi.ss.usermodel.Workbook;
import org.apache.poi.ss.usermodel.WorkbookFactory;
import org.apache.poi.xssf.streaming.SXSSFWorkbook;
import org.apache.poi.xssf.usermodel.XSSFWorkbook;

import java.io.BufferedInputStream;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.security.GeneralSecurityException;
import java.util.List;
import java.util.Properties;

/**
 * Created with presentation
 * User zhoujunwen
 * Date 16/8/11
 * Time 5:02 PM
 */
public class POIUtils {

    private static final short HEADER_FONT_SIZE = 16;      // font size of the page-header title
    private static final short FONT_HEIGHT_IN_POINTS = 14; // font size of the column-header row
    private static final int MEM_ROW = 100;                // rows SXSSF keeps in memory before flushing to disk

    public static Workbook createWorkbook(String file) {
        // always use the streaming SXSSF (.xlsx) workbook; the extension-based choice is kept for reference
        Workbook wb = createSXSSFWorkbook(MEM_ROW);
        /*String ext = FilenameUtils.getExtension(CommonUtils.getFileName(file));
        switch (ext) {
            case "xls":
                wb = createHSSFWorkbook();
                break;
            case "xlsx":
                wb = createXSSFWorkbook();
                break;
            default:
                wb = createHSSFWorkbook();
        }*/
        return wb;
    }

    public static Workbook createWorkbookByIS(String file, InputStream inputStream) {
        Workbook wb;
        try {
            // WorkbookFactory detects .xls vs .xlsx from the content, so the stream is read only once
            // (retrying HSSF after a failed OPCPackage.open would read an already consumed stream)
            Workbook base = WorkbookFactory.create(inputStream);
            wb = base instanceof XSSFWorkbook ? new SXSSFWorkbook((XSSFWorkbook) base, MEM_ROW) : base;
        } catch (Exception ex) {
            wb = new XSSFWorkbook(); // unreadable input: fall back to an empty workbook
        }
        return wb;
    }

    /**
     * Writes a workbook to a local file.
     *
     * @param wb   workbook
     * @param file local file path
     * @return the workbook, or null if an argument is missing
     */
    public static Workbook writeFile(Workbook wb, String file) {
        if (wb == null || Utils.isEmpty(file)) {
            return null;
        }
        try (FileOutputStream out = new FileOutputStream(file)) {
            wb.write(out);
        } catch (IOException e) {
            e.printStackTrace();
        }
        return wb;
    }

    public static Workbook createHSSFWorkbook() {
        HSSFWorkbook wb = new HSSFWorkbook();
        // add a worksheet: an .xls file without any sheet reports an error when opened
        wb.createSheet();
        return wb;
    }

    public static Workbook createSXSSFWorkbook(int memRow) {
        Workbook wb = new SXSSFWorkbook(memRow); // keeps at most memRow rows in memory
        wb.createSheet();
        return wb;
    }

    public static Workbook createXSSFWorkbook() {
        XSSFWorkbook wb = new XSSFWorkbook();
        wb.createSheet();
        return wb;
    }

    public static Workbook openWorkbook(String file) {
        Workbook wb = null;
        try (FileInputStream in = new FileInputStream(file)) {
            wb = WorkbookFactory.create(in);
        } catch (InvalidFormatException | IOException e) {
            e.printStackTrace();
        }
        return wb;
    }

    /**
     * Opens a password-protected workbook. Encrypted .xlsx files are stored inside an OLE2
     * container and must be decrypted first; .xls files use Biff8EncryptionKey.
     */
    public static Workbook openEncryptedWorkbook(String file, String password) {
        Workbook wb = null;
        String ext = FilenameUtils.getExtension(CommonUtils.getFileName(file));
        try (FileInputStream input = new FileInputStream(file);
             BufferedInputStream binput = new BufferedInputStream(input)) {
            POIFSFileSystem poifs = new POIFSFileSystem(binput);
            if ("xlsx".equals(ext)) {
                Decryptor decryptor = Decryptor.getInstance(new EncryptionInfo(poifs));
                if (!decryptor.verifyPassword(password)) {
                    throw new IOException("Wrong password for " + file);
                }
                wb = new XSSFWorkbook(decryptor.getDataStream(poifs));
            } else { // "xls" and anything else
                Biff8EncryptionKey.setCurrentUserPassword(password);
                try {
                    wb = new HSSFWorkbook(poifs);
                } finally {
                    Biff8EncryptionKey.setCurrentUserPassword(null); // the password is stored per thread
                }
            }
        } catch (IOException | GeneralSecurityException e) {
            e.printStackTrace();
        }
        return wb;
    }

    /**
     * Appends a sheet. If wb is null and isNew is true, a new workbook is created first.
     *
     * @param wb
     * @param isNew
     * @param type  workbook type to create, only used when isNew is true: 1 = xls, 2 = xlsx
     * @return
     */
    public static Workbook appendSheet(Workbook wb, boolean isNew, int type) {
        if (wb == null && isNew) {
            wb = type == 1 ? new HSSFWorkbook() : new XSSFWorkbook();
        }
        if (wb != null) {
            wb.createSheet();
        }
        return wb;
    }

    public static Workbook setSheetName(Workbook wb, int index, String sheetName) {
        // getSheetAt throws for an invalid index instead of returning null, so check the range
        if (wb != null && index >= 0 && index < wb.getNumberOfSheets()) {
            wb.setSheetName(index, sheetName);
        }
        return wb;
    }

    public static Workbook removeSheet(Workbook wb, int index) {
        if (wb != null && index >= 0 && index < wb.getNumberOfSheets()) {
            wb.removeSheetAt(index);
        }
        return wb;
    }

    public static void insert(Sheet sheet, int row, int start, List columns) {
        for (int i = start; i < (row + start); i++) {
            Row rows = sheet.createRow(i);
            if (columns != null && columns.size() > 0) {
                for (int j = 0; j < columns.size(); j++) {
                    Cell cell = rows.createCell(j);
                    cell.setCellValue(String.valueOf(columns.get(j)));
                }
            }
        }
    }

    public static void insertRow(Row row, List columns) {
        if (columns != null && columns.size() > 0) {
            for (int j = 0; j < columns.size(); j++) {
                Cell cell = row.createCell(j);
                cell.setCellValue(String.valueOf(columns.get(j)));
            }
        }
    }

    /**
     * Writes the column-header row (row 0).
     *
     * @param wb
     * @param sheetName sheet name; defaults to the first sheet
     * @param columns   for example: ["Country", "Event type", "Year"]
     * @return
     */
    public static Workbook setHeader(Workbook wb, String sheetName, List columns) {
        if (wb == null) return null;
        Sheet sheet = sheetName == null ? wb.getSheetAt(0) : wb.getSheet(sheetName);
        insert(sheet, 1, 0, columns);
        return setHeaderStyle(wb, sheet.getSheetName());
    }

    /**
     * Writes the data rows, one sheet row per element of data.
     *
     * @param wb        Workbook
     * @param sheetName sheet name; defaults to the first sheet
     * @param start     index of the first row to write
     * @param data      a List of Lists, for example: [["China","Olympics",2008],["London","Olympics",2012]]
     * @return
     */
    public static Workbook setData(Workbook wb, String sheetName, int start, List data) {
        if (wb == null) return null;
        if (sheetName == null) {
            sheetName = wb.getSheetAt(0).getSheetName();
        }
        if (!Utils.isEmpty(data)) {
            Sheet sheet = wb.getSheet(sheetName);
            int s = start;
            for (Object rowData : data) {
                Row row = sheet.createRow(s); // exactly one createRow per data row
                insertRow(row, (List) rowData);
                s++;
            }
        }
        return wb;
    }

    /**
     * Removes a row.
     *
     * @param wb
     * @param sheetName sheet name
     * @param row       row index
     * @return
     */
    public static Workbook delRow(Workbook wb, String sheetName, int row) {
        if (wb == null) return null;
        if (sheetName == null) {
            sheetName = wb.getSheetAt(0).getSheetName();
        }
        Sheet sheet = wb.getSheet(sheetName);
        Row r = sheet.getRow(row);
        if (r != null) { // getRow returns null for a row that was never created
            sheet.removeRow(r);
        }
        return wb;
    }

    /**
     * Shifts a block of rows.
     *
     * @param wb
     * @param sheetName
     * @param start first row to move (0-based)
     * @param end   last row to move (inclusive)
     * @param step  number of rows to shift by; a negative value shifts upward.
     *              moveRow(wb, null, 2, 3, 5): rows 2-3 move down by 5, to rows 7-8
     *              moveRow(wb, null, 2, 3, -1): rows 2-3 move up by 1, to rows 1-2
     * @return
     */
    public static Workbook moveRow(Workbook wb, String sheetName, int start, int end, int step) {
        if (wb == null) return null;
        if (sheetName == null) {
            sheetName = wb.getSheetAt(0).getSheetName();
        }
        // note: streaming (SXSSF) sheets do not support shiftRows
        wb.getSheet(sheetName).shiftRows(start, end, step);
        return wb;
    }

    public static Workbook setHeaderStyle(Workbook wb, String sheetName) {
        Font font = wb.createFont();
        CellStyle style = wb.createCellStyle();
        font.setBoldweight(HSSFFont.BOLDWEIGHT_BOLD);
        font.setFontHeightInPoints(FONT_HEIGHT_IN_POINTS);
        font.setFontName("黑体"); // SimHei
        style.setFont(font);
        if (Utils.isEmpty(sheetName)) {
            sheetName = wb.getSheetAt(0).getSheetName();
        }
        Sheet sheet = wb.getSheet(sheetName);
        Row headerRow = sheet.getRow(sheet.getFirstRowNum());
        for (int i = 0; i < headerRow.getLastCellNum(); i++) {
            headerRow.getCell(i).setCellStyle(style);
        }
        return wb;
    }

    public static Workbook setHeaderOutline(Workbook wb, String sheetName, String title) {
        if (wb == null) return null;
        if (Utils.isEmpty(sheetName)) {
            sheetName = wb.getSheetAt(0).getSheetName();
        }
        Header header = wb.getSheet(sheetName).getHeader();
        header.setLeft(HSSFHeader.startUnderline() +
                HSSFHeader.font("宋体", "Italic") + // SimSun, italic
                "Welcome G20!" +
                HSSFHeader.endUnderline());
        header.setCenter(HSSFHeader.fontSize(HEADER_FONT_SIZE) +
                HSSFHeader.startDoubleUnderline() +
                HSSFHeader.startBold() +
                title +
                HSSFHeader.endBold() +
                HSSFHeader.endDoubleUnderline());
        header.setRight("Time: " + HSSFHeader.date() + " " + HSSFHeader.time());
        return wb;
    }

    public static Workbook setFooter(Workbook wb, String sheetName, String copyright) {
        if (wb == null) return null;
        if (Utils.isEmpty(sheetName)) {
            sheetName = wb.getSheetAt(0).getSheetName();
        }
        Footer footer = wb.getSheet(sheetName).getFooter();
        if (Utils.isEmpty(copyright)) {
            copyright = "joyven";
        }
        footer.setLeft("Copyright @ " + copyright);
        footer.setCenter("Page:" + HSSFFooter.page() + " / " + HSSFFooter.numPages());
        footer.setRight("File:" + HSSFFooter.file());
        return wb;
    }

    public static Workbook create(String sheetNm, String file, List header, List data, String title,
                                  String copyright) {
        Workbook wb = createWorkbook(file);
        if (Utils.isEmpty(sheetNm)) {
            sheetNm = wb.getSheetAt(0).getSheetName();
        } else {
            // the sheet was created with a default name; rename it so the lookups by name below find it
            wb.setSheetName(0, sheetNm);
        }
        setHeaderOutline(wb, sheetNm, title);
        setHeader(wb, sheetNm, header);
        setData(wb, sheetNm, 1, data); // row 0 holds the column headers
        setFooter(wb, sheetNm, copyright);
        return wb;
    }

    public static String getSystemFileCharset() {
        Properties pro = System.getProperties();
        return pro.getProperty("file.encoding");
    }

    // TODO: add more settings later
}

This version fixes a bug that made writing the data far too expensive in memory and CPU. Here is the fixed method:

public static Workbook setData(Workbook wb, String sheetName, int start, List data) {
    if (wb == null) return null;
    if (sheetName == null) {
        sheetName = wb.getSheetAt(0).getSheetName();
    }
    if (!Utils.isEmpty(data)) {
        Sheet sheet = wb.getSheet(sheetName);
        int s = start;
        for (Object rowData : data) {
            Row row = sheet.createRow(s); // exactly one createRow per data row
            insertRow(row, (List) rowData);
            s++;
        }
    }
    return wb;
}

public static void insertRow(Row row, List columns) {
    if (columns != null && columns.size() > 0) {
        for (int j = 0; j < columns.size(); j++) {
            Cell cell = row.createCell(j);
            cell.setCellValue(String.valueOf(columns.get(j)));
        }
    }
}

And this was the original version:

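public static Workbook setData(Workbook wb, String sheetName, int start, List data) {
    if (wb == null) return null;
    if (sheetName == null) {
        sheetName = wb.getSheetAt(0).getSheetName();
    }
    if (data != null || data.size() > 0) { // also wrong: should be &&, this throws an NPE when data is null
        if (data instanceof List) {
            int s = start;
            for (Object columns : data) {
                // BUG: for every single data row, insert() is asked to write data.size() - (s - 1) rows
                insert(wb, sheetName, data.size() - (s - 1), s, (List) columns);
                s++;
            }
        }
    }
    return wb;
}

public static void insert(Sheet sheet, int row, int start, List columns) {
    for (int i = start; i < (row + start); i++) { // a second loop over rows
        Row rows = sheet.createRow(i);
        if (columns != null && columns.size() > 0) {
            for (int j = 0; j < columns.size(); j++) {
                Cell ceil = rows.createCell(j);
                ceil.setCellValue(String.valueOf(columns.get(j)));
            }
        }
    }
}

The bug: for (Object columns : data) already iterates over the data, yet insert iterates again with for (int i = start; i < (row + start); i++), and that second loop makes no sense. For the data row at position s, insert is asked for data.size() - (s - 1) rows, so it creates every sheet row from s through data.size(). With n data rows that adds up to n(n + 1) / 2 createRow calls instead of n, which is why memory and CPU usage grew so sharply. The data still ended up in the sheet, and in the right places: every later pass overwrote the rows below it, so each row was last written by its own data row.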
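The cost of the old version can be checked without POI by replaying the row indexes it passed to createRow. OldSetDataReplay is a hypothetical helper; it assumes, as in the old code, that data starts at row 1, and that a later createRow on the same index replaces the earlier row, as the XSSFSheet.createRow Javadoc describes:

```java
/** Replays the row indexes the old setData/insert pair passed to createRow, without POI. */
class OldSetDataReplay {

    /** Number of createRow calls the old code made for n data rows starting at row 1. */
    static long createRowCalls(int n) {
        long calls = 0;
        int s = 1;                              // old setData was called with start = 1
        for (int k = 0; k < n; k++) {           // for (Object columns : data)
            int row = n - (s - 1);              // the "row" argument passed to insert()
            for (int i = s; i < row + s; i++) { // insert()'s loop: sheet rows s .. n
                calls++;
            }
            s++;
        }
        return calls;
    }

    /** For each sheet row 1..n, the index of the data row that was written to it last. */
    static int[] lastWriterPerRow(int n) {
        int[] lastWriter = new int[n + 1];      // index 0 unused (row 0 holds the header)
        int s = 1;
        for (int k = 0; k < n; k++) {
            int row = n - (s - 1);
            for (int i = s; i < row + s; i++) {
                lastWriter[i] = k;              // a later createRow(i) replaces the earlier row i
            }
            s++;
        }
        return lastWriter;
    }
}
```

For 10,000 data rows the replay counts 50,005,000 createRow calls instead of 10,000, while lastWriterPerRow confirms that row r is last written by data row r - 1, which is why the output still looked correct.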