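Starting with version 0.14, Hive uses ZooKeeper to provide high availability for HiveServer2 (ZooKeeper Service Discovery). A client can connect to HiveServer2 by specifying a namespace instead of a particular host and port. This article describes the high-availability configuration of the Hive metastore and HiveServer2. The Hive version used is 2.3.2.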

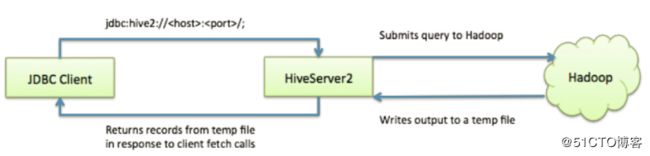

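The single-instance architecture is shown in the figure:

If only a few clients connect to HiveServer2 concurrently, a single HiveServer2 instance is sufficient.

The multi-instance architecture is shown in the figure:

As shown, this article runs one HiveServer2 instance on each of hdp01 and hdp04 (two instances in total) and uses ZooKeeper to provide HA. ZooKeeper is already installed and configured.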

I. Hive Metastore HA Configuration

1. Edit hive-site.xml

The configuration is simple: just set the value of hive.metastore.uris. Separate multiple servers with commas.
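As a minimal sketch of what this setting might look like for the two nodes in this article, the snippet below builds the comma-separated value and prints the matching hive-site.xml property. The helper names `metastore_uris` and `hive_property` are hypothetical, and port 9083 is an assumption (it is Hive's default metastore Thrift port; the article does not state which port is used).

```shell
#!/bin/sh
# Build the comma-separated value for hive.metastore.uris from a list of hosts.
# Assumption: every metastore listens on port 9083 (Hive's default Thrift port).
metastore_uris() {
    port=9083
    uris=""
    for host in "$@"; do
        # Prepend a comma only when the list is already non-empty.
        uris="${uris:+$uris,}thrift://${host}:${port}"
    done
    printf '%s\n' "$uris"
}

# Print a <property> element in the layout used by hive-site.xml.
hive_property() {
    printf '<property>\n  <name>%s</name>\n  <value>%s</value>\n</property>\n' "$1" "$2"
}

# Example for the two metastore nodes used in this article.
hive_property hive.metastore.uris "$(metastore_uris hdp01 hdp04)"
```

The printed element would go inside the `<configuration>` section of hive-site.xml on every node.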

When that is done, copy hive-site.xml to the hdp04 node:

[hadoop@hdp01 ~]$ scp /u01/hive/conf/hive-site.xml hdp04:/u01/hive/conf/

2. Restart the Hive services on each node

[hadoop@hdp01 ~]$ hive --service metastore >/dev/null &

[hadoop@hdp01 ~]$ hive --service hiveserver2 >/dev/null &

[hadoop@hdp04 ~]$ hive --service metastore >/dev/null &

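[hadoop@hdp04 ~]$ hive --service hiveserver2 >/dev/null &

3. Update the FS Roots

Hive stores its metadata in a relational database, so one option is to find the tables that hold this data and modify them with SQL. That approach is cumbersome because you have to log in to the database, and more importantly it is error-prone, so it is not recommended. The metatool service is used here instead.

Check the current FS Root information: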

[hadoop@hdp01 ~]$ hive --service metatool -listFSRoot

Listing FS Roots..

hdfs://hdp01:9000/user/hive/warehouse/hivedb.db

hdfs://hdp01:9000/user/hive/warehouse

[hadoop@hdp04 ~]$ hive --service metatool -listFSRoot

Listing FS Roots..

hdfs://hdp01:9000/user/hive/warehouse/hivedb.db

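hdfs://hdp01:9000/user/hive/warehouse

Use the following command to simulate the update (a dry run). Note that -updateLocation takes the new location first and the old location second: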

[hadoop@hdp01 ~]$ hive --service metatool -updateLocation hdfs://hdp04:9000 hdfs://hdp01:9000 -dryRun

Initializing HiveMetaTool..

Looking for LOCATION_URI field in DBS table to update..

Dry Run of updateLocation on table DBS..

old location: hdfs://hdp01:9000/user/hive/warehouse/hivedb.db new location: hdfs://hdp04:9000/user/hive/warehouse/hivedb.db

old location: hdfs://hdp01:9000/user/hive/warehouse new location: hdfs://hdp04:9000/user/hive/warehouse

Found 2 records in DBS table to update

Looking for LOCATION field in SDS table to update..

Dry Run of updateLocation on table SDS..

old location: hdfs://hdp01:9000/user/hive/warehouse/hivedb.db/exam_score new location: hdfs://hdp04:9000/user/hive/warehouse/hivedb.db/exam_score

old location: hdfs://hdp01:9000/user/hive/warehouse/hivedb.db/tbdelivermsg new location: hdfs://hdp04:9000/user/hive/warehouse/hivedb.db/tbdelivermsg

old location: hdfs://hdp01:9000/user/hive/warehouse/hivedb.db/hivedb__exam_score_exam_idx__ new location: hdfs://hdp04:9000/user/hive/warehouse/hivedb.db/hivedb__exam_score_exam_idx__

old location: hdfs://hdp01:9000/user/hive/warehouse/hivedb.db/hivedb__xj_student_xj_student_idx__ new location: hdfs://hdp04:9000/user/hive/warehouse/hivedb.db/hivedb__xj_student_xj_student_idx__

old location: hdfs://hdp01:9000/user/hive/warehouse/hivedb.db/xj_student new location: hdfs://hdp04:9000/user/hive/warehouse/hivedb.db/xj_student

Found 5 records in SDS table to update

If the dry run succeeds, run the same command without the -dryRun option to perform the actual update:

[hadoop@hdp01 ~]$ hive --service metatool -updateLocation hdfs://hdp04:9000 hdfs://hdp01:9000

Initializing HiveMetaTool..

Looking for LOCATION_URI field in DBS table to update..

Successfully updated the following locations..

old location: hdfs://hdp01:9000/user/hive/warehouse/hivedb.db new location: hdfs://hdp04:9000/user/hive/warehouse/hivedb.db

old location: hdfs://hdp01:9000/user/hive/warehouse new location: hdfs://hdp04:9000/user/hive/warehouse

Updated 2 records in DBS table

Looking for LOCATION field in SDS table to update..

Successfully updated the following locations..

old location: hdfs://hdp01:9000/user/hive/warehouse/hivedb.db/exam_score new location: hdfs://hdp04:9000/user/hive/warehouse/hivedb.db/exam_score

old location: hdfs://hdp01:9000/user/hive/warehouse/hivedb.db/tbdelivermsg new location: hdfs://hdp04:9000/user/hive/warehouse/hivedb.db/tbdelivermsg

old location: hdfs://hdp01:9000/user/hive/warehouse/hivedb.db/hivedb__exam_score_exam_idx__ new location: hdfs://hdp04:9000/user/hive/warehouse/hivedb.db/hivedb__exam_score_exam_idx__

old location: hdfs://hdp01:9000/user/hive/warehouse/hivedb.db/hivedb__xj_student_xj_student_idx__ new location: hdfs://hdp04:9000/user/hive/warehouse/hivedb.db/hivedb__xj_student_xj_student_idx__

old location: hdfs://hdp01:9000/user/hive/warehouse/hivedb.db/xj_student new location: hdfs://hdp04:9000/user/hive/warehouse/hivedb.db/xj_student

Updated 5 records in SDS table
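The dry-run-then-apply sequence above can be wrapped in a small helper so that the real update only runs after the dry-run output has been reviewed. This is a sketch, not part of Hive: the function name `update_fs_root` and the confirmation prompt are hypothetical, and it only calls the metatool options already used in this article.

```shell
#!/bin/sh
# Run metatool in dry-run mode first; apply the change only after confirmation.
# Argument order follows the commands above: new location first, then old.
update_fs_root() {
    new_root=$1
    old_root=$2
    # Show what would change without touching the metastore database.
    hive --service metatool -updateLocation "$new_root" "$old_root" -dryRun || return 1
    printf 'Apply this change? [y/N] '
    read -r answer
    case $answer in
        y|Y) hive --service metatool -updateLocation "$new_root" "$old_root" ;;
        *)   echo "Aborted; nothing was changed." ;;
    esac
}

# Hypothetical usage, matching the example in this article:
#   update_fs_root hdfs://hdp04:9000 hdfs://hdp01:9000
```

Keeping the confirmation step interactive is a deliberate choice here, since the update rewrites every matching location in the DBS and SDS tables.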

[hadoop@hdp04 ~]$ hive --service metatool -listFSRoot

Initializing HiveMetaTool..

Listing FS Roots..

hdfs://hdp04:9000/user/hive/warehouse/hivedb.db

hdfs://hdp04:9000/user/hive/warehouse

4. Test

Shut down the metastore on one node, then test with the beeline command as follows:

beeline> !connect jdbc:hive2://hdp04:10000 hadoop redhat org.apache.hive.jdbc.HiveDriver

Connecting to jdbc:hive2://hdp04:10000

Connected to: Apache Hive (version 2.3.2)

Driver: Hive JDBC (version 2.3.2)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://hdp04:10000> use hivedb;

OK

No rows affected (0.069 seconds)

0: jdbc:hive2://hdp04:10000> show tables;

II. HiveServer2 HA Configuration

1. Hive configuration

On every node, edit hive-site.xml and set the following parameters:

[hadoop@hdp01 ~]$ vi /u01/hive/conf/hive-site.xml

hive.server2.support.dynamic.service.discovery=true

hive.server2.zookeeper.namespace=hivesrv2

hive.zookeeper.quorum=hdp01:2181,hdp02:2181,hdp03:2181,hdp04:2181

hive.zookeeper.client.port=2181

hive.server2.thrift.bind.host=0.0.0.0

hive.server2.thrift.port=10000

2. Start the Hive services on the first node

Start the services:

[hadoop@hdp01 ~]$ hive --service metastore >/dev/null &

[hadoop@hdp01 ~]$ hive --service hiveserver2 >/dev/null &

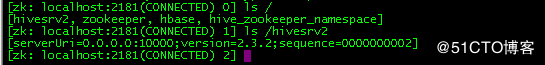

[hadoop@hdp01 ~]$ zkCli.sh

如图,hive服务已注册到ZooKeeper。

3、启动第二个节点的hive服务

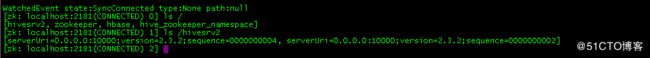

[hadoop@hdp04 ~]$ hive --service hiveserver2 >/dev/null &

[hadoop@hdp04 ~]$ zkCli.sh

如图,第二个hive也注册成功。
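The registrations can also be inspected as text rather than from a screenshot. The sketch below extracts the host:port part from a HiveServer2 znode name. It assumes the znode names follow the form `serverUri=host:port;version=...;sequence=...`, which is what Hive 2.x is expected to use but is not shown in the article text; the function name `hs2_uri_from_znode` is hypothetical.

```shell
#!/bin/sh
# Extract host:port from a HiveServer2 znode name.
# Assumed name format (not shown in the article text):
#   serverUri=hdp01:10000;version=2.3.2;sequence=0000000000
hs2_uri_from_znode() {
    printf '%s\n' "$1" | sed -n 's/^serverUri=\([^;]*\);.*$/\1/p'
}

# Listing the namespace on a live ensemble (zkCli.sh runs the trailing
# command and then exits):
#   zkCli.sh -server hdp01:2181 ls /hivesrv2

# Example with a sample znode name.
hs2_uri_from_znode 'serverUri=hdp01:10000;version=2.3.2;sequence=0000000000'
```

One thing worth checking in that listing: with hive.server2.thrift.bind.host=0.0.0.0, the registered address may itself be 0.0.0.0:10000 (the connection log later in this article reports "Connected to 0.0.0.0:10000"). Clients on other machines probably cannot use such an address, so binding each instance to its own hostname is likely the safer setting.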

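4. Connection test

Beeline is a Hive client that is included on the head nodes of an HDInsight cluster. Beeline uses JDBC to connect to HiveServer2, a service hosted on the HDInsight cluster. Beeline can also be used to access Hive on HDInsight remotely over the Internet. Here it is used to connect to the HiveServer2 instances on hdp01 and hdp04 through ZooKeeper service discovery.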
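With service discovery, the JDBC URL names the ZooKeeper quorum and the namespace instead of a HiveServer2 host. A minimal sketch of assembling such a URL from the values configured earlier (the function name `hs2_zk_jdbc_url` is hypothetical; the URL parameters are the ones used in this article's Beeline command):

```shell
#!/bin/sh
# Build a HiveServer2 JDBC URL that uses ZooKeeper service discovery.
#   $1: ZooKeeper quorum (comma-separated host:port list)
#   $2: value of hive.server2.zookeeper.namespace
hs2_zk_jdbc_url() {
    printf 'jdbc:hive2://%s/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=%s\n' "$1" "$2"
}

url=$(hs2_zk_jdbc_url "hdp01:2181,hdp02:2181,hdp03:2181,hdp04:2181" hivesrv2)
echo "$url"

# The URL can then be passed to Beeline, for example:
#   beeline -u "$url" -n hadoop -p
```

The namespace must match hive.server2.zookeeper.namespace in hive-site.xml, otherwise the client finds no registered instances.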

[hadoop@hdp01 ~]$ beeline -u "jdbc:hive2://hdp01:2181,hdp02:2181,hdp03:2181,hdp04:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hivesrv2" -n hadoop -p

Connecting to jdbc:hive2://hdp01:2181,hdp02:2181,hdp03:2181,hdp04:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hivesrv2;user=hadoop

Enter password for jdbc:hive2://hdp01:2181,hdp02:2181,hdp03:2181,hdp04:2181/: ******

17/12/13 15:48:16 [main]: INFO jdbc.HiveConnection: Connected to 0.0.0.0:10000

Connected to: Apache Hive (version 2.3.2)

Driver: Hive JDBC (version 2.3.2)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 2.3.2 by Apache Hive

0: jdbc:hive2://hdp01:2181,hdp02> show databases;

+----------------+

| database_name |

+----------------+

| default |

| hivedb |

+----------------+

2 rows selected (0.43 seconds)

0: jdbc:hive2://hdp01:2181,hdp02> use hivedb;

No rows affected (0.048 seconds)

0: jdbc:hive2://hdp01:2181,hdp02> show tables;

+--------------------------------------+

| tab_name |

+--------------------------------------+

| exam_score |

| hivedb__exam_score_exam_idx__ |

| hivedb__xj_student_xj_student_idx__ |

| tbdelivermsg |

| xj_student |

+--------------------------------------+

5 rows selected (0.143 seconds)

References:

1. Using the Beeline client with Apache Hive

2. HiveServer2 High Availability (HA) Configuration