Caffe output log analysis + debug_info

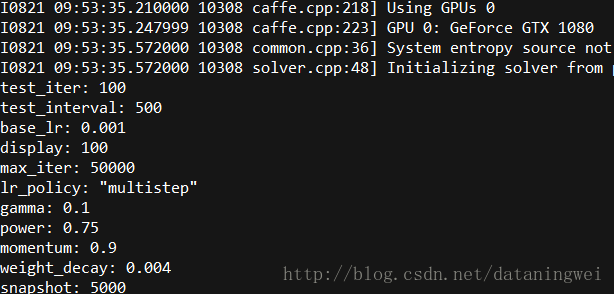

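1 Basic Caffe output information

While training, Caffe prints status information to the screen. Reading it makes it easy to check whether the run is behaving normally and to track down bugs.

Caffe's debug output is disabled by default. In that case the overall structure of the output is as follows: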


1.1 Loading and displaying the solver information

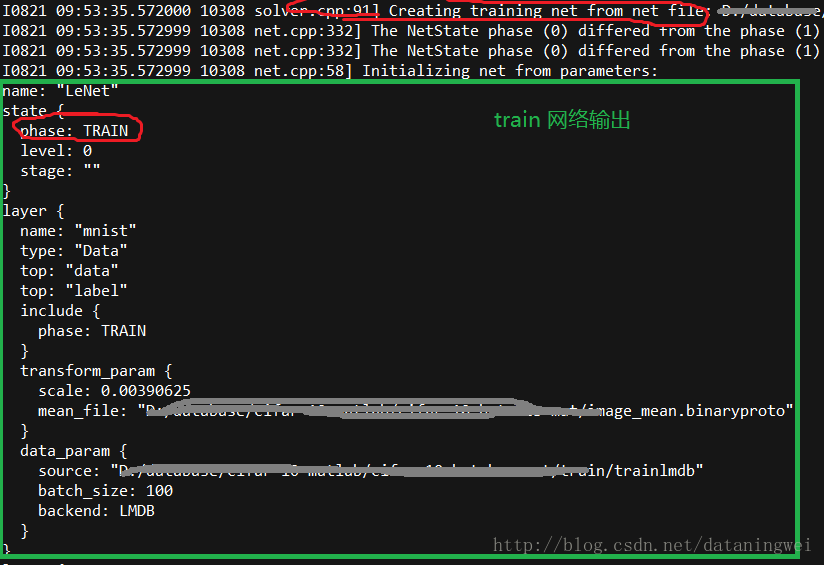

1.2 Train network structure output

1.3 Per-layer creation status of the Train net

I0821 09:53:35.572999 10308 layer_factory.hpp:77] Creating layer mnist #### creating the first layer

I0821 09:53:35.572999 10308 common.cpp:36] System entropy source not available, using fallback algorithm to generate seed instead.

I0821 09:53:35.572999 10308 net.cpp:100] Creating Layer mnist

I0821 09:53:35.572999 10308 net.cpp:418] mnist -> data

I0821 09:53:35.572999 10308 net.cpp:418] mnist -> label

I0821 09:53:35.572999 10308 data_transformer.cpp:25] Loading mean file from: ....../image_mean.binaryproto

I0821 09:53:35.579999 11064 common.cpp:36] System entropy source not available, using fallback algorithm to generate seed instead.

I0821 09:53:35.580999 11064 db_lmdb.cpp:40] Opened lmdb ......./train/trainlmdb

I0821 09:53:35.623999 10308 data_layer.cpp:41] output data size: 100,3,32,32 ### output blob size

I0821 09:53:35.628999 10308 net.cpp:150] Setting up mnist

I0821 09:53:35.628999 10308 net.cpp:157] Top shape: 100 3 32 32 (307200)

I0821 09:53:35.628999 10308 net.cpp:157] Top shape: 100 (100)

I0821 09:53:35.628999 10308 net.cpp:165] Memory required for data: 1229200

I0821 09:53:35.628999 10308 layer_factory.hpp:77] Creating layer conv1 ##### creating the second layer

I0821 09:53:35.628999 10308 net.cpp:100] Creating Layer conv1

I0821 09:53:35.628999 10308 net.cpp:444] conv1 <- data

I0821 09:53:35.628999 10308 net.cpp:418] conv1 -> conv1

I0821 09:53:35.629999 7532 common.cpp:36] System entropy source not available, using fallback algorithm to generate seed instead.

I0821 09:53:35.909999 10308 net.cpp:150] Setting up conv1

I0821 09:53:35.909999 10308 net.cpp:157] Top shape: 100 64 28 28 (5017600) #### output blob size

I0821 09:53:35.909999 10308 net.cpp:165] Memory required for data: 21299600

.

.

.

I0821 09:53:35.914000 10308 layer_factory.hpp:77] Creating layer loss

I0821 09:53:35.914000 10308 net.cpp:150] Setting up loss

I0821 09:53:35.914000 10308 net.cpp:157] Top shape: (1)

I0821 09:53:35.914000 10308 net.cpp:160] with loss weight 1

I0821 09:53:35.914000 10308 net.cpp:165] Memory required for data: 49322804

I0821 09:53:35.914000 10308 net.cpp:226] loss needs backward computation. ######## backward-propagation status of each layer

I0821 09:53:35.914000 10308 net.cpp:226] ip2 needs backward computation.

I0821 09:53:35.914000 10308 net.cpp:226] relu3 needs backward computation.

I0821 09:53:35.914000 10308 net.cpp:226] ip1 needs backward computation.

I0821 09:53:35.914000 10308 net.cpp:226] pool2 needs backward computation.

I0821 09:53:35.914000 10308 net.cpp:226] relu2 needs backward computation.

I0821 09:53:35.914000 10308 net.cpp:226] conv2 needs backward computation.

I0821 09:53:35.914000 10308 net.cpp:226] pool1 needs backward computation.

I0821 09:53:35.914000 10308 net.cpp:226] relu1 needs backward computation.

I0821 09:53:35.914000 10308 net.cpp:226] conv1 needs backward computation.

I0821 09:53:35.914000 10308 net.cpp:228] mnist does not need backward computation.

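I0821 09:53:35.914000 10308 net.cpp:270] This network produces output loss ######## number and names of the network's output nodes (important); the net outputs reported later all refer to these nodes ###############

I0821 09:53:35.914000 10308 net.cpp:283] Network initialization done. ### network creation complete

1. The network creation log shows the blob size at each node of the network.

2. It also tells you which outputs the network will report later on.

3. The Test net is created in the same way as the Train net, so it is not repeated here.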
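The "Memory required for data" figures in the log can be checked by hand. The sketch below assumes that each blob element is a 4-byte single-precision float and that the counter accumulates over all top blobs created so far; both assumptions are consistent with the numbers above. `blob_count` is a helper name introduced here for illustration only.

```python
from functools import reduce
from operator import mul

BYTES_PER_ELEMENT = 4  # assumption: single-precision float


def blob_count(shape):
    """Number of elements in a blob with the given shape."""
    return reduce(mul, shape, 1)


# Top shapes copied from the log above.
layers = [
    ("mnist", [(100, 3, 32, 32), (100,)]),  # data and label
    ("conv1", [(100, 64, 28, 28)]),
]

running_total = 0
totals = {}
for name, top_shapes in layers:
    # Memory grows by the size of every top blob the layer produces.
    running_total += sum(blob_count(s) for s in top_shapes) * BYTES_PER_ELEMENT
    totals[name] = running_total
    print(name, running_total)
```

The two printed totals can be compared with the `Memory required for data` lines logged after `Setting up mnist` and `Setting up conv1`.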

1.4 Iteration output of the Train and Test nets

I0821 09:53:35.929999 10308 solver.cpp:60] Solver scaffolding done.

I0821 09:53:35.929999 10308 caffe.cpp:252] Starting Optimization ####### network training starts

I0821 09:53:35.929999 10308 solver.cpp:279] Solving LeNet

I0821 09:53:35.929999 10308 solver.cpp:280] Learning Rate Policy: multistep

I0821 09:53:35.930999 10308 solver.cpp:337] Iteration 0, Testing net (#0) #### Test(Iteration 0)

I0821 09:53:35.993999 10308 blocking_queue.cpp:50] Data layer prefetch queue empty

I0821 09:53:36.180999 10308 solver.cpp:404] Test net output #0: accuracy = 0.1121 #### Test (Iteration 0): net output #0, accuracy (determined by the network definition)

I0821 09:53:36.180999 10308 solver.cpp:404] Test net output #1: loss = 2.30972 (* 1 = 2.30972 loss) #### Test (Iteration 0): net output #1, loss (determined by the network definition)

I0821 09:53:36.190999 10308 solver.cpp:228] Iteration 0, loss = 2.2891 #### Train (Iteration 0): network loss value

I0821 09:53:36.190999 10308 solver.cpp:244] Train net output #0: loss = 2.2891 (* 1 = 2.2891 loss) #### Train (Iteration 0): only one output value

I0821 09:53:36.190999 10308 sgd_solver.cpp:106] Iteration 0, lr = 0.001 #### Train (Iteration 0)

I0821 09:53:36.700999 10308 solver.cpp:228] Iteration 100, loss = 2.24716 #### Train (Iteration 100)

I0821 09:53:36.700999 10308 solver.cpp:244] Train net output #0: loss = 2.24716 (* 1 = 2.24716 loss) #### Train (Iteration 100)

I0821 09:53:36.700999 10308 sgd_solver.cpp:106] Iteration 100, lr = 0.001 #### Train (Iteration 100)

I0821 09:53:37.225999 10308 solver.cpp:228] Iteration 200, loss = 2.08563

I0821 09:53:37.225999 10308 solver.cpp:244] Train net output #0: loss = 2.08563 (* 1 = 2.08563 loss)

I0821 09:53:37.225999 10308 sgd_solver.cpp:106] Iteration 200, lr = 0.001

I0821 09:53:37.756000 10308 solver.cpp:228] Iteration 300, loss = 2.11631

I0821 09:53:37.756000 10308 solver.cpp:244] Train net output #0: loss = 2.11631 (* 1 = 2.11631 loss)

I0821 09:53:37.756000 10308 sgd_solver.cpp:106] Iteration 300, lr = 0.001

I0821 09:53:38.286999 10308 solver.cpp:228] Iteration 400, loss = 1.89424

I0821 09:53:38.286999 10308 solver.cpp:244] Train net output #0: loss = 1.89424 (* 1 = 1.89424 loss)

I0821 09:53:38.286999 10308 sgd_solver.cpp:106] Iteration 400, lr = 0.001

I0821 09:53:38.819999 10308 solver.cpp:337] Iteration 500, Testing net (#0) #### Test(Iteration 500)

I0821 09:53:39.069999 10308 solver.cpp:404] Test net output #0: accuracy = 0.3232 #### Test(Iteration 500)

I0821 09:53:39.069999 10308 solver.cpp:404] Test net output #1: loss = 1.87822 (* 1 = 1.87822 loss) #### Test(Iteration 500)

I0821 09:53:39.072999 10308 solver.cpp:228] Iteration 500, loss = 1.94478

I0821 09:53:39.072999 10308 solver.cpp:244] Train net output #0: loss = 1.94478 (* 1 = 1.94478 loss)

I0821 09:53:39.072999 10308 sgd_solver.cpp:106] Iteration 500, lr = 0.001

The output shows that one output cycle of Test and one of Train look like this:

- One Test cycle

– Iteration 0, Testing net (#0)

– Test net output #0: accuracy = 0.1121

– Test net output #1: loss = 2.30972 (* 1 = 2.30972 loss)

- One Train cycle

– Iteration 0, loss = 2.2891

– Train net output #0: loss = 2.2891 (* 1 = 2.2891 loss)

– Iteration 0, lr = 0.001
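The Test and Train cycles can be picked out of a log mechanically. This is a minimal sketch using regular expressions; the sample lines are copied from the log above, and `summarize` is a hypothetical helper, not part of Caffe.

```python
import re

RE_TEST_START = re.compile(r'Iteration (\d+), Testing net')
RE_TEST_OUT = re.compile(r'Test net output #(\d+): (\S+) = ([\.\deE+-]+)')
RE_TRAIN_LOSS = re.compile(r'Iteration (\d+), loss = ([\.\deE+-]+)')

SAMPLE = """\
I0821 09:53:35.930999 10308 solver.cpp:337] Iteration 0, Testing net (#0)
I0821 09:53:36.180999 10308 solver.cpp:404] Test net output #0: accuracy = 0.1121
I0821 09:53:36.180999 10308 solver.cpp:404] Test net output #1: loss = 2.30972 (* 1 = 2.30972 loss)
I0821 09:53:36.190999 10308 solver.cpp:228] Iteration 0, loss = 2.2891
I0821 09:53:36.700999 10308 solver.cpp:228] Iteration 100, loss = 2.24716
"""


def summarize(log_text):
    """Return (test_rows, train_rows) extracted from Caffe log text."""
    test_rows, train_rows = [], []
    for line in log_text.splitlines():
        match = RE_TEST_START.search(line)
        if match:
            # A new Test cycle starts; later "Test net output" lines fill it.
            test_rows.append({'iter': int(match.group(1))})
            continue
        match = RE_TEST_OUT.search(line)
        if match and test_rows:
            test_rows[-1][match.group(2)] = float(match.group(3))
            continue
        match = RE_TRAIN_LOSS.search(line)
        if match:
            train_rows.append((int(match.group(1)), float(match.group(2))))
    return test_rows, train_rows


test_rows, train_rows = summarize(SAMPLE)
print(test_rows)
print(train_rows)
```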

2 Parsing the log

2.1 debug_info output

- Add debug_info: true to the solver file

- This turns on Caffe's debug output
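With debug_info enabled, Caffe additionally logs `[Forward]` and `[Backward]` lines that report the mean absolute value of each blob's data or diff, plus a summary line with the L1 and L2 norms of all parameters. Below is a minimal sketch of how such lines can be matched. The patterns are the ones used by the script that follows; the sample lines and their numeric values are made up for illustration and do not come from a real run, and `classify` is a hypothetical helper.

```python
import re

NUM = r'([\.\deE+-]+)'
RE_FORWARD = re.compile(r'\[Forward\] Layer (\S+), (\S+) blob (\S+) data: ' + NUM)
RE_BACK_BOTTOM = re.compile(r'\[Backward\] Layer (\S+), bottom blob (\S+) diff: ' + NUM)
RE_BACK_PARAM = re.compile(r'\[Backward\] Layer (\S+), param blob (\d+) diff: ' + NUM)
RE_NORMS = re.compile(
    r'All net params \(data, diff\): L1 norm = \(' + NUM + ', ' + NUM +
    r'\); L2 norm = \(' + NUM + ', ' + NUM + r'\)')

# Illustrative lines only; the values are invented.
SAMPLE_LINES = [
    '[Forward] Layer conv1, top blob conv1 data: 0.0645',
    '[Forward] Layer conv1, param blob 0 data: 0.0089',
    '[Backward] Layer ip2, bottom blob ip1 diff: 0.0011',
    '[Backward] Layer conv1, param blob 0 diff: 1.5e-05',
    '[Backward] All net params (data, diff): L1 norm = (2143.5, 105.2); L2 norm = (23.1, 1.9)',
]


def classify(line):
    """Return (kind, key, value) for a debug_info line, or None."""
    m = RE_FORWARD.search(line)
    if m:
        return 'forward', '-'.join(m.group(1, 2, 3)), float(m.group(4))
    m = RE_BACK_BOTTOM.search(line)
    if m:
        return 'data_diff', m.group(1) + '-' + m.group(2), float(m.group(3))
    m = RE_BACK_PARAM.search(line)
    if m:
        return 'param_diff', m.group(1) + '-blob-' + m.group(2), float(m.group(3))
    m = RE_NORMS.search(line)
    if m:
        return 'norms', 'L1L2', tuple(float(v) for v in m.groups())
    return None


results = [classify(line) for line in SAMPLE_LINES]
for item in results:
    print(item)
```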

    import os
    import re
    import extract_seconds  # helper module shipped with Caffe in tools/extra
    import argparse
    import csv
    from collections import OrderedDict

    def get_datadiff_paradiff(line, data_row, para_row, data_list, para_list,
                              L_list, L_row, top_list, top_row, iteration):
        """Collect debug_info values from one log line.

        Each *_row accumulates values until a training-iteration line arrives
        (iteration > -1); the row is then stamped with NumIters, appended to
        its *_list, and a fresh row is started.
        """
        regex_data = re.compile(r'\[Backward\] Layer (\S+), bottom blob (\S+) diff: ([\.\deE+-]+)')
        regex_para = re.compile(r'\[Backward\] Layer (\S+), param blob (\d+) diff: ([\.\deE+-]+)')
        regex_L1L2 = re.compile(r'All net params \(data, diff\): L1 norm = \(([\.\deE+-]+), ([\.\deE+-]+)\); L2 norm = \(([\.\deE+-]+), ([\.\deE+-]+)\)')
        regex_topdata = re.compile(r'\[Forward\] Layer (\S+), (\S+) blob (\S+) data: ([\.\deE+-]+)')
        #regex_toppara=re.compile('')

        # Diff of each bottom blob in the backward pass
        out_match_data = regex_data.search(line)
        if out_match_data or iteration > -1:
            if not data_row or iteration > -1:
                if data_row:
                    data_row['NumIters'] = iteration
                    data_list.append(data_row)
                data_row = OrderedDict()
            if out_match_data:
                layer_name = out_match_data.group(1)
                blob_name = out_match_data.group(2)
                data_diff_value = out_match_data.group(3)
                key = layer_name + '-' + blob_name
                data_row[key] = float(data_diff_value)

        # Diff of each parameter blob in the backward pass
        out_match_para = regex_para.search(line)
        if out_match_para or iteration > -1:
            if not para_row or iteration > -1:
                if para_row:
                    para_row['NumIters'] = iteration
                    para_list.append(para_row)
                para_row = OrderedDict()
            if out_match_para:
                layer_name = out_match_para.group(1)
                param_d = out_match_para.group(2)
                para_diff_value = out_match_para.group(3)
                layer_name = layer_name + '-blob' + '-' + param_d
                para_row[layer_name] = para_diff_value

        # L1/L2 norms of all net parameters (data and diff)
        out_match_norm = regex_L1L2.search(line)
        if out_match_norm or iteration > -1:
            if not L_row or iteration > -1:
                if L_row:
                    L_row['NumIters'] = iteration
                    L_list.append(L_row)
                L_row = OrderedDict()
            if out_match_norm:
                L_row['data-L1'] = out_match_norm.group(1)
                L_row['diff-L1'] = out_match_norm.group(2)
                L_row['data-L2'] = out_match_norm.group(3)
                L_row['diff-L2'] = out_match_norm.group(4)

        # Data of each top/param blob in the forward pass
        out_match_top = regex_topdata.search(line)
        if out_match_top or iteration > -1:
            if not top_row or iteration > -1:
                if top_row:
                    top_row['NumIters'] = iteration
                    top_list.append(top_row)
                top_row = OrderedDict()
            if out_match_top:
                layer_name = out_match_top.group(1)
                top_para = out_match_top.group(2)
                blob_or_num = out_match_top.group(3)
                key = layer_name + '-' + top_para + '-' + blob_or_num
                data_value = out_match_top.group(4)
                top_row[key] = float(data_value)

        return data_list, data_row, para_list, para_row, L_list, L_row, top_list, top_row

    def parse_log(path_to_log):
        """Parse log file

        Returns (train_dict_list, test_dict_list, data_diff_list,
        para_diff_list, L1L2_list, top_list)

        Each element is a list of dicts, and every dict defines one table row.
        """
        regex_iteration = re.compile(r'Iteration (\d+)')
        regex_train_iteration = re.compile(r'Iteration (\d+), loss')
        regex_train_output = re.compile(r'Train net output #(\d+): (\S+) = ([\.\deE+-]+)')
        regex_test_output = re.compile(r'Test net output #(\d+): (\S+) = ([\.\deE+-]+)')
        regex_learning_rate = re.compile(r'lr = ([-+]?[0-9]*\.?[0-9]+([eE]?[-+]?[0-9]+)?)')
        regex_backward = re.compile(r'\[Backward\] Layer ')

        # Pick out lines of interest
        iteration = -1
        train_iter = -1
        learning_rate = float('NaN')
        train_dict_list = []
        test_dict_list = []
        train_row = None
        test_row = None

        data_diff_list = []
        para_diff_list = []
        L1L2_list = []
        top_list = []
        data_diff_row = None
        para_diff_row = None
        L1L2_row = None
        top_row = None

        logfile_year = extract_seconds.get_log_created_year(path_to_log)
        with open(path_to_log) as f:
            start_time = extract_seconds.get_start_time(f, logfile_year)

            for line in f:
                iteration_match = regex_iteration.search(line)
                train_iter_match = regex_train_iteration.search(line)
                if iteration_match:
                    iteration = float(iteration_match.group(1))
                if train_iter_match:
                    train_iter = float(train_iter_match.group(1))
                if iteration == -1:
                    # Only start parsing for other stuff if we've found the first
                    # iteration
                    continue

                time = extract_seconds.extract_datetime_from_line(line,
                                                                  logfile_year)
                seconds = (time - start_time).total_seconds()

                learning_rate_match = regex_learning_rate.search(line)
                if learning_rate_match:
                    learning_rate = float(learning_rate_match.group(1))

                back_match = regex_backward.search(line)
                # if back_match:
                data_diff_list, data_diff_row, para_diff_list, para_diff_row, L1L2_list, L1L2_row, top_list, top_row = get_datadiff_paradiff(
                    line, data_diff_row, para_diff_row,
                    data_diff_list, para_diff_list,
                    L1L2_list, L1L2_row,
                    top_list, top_row,
                    train_iter
                )
                # Reset so that only "Iteration N, loss" lines flush the rows
                train_iter = -1

                train_dict_list, train_row = parse_line_for_net_output(
                    regex_train_output, train_row, train_dict_list,
                    line, iteration, seconds, learning_rate
                )
                test_dict_list, test_row = parse_line_for_net_output(
                    regex_test_output, test_row, test_dict_list,
                    line, iteration, seconds, learning_rate
                )

        fix_initial_nan_learning_rate(train_dict_list)
        fix_initial_nan_learning_rate(test_dict_list)

        return train_dict_list, test_dict_list, data_diff_list, para_diff_list, L1L2_list, top_list

    def parse_line_for_net_output(regex_obj, row, row_dict_list,
                                  line, iteration, seconds, learning_rate):
        """Parse a single line for training or test output

        Returns a tuple with (row_dict_list, row)
        row: may be either a new row or an augmented version of the current row
        row_dict_list: may be either the current row_dict_list or an augmented
        version of the current row_dict_list
        """
        output_match = regex_obj.search(line)
        if output_match:
            if not row or row['NumIters'] != iteration:
                # Push the last row and start a new one
                if row:
                    # If we're on a new iteration, push the last row
                    # This will probably only happen for the first row; otherwise
                    # the full row checking logic below will push and clear full
                    # rows
                    row_dict_list.append(row)

                row = OrderedDict([
                    ('NumIters', iteration),
                    ('Seconds', seconds),
                    ('LearningRate', learning_rate)
                ])

            # output_num is not used; may be used in the future
            # output_num = output_match.group(1)
            output_name = output_match.group(2)
            output_val = output_match.group(3)
            row[output_name] = float(output_val)

        if row and len(row_dict_list) >= 1 and len(row) == len(row_dict_list[0]):
            # The row is full, based on the fact that it has the same number of
            # columns as the first row; append it to the list
            row_dict_list.append(row)
            row = None

        return row_dict_list, row

    def fix_initial_nan_learning_rate(dict_list):
        """Correct initial value of learning rate

        Learning rate is normally not printed until after the initial test and
        training step, which means the initial testing and training rows have
        LearningRate = NaN. Fix this by copying over the LearningRate from the
        second row, if it exists.
        """
        if len(dict_list) > 1:
            dict_list[0]['LearningRate'] = dict_list[1]['LearningRate']

    def save_csv_files(logfile_path, output_dir, train_dict_list, test_dict_list,
                       data_diff_list, para_diff_list, L1L2_list, top_list,
                       delimiter=',', verbose=False):
        """Save CSV files to output_dir

        If the input log file is, e.g., caffe.INFO, the names will be
        caffe.INFO.train and caffe.INFO.test, plus caffe.INFO.datadiff,
        caffe.INFO.paradiff, caffe.INFO.L1L2 and caffe.INFO.topdata for the
        debug_info values
        """
        log_basename = os.path.basename(logfile_path)
        train_filename = os.path.join(output_dir, log_basename + '.train')
        write_csv(train_filename, train_dict_list, delimiter, verbose)

        test_filename = os.path.join(output_dir, log_basename + '.test')
        write_csv(test_filename, test_dict_list, delimiter, verbose)

        data_diff_filename = os.path.join(output_dir, log_basename + '.datadiff')
        write_csv(data_diff_filename, data_diff_list, delimiter, verbose)

        para_diff_filename = os.path.join(output_dir, log_basename + '.paradiff')
        write_csv(para_diff_filename, para_diff_list, delimiter, verbose)

        L1L2_filename = os.path.join(output_dir, log_basename + '.L1L2')
        write_csv(L1L2_filename, L1L2_list, delimiter, verbose)

        topdata_filename = os.path.join(output_dir, log_basename + '.topdata')
        write_csv(topdata_filename, top_list, delimiter, verbose)

    def write_csv(output_filename, dict_list, delimiter, verbose=False):
        """Write a CSV file
        """
        if not dict_list:
            if verbose:
                print('Not writing %s; no lines to write' % output_filename)
            return

        dialect = csv.excel
        dialect.delimiter = delimiter

        with open(output_filename, 'w') as f:
            # The column names are taken from the first row
            dict_writer = csv.DictWriter(f, fieldnames=dict_list[0].keys(),
                                         dialect=dialect)
            dict_writer.writeheader()
            dict_writer.writerows(dict_list)
        if verbose:
            print('Wrote %s' % output_filename)

    def parse_args():
        description = ('Parse a Caffe training log into CSV files '
                       'containing training, testing and debug_info information')
        parser = argparse.ArgumentParser(description=description)

        parser.add_argument('logfile_path',
                            help='Path to log file')

        parser.add_argument('output_dir',
                            help='Directory in which to place output CSV files')

        parser.add_argument('--verbose',
                            action='store_true',
                            help='Print some extra info (e.g., output filenames)')

        parser.add_argument('--delimiter',
                            default=',',
                            help=('Column delimiter in output files '
                                  '(default: \'%(default)s\')'))

        args = parser.parse_args()
        return args

def main():

args = parse_args()

train_dict_list, test_dict_list,data_diff_list,para_diff_list,L1L2_list,top_list = parse_log(args.logfile_path)

save_csv_files(args.logfile_path, args.output_dir, train_dict_list,

test_dict_list, data_diff_list, para_diff_list, L1L2_list,top_list,delimiter=args.delimiter)

if __name__ == '__main__':

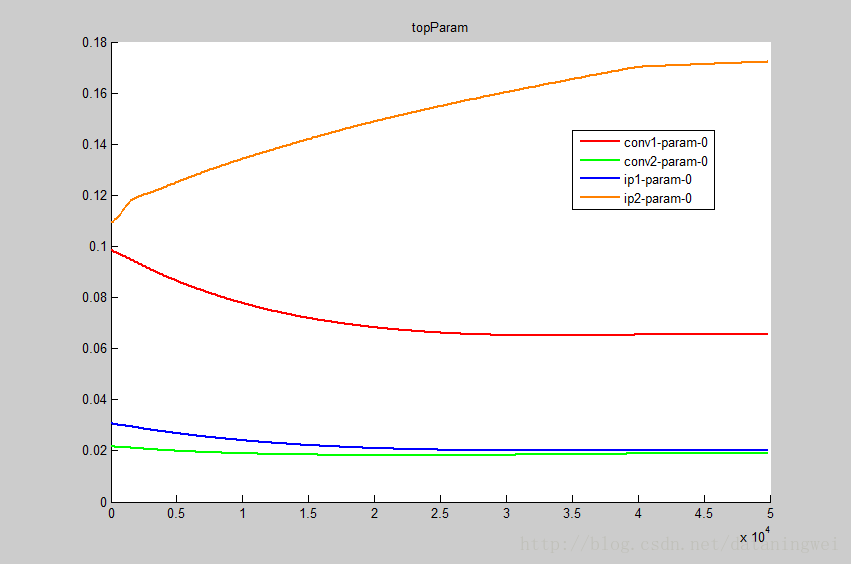

main()参数变化趋势图。
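To draw trend plots like the one above, the CSV files written by the script first have to be read back as numeric columns. This is a minimal sketch using only the standard library; `load_columns` is a hypothetical helper, and the column names and values in the demo are illustrative rather than taken from a real run.

```python
import csv
import io


def load_columns(csv_file, delimiter=','):
    """Read a CSV with a header row into {column_name: [float, ...]}."""
    reader = csv.DictReader(csv_file, delimiter=delimiter)
    columns = {name: [] for name in reader.fieldnames}
    for row in reader:
        for name in columns:
            value = row.get(name)
            # Missing cells become NaN so every column keeps the same length.
            columns[name].append(float(value) if value else float('nan'))
    return columns


# Stand-in for an open .datadiff file; the contents are made up.
demo = io.StringIO(
    "conv1-data,ip2-ip1,NumIters\n"
    "0.001,0.002,0.0\n"
    "0.003,0.004,100.0\n"
)
cols = load_columns(demo)
print(cols['NumIters'])
print(cols['conv1-data'])
```

Each column can then be plotted against `NumIters` with whatever plotting library is at hand.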