Distributed Messaging Middleware (7): Kafka Installation and Configuration Explained (Linux)

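I. Preparing the ZooKeeper Cluster

Kafka relies on ZooKeeper for cluster management, so set up a ZooKeeper cluster before installing Kafka. For the installation steps, see Linux Series (7): ZooKeeper Cluster Setup.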

II. Kafka Core Configuration

1. Upload the archive and extract it.

2. After extracting, cd into /lamp/kafka/config and list all Kafka configuration files:

-rw-r--r--. 1 root root 906 May 17 21:26 connect-console-sink.properties

-rw-r--r--. 1 root root 909 May 17 21:26 connect-console-source.properties

-rw-r--r--. 1 root root 2760 May 17 21:26 connect-distributed.properties

-rw-r--r--. 1 root root 883 May 17 21:26 connect-file-sink.properties

-rw-r--r--. 1 root root 881 May 17 21:26 connect-file-source.properties

-rw-r--r--. 1 root root 1074 May 17 21:26 connect-log4j.properties

-rw-r--r--. 1 root root 2061 May 17 21:26 connect-standalone.properties

-rw-r--r--. 1 root root 1199 May 17 21:26 consumer.properties

-rw-r--r--. 1 root root 4369 May 17 21:26 log4j.properties

-rw-r--r--. 1 root root 1900 May 17 21:26 producer.properties

-rw-r--r--. 1 root root 5243 May 17 21:26 server.properties

-rw-r--r--. 1 root root 1032 May 17 21:26 tools-log4j.properties

-rw-r--r--. 1 root root 1041 Jun 18 11:19 zookeeper.properties

zookeeper.properties

# the directory where the snapshot is stored.

dataDir=/usr/local/cloud/zookeeper1/data

# the port at which the clients will connect

clientPort=2181

# disable the per-ip limit on the number of connections since this is a non-production config

maxClientCnxns=0

server.properties

############################# Server Basics #############################

# Specifies the broker ID. Each Kafka instance has a unique broker ID as its identifier.

# The id of the broker. This must be set to a unique integer for each broker.

broker.id=0

############################# Log Basics #############################

# Path where Kafka persists its log files. In production, point this outside the temporary /tmp directory.

# A comma separated list of directories under which to store log files

log.dirs=/tmp/kafka-logs

# The default number of log partitions per topic. More partitions allow greater

# parallelism for consumption, but this will also result in more files across

# the brokers.

# (Sets the number of partitions per topic on this broker.)

num.partitions=1

# The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.

# This value is recommended to be increased for installations with data dirs located in RAID array.

num.recovery.threads.per.data.dir=1

############################# Zookeeper #############################

# Zookeeper connection string (see zookeeper docs for details).

# This is a comma separated host:port pairs, each corresponding to a zk

# server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002".

# You can also append an optional chroot string to the urls to specify the

# root directory for all kafka znodes.

# (ZooKeeper service address)

zookeeper.connect=192.168.220.128:2181

# Timeout in ms for connecting to zookeeper

# (How long to wait for a ZooKeeper response before timing out)

zookeeper.connection.timeout.ms=6000

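server.properties is the Kafka broker's configuration file. It mainly configures the broker's logs, partitions, and ZooKeeper connection. Besides the main settings shown above, it also contains the Log Retention Policy (configured by total retention time, segment size, and check interval) and the Log Flush Policy (for example, start flushing to disk once 10000 messages have accumulated, or flush every 1000 ms). All of these are tunable, which makes the broker very flexible.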
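
One detail worth checking when annotating server.properties: the file uses the Java properties format, in which # starts a comment only at the beginning of a line. The sketch below uses only java.util.Properties (no Kafka classes; the class name InlineCommentCheck is made up for illustration) to show what a trailing annotation does to a value, on the assumption that the broker reads the file with the same parser.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

class InlineCommentCheck {
    // Parses properties-format text exactly as java.util.Properties does.
    static Properties parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    public static void main(String[] args) {
        // Annotation on the same line: it becomes part of the value.
        Properties inline = parse("num.partitions=1 # partitions per topic\n");
        // Annotation on its own line: it is a real comment.
        Properties separate = parse("# partitions per topic\nnum.partitions=1\n");

        System.out.println("[" + inline.getProperty("num.partitions") + "]");
        // prints [1 # partitions per topic]
        System.out.println("[" + separate.getProperty("num.partitions") + "]");
        // prints [1]
    }
}
```

A value such as "1 # partitions per topic" cannot be read as an integer, so it is safer to keep every annotation on a line of its own.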

producer.properties

############################# Producer Basics #############################

# list of brokers used for bootstrapping knowledge about the rest of the cluster

# format: host1:port1,host2:port2 ...

bootstrap.servers=192.168.220.128:9092

# specify the compression codec for all data generated: none, gzip, snappy, lz4

compression.type=none

consumer.properties

# Zookeeper connection string

# comma separated host:port pairs, each corresponding to a zk

# server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002"

zookeeper.connect=127.0.0.1:2181

# timeout in ms for connecting to zookeeper

zookeeper.connection.timeout.ms=6000

#consumer group id

group.id=test-consumer-group

#consumer timeout

#consumer.timeout.ms=5000

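1. Starting Kafka

Start the ZooKeeper service before starting Kafka: bin/zookeeper-server-start.sh /usr/local/cloud/zookeeper1/conf/zoo.cfg (the argument is the ZooKeeper configuration file)

Start Kafka: bin/kafka-server-start.sh /lamp/kafka/config/server.properties >/dev/null 2>&1 & (runs Kafka in the background)

Run jps to check the started processes: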

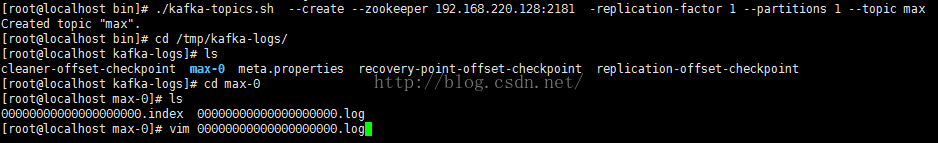
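
Besides jps, the startup order can be verified by checking that each service accepts TCP connections. This is a minimal sketch using only the JDK; the class name PortCheck is made up for illustration, and the host and ports are the ones used in the configuration above, so adjust them to your environment.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

class PortCheck {
    // Returns true if a TCP connection to host:port succeeds within timeoutMs.
    static boolean isListening(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Refused, unreachable, or timed out: treat as "not listening".
            return false;
        }
    }

    public static void main(String[] args) {
        String host = "192.168.220.128";
        // ZooKeeper should be up first, then the Kafka broker.
        System.out.println("ZooKeeper (2181): " + isListening(host, 2181, 2000));
        System.out.println("Kafka (9092): " + isListening(host, 9092, 2000));
    }
}
```

An open port only shows that something is listening there; jps remains the way to confirm which process it is.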

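2. Creating a topic

bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic max

After the topic is created, look in Kafka's log directory (log.dirs): a log directory has already been created for the new topic to hold its partition data (log directories and partitions map 1:1).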
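
To make the 1:1 mapping concrete, the following sketch lists the directories one would expect to find under log.dirs for a topic. It assumes Kafka's "<topic>-<partition index>" directory naming with indexes starting at 0; the class and method names are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;

class PartitionDirs {
    // Builds the directory paths expected under logDir for a topic,
    // one per partition, named "<topic>-<partition index>".
    static List<String> expectedDirs(String logDir, String topic, int partitions) {
        List<String> dirs = new ArrayList<>();
        for (int p = 0; p < partitions; p++) {
            dirs.add(logDir + "/" + topic + "-" + p);
        }
        return dirs;
    }

    public static void main(String[] args) {
        // Topic "max" was created with --partitions 1 and log.dirs=/tmp/kafka-logs.
        System.out.println(expectedDirs("/tmp/kafka-logs", "max", 1));
        // prints [/tmp/kafka-logs/max-0]
    }
}
```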

3. Viewing topics

List all topics: bin/kafka-topics.sh --list --zookeeper localhost:2181 (returns the names of the topics created so far, e.g. max, linxi)

Show the details of one topic: bin/kafka-topics.sh --describe --zookeeper localhost:2181 --topic max

4. Deleting a topic

bin/kafka-run-class.sh kafka.admin.TopicCommand --delete --topic max --zookeeper 192.168.220.128:2181

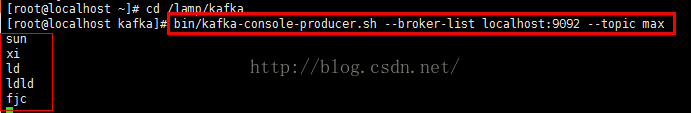

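5. Starting a producer

bin/kafka-console-producer.sh --broker-list localhost:9092 --topic max (produces messages to the topic named max)

6. Starting a consumer

bin/kafka-console-consumer.sh --zookeeper localhost:2181 --topic max --from-beginning

Messages flow between producer and consumer in real time: as soon as one side produces a message, the other side consumes it.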

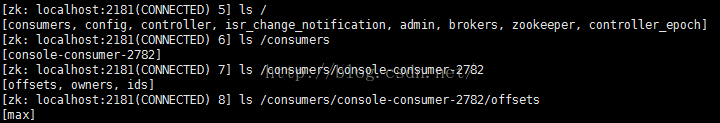

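In addition, ZooKeeper records the consumption records (offsets) for each partition. Connect to the service with the ZooKeeper client to inspect them.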
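
As a rough guide to where to look, the sketch below builds the znode path under which a ZooKeeper-based consumer keeps its offset for one partition. It assumes the /consumers/<group>/offsets/<topic>/<partition> layout and no chroot in zookeeper.connect; the class and method names are made up for illustration.

```java
class OffsetPath {
    // Builds the znode path that holds a consumer group's offset
    // for one partition of a topic (ZooKeeper-based consumers only).
    static String offsetZnode(String group, String topic, int partition) {
        return "/consumers/" + group + "/offsets/" + topic + "/" + partition;
    }

    public static void main(String[] args) {
        // Group "max" consuming partition 0 of topic "max".
        System.out.println(offsetZnode("max", "max", 0));
        // prints /consumers/max/offsets/max/0
    }
}
```

In the ZooKeeper client, running get on that path should show the stored offset once the group has consumed at least one message.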

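III. Kafka Cluster

A Kafka cluster is set up much like a ZooKeeper cluster and is very simple. Because the ZooKeeper cluster is already in place, Kafka only needs several broker instances: give each one a distinct broker.id in the server.properties shown above, and list all three ZooKeeper instances in zookeeper.connect.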
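
The per-broker differences can be sketched as a small generator of server.properties overrides. This is only an illustration under stated assumptions: the ZooKeeper addresses are hypothetical, the instances are assumed to share one host (hence a separate log.dirs and port for each, with made-up values), and the port key applies to older releases such as the one used here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

class ClusterConfig {
    // Builds one set of server.properties overrides per broker instance.
    static List<Properties> brokerConfigs(int brokers, String zkConnect) {
        List<Properties> configs = new ArrayList<>();
        for (int id = 0; id < brokers; id++) {
            Properties p = new Properties();
            // The one setting that must differ on every broker.
            p.setProperty("broker.id", String.valueOf(id));
            // Every broker lists the same ZooKeeper instances.
            p.setProperty("zookeeper.connect", zkConnect);
            // Assumption: instances share a host, so each one needs its
            // own data directory and port (illustrative values).
            p.setProperty("log.dirs", "/lamp/kafka/logs/broker-" + id);
            p.setProperty("port", String.valueOf(9092 + id));
            configs.add(p);
        }
        return configs;
    }

    public static void main(String[] args) {
        // Hypothetical three-instance ZooKeeper cluster on one host.
        String zk = "192.168.220.128:2181,192.168.220.128:2182,192.168.220.128:2183";
        for (Properties p : brokerConfigs(3, zk)) {
            System.out.println("broker.id=" + p.getProperty("broker.id")
                    + " port=" + p.getProperty("port")
                    + " log.dirs=" + p.getProperty("log.dirs"));
        }
    }
}
```

Each set of overrides would go into that instance's own copy of server.properties, which is then passed to bin/kafka-server-start.sh.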

IV. Client Implementation

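import java.util.Properties;

import kafka.producer.KeyedMessage;
import kafka.producer.ProducerConfig;
import kafka.serializer.StringEncoder;

/*
 * Message producer
 */
public class Producer extends Thread {

    private final String topic;

    public Producer(String topic) {
        super();
        this.topic = topic;
    }

    @Override
    public void run() {
        // Fully qualified because this class is itself named Producer
        kafka.javaapi.producer.Producer<String, String> producer = createProducer();
        try {
            producer.send(new KeyedMessage<String, String>(topic, "xiaowei"));
        } finally {
            // Release the connection once the message has been sent
            producer.close();
        }
    }

    private kafka.javaapi.producer.Producer<String, String> createProducer() {
        Properties props = new Properties();
        props.setProperty("zookeeper.connect", "192.168.220.128:2181");
        // Messages are sent as strings
        props.setProperty("serializer.class", StringEncoder.class.getName());
        // Broker address; the port is the one configured in producer.properties
        props.setProperty("metadata.broker.list", "192.168.220.128:9092");
        return new kafka.javaapi.producer.Producer<String, String>(new ProducerConfig(props));
    }

    public static void main(String[] args) {
        new Producer("max").start();
    }
}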

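import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Properties;

import kafka.consumer.ConsumerConfig;
import kafka.consumer.ConsumerIterator;
import kafka.consumer.KafkaStream;
import kafka.javaapi.consumer.ConsumerConnector;

/*
 * Message consumer
 */
public class Consumer extends Thread {

    private final String topic;

    public Consumer(String topic) {
        super();
        this.topic = topic;
    }

    @Override
    public void run() {
        // Create the consumer connection from the properties
        ConsumerConnector consumer = createConsumer();
        // topicMap: topic name -> number of streams (consumer threads) to open for it
        Map<String, Integer> topicMap = new HashMap<String, Integer>();
        topicMap.put(topic, 1);
        // msgStreams: the streams for each requested topic; kafkaStream: the single stream for our topic
        Map<String, List<KafkaStream<byte[], byte[]>>> msgStreams = consumer.createMessageStreams(topicMap);
        KafkaStream<byte[], byte[]> kafkaStream = msgStreams.get(topic).get(0);
        // Message processing: hasNext() blocks until a message arrives (unless consumer.timeout.ms is set)
        ConsumerIterator<byte[], byte[]> iterator = kafkaStream.iterator();
        while (iterator.hasNext()) {
            byte[] msg = iterator.next().message();
            System.out.println("message is " + new String(msg));
        }
    }

    private ConsumerConnector createConsumer() {
        Properties props = new Properties();
        props.setProperty("zookeeper.connect", "192.168.220.128:2181");
        props.setProperty("group.id", "max");
        // Fully qualified because this class is itself named Consumer
        return kafka.consumer.Consumer.createJavaConsumerConnector(new ConsumerConfig(props));
    }

    public static void main(String[] args) {
        new Consumer("max").start();
    }
}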