For how to set up ELK itself, see my earlier article: https://blog.51cto.com/chentianwang/1915326

Let's get straight to it.

Environment

192.168.102.20: nginx, Logstash 6.1 (agent)

192.168.102.21: Logstash 6.1 (server), Redis server

1. Set up a test nginx environment

The nginx configuration is as follows:

worker_processes 1;

events {
    worker_connections 1024;
}

http {
    include mime.types;
    include /etc/nginx/conf.d/*.conf;
    default_type application/octet-stream;

    log_format main '{"http_x_forwarded_for":"$http_x_forwarded_for","remote_user":"$remote_user","time_local":"$time_local","request":"$request","status":"$status","body_bytes_sent":"$body_bytes_sent","request_body":"$request_body","http_referer":"$http_referer","remote_addr":"$remote_addr","fastcgi_script_name":"$fastcgi_script_name","request_time":"$request_time","proxy_host":"$proxy_host","upstream_addr":"$upstream_addr","upstream_response_time":"$upstream_response_time","agent":"$http_user_agent"}';

    access_log /var/log/nginx/access.log main;
    sendfile on;
    keepalive_timeout 65;

    upstream real_server {
        server 127.0.0.1:8080;
    }

    server {
        listen 80;
        server_name asd.com;

        location / {
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_pass http://real_server;
        }

        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }
    }
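}

Note that log_format already writes each log entry as a JSON object, which makes it easy for Logstash to consume. If a field value can contain a double quote (for example the User-Agent or the request body), the line is no longer valid JSON. On nginx 1.11.8 or later you can add escape=json after the format name (log_format main escape=json '...';) so that nginx escapes such values correctly.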
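Because every access-log line is a self-contained JSON object, any JSON parser can decode it. The sketch below uses plain Python and the standard library only; the function name parse_access_line is hypothetical. It approximates what the Logstash json filter does with the message field: each key becomes a field of the event. Note that status comes out as the string "304", not a number, because the log_format wraps $status in quotes. That is why the Logstash conditional compares against "304" in quotes. A line that is not valid JSON, such as one containing an unescaped quote, yields None here, whereas Logstash would tag the event with _jsonparsefailure.

```python
import json

# Sample line copied from the access log shown in this article.
LINE = ('{"http_x_forwarded_for":"-","remote_user":"-",'
        '"time_local":"26/Jan/2018:15:32:53 +0800","request":"GET / HTTP/1.1",'
        '"status":"304","body_bytes_sent":"0","request_body":"-",'
        '"http_referer":"-","remote_addr":"192.168.102.1",'
        '"fastcgi_script_name":"/","request_time":"0.001",'
        '"proxy_host":"real_server","upstream_addr":"127.0.0.1:8080",'
        '"upstream_response_time":"0.001",'
        '"agent":"Mozilla/5.0 (Windows NT 10.0; WOW64; rv:57.0) Gecko/20100101 Firefox/57.0"}')


def parse_access_line(line):
    """Decode one JSON access-log line into a flat dict of fields.

    Hypothetical helper, roughly mirroring json { source => "message" }:
    every key of the JSON object becomes a top-level field.
    Returns None when the line is not a valid JSON object.
    """
    try:
        event = json.loads(line)
    except ValueError:  # json.JSONDecodeError subclasses ValueError
        return None
    return event if isinstance(event, dict) else None


event = parse_access_line(LINE)
print(event["status"], event["proxy_host"], event["upstream_addr"])

# An unescaped quote inside a value breaks the JSON entirely.
print(parse_access_line('{"agent":"a"b"}'))  # None
```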

An entry in the access log looks like this:

{"http_x_forwarded_for":"-","remote_user":"-","time_local":"26/Jan/2018:15:32:53 +0800","request":"GET / HTTP/1.1","status":"304","body_bytes_sent":"0","request_body":"-","http_referer":"-","remote_addr":"192.168.102.1","fastcgi_script_name":"/","request_time":"0.001","proxy_host":"real_server","upstream_addr":"127.0.0.1:8080","upstream_response_time":"0.001","agent":"Mozilla/5.0 (Windows NT 10.0; WOW64; rv:57.0) Gecko/20100101 Firefox/57.0"}

For testing, a second server listening on port 8080 acts as the upstream:

server {
    server_name 127.0.0.1;
    listen 8080;
    root /data/;
    index index.html;
}

2. Logstash configuration

On 192.168.102.20 (agent):

input {
    file {
        path => "/var/log/nginx/access.log"
    }
}

output {
    redis {
        host => "192.168.102.21"  # Redis host
        port => 6379              # Redis port
        data_type => "list"       # append events to a Redis list (pub/sub would be "channel")
        key => "nginx_102_20"     # name of the Redis list (key)
    }
}

On 192.168.102.21 (server):

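input {
    redis {
        host => "192.168.102.21"  # local Redis address
        port => 6379              # Redis port
        type => "redis-input"     # type set on each incoming event
        data_type => "list"       # read events from a Redis list
        key => "nginx_102_20"     # must match the key used by the agent
    }
}

filter {
    json {
        source => "message"
    }
}

output {
    stdout {}

    if [status] == "304" {
        exec {
            command => "python /root/elk_alarm/count.py err_304 %{proxy_host}"
        }
    }

    elasticsearch {
        index => "test"  # index name
        hosts => [ "192.168.102.21:9200" ]
    }
}

Here the agent writes events into Redis and the server reads them back out. The server parses each line as JSON and checks the status code. When it finds a 304, it runs a Python script. (304 is used only because it is easy to trigger during testing; define your own rules. Conditionals also support and, or, and so on.)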
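The flow described above can be simulated in a few lines of Python to make the moving parts explicit. This is a sketch under stated assumptions: an in-memory deque stands in for the Redis list nginx_102_20, and a nested dict stands in for the Redis hashes that count.py updates, so it runs without Redis or Logstash. The helper names agent_ship, server_drain and count_alarm are hypothetical. The sketch mirrors how Logstash ships each event as JSON with the raw line in its message field, and how the json filter merges the decoded fields into the event before the status test.

```python
import json
from collections import deque

# Hypothetical in-memory stand-in for the Redis list "nginx_102_20".
queue = deque()

# Hypothetical stand-in for the Redis hashes count.py updates:
# {hash_name: {field: count}}
counters = {}


def agent_ship(line):
    """Agent: wrap the raw line in an event and append it to the list."""
    queue.append(json.dumps({"message": line}))


def count_alarm(name, field):
    """Stand-in for count.py: increment counters[name][field] by 1."""
    bucket = counters.setdefault(name, {})
    bucket[field] = bucket.get(field, 0) + 1


def server_drain():
    """Server: pop events from the head of the list until it is empty."""
    while queue:
        event = json.loads(queue.popleft())         # redis input, json codec
        event.update(json.loads(event["message"]))  # json { source => "message" }
        if event.get("status") == "304":            # if [status] == "304"
            count_alarm("err_304", event.get("proxy_host", "-"))  # exec


# Ship three test lines: two 304s and one 200, all via real_server.
for status in ("304", "200", "304"):
    agent_ship(json.dumps({"status": status, "proxy_host": "real_server"}))

server_drain()
print(counters)  # {'err_304': {'real_server': 2}}
```

The list decouples the two hosts: the agent keeps appending even if the server is briefly down, and the server catches up from the head of the list.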

3. Custom alerting scripts

The idea is that whenever a matching event is found, a counter in Redis is incremented.

vim count.py

#!/usr/bin/python

import redis

import sys

f=open("/root/elk_alarm/status.log","a")

f.write(sys.argv[1] + "\n")

f.write(sys.argv[2] + "\n")

f.close()

r = redis.StrictRedis(host='192.168.102.21', port=6379)

count = r.hget(sys.argv[1],sys.argv[2])

if count:

r.hset(sys.argv[1],sys.argv[2],int(count)+1)

else:

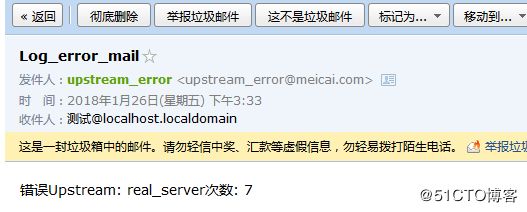

r.hset(sys.argv[1],sys.argv[2],1)还要写一个报警脚本,大致是发现304超过3时报警,需要配合crontab定时执行,如果在定义的时间内达不到3就清0,如果大于3就报警
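The alerting rule can be expressed as a small pure function, which makes the window semantics easy to check before wiring it to Redis and cron. This is a sketch, not the script that follows. It assumes the counters have already been read into a dict shaped like the Redis hashes ({hash_name: {upstream: count}}). The name check_window and the upstream "backup" in the usage lines are hypothetical. Each run reports counters strictly greater than 3 and then resets every counter to 0. As a result, a count that stays at or below 3 during one cron interval does not carry over into the next.

```python
THRESHOLD = 3  # alert when a counter is strictly greater than this


def check_window(counters, threshold=THRESHOLD):
    """Evaluate one cron interval (hypothetical helper).

    counters: {hash_name: {upstream: count}}, e.g. as read via HGETALL.
    Returns [(hash_name, upstream, count), ...] for every counter above
    the threshold, and resets all counters to 0 in place.
    """
    alerts = []
    for name, fields in counters.items():
        for upstream, count in fields.items():
            if int(count) > threshold:
                alerts.append((name, upstream, int(count)))
            # Reset regardless of the outcome so the next window starts at 0.
            fields[upstream] = 0
    return alerts


counters = {"err_304": {"real_server": 5, "backup": 2}}
print(check_window(counters))  # [('err_304', 'real_server', 5)]
print(counters)                # {'err_304': {'real_server': 0, 'backup': 0}}
```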

vim check.py

#!/usr/bin/env python3
# -*- coding: UTF-8 -*-
# Run from cron: alert on any counter above 3, then reset it for the next interval.
import smtplib
from email.header import Header
from email.mime.text import MIMEText

import redis

# decode_responses=True makes redis-py return str instead of bytes.
r = redis.StrictRedis(host='192.168.102.21', port=6379, decode_responses=True)

tengine = [
    'err_304',
]

sender = '[email protected]'
receivers = ['[email protected]']

for i in tengine:
    print(i)
    print("=============================")
    for k, v in r.hgetall(i).items():
        if int(v) > 3:
            print(k, v)
            print("error")
            # MIMEText arguments: body text, 'plain' subtype, utf-8 encoding
            mail_msg = "Error upstream: " + k + " count: " + v
            message = MIMEText(mail_msg, 'plain', 'utf-8')
            message['From'] = Header(sender)
            message['To'] = Header("Test", 'utf-8')
            subject = 'Log_error_mail'
            message['Subject'] = Header(subject, 'utf-8')
            try:
                smtpObj = smtplib.SMTP('localhost')
                smtpObj.sendmail(sender, receivers, message.as_string())
                smtpObj.quit()
                print("Mail sent successfully")
            except (smtplib.SMTPException, OSError):  # OSError covers connection refused
                print("Error: unable to send mail")
        else:
            print(k, v)
            print("ok")
        # Reset the counter whether or not it alerted, so each cron
        # interval starts from 0.
        r.hset(i, k, 0)
        print("-----------------------------------------")

4. Results