Rewriting the ansible-playbook API for multi-process asynchronous deployment

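A few notes:

- Calling Ansible is not thread-safe. First, per-user privilege switching cannot be done across multiple threads. Second, playbooks need their data isolated per process: when plugins are initialized, line 35 of /plugins/__init__.py defines three global variables. These are Ansible's caches, and they store every plugin that has finished initializing. When several threads compete for these shared globals, some threads end up using a plugin that has not finished initializing, and an error is raised.

- A dynamic inventory is used so that the API can modify it and invoke it directly.

- ansible-api implements the basic functionality of ansible and ansible-playbook (it rewrites the Ansible CLI in multi-threaded mode), then relies on its task and callback mechanisms to obtain structured return data. Finally, these operations are wrapped in an HTTP API.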
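The race described in the first note can be illustrated with a small, deterministic sketch. It is not Ansible's loader code: `PLUGIN_CACHE`, `Plugin` and `load_plugin` are hypothetical names, and the two events only force the unlucky interleaving that would otherwise happen at random. It is written for Python 3.

```python
import threading

# Hypothetical module-level cache, standing in for a shared global.
PLUGIN_CACHE = {}


class Plugin:
    def __init__(self):
        self.ready = False  # becomes True once initialization is complete


def load_plugin(name, registered, finish):
    plugin = Plugin()
    PLUGIN_CACHE[name] = plugin  # published before initialization is complete
    registered.set()             # tell the other thread the entry exists
    finish.wait()                # simulate slow initialization
    plugin.ready = True


def demo():
    registered = threading.Event()
    finish = threading.Event()
    loader = threading.Thread(target=load_plugin,
                              args=("copy", registered, finish))
    loader.start()
    registered.wait()
    # A second thread looks up the cache and gets a half-initialized plugin.
    seen_ready = PLUGIN_CACHE["copy"].ready
    finish.set()
    loader.join()
    return seen_ready, PLUGIN_CACHE["copy"].ready


if __name__ == "__main__":
    print(demo())
```

Separate processes do not share such a module-level dictionary, which is why running each playbook in its own process avoids this class of error.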

CLI invocation module:

    class Deploy():
        def run(self, monlist, osdlist):
            mon_config = MonConfig(monlist)
            osd_config = OsdConfig(osdlist)
            player = CephPlayer()
            #player.set_ssh_key_file()
            player.add_config(mon_config)
            player.add_config(osd_config)
            player.start()

CephPlayer inherits from multiprocessing.Process, so calling start() directly spawns a new process and runs the playbook asynchronously.
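A minimal sketch of this pattern, using only the standard library and written for Python 3. `DemoPlayer` and its `log_path` argument are hypothetical stand-ins rather than the real CephPlayer: the point is only that work placed in run() executes in the child process once start() is called.

```python
import json
import multiprocessing
import os
import tempfile


class DemoPlayer(multiprocessing.Process):
    """Hypothetical stand-in for CephPlayer."""

    def __init__(self, log_path):
        super().__init__()
        self.log_path = log_path
        self.configs = []

    def add_config(self, config):
        self.configs.append(config)

    def run(self):
        # After start(), this method executes in the child process.
        record = {"pid": os.getpid(), "configs": self.configs}
        with open(self.log_path, "a") as handle:
            handle.write(json.dumps(record) + "\n")


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "deploy.log")
    player = DemoPlayer(path)
    player.add_config("mons")
    player.start()  # returns immediately; the caller is not blocked
    player.join()   # only for the demo, so the file can be read back
    with open(path) as handle:
        print(handle.read())
```

In a long-running service the join() call would normally be left out, since the caller only needs start() to return.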

Dynamic inventory implementation:

    class MonConfig(AnsibleConfig):
        def __init__(self, mons):
            super(MonConfig, self).__init__(mons)
            for mon in mons:
                self.hosts.append(mon)
            self.name = 'mons'

        def make_host(self, inventory):
            if self.name not in inventory.groups:
                inventory.add_group(self.name)
            ##inventory.add_host(self.host, group=self.name)
            for host in self.hosts:
                inventory.add_host(host, group=self.name)

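First, the command module instantiates several config classes. The player then adds them and calls each one's make_host() method in turn, which adds the hosts of the corresponding group to the inventory object that is handed to the player.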
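The flow can be tried without Ansible installed using a small sketch. `FakeInventory`, `GroupConfig` and `build_inventory` are hypothetical: the fake inventory only mimics the `groups`, `add_group` and `add_host` members used in the code above, and is not Ansible's InventoryManager.

```python
class FakeInventory:
    """Hypothetical stand-in exposing only the members used above."""

    def __init__(self):
        self.groups = {}

    def add_group(self, name):
        self.groups.setdefault(name, [])

    def add_host(self, host, group):
        self.groups[group].append(host)


class GroupConfig:
    """Hypothetical config holding one group name and its hosts."""

    def __init__(self, name, hosts):
        self.name = name
        self.hosts = list(hosts)

    def make_host(self, inventory):
        if self.name not in inventory.groups:
            inventory.add_group(self.name)
        for host in self.hosts:
            inventory.add_host(host, group=self.name)


def build_inventory(configs):
    # Each config contributes its own group to one shared inventory.
    inventory = FakeInventory()
    for config in configs:
        config.make_host(inventory)
    return inventory


if __name__ == "__main__":
    inv = build_inventory([
        GroupConfig("mons", ["10.0.0.1"]),
        GroupConfig("osds", ["10.0.0.2", "10.0.0.3"]),
    ])
    print(inv.groups)
```

The host addresses are made up for the demo.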

Overriding the callback class for custom output:

    class ResultController(CallbackModule):
        def __init__(self):
            super(ResultController, self).__init__()
            #self.stats = TaskQueueManager._stats

        def _notify_task(self, result, state):
            # Record the task state without the (possibly large) result body.
            with open('/var/log/deploy1.txt', 'a') as f:
                f.write(json.dumps({
                    'type': 'task',
                    'state': state,
                    'host': result._host.name,
                    'task': result._task.get_name(),
                }))
                f.write("\n\n")

        def _notify_task_result(self, result, state):
            # Same as _notify_task, but includes the full result for diagnosis.
            with open('/var/log/deploy1.txt', 'a') as f:
                f.write(json.dumps({
                    'type': 'task',
                    'state': state,
                    'host': result._host.name,
                    'task': result._task.get_name(),
                    'result': result._result
                }))
                f.write("\n\n")

        def v2_runner_on_ok(self, result, **kwargs):
            self._notify_task(result, 'ok')

        def v2_runner_on_unreachable(self, result):
            self._notify_task_result(result, 'unreachable')

        def v2_runner_on_failed(self, result, *args, **kwargs):
            self._notify_task_result(result, 'failed')

        def v2_runner_on_skipped(self, result):
            self._notify_task(result, 'skipped')

        def v2_playbook_on_start(self, playbook):
            # Mode 'w' truncates the log at the start of each playbook run.
            with open('/var/log/deploy1.txt', 'w') as f:
                f.write(json.dumps({
                    'type': 'playbook_start',
                    'file': playbook._file_name
                }))
                f.write("\n\n")

        def v2_playbook_on_play_start(self, play):
            with open('/var/log/deploy1.txt', 'a') as f:
                f.write(json.dumps({
                    'type': 'play_start',
                    'name': play.get_name()
                }))
                f.write("\n\n")

        def v2_playbook_on_task_start(self, task, is_conditional):
            with open('/var/log/deploy1.txt', 'a') as f:
                f.write(json.dumps({
                    'type': 'task_start',
                    'name': task.get_name()
                }))
                f.write("\n\n")

        def done(self, code, stats):
            # Final record: exit code plus the per-host counters.
            with open('/var/log/deploy1.txt', 'a') as f:
                f.write(json.dumps({
                    'type': 'exit',
                    'code': code,
                    'ok': stats.ok,
                    'failures': stats.failures,
                    'unreachable': stats.dark,
                    'skipped': stats.skipped,
                    'changed': stats.changed
                }))
                f.write("\n\n")

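Variables are not shared between processes, and passing the data through a pipe would require the main process to wait, which makes the RPC call time out and go down. The deployment output is therefore saved to a file instead. stats.py stores the task results for every host.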
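On the reading side, the main process can poll that file without blocking on the child. The sketch below assumes the format written by the callback above, one JSON object per line with blank lines in between. `read_records` and `exit_record` are hypothetical helper names, and the code is written for Python 3.

```python
import json


def read_records(path):
    """Parse JSON records separated by blank lines."""
    records = []
    with open(path) as handle:
        for line in handle:
            line = line.strip()
            if not line:
                continue
            try:
                records.append(json.loads(line))
            except ValueError:
                # A record that is still being written; stop and retry later.
                break
    return records


def exit_record(records):
    """Return the final 'exit' record, or None while the run is in progress."""
    for record in reversed(records):
        if record.get("type") == "exit":
            return record
    return None
```

A caller could invoke read_records() on each status request and treat a missing exit record as "still running".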

Wrapping PlaybookExecutor:

A few methods were added to make PlaybookExecutor simpler to call. Of these, the run method is responsible for executing the executor.

    def run(self):
        loader = DataLoader()
        options = self.options
        ##options = self._make_ansible_options()
        inventory = InventoryManager(loader=loader)
        passwords = dict(conn_pass='123456', become_pass='123456')
        result_callback = ResultController()
        # Let every config add its group and hosts to the inventory.
        for config in self.configs:
            config.make_host(inventory)
        variable_manager = VariableManager(loader=loader, inventory=inventory)
        #private_key_file = self.set_ssh_key_file(ssh_key_file)
        extra_vars = {}
        extra_vars.update(loader.load_from_file(self.all_conf))
        extra_vars.update(loader.load_from_file(self.osd_conf))
        extra_vars.update({
            'ansible_version': {
                'full': '2.4.6.0',
                'major': 2,
                'minor': 4,
                'revision': 6,
                'string': '2.5.0'
            }
        })
        ##extra_vars.update({'ansible_distribution': 'ubuntu'})
        ##extra_vars.update({'ansible_hostname': 'zwk-01'})
        variable_manager.extra_vars = extra_vars
        ret = -1
        # Build the executor before the try block so that `executor`
        # is always defined when the finally clause runs.
        executor = PlaybookExecutor(
            playbooks=[self.playbook_file],
            inventory=inventory,
            variable_manager=variable_manager,
            loader=loader,
            options=options,
            passwords=passwords,
        )
        try:
            # self._callback.clear()
            executor._tqm._stdout_callback = self._callback
            ret = executor.run()
        finally:
            # Always write the exit record, even if the run raised.
            self._callback.done(ret, executor._tqm._stats)
        ##executor._tqm._stdout_callback = ResultController()
        print('result code == %s' % ret)
        '''
        file = re.search('site.yml', self.playbook_file)
        print file
        if file and ret == 0:
            f1 = open('/etc/ceph/ceph.conf')
            info1 = f1.read()
            f2 = open('/etc/ceph/ceph.client.admin.keyring')
            info2 = f2.read()
            ##info = json.dumps(info)
            print '\033[1;32;40m'
            print info1
            print info2
            print '\033[0m'
            print type(info2)
        '''
        shutil.rmtree(C.DEFAULT_LOCAL_TMP, True)
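The `ret = -1` default combined with `finally` is what guarantees that an exit record is written even when the run raises. A stripped-down sketch of just that pattern, where `run_and_report`, `action` and `report` are hypothetical names rather than part of the wrapper above:

```python
def run_and_report(action, report):
    """Run action() and always report an exit code, even on failure."""
    ret = -1  # reported if action() raises before returning a code
    try:
        ret = action()
    finally:
        report(ret)
    return ret


if __name__ == "__main__":
    codes = []
    run_and_report(lambda: 0, codes.append)
    try:
        run_and_report(lambda: 1 // 0, codes.append)
    except ZeroDivisionError:
        pass
    print(codes)
```

The exception still propagates to the caller. The `finally` clause only ensures that whoever is watching the log sees a final record with code -1.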

Notes:

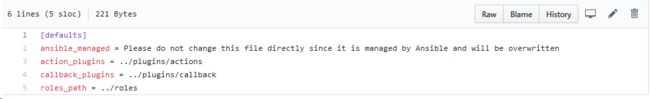

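- Everything runs fine from a Python environment, but when invoked through the API it fails with "file transfer failure". This is because the API call cannot resolve the default temporary file paths in ansible.cfg. To fix it, change the settings in that file as follows: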

remote_tmp = $HOME/.ansible/tmp

local_tmp = $HOME/.ansible/tmp

- Error:

TASK [ceph.ceph-common : generate ceph configuration file] *********************

fatal: [172.16.0.51]: FAILED! => {"failed": true, "msg": "module (config_template) is missing interpreter line"}

The cause is that the default action_plugins path in ansible.cfg was not changed. It needs to be changed to the current Ansible working path:

action_plugins = /opt/calamari/venv/lib/python2.7/site-packages/ztos-deploy/ceph-ansible/plugins/actions
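Before starting a deployment, it can help to confirm that these settings are actually present in the file the API process reads. A minimal sketch using the standard-library configparser, assuming the three keys sit in the `[defaults]` section of ansible.cfg. `read_paths` and `SAMPLE_CFG` are hypothetical names, and the sample text simply repeats the values shown above.

```python
import configparser

SAMPLE_CFG = """
[defaults]
remote_tmp = $HOME/.ansible/tmp
local_tmp = $HOME/.ansible/tmp
action_plugins = /opt/calamari/venv/lib/python2.7/site-packages/ztos-deploy/ceph-ansible/plugins/actions
"""


def read_paths(cfg_text,
               keys=("remote_tmp", "local_tmp", "action_plugins")):
    """Return the requested [defaults] settings; missing keys map to None."""
    # Interpolation is disabled so the values are returned verbatim.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    defaults = parser["defaults"]
    return {key: defaults.get(key) for key in keys}


if __name__ == "__main__":
    for key, value in read_paths(SAMPLE_CFG).items():
        print(key, "=", value)
```

A key that comes back as None means that setting is absent from the file and Ansible will fall back to its built-in default.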

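ceph-mon : collect admin and bootstrap keys failed, and start the monitor service failed:

Both failures are mainly because the monitor service did not start cleanly, so the key information could not be collected. Meanwhile, the nodes that did start could not proceed to the later steps because there were too few monitors. After a manual restart with systemctl start [email protected], the service stayed up only briefly, so even when the "start the monitor service" step succeeds, later steps still fail. Running journalctl -xe showed a message that the service was being restarted too frequently. Checking /etc/systemd/system/ceph-mon.target.wants showed that the service start interval was 30 minutes. After changing it, running systemctl daemon-reload, and restarting the service, it succeeded.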

The VariableManager class for variables:

It can be overridden, or extra_vars can be used instead. To be filled in later.