A Brief Look at Configuring HiBench SparkBench (Single Node)

I. Preface:

1. Terminology and versions:

Hadoop version: 2.7.1

HiBench version: 6.0

Spark version: 2.1.0

2. What is this post about?

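Using pagerank as the example, this post describes which parameters affect performance. The related source code can be studied in more depth afterwards.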

With the same hardware and the same Hadoop software configuration, the Hadoop vs. Spark pagerank results are as follows:

| Type | Date | Time | Input_data_size | Duration(s) | Throughput(bytes/s) | Throughput/node |
| --- | --- | --- | --- | --- | --- | --- |
| HadoopPagerank | 2018-05-18 | 17:06:47 | 1596962260 | 601.049 | 2656958 | 2656958 |
| ScalaSparkPagerank | 2018-05-28 | 16:19:28 | 1596962260 | 377.125 | 4234570 | 4234570 |

For Terasort, however, the Hadoop vs. Spark results are as follows:

| Type | Date | Time | Input_data_size | Duration(s) | Throughput(bytes/s) | Throughput/node |
| --- | --- | --- | --- | --- | --- | --- |
| HadoopTerasort | 2018-06-05 | 14:41:29 | 32000000000 | 288.466 | 110931617 | 110931617 |
| ScalaSparkTerasort | 2018-05-18 | 15:00:48 | 32000000000 | 326.053 | 98143553 | 98143553 |
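To make the two comparisons easier to read, the numbers can be reduced to a single ratio per workload. The sketch below only re-derives values from the report rows above (throughput as input size divided by duration, and Hadoop duration divided by Spark duration); the function and variable names are illustrative and are not part of HiBench.

```python
# Figures copied from the HiBench report rows above.
runs = {
    "pagerank": {"size": 1596962260, "hadoop_s": 601.049, "spark_s": 377.125},
    "terasort": {"size": 32000000000, "hadoop_s": 288.466, "spark_s": 326.053},
}


def throughput(size_bytes, duration_s):
    """Bytes processed per second, as in the Throughput(bytes/s) column."""
    return size_bytes / duration_s


def spark_speedup(run):
    """Hadoop duration divided by Spark duration; above 1 means Spark was faster."""
    return run["hadoop_s"] / run["spark_s"]


for name, run in runs.items():
    print(name,
          int(throughput(run["size"], run["spark_s"])),
          round(spark_speedup(run), 2))
```

On these runs Spark finishes pagerank roughly 1.6 times faster than Hadoop, while on Terasort it is about 13% slower, which is the motivation for looking at the tuning parameters.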

II. Overview of HiBench/conf/spark.conf:

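HiBench generates the conf file of each test case from HiBench/conf/spark.conf, for example ~/HiBench/report/pagerank/spark/conf/sparkbench/spark.conf. By default HiBench does not use $SPARK_HOME/conf/spark-defaults.conf, so settings in that file have no effect on a HiBench run. This explains a common puzzle: spark.eventLog.enabled is clearly set to true in $SPARK_HOME/conf/spark-defaults.conf, yet the History Server on port 18080 still cannot be used to inspect the run. The setting has to go into HiBench/conf/spark.conf instead.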

The key configuration parameters are:

hibench.spark.home /home/xxx/Spark

# Spark master

# standalone mode: spark://xxx:7077

# YARN mode: yarn-client

hibench.spark.master yarn

Option 1: ### this also requires modifying the script HiBench/bin/functions/workload_functions.sh

### HiBench 6.0 matches "$SPARK_MASTER" == yarn-*, which the plain value yarn does not satisfy

###diff workload_functions.sh workload_functions.sh.template

###207c207

###< if [[ "$SPARK_MASTER" == yarn* ]]; then

###---

###> if [[ "$SPARK_MASTER" == yarn-* ]]; then

Option 2: leave the script HiBench/bin/functions/workload_functions.sh unmodified and add the spark.deploy.mode setting

spark.deploy.mode yarn-cluster
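The difference between the two patterns in the diff is easy to check in isolation. The sketch below uses Python's fnmatchcase as a stand-in for the glob match that bash performs in [[ "$SPARK_MASTER" == pattern ]]; it is an approximation for illustration, not the HiBench script itself, and the helper name is made up.

```python
from fnmatch import fnmatchcase


def matches(master, pattern):
    """Approximate the bash test [[ "$SPARK_MASTER" == pattern ]] with a glob match."""
    return fnmatchcase(master, pattern)


for master in ("yarn", "yarn-client", "yarn-cluster", "spark://xxx:7077"):
    # First column: the stock pattern; second column: the relaxed pattern.
    print(master, matches(master, "yarn-*"), matches(master, "yarn*"))
```

The plain value yarn fails the stock pattern yarn-* and passes only the relaxed yarn*, while yarn-client and yarn-cluster pass both.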

# executor number and cores when running on Yarn

hibench.yarn.executor.num 4

hibench.yarn.executor.cores 11

# executor and driver memory in standalone & YARN mode

spark.executor.memory 17g

spark.driver.memory 4g

### Note 2: of the parameters below, those shown in black turn on debugging and those shown in green are for performance tuning

spark.eventLog.dir hdfs://localhost:9000/sparklogs

spark.eventLog.enabled true

spark.executor.extraJavaOptions -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:NewRatio=1

spark.io.compression.lz4.blockSize 128k

spark.memory.fraction 0.8

spark.memory.storageFraction 0.2

spark.rdd.compress true

spark.reducer.maxSizeInFlight 272m

spark.serializer org.apache.spark.serializer.KryoSerializer

spark.shuffle.service.index.cache.size 128m
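To get a feel for what spark.memory.fraction and spark.memory.storageFraction mean for a 17g executor, the sizes can be worked out by hand. The sketch below assumes the rule given in the Spark configuration documentation, namely that the unified region is (heap - 300MB) * spark.memory.fraction and that storageFraction is the share of that region protected from eviction. The parser and helper names are illustrative, and the heap the JVM actually reports is usually somewhat smaller than the configured value, so treat the results as estimates.

```python
RESERVED_MB = 300  # reserved memory subtracted before the fraction is applied


def parse_conf(text):
    """Parse whitespace-separated 'key value' lines, skipping blanks and # comments."""
    conf = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        if len(parts) == 2:
            conf[parts[0]] = parts[1].strip()
    return conf


def to_mb(size):
    """Convert sizes such as '17g' or '512m' to MiB."""
    units = {"m": 1, "g": 1024}
    return int(size[:-1]) * units[size[-1].lower()]


def memory_regions(conf):
    """Estimate the unified, storage and execution regions in MiB."""
    heap = to_mb(conf["spark.executor.memory"])
    unified = (heap - RESERVED_MB) * float(conf["spark.memory.fraction"])
    storage = unified * float(conf["spark.memory.storageFraction"])
    return {"unified_mb": unified, "storage_mb": storage,
            "execution_mb": unified - storage}


conf = parse_conf("""
spark.executor.memory 17g
spark.memory.fraction 0.8
spark.memory.storageFraction 0.2
""")
print(memory_regions(conf))
```

With the values used in this post, roughly 13.4 GiB of each executor heap is shared by execution and storage, of which about 2.7 GiB is protected for cached data.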

III. Configuration parameter notes:

1. hibench.yarn.executor.num:

The following three are the same setting under different names: --num-executors (number of executors to launch; an argument of Spark/bin/spark-submit, default 2, see SparkSubmitArguments.scala), spark.executor.instances (can live in Spark/conf/spark-defaults.conf), and hibench.yarn.executor.num (lives in HiBench/conf/hibench.conf).

2. hibench.yarn.executor.cores:

These three are analogous: --executor-cores (number of cores per executor; default 1 in YARN mode, or all available cores on the worker in standalone mode; see SparkSubmitArguments.scala), spark.executor.cores, and hibench.yarn.executor.cores.
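The executor count, cores, and memory together determine what the job requests from YARN, so it is worth checking the totals against the node. The sketch below assumes the Spark 2.1 default for spark.yarn.executor.memoryOverhead (10% of executor memory, with a 384 MB minimum). It ignores the driver or application master container and any rounding that the YARN scheduler applies, and the function names are illustrative.

```python
def executor_overhead_mb(executor_memory_mb, factor=0.10, minimum_mb=384):
    """Off-heap overhead added per executor container (assumed Spark 2.1 default)."""
    return max(int(executor_memory_mb * factor), minimum_mb)


def yarn_footprint(num_executors, cores_per_executor, executor_memory_mb):
    """Total cores and memory requested from YARN for the executors alone."""
    container_mb = executor_memory_mb + executor_overhead_mb(executor_memory_mb)
    return {
        "total_cores": num_executors * cores_per_executor,
        "container_mb": container_mb,
        "total_mb": num_executors * container_mb,
    }


# Values from this post: 4 executors, 11 cores each, 17g each.
print(yarn_footprint(4, 11, 17 * 1024))
```

For the configuration in this post that comes to 44 cores and roughly 75 GiB for the executors, which has to fit within what the NodeManager offers.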

3. spark.executor.memory (amount of memory to use per executor process)

The equivalent forms are --executor-memory and SPARK_EXECUTOR_MEMORY (in spark-env.sh). According to SparkSubmitArguments.scala, the value is resolved in the following order:

executorMemory = Option(executorMemory)

.orElse(sparkProperties.get("spark.executor.memory"))

.orElse(env.get("SPARK_EXECUTOR_MEMORY"))

.orNull
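The same first-match-wins chain can be written out as a small function to make the precedence explicit: command line first, then the Spark property, then the environment variable. This is a sketch that mirrors the Scala snippet above; the function name and the sample values are made up for illustration.

```python
def resolve_executor_memory(cli_value, spark_properties, env):
    """Return the first value that is set, in the same order as the Scala chain."""
    candidates = (
        cli_value,                                      # --executor-memory
        spark_properties.get("spark.executor.memory"),  # properties file
        env.get("SPARK_EXECUTOR_MEMORY"),               # spark-env.sh
    )
    for candidate in candidates:
        if candidate is not None:
            return candidate
    return None  # corresponds to .orNull


props = {"spark.executor.memory": "17g"}
env = {"SPARK_EXECUTOR_MEMORY": "8g"}
print(resolve_executor_memory(None, props, env))   # property beats the env variable
print(resolve_executor_memory("4g", props, env))   # command line beats both
```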

4. hibench.default.shuffle.parallelism

Because pagerank uses the FPU (floating-point unit), the value should be no less than the number of CPU cores; otherwise some cores, and their FPUs, sit idle.
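One way to reason about this value is to count how many task slots the executors provide and how many waves of tasks a given parallelism produces. The sketch below assumes one core per task (the default spark.task.cpus of 1); the function names are illustrative.

```python
import math


def task_waves(parallelism, num_executors, cores_per_executor):
    """Number of scheduling waves needed to run `parallelism` tasks."""
    slots = num_executors * cores_per_executor
    return math.ceil(parallelism / slots)


def slot_utilization(parallelism, num_executors, cores_per_executor):
    """Fraction of slot-waves that actually run a task (1.0 means no idle slots)."""
    slots = num_executors * cores_per_executor
    waves = task_waves(parallelism, num_executors, cores_per_executor)
    return parallelism / (waves * slots)


# With 4 executors of 11 cores there are 44 slots.
for parallelism in (22, 44, 45, 88):
    print(parallelism,
          task_waves(parallelism, 4, 11),
          round(slot_utilization(parallelism, 4, 11), 2))
```

A parallelism below 44 leaves cores idle in this setup, and a value just above a multiple of 44 adds a nearly empty extra wave.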

5. SPARK_LOCAL_DIRS

export SPARK_LOCAL_DIRS=/home/xxx/data/tmp,/home/xxx/ssd2/tmp
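The value is a comma-separated list of directories, ideally on different disks. A stray space after the comma breaks an unquoted shell export, so a quick check of the string is cheap insurance. The helpers below are an illustrative sketch, not Spark code.

```python
def split_local_dirs(value):
    """Split a SPARK_LOCAL_DIRS value on commas and trim each entry."""
    return [entry.strip() for entry in value.split(",") if entry.strip()]


def has_stray_whitespace(value):
    """True if the raw value contains whitespace that an unquoted export would split on."""
    return any(ch.isspace() for ch in value)


good = "/home/xxx/data/tmp,/home/xxx/ssd2/tmp"
bad = "/home/xxx/data/tmp, /home/xxx/ssd2/tmp"
print(split_local_dirs(good), has_stray_whitespace(good))
print(split_local_dirs(bad), has_stray_whitespace(bad))
```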

6. Worker-related parameters:

# - SPARK_WORKER_CORES, to set the number of cores to use on this machine

# - SPARK_WORKER_MEMORY, to set how much total memory workers have to give executors (e.g. 1000m, 2g)

# - SPARK_WORKER_INSTANCES, to set the number of worker processes per node

# - SPARK_WORKER_DIR, to set the working directory of worker processes

For example:

export SCALA_HOME=/home/hadoopuser/.local/bin/scala-2.11.12

export JAVA_HOME=/home/hadoopuser/.local/bin/jdk8u-server-release-1708

export HADOOP_HOME=/home/hadoopuser/.local/bin/Hadoop

export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export SPARK_HOME=/home/hadoopuser/.local/bin/Spark

export SPARK_LOCAL_DIRS=/home/hadoopuser/data/tmp,/home/hadoopuser/ssd2/tmp

export SPARK_MASTER_HOST=localhost

export SPARK_MASTER_PORT=7077

export SPARK_WORKER_CORES=46

export SPARK_WORKER_INSTANCES=2

export SPARK_WORKER_MEMORY=80g

export SPARK_WORKER_DIR=/home/hadoopuser/data/tmp/worker,/home/hadoopuser/ssd2/tmp/work

IV. Analysis methods:

1. Switch to DEBUG logging:

In log4j.properties, change log4j.rootCategory=INFO, console to log4j.rootCategory=DEBUG, console

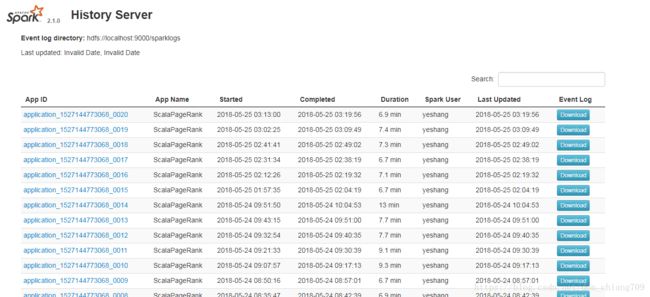

2. Enable spark.eventLog.enabled (remember to create the directory hdfs://localhost:9000/sparklogs)

The History Server page then shows the values of the configuration parameters, the stages, and the time each stage took.
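The same stage timings can also be pulled out of the event log file directly. The sketch below assumes the log is one JSON object per line and that stage completion events carry "Stage Info" with "Stage ID", "Submission Time" and "Completion Time" in milliseconds. Those field names are my understanding of the Spark 2.x event log and should be checked against a real file; the sample lines are made up.

```python
import json


def stage_durations(event_log_lines):
    """Map stage ID to duration in seconds from SparkListenerStageCompleted events."""
    durations = {}
    for line in event_log_lines:
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        if event.get("Event") != "SparkListenerStageCompleted":
            continue
        info = event["Stage Info"]
        start = info.get("Submission Time")
        end = info.get("Completion Time")
        if start is not None and end is not None:
            durations[info["Stage ID"]] = (end - start) / 1000.0
    return durations


# Hypothetical sample lines, trimmed to the fields used above.
sample = [
    '{"Event":"SparkListenerStageCompleted","Stage Info":'
    '{"Stage ID":0,"Submission Time":1000,"Completion Time":4500}}',
    '{"Event":"SparkListenerJobEnd","Job ID":0}',
]
print(stage_durations(sample))
```

Sorting the result by duration gives a quick list of the stages worth examining first.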

3. Analyze the application logs under Hadoop/logs/userlogs/.

V. References:

http://spark.apache.org/docs/2.1.0/configuration.html