Docker--cgroup

cgroup

Cgroups, short for control groups, is a Linux kernel mechanism for limiting, accounting for, and isolating the physical resources (such as CPU, memory, and I/O) used by groups of processes.

CPU limits

1. Start Docker and check that cgroups are mounted

[root@server1 ~]# systemctl start docker

[root@server1 ~]# mount -t cgroup

2. Hierarchy paths of the cgroup subsystems

[root@server1 ~]# cd /sys/fs/cgroup/

[root@server1 cgroup]# ll

total 0

drwxr-xr-x 4 root root 0 Jun 4 10:18 blkio

lrwxrwxrwx 1 root root 11 Jun 4 10:18 cpu -> cpu,cpuacct

lrwxrwxrwx 1 root root 11 Jun 4 10:18 cpuacct -> cpu,cpuacct

drwxr-xr-x 4 root root 0 Jun 4 10:18 cpu,cpuacct

drwxr-xr-x 2 root root 0 Jun 4 10:18 cpuset

drwxr-xr-x 4 root root 0 Jun 4 10:18 devices

drwxr-xr-x 2 root root 0 Jun 4 10:18 freezer

drwxr-xr-x 2 root root 0 Jun 4 10:18 hugetlb

drwxr-xr-x 4 root root 0 Jun 4 10:18 memory

lrwxrwxrwx 1 root root 16 Jun 4 10:18 net_cls -> net_cls,net_prio

drwxr-xr-x 2 root root 0 Jun 4 10:18 net_cls,net_prio

lrwxrwxrwx 1 root root 16 Jun 4 10:18 net_prio -> net_cls,net_prio

drwxr-xr-x 2 root root 0 Jun 4 10:18 perf_event

drwxr-xr-x 2 root root 0 Jun 4 10:18 pids

drwxr-xr-x 4 root root 0 Jun 4 10:18 systemd

3. The CPU control group

[root@server1 cgroup]# cd cpu

[root@server1 cpu]# ls

cgroup.clone_children cpuacct.usage cpu.rt_runtime_us system.slice

cgroup.event_control cpuacct.usage_percpu cpu.shares tasks

cgroup.procs cpu.cfs_period_us cpu.stat user.slice

cgroup.sane_behavior cpu.cfs_quota_us notify_on_release

cpuacct.stat cpu.rt_period_us release_agent

[root@server1 cpu]# mkdir x1

[root@server1 cpu]# cd x1/

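[root@server1 x1]# ls ## the kernel populates the new cgroup with the parent's control files (root-only files such as release_agent are not repeated)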

cgroup.clone_children cpuacct.usage cpu.rt_period_us notify_on_release

cgroup.event_control cpuacct.usage_percpu cpu.rt_runtime_us tasks

cgroup.procs cpu.cfs_period_us cpu.shares

cpuacct.stat cpu.cfs_quota_us cpu.stat

[root@server1 x1]# cat cpu.cfs_period_us

100000

[root@server1 x1]# cat cpu.cfs_quota_us

-1 ## -1 means no limit

[root@server1 x1]# echo 20000 > cpu.cfs_quota_us

[root@server1 x1]# cat cpu.cfs_quota_us

20000 ## 20000 is one fifth of the 100000 period, so the limit is 20% of one CPU

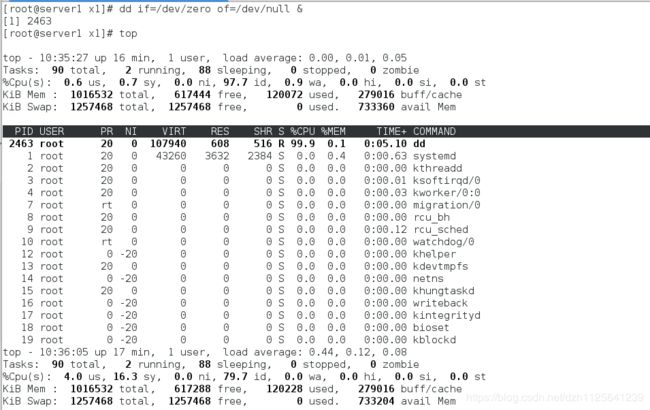

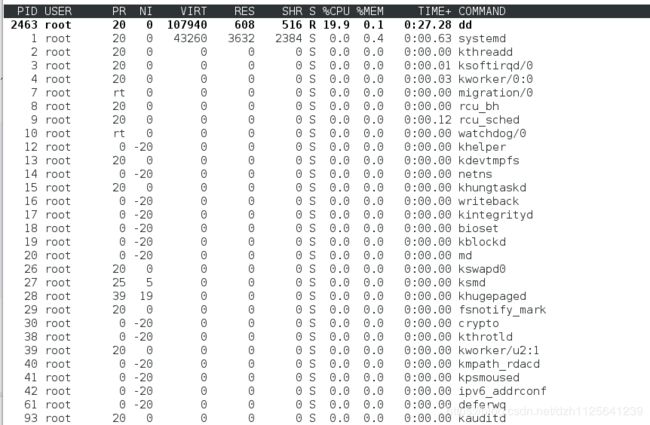
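The quota arithmetic above can be sketched as a small shell function. This is only an illustration: `cpu_percent` is a hypothetical helper name, and both arguments are assumed to be in microseconds, as in `cpu.cfs_quota_us` and `cpu.cfs_period_us`.

```shell
# Express a CFS quota as a percentage of one CPU.
# cpu_percent is a hypothetical helper; arguments are in microseconds.
cpu_percent() {
    quota_us=$1
    period_us=$2
    if [ "$quota_us" -lt 0 ]; then
        echo "unlimited"            # -1 means no quota is enforced
    else
        echo $(( quota_us * 100 / period_us ))
    fi
}

cpu_percent 20000 100000    # the values used above, prints 20
cpu_percent -1 100000       # the default, prints unlimited
```

A quota larger than the period gives a result above 100, which corresponds to more than one full CPU on a multi-core host.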

[root@server1 x1]# dd if=/dev/zero of=/dev/null &

[1] 2463

[root@server1 x1]# echo 2463 > tasks

top ## the dd process is now capped at about 20% CPU

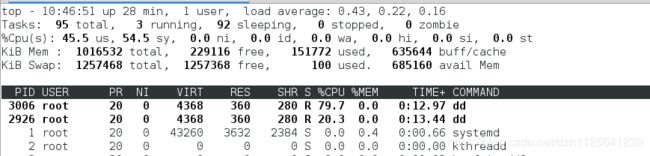
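The manual steps above (create the directory, write the quota, write the PID to `tasks`) can be wrapped in one function. This is a minimal sketch, assuming a cgroup v1 host and root privileges; `limit_cpu` is a hypothetical name, not an existing tool.

```shell
# Create a cgroup directory, set its CFS quota, and move a process into it.
# limit_cpu is a hypothetical helper. On a cgroup v1 host, pass a directory
# under /sys/fs/cgroup/cpu and run as root; the kernel creates the control
# files when the directory is made.
limit_cpu() {
    cg_dir=$1      # e.g. /sys/fs/cgroup/cpu/x1
    quota_us=$2    # e.g. 20000
    pid=$3         # process to restrict
    mkdir -p "$cg_dir" || return 1
    echo "$quota_us" > "$cg_dir/cpu.cfs_quota_us" || return 1
    echo "$pid" > "$cg_dir/tasks"
}
```

For example, after starting `dd if=/dev/zero of=/dev/null &`, calling `limit_cpu /sys/fs/cgroup/cpu/x1 20000 $!` would repeat the experiment shown above in one step.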

[root@server1 ~]# docker run -it --name vm1 --cpu-quota=20000 ubuntu

root@21a931db8acc:/# dd if=/dev/zero of=/dev/null &

[root@server1 ~]# docker run -it --name vm2 ubuntu

root@5bb40832dd8a:/# dd if=/dev/zero of=/dev/null &

[root@server1 cpu]# cd docker/

[root@server1 docker]# ls

a780df0fc198afa1d65ddf68e42287cf792efd279b344104ee73c891aa5ac50f

[root@server1 docker]# cd a780df0fc198afa1d65ddf68e42287cf792efd279b344104ee73c891aa5ac50f/

[root@server1 a780df0fc198afa1d65ddf68e42287cf792efd279b344104ee73c891aa5ac50f]# cat cpu.cfs_quota_us

20000
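To find the cgroup directory of one particular container rather than browsing the `docker/` directory, the full container ID can be combined with the path layout seen above. A small sketch follows; `container_quota_file` is a hypothetical helper, and the layout is assumed to match the listing above (other cgroup drivers may place containers elsewhere).

```shell
# Build the path to a container's CPU quota file from its full ID.
# container_quota_file is a hypothetical helper; the layout matches the
# listing above and may differ under other cgroup drivers.
container_quota_file() {
    echo "/sys/fs/cgroup/cpu/docker/$1/cpu.cfs_quota_us"
}

# On the host (requires Docker and a container named vm1):
#   cat "$(container_quota_file "$(docker inspect --format '{{.Id}}' vm1)")"
```

`docker inspect --format '{{.Id}}'` prints the full 64-character ID, which is the directory name Docker uses here.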

Memory limits

The following commands run in /sys/fs/cgroup/memory/x2, a cgroup created in the memory hierarchy the same way as x1 above.

[root@server1 x2]# cat memory.limit_in_bytes

9223372036854771712 ## default value, effectively unlimited

[root@server1 x2]# echo 314572800 > memory.limit_in_bytes
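The value 314572800 written above is 300 MiB expressed in bytes. As a quick sketch of the conversion (the function name `mib_to_bytes` is hypothetical):

```shell
# Convert a size in MiB to bytes for memory.limit_in_bytes.
# mib_to_bytes is a hypothetical helper name.
mib_to_bytes() {
    echo $(( $1 * 1024 * 1024 ))
}

mib_to_bytes 300    # prints 314572800, the limit used above
mib_to_bytes 400    # prints 419430400, the size dd writes in the test below
```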

[root@server1 x2]# cd /dev/shm/ ## /dev/shm is a tmpfs, so files written here consume memory

[root@server1 shm]# ls

[root@server1 shm]# dd if=/dev/zero of=bigfile bs=1M count=400

400+0 records in

400+0 records out

419430400 bytes (419 MB) copied, 0.244224 s, 1.7 GB/s ## not limited: this dd process was never placed in the x2 cgroup

[root@server1 shm]# yum search cgroup

[root@server1 shm]# yum install libcgroup-tools.x86_64 -y

[root@server1 shm]# free -m

total used free shared buff/cache available

Mem: 992 125 525 7 341 710

Swap: 1023 5 1018

[root@server1 shm]# cgexec -g memory:x2 dd if=/dev/zero of=bigfile bs=1M count=400

400+0 records in

400+0 records out

419430400 bytes (419 MB) copied, 0.245783 s, 1.7 GB/s

[root@server1 shm]# free -m

total used free shared buff/cache available

Mem: 992 129 226 301 636 410

Swap: 1023 111 912 ## the limit works: only about 300 MB of RAM is used, and the remainder went to swap

[root@server1 shm]# cd /sys/fs/cgroup/memory/x2/

[root@server1 x2]# cat memory.memsw.limit_in_bytes

9223372036854771712

[root@server1 shm]# rm -f bigfile

[root@server1 x2]# echo 314572800 > memory.memsw.limit_in_bytes

[root@server1 shm]# cgexec -g memory:x2 dd if=/dev/zero of=bigfile bs=1M count=400

Killed ## RAM plus swap is now capped at 300 MB, so the process is killed when it exceeds the limit

Container resource limits

1. Limit container memory

[root@server1 ~]# docker run -it --name vm1 --memory=300M --memory-swap=300M ubuntu

root@b232ef01b673:/#

root@b232ef01b673:/# free -m

total used free shared buffers cached

Mem: 992 402 590 12 0 248

-/+ buffers/cache: 153 839

Swap: 1227 0 1227

root@b232ef01b673:/# dd if=/dev/zero of=bigfile bs=1M count=400

400+0 records in

400+0 records out

419430400 bytes (419 MB) copied, 2.77425 s, 151 MB/s

root@b232ef01b673:/# free -m

total used free shared buffers cached

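Mem: 992 699 293 12 0 533 ## the limit is in effect (only about 300 MB can be used), but isolation is incomplete: the total shown is the host's 992 MB rather than 300 MB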

-/+ buffers/cache: 164 827

Swap: 1227 0 1227
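Because `free` reports the host totals here, the limit that actually applies has to be read from the cgroup itself. A minimal sketch, assuming the cgroup v1 file `memory.limit_in_bytes` is readable at the path given; `limit_mib` is a hypothetical helper name.

```shell
# Print a cgroup v1 memory limit in MiB, given the path to a limit file.
# limit_mib is a hypothetical helper name.
limit_mib() {
    bytes=$(cat "$1") || return 1
    echo $(( bytes / 1024 / 1024 ))
}

# Inside a container on a cgroup v1 host, the file is typically at:
#   limit_mib /sys/fs/cgroup/memory/memory.limit_in_bytes
```

For the container started above with `--memory=300M`, this would be expected to report 300 rather than the 992 shown by `free`.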

2. Improve isolation with lxcfs

[root@server1 ~]# yum install lxcfs-2.0.5-3.el7.centos.x86_64.rpm -y

[root@server1 ~]# cd /var/lib/lxcfs/

[root@server1 lxcfs]# ls

[root@server1 lxcfs]# cd

[root@server1 ~]# lxcfs /var/lib/lxcfs/ &

[1] 2541

[root@server1 ~]# hierarchies:

0: fd: 5: net_prio,net_cls

1: fd: 6: devices

2: fd: 7: blkio

3: fd: 8: freezer

4: fd: 9: pids

5: fd: 10: perf_event

6: fd: 11: memory

7: fd: 12: cpuset

8: fd: 13: cpuacct,cpu

9: fd: 14: hugetlb

10: fd: 15: name=systemd

[root@server1 ~]# cd /var/lib/lxcfs/

[root@server1 lxcfs]# ls

cgroup proc

[root@server1 lxcfs]# cd proc/

[root@server1 proc]# ls

cpuinfo diskstats meminfo stat swaps uptime

[root@server1 proc]# docker run -it -m 300m \

> -v /var/lib/lxcfs/proc/cpuinfo:/proc/cpuinfo:rw \

> -v /var/lib/lxcfs/proc/diskstats:/proc/diskstats:rw \

> -v /var/lib/lxcfs/proc/meminfo:/proc/meminfo:rw \

> -v /var/lib/lxcfs/proc/stat:/proc/stat:rw \

> -v /var/lib/lxcfs/proc/swaps:/proc/swaps:rw \

> -v /var/lib/lxcfs/proc/uptime:/proc/uptime:rw \

> ubuntu

root@e1c1c580defa:/# free -m

total used free shared buffers cached

Mem: 300 2 297 12 0 0

-/+ buffers/cache: 2 297

Swap: 300 0 300
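The six `-v` options above all follow the same pattern, so they can be generated from the contents of the lxcfs `proc` directory. This is a sketch only: `lxcfs_mount_args` is a hypothetical helper, and it assumes the file names contain no spaces.

```shell
# Print one "-v src:/proc/name:rw" option per file found in an lxcfs proc directory.
# lxcfs_mount_args is a hypothetical helper name.
lxcfs_mount_args() {
    lxcfs_proc=$1                      # e.g. /var/lib/lxcfs/proc
    for f in "$lxcfs_proc"/*; do
        [ -e "$f" ] || continue        # skip the unexpanded glob if the directory is empty
        name=$(basename "$f")
        printf -- '-v %s:/proc/%s:rw ' "$f" "$name"
    done
}

# Usage on the host (left unquoted on purpose so the options are split into words):
#   docker run -it -m 300m $(lxcfs_mount_args /var/lib/lxcfs/proc) ubuntu
```

This keeps the command in step with whatever files lxcfs exposes, at the cost of mounting every file in that directory rather than a hand-picked subset.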