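Big data:

Structured data: has metadata and constraints

Semi-structured data: has metadata but no constraints

Unstructured data: has no metadata

Search engine: a search component and an indexing component

Spider (crawler) program:

Storage:

Analysis and processing: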

2003: The Google File System

2004: MapReduce: Simplified Data Processing on Large Clusters

2006: Bigtable: A Distributed Storage System for Structured Data

HDFS + MapReduce = Hadoop

HBase

Nutch

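Batch processing:

Functional programming:

Lisp and ML are functional programming languages; both support higher-order functions

map, fold

map:

map(f())

map: takes a function as its argument and applies it to every element of a list, producing a list of results

fold:

Takes two arguments, a function and an initial value; the function combines the running result with each list element in turn, yielding a single value

fold(g(),init)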

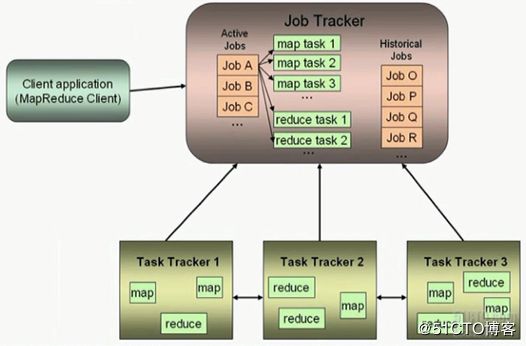
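As a minimal sketch of the two higher-order functions described above, the following Python snippet uses the built-in `map` and `functools.reduce` (Python's name for fold). The functions `square` and `add` and the sample list are illustrative assumptions, not part of the original notes.

```python
from functools import reduce

def square(x):
    """f: applied independently to every element."""
    return x * x

def add(acc, x):
    """g: combines the running result with the next element."""
    return acc + x

numbers = [1, 2, 3, 4]

# map(f): apply f to each element, producing a new list of results
squares = list(map(square, numbers))

# fold(g, init): start from init and combine the elements one by one
total = reduce(add, squares, 0)

print(squares)
print(total)
```

Because `square` touches each element independently, the map step can be spread across many machines; only the fold step has to bring results together.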

mapreduce:

mapper:

reducer:

shuffle and sort
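The three phases named above can be imitated in a single process. This is only a sketch under simplifying assumptions (in-memory data, one reducer, whitespace tokenization); the function names `mapper`, `shuffle_and_sort` and `reducer` are illustrative and are not Hadoop APIs.

```python
from collections import defaultdict

def mapper(line):
    """Emit a (word, 1) pair for every whitespace-separated token."""
    for word in line.split():
        yield word, 1

def shuffle_and_sort(pairs):
    """Group values by key and return the groups in key order."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())

def reducer(key, values):
    """Collapse all values of one key into a single count."""
    return key, sum(values)

lines = ["swap swap defaults 0 0", "/home xfs defaults 0 0"]

# Map phase: every input line is processed independently
mapped = [pair for line in lines for pair in mapper(line)]

# Shuffle and sort, then reduce each key group
counts = dict(reducer(key, values) for key, values in shuffle_and_sort(mapped))
print(counts)
```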

MRv1 (Hadoop 1) --> MRv2 (Hadoop 2)

MRv1: Cluster resource manager, Data processing

MRv2:

YARN: Cluster resource manager

MRv2: Data processing

MR:batch

Tez:execution engine

RM:Resource Manager

NM:Node Manager

AM:Application Master

container: runs MapReduce tasks

Hadoop website: http://hadoop.apache.org

Hadoop Distribution:

Cloudera:CDH

Hortonworks:HDP

Intel:IDH

MapR

Hadoop installation modes:

Standalone mode: for testing

Pseudo-distributed mode: all daemons run on a single host

Distributed mode: a cluster

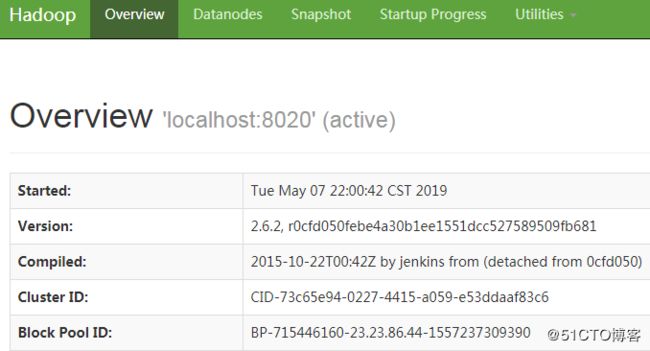

Hadoop is written in Java

JDK: 1.6, 1.7, 1.8

hadoop-2.6.2: JDK 1.6+

hadoop-2.7

Applications Run Natively IN Hadoop:

User Commands

dfs

fetchdt

fsck

version

Administration Commands

balancer

datanode

dfsadmin

mover

namenode

secondarynamenode

Daemons in a Hadoop cluster:

HDFS:

NameNode,NN

SecondaryNameNode,SNN

DataNode,DN

/data/hadoop/hdfs/{nn,snn,dn}

nn: fsimage,editlog

hadoop-daemon.sh

start|stop

YARN:

ResourceManager

NodeManager

yarn-daemon.sh start|stop

Ambari: an officially provided tool for deploying Hadoop automatically

Deploying Hadoop in pseudo-distributed mode on a single node:

Lab environment:

Hostname: node1.smoke.com

Operating system: CentOS 7.5

Kernel version: 3.10.0-862.el7.x86_64

NIC 1: 172.16.100.67

node1:

[root@node1 ~]# hostname

node1.smoke.com

[root@node1 ~]# ip addr show

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:67:e5:70 brd ff:ff:ff:ff:ff:ff

inet 172.16.100.67/24 brd 172.16.100.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::7382:eaed:fa1f:633f/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens37: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:67:e5:7a brd ff:ff:ff:ff:ff:ff

inet 192.168.243.128/24 brd 192.168.243.255 scope global noprefixroute dynamic ens37

valid_lft 1773sec preferred_lft 1773sec

inet6 fe80::9612:1176:5405:40dd/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@node1 ~]# ip route show

default via 192.168.243.2 dev ens37 proto dhcp metric 101

172.16.100.0/24 dev ens33 proto kernel scope link src 172.16.100.67 metric 100

192.168.243.0/24 dev ens37 proto kernel scope link src 192.168.243.128 metric 101

[root@node1 ~]# crontab -l

*/5 * * * * /usr/sbin/ntpdate ntp1.aliyun.com &> /dev/null

[root@node1 ~]# yum -y install java

[root@node1 ~]# java -version

openjdk version "1.8.0_212"

OpenJDK Runtime Environment (build 1.8.0_212-b04)

OpenJDK 64-Bit Server VM (build 25.212-b04, mixed mode)

[root@node1 ~]# vim /etc/profile.d/java.sh

export JAVA_HOME=/usr

[root@node1 ~]# yum -y install java-1.8.0-openjdk-devel

[root@node1 ~]# ls -lh

总用量 187M

-rw-------. 1 root root 1.3K 5月 4 06:20 anaconda-ks.cfg

-rw-r--r--. 1 root root 187M 5月 5 20:57 hadoop-2.6.2.tar.gz

[root@node1 ~]# mkdir /bdapps

[root@node1 ~]# tar xf hadoop-2.6.2.tar.gz -C /bdapps/

[root@node1 ~]# cd /bdapps/

[root@node1 bdapps]# ln -sv hadoop-2.6.2 hadoop

[root@node1 bdapps]# cd hadoop

[root@node1 hadoop]# vim /etc/profile.d/hadoop.sh

export HADOOP_PREFIX=/bdapps/hadoop

export PATH=$PATH:${HADOOP_PREFIX}/bin:${HADOOP_PREFIX}/sbin

export HADOOP_YARN_HOME=${HADOOP_PREFIX}

export HADOOP_MAPRED_HOME=${HADOOP_PREFIX}

export HADOOP_COMMON_HOME=${HADOOP_PREFIX}

export HADOOP_HDFS_HOME=${HADOOP_PREFIX}

[root@node1 hadoop]# . /etc/profile.d/hadoop.sh

[root@node1 hadoop]# groupadd hadoop

[root@node1 hadoop]# useradd -g hadoop yarn

[root@node1 hadoop]# useradd -g hadoop hdfs

[root@node1 hadoop]# useradd -g hadoop mapred

[root@node1 hadoop]# mkdir -pv /data/hadoop/hdfs/{nn,snn,dn}

[root@node1 hadoop]# chown -R hdfs:hadoop /data/hadoop/hdfs/

[root@node1 hadoop]# mkdir logs

[root@node1 hadoop]# chmod g+w logs

[root@node1 hadoop]# chown -R yarn:hadoop logs

[root@node1 hadoop]# chown -R yarn:hadoop ./*

[root@node1 hadoop]# cd etc/hadoop/

[root@node1 hadoop]# vim core-site.xml

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:8020</value>
        <final>true</final>
    </property>
</configuration>

[root@node1 hadoop]# vim hdfs-site.xml

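<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:///data/hadoop/hdfs/nn</value>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:///data/hadoop/hdfs/dn</value>
    </property>
    <property>
        <name>fs.checkpoint.dir</name>
        <value>file:///data/hadoop/hdfs/snn</value>
    </property>
    <property>
        <name>fs.checkpoint.edits.dir</name>
        <value>file:///data/hadoop/hdfs/snn</value>
    </property>
</configuration>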

[root@node1 hadoop]# cp mapred-site.xml.template mapred-site.xml

[root@node1 hadoop]# vim mapred-site.xml

<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>

[root@node1 hadoop]# chown yarn:hadoop mapred-site.xml

[root@node1 hadoop]# vim yarn-site.xml

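<configuration>
    <property>
        <name>yarn.resourcemanager.address</name>
        <value>localhost:8032</value>
    </property>
    <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>localhost:8030</value>
    </property>
    <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>localhost:8031</value>
    </property>
    <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>localhost:8033</value>
    </property>
    <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>localhost:8088</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
    </property>
    <property>
        <name>yarn.resourcemanager.scheduler.class</name>
        <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>
    </property>
</configuration>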

[root@node1 hadoop]# cat slaves

localhost
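Each of the `*-site.xml` files edited above is a list of name/value properties inside a `<configuration>` element. As a rough sketch (not part of the original walkthrough), such a file could be generated from a Python dict with the standard-library `xml.etree.ElementTree`. The helper name `render_site_xml` is hypothetical, and the output is a single unindented line.

```python
import xml.etree.ElementTree as ET

def render_site_xml(properties):
    """Build a <configuration> document from {name: value} pairs."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    return ET.tostring(root, encoding="unicode")

# Same single property as the core-site.xml used in this section
core_site = render_site_xml({"fs.defaultFS": "hdfs://localhost:8020"})
print(core_site)
```

Generating the files this way is mainly useful when the same settings must be written to several hosts with only the host name changing.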

[root@node1 hadoop]# su - hdfs

[hdfs@node1 ~]$ hdfs namenode -format

[hdfs@node1 ~]$ ls /data/hadoop/hdfs/nn/

current

[hdfs@node1 ~]$ ls /data/hadoop/hdfs/nn/current/

fsimage_0000000000000000000 fsimage_0000000000000000000.md5 seen_txid VERSION

[hdfs@node1 ~]$ hadoop-daemon.sh start namenode

[hdfs@node1 ~]$ ls /bdapps/hadoop/logs/

hadoop-hdfs-namenode-node1.smoke.com.log hadoop-hdfs-namenode-node1.smoke.com.out SecurityAuth-hdfs.audit

[hdfs@node1 ~]$ jps

34165 NameNode

34278 Jps

[hdfs@node1 ~]$ jps -v

34324 Jps -Dapplication.home=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.212.b04-0.el7_6.x86_64 -Xms8m

34165 NameNode -Dproc_namenode -Xmx1000m -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/bdapps/hadoop/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/bdapps/hadoop -Dhadoop.id.str=hdfs -Dhadoop.root.logger=INFO,console -Djava.library.path=/bdapps/hadoop/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/bdapps/hadoop/logs -Dhadoop.log.file=hadoop-hdfs-namenode-node1.smoke.com.log -Dhadoop.home.dir=/bdapps/hadoop -Dhadoop.id.str=hdfs -Dhadoop.root.logger=INFO,RFA -Djava.library.path=/bdapps/hadoop/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS

[hdfs@node1 ~]$ hadoop-daemon.sh start secondarynamenode

[hdfs@node1 ~]$ jps

34165 NameNode

34393 Jps

34350 SecondaryNameNode

[hdfs@node1 ~]$ hadoop-daemon.sh start datanode

[hdfs@node1 ~]$ jps

34419 DataNode

34165 NameNode

34350 SecondaryNameNode

34495 Jps

[hdfs@node1 ~]$ hdfs dfs -ls /

[hdfs@node1 ~]$ hdfs dfs -mkdir /test

[hdfs@node1 ~]$ hdfs dfs -ls /

Found 1 items

drwxr-xr-x - hdfs supergroup 0 2019-05-07 22:08 /test

[hdfs@node1 ~]$ hdfs dfs -put /etc/fstab /user/hdfs/fstab

[hdfs@node1 ~]$ hdfs dfs -put /etc/fstab /test/fstab

[hdfs@node1 ~]$ hdfs dfs -lsr /

lsr: DEPRECATED: Please use 'ls -R' instead.

drwxr-xr-x - hdfs supergroup 0 2019-05-07 22:11 /test

-rw-r--r-- 1 hdfs supergroup 541 2019-05-07 22:11 /test/fstab

[hdfs@node1 ~]$ ls /data/hadoop/hdfs/dn/current/BP-715446160-23.23.86.44-1557237309390/current/finalized/subdir0/subdir0/

blk_1073741825 blk_1073741825_1001.meta

[hdfs@node1 ~]$ file /data/hadoop/hdfs/dn/current/BP-715446160-23.23.86.44-1557237309390/current/finalized/subdir0/subdir0/blk_1073741825

/data/hadoop/hdfs/dn/current/BP-715446160-23.23.86.44-1557237309390/current/finalized/subdir0/subdir0/blk_1073741825: ASCII text

[hdfs@node1 ~]$ cat /data/hadoop/hdfs/dn/current/BP-715446160-23.23.86.44-1557237309390/current/finalized/subdir0/subdir0/blk_1073741825

#

# /etc/fstab

# Created by anaconda on Sat May 4 06:14:49 2019

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=6f08f050-07b8-4179-81af-5c134cc98879 /boot xfs defaults 0 0

/dev/mapper/centos-home /home xfs defaults 0 0

/dev/mapper/centos-swap swap swap defaults 0 0

[hdfs@node1 ~]$ hdfs dfs -cat /test/fstab

#

# /etc/fstab

# Created by anaconda on Sat May 4 06:14:49 2019

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=6f08f050-07b8-4179-81af-5c134cc98879 /boot xfs defaults 0 0

/dev/mapper/centos-home /home xfs defaults 0 0

/dev/mapper/centos-swap swap swap defaults 0 0

[hdfs@node1 ~]$ exit

[root@node1 hadoop]# su - yarn

[yarn@node1 ~]$ yarn-daemon.sh start resourcemanager

[yarn@node1 ~]$ jps

70105 ResourceManager

70333 Jps

[yarn@node1 ~]$ yarn-daemon.sh start nodemanager

[root@node1 hadoop]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:50020 *:*

LISTEN 0 128 *:50090 *:*

LISTEN 0 128 127.0.0.1:8020 *:*

LISTEN 0 128 *:50070 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 50 *:50010 *:*

LISTEN 0 128 *:50075 *:*

LISTEN 0 128 ::ffff:127.0.0.1:8030 :::*

LISTEN 0 128 ::ffff:127.0.0.1:8031 :::*

LISTEN 0 128 :::40352 :::*

LISTEN 0 128 ::ffff:127.0.0.1:8032 :::*

LISTEN 0 128 ::ffff:127.0.0.1:8033 :::*

LISTEN 0 128 :::8040 :::*

LISTEN 0 128 :::8042 :::*

LISTEN 0 128 :::22 :::*

LISTEN 0 128 ::ffff:127.0.0.1:8088 :::*

LISTEN 0 100 ::1:25 :::*

LISTEN 0 50 :::13562 :::*

Open 172.16.100.67:50070 in a browser on the Windows host.

[root@node1 hadoop]# firefox localhost:8088 &

Run a test job:

[root@node1 mapreduce]# su - hdfs

[hdfs@node1 ~]$ yarn jar /bdapps/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.2.jar wordcount /test/fstab /test/fstab.out

[hdfs@localhost ~]$ hdfs dfs -ls /test

Found 2 items

-rw-r--r-- 1 hdfs supergroup 541 2019-05-07 22:11 /test/fstab

drwxr-xr-x - hdfs supergroup 0 2019-05-13 20:18 /test/fstab.out

[hdfs@localhost ~]$ hdfs dfs -ls /test/fstab.out

Found 2 items

-rw-r--r-- 1 hdfs supergroup 0 2019-05-13 20:18 /test/fstab.out/_SUCCESS

-rw-r--r-- 1 hdfs supergroup 430 2019-05-13 20:18 /test/fstab.out/part-r-00000

[hdfs@localhost ~]$ hdfs dfs -cat /test/fstab.out/part-r-00000

# 7

'/dev/disk' 1

/ 1

/boot 1

/dev/mapper/centos-home 1

/dev/mapper/centos-root 1

/dev/mapper/centos-swap 1

/etc/fstab 1

/home 1

0 8

06:14:49 1

2019 1

4 1

Accessible 1

Created 1

May 1

Sat 1

See 1

UUID=6f08f050-07b8-4179-81af-5c134cc98879 1

anaconda 1

and/or 1

are 1

blkid(8) 1

by 2

defaults 4

filesystems, 1

findfs(8), 1

for 1

fstab(5), 1

info 1

maintained 1

man 1

more 1

mount(8) 1

on 1

pages 1

reference, 1

swap 2

under 1

xfs 3

Distributed mode:

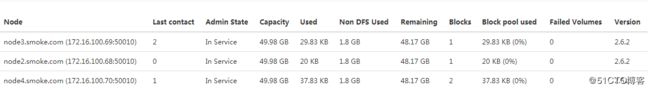

Lab environment:

Hostname: node1.smoke.com (master)

Operating system: CentOS 7.5

Kernel version: 3.10.0-862.el7.x86_64

NIC 1: 172.16.100.67

Hostname: node2.smoke.com

Operating system: CentOS 7.5

Kernel version: 3.10.0-862.el7.x86_64

NIC 1: 172.16.100.68

Hostname: node3.smoke.com

Operating system: CentOS 7.5

Kernel version: 3.10.0-862.el7.x86_64

NIC 1: 172.16.100.69

Hostname: node4.smoke.com

Operating system: CentOS 7.5

Kernel version: 3.10.0-862.el7.x86_64

NIC 1: 172.16.100.70

node1:

[root@node1 ~]# hostname

node1.smoke.com

[root@node1 ~]# ip addr show

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:67:e5:70 brd ff:ff:ff:ff:ff:ff

inet 172.16.100.67/24 brd 172.16.100.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::7382:eaed:fa1f:633f/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens37: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:67:e5:7a brd ff:ff:ff:ff:ff:ff

inet 192.168.243.135/24 brd 192.168.243.255 scope global noprefixroute dynamic ens37

valid_lft 1492sec preferred_lft 1492sec

inet6 fe80::9612:1176:5405:40dd/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@node1 ~]# ip route show

default via 192.168.243.2 dev ens37 proto dhcp metric 101

172.16.100.0/24 dev ens33 proto kernel scope link src 172.16.100.67 metric 100

192.168.243.0/24 dev ens37 proto kernel scope link src 192.168.243.135 metric 101

[root@node1 ~]# crontab -l

*/5 * * * * /usr/sbin/ntpdate ntp1.aliyun.com &> /dev/null

[root@node1 ~]# vim /etc/hosts

172.16.100.67 node1.smoke.com node1 master

172.16.100.68 node2.smoke.com node2

172.16.100.69 node3.smoke.com node3

172.16.100.70 node4.smoke.com node4

node2:

[root@node2 ~]# hostname

node2.smoke.com

[root@node2 ~]# ip addr show

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:70:86:6c brd ff:ff:ff:ff:ff:ff

inet 172.16.100.68/24 brd 172.16.100.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::d665:1c67:c985:a1cf/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens37: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:70:86:76 brd ff:ff:ff:ff:ff:ff

inet 192.168.243.136/24 brd 192.168.243.255 scope global noprefixroute dynamic ens37

valid_lft 1773sec preferred_lft 1773sec

inet6 fe80::d698:df07:91e4:43a6/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@node2 ~]# ip route show

default via 192.168.243.2 dev ens37 proto dhcp metric 101

172.16.100.0/24 dev ens33 proto kernel scope link src 172.16.100.68 metric 100

192.168.243.0/24 dev ens37 proto kernel scope link src 192.168.243.136 metric 101

[root@node2 ~]# crontab -l

*/5 * * * * /usr/sbin/ntpdate ntp1.aliyun.com &> /dev/null

[root@node2 ~]# vim /etc/hosts

172.16.100.67 node1.smoke.com node1 master

172.16.100.68 node2.smoke.com node2

172.16.100.69 node3.smoke.com node3

172.16.100.70 node4.smoke.com node4

node3:

[root@node3 ~]# hostname

node3.smoke.com

[root@node3 ~]# ip addr show

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:03:53:27 brd ff:ff:ff:ff:ff:ff

inet 172.16.100.69/24 brd 172.16.100.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::270d:efa4:1975:8122/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens37: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:03:53:31 brd ff:ff:ff:ff:ff:ff

inet 192.168.243.134/24 brd 192.168.243.255 scope global noprefixroute dynamic ens37

valid_lft 1651sec preferred_lft 1651sec

inet6 fe80::77b4:1247:f181:d2dc/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@node3 ~]# ip route show

default via 192.168.243.2 dev ens37 proto dhcp metric 101

172.16.100.0/24 dev ens33 proto kernel scope link src 172.16.100.69 metric 102

192.168.243.0/24 dev ens37 proto kernel scope link src 192.168.243.134 metric 101

[root@node3 ~]# crontab -l

*/5 * * * * /usr/sbin/ntpdate ntp1.aliyun.com &> /dev/null

[root@node3 ~]# vim /etc/hosts

172.16.100.67 node1.smoke.com node1 master

172.16.100.68 node2.smoke.com node2

172.16.100.69 node3.smoke.com node3

172.16.100.70 node4.smoke.com node4

node4:

[root@node4 ~]# hostname

node4.smoke.com

[root@node4 ~]# ip addr show

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:db:3c:9e brd ff:ff:ff:ff:ff:ff

inet 172.16.100.70/24 brd 172.16.100.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::bf5b:a2c5:f22e:7812/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens37: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:db:3c:a8 brd ff:ff:ff:ff:ff:ff

inet 192.168.243.133/24 brd 192.168.243.255 scope global noprefixroute dynamic ens37

valid_lft 1349sec preferred_lft 1349sec

inet6 fe80::ad5:80be:e485:ffb5/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@node4 ~]# ip route show

default via 192.168.243.2 dev ens37 proto dhcp metric 101

172.16.100.0/24 dev ens33 proto kernel scope link src 172.16.100.70 metric 100

192.168.243.0/24 dev ens37 proto kernel scope link src 192.168.243.133 metric 101

[root@node4 ~]# crontab -l

*/5 * * * * /usr/sbin/ntpdate ntp1.aliyun.com &> /dev/null

[root@node4 ~]# vim /etc/hosts

172.16.100.67 node1.smoke.com node1 master

172.16.100.68 node2.smoke.com node2

172.16.100.69 node3.smoke.com node3

172.16.100.70 node4.smoke.com node4

node1:

[root@node1 ~]# yum -y install java

[root@node1 ~]# vim /etc/profile.d/java.sh

export JAVA_HOME=/usr

[root@node1 ~]# yum -y install java-1.8.0-openjdk-devel

[root@node1 ~]# java -version

openjdk version "1.8.0_212"

OpenJDK Runtime Environment (build 1.8.0_212-b04)

OpenJDK 64-Bit Server VM (build 25.212-b04, mixed mode)

[root@node1 ~]# groupadd hadoop

[root@node1 ~]# useradd -g hadoop hadoop

[root@node1 ~]# echo 'smoke520' | passwd --stdin hadoop

[root@node1 ~]# su - hadoop

[hadoop@node1 ~]$ ssh-keygen -t rsa -P ''

[hadoop@node1 ~]$ for i in 2 3 4; do ssh-copy-id -i .ssh/id_rsa.pub hadoop@node${i}; done

[hadoop@node1 ~]$ ssh node2 'date'

2019年 05月 14日 星期二 09:24:58 EDT

[hadoop@node1 ~]$ ssh node3 'date'

2019年 05月 14日 星期二 21:25:08 CST

[hadoop@node1 ~]$ ssh node4 'date'

2019年 05月 14日 星期二 21:25:12 CST

[root@node1 ~]# mkdir -pv /bdapps /data/hadoop/hdfs/{nn,snn,dn}

[root@node1 ~]# chown -R hadoop:hadoop /data/hadoop/hdfs/

[root@node1 ~]# tar xf hadoop-2.6.2.tar.gz -C /bdapps/

[root@node1 ~]# cd /bdapps/

[root@node1 bdapps]# ln -sv hadoop-2.6.2 hadoop

[root@node1 bdapps]# vim /etc/profile.d/hadoop.sh

export HADOOP_PREFIX=/bdapps/hadoop

export PATH=$PATH:${HADOOP_PREFIX}/bin:${HADOOP_PREFIX}/sbin

export HADOOP_COMMON_HOME=${HADOOP_PREFIX}

export HADOOP_HDFS_HOME=${HADOOP_PREFIX}

export HADOOP_MAPRED_HOME=${HADOOP_PREFIX}

export HADOOP_YARN_HOME=${HADOOP_PREFIX}

[root@node1 bdapps]# cd hadoop

[root@node1 hadoop]# mkdir logs

[root@node1 hadoop]# chmod g+w logs

[root@node1 hadoop]# chown -R hadoop:hadoop ./*

[root@node1 hadoop]# cd etc/hadoop/

[root@node1 hadoop]# vim core-site.xml

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://master:8020</value>
        <final>true</final>
    </property>
</configuration>

[root@node1 hadoop]# vim yarn-site.xml

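<configuration>
    <property>
        <name>yarn.resourcemanager.address</name>
        <value>master:8032</value>
    </property>
    <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>master:8030</value>
    </property>
    <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>master:8031</value>
    </property>
    <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>master:8033</value>
    </property>
    <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>master:8088</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
    </property>
    <property>
        <name>yarn.resourcemanager.scheduler.class</name>
        <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>
    </property>
</configuration>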

[root@node1 hadoop]# vim hdfs-site.xml

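<configuration>
    <property>
        <name>dfs.replication</name>
        <value>2</value>
    </property>
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:///data/hadoop/hdfs/nn</value>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:///data/hadoop/hdfs/dn</value>
    </property>
    <property>
        <name>fs.checkpoint.dir</name>
        <value>file:///data/hadoop/hdfs/snn</value>
    </property>
    <property>
        <name>fs.checkpoint.edits.dir</name>
        <value>file:///data/hadoop/hdfs/snn</value>
    </property>
</configuration>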

[root@node1 hadoop]# cp mapred-site.xml.template mapred-site.xml

[root@node1 hadoop]# vim mapred-site.xml

<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>

[root@node1 hadoop]# chown hadoop:hadoop mapred-site.xml

[root@node1 hadoop]# vim slaves

node2

node3

node4

node2:

[root@node2 ~]# yum -y install java

[root@node2 ~]# vim /etc/profile.d/java.sh

export JAVA_HOME=/usr

[root@node2 ~]# yum -y install java-1.8.0-openjdk-devel

[root@node2 ~]# java -version

openjdk version "1.8.0_212"

OpenJDK Runtime Environment (build 1.8.0_212-b04)

OpenJDK 64-Bit Server VM (build 25.212-b04, mixed mode)

[root@node2 ~]# groupadd hadoop

[root@node2 ~]# useradd -g hadoop hadoop

[root@node2 ~]# echo 'smoke520' | passwd --stdin hadoop

[root@node2 ~]# mkdir -pv /bdapps /data/hadoop/hdfs/{nn,snn,dn}

[root@node2 ~]# chown -R hadoop:hadoop /data/hadoop/hdfs/

[root@node2 ~]# tar xf hadoop-2.6.2.tar.gz -C /bdapps/

[root@node2 ~]# cd /bdapps/

[root@node2 bdapps]# ln -sv hadoop-2.6.2 hadoop

[root@node2 bdapps]# vim /etc/profile.d/hadoop.sh

export HADOOP_PREFIX=/bdapps/hadoop

export PATH=$PATH:${HADOOP_PREFIX}/bin:${HADOOP_PREFIX}/sbin

export HADOOP_COMMON_HOME=${HADOOP_PREFIX}

export HADOOP_HDFS_HOME=${HADOOP_PREFIX}

export HADOOP_MAPRED_HOME=${HADOOP_PREFIX}

export HADOOP_YARN_HOME=${HADOOP_PREFIX}

[root@node2 bdapps]# cd hadoop

[root@node2 hadoop]# mkdir logs

[root@node2 hadoop]# chmod g+w logs

[root@node2 hadoop]# chown -R hadoop:hadoop ./*

[root@node2 hadoop]# cd etc/hadoop/

[root@node2 hadoop]# vim core-site.xml

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://master:8020</value>
        <final>true</final>
    </property>
</configuration>

[root@node2 hadoop]# vim yarn-site.xml

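<configuration>
    <property>
        <name>yarn.resourcemanager.address</name>
        <value>master:8032</value>
    </property>
    <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>master:8030</value>
    </property>
    <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>master:8031</value>
    </property>
    <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>master:8033</value>
    </property>
    <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>master:8088</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
    </property>
    <property>
        <name>yarn.resourcemanager.scheduler.class</name>
        <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>
    </property>
</configuration>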

[root@node2 hadoop]# vim hdfs-site.xml

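<configuration>
    <property>
        <name>dfs.replication</name>
        <value>2</value>
    </property>
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:///data/hadoop/hdfs/nn</value>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:///data/hadoop/hdfs/dn</value>
    </property>
    <property>
        <name>fs.checkpoint.dir</name>
        <value>file:///data/hadoop/hdfs/snn</value>
    </property>
    <property>
        <name>fs.checkpoint.edits.dir</name>
        <value>file:///data/hadoop/hdfs/snn</value>
    </property>
</configuration>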

[root@node2 hadoop]# cp mapred-site.xml.template mapred-site.xml

[root@node2 hadoop]# vim mapred-site.xml

<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>

[root@node2 hadoop]# chown hadoop:hadoop mapred-site.xml

node3:

[root@node3 ~]# yum -y install java

[root@node3 ~]# vim /etc/profile.d/java.sh

export JAVA_HOME=/usr

[root@node3 ~]# yum -y install java-1.8.0-openjdk-devel

[root@node3 ~]# java -version

openjdk version "1.8.0_212"

OpenJDK Runtime Environment (build 1.8.0_212-b04)

OpenJDK 64-Bit Server VM (build 25.212-b04, mixed mode)

[root@node3 ~]# groupadd hadoop

[root@node3 ~]# useradd -g hadoop hadoop

[root@node3 ~]# echo 'smoke520' | passwd --stdin hadoop

[root@node3 ~]# mkdir -pv /bdapps /data/hadoop/hdfs/{nn,snn,dn}

[root@node3 ~]# chown -R hadoop:hadoop /data/hadoop/hdfs/

[root@node3 ~]# tar xf hadoop-2.6.2.tar.gz -C /bdapps/

[root@node3 ~]# cd /bdapps/

[root@node3 bdapps]# ln -sv hadoop-2.6.2 hadoop

[root@node3 bdapps]# vim /etc/profile.d/hadoop.sh

export HADOOP_PREFIX=/bdapps/hadoop

export PATH=$PATH:${HADOOP_PREFIX}/bin:${HADOOP_PREFIX}/sbin

export HADOOP_COMMON_HOME=${HADOOP_PREFIX}

export HADOOP_HDFS_HOME=${HADOOP_PREFIX}

export HADOOP_MAPRED_HOME=${HADOOP_PREFIX}

export HADOOP_YARN_HOME=${HADOOP_PREFIX}

[root@node3 bdapps]# cd hadoop

[root@node3 hadoop]# mkdir logs

[root@node3 hadoop]# chmod g+w logs/

[root@node3 hadoop]# chown -R hadoop.hadoop ./*

[root@node3 hadoop]# cd etc/hadoop/

[root@node3 hadoop]# vim core-site.xml

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://master:8020</value>
        <final>true</final>
    </property>
</configuration>

[root@node3 hadoop]# vim yarn-site.xml

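<configuration>
    <property>
        <name>yarn.resourcemanager.address</name>
        <value>master:8032</value>
    </property>
    <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>master:8030</value>
    </property>
    <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>master:8031</value>
    </property>
    <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>master:8033</value>
    </property>
    <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>master:8088</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
    </property>
    <property>
        <name>yarn.resourcemanager.scheduler.class</name>
        <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>
    </property>
</configuration>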

[root@node3 hadoop]# vim hdfs-site.xml

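<configuration>
    <property>
        <name>dfs.replication</name>
        <value>2</value>
    </property>
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:///data/hadoop/hdfs/nn</value>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:///data/hadoop/hdfs/dn</value>
    </property>
    <property>
        <name>fs.checkpoint.dir</name>
        <value>file:///data/hadoop/hdfs/snn</value>
    </property>
    <property>
        <name>fs.checkpoint.edits.dir</name>
        <value>file:///data/hadoop/hdfs/snn</value>
    </property>
</configuration>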

[root@node3 hadoop]# cp mapred-site.xml.template mapred-site.xml

[root@node3 hadoop]# vim mapred-site.xml

<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>

[root@node3 hadoop]# chown hadoop:hadoop mapred-site.xml

node4:

[root@node4 ~]# yum -y install java

[root@node4 ~]# vim /etc/profile.d/java.sh

export JAVA_HOME=/usr

[root@node4 ~]# yum -y install java-1.8.0-openjdk-devel

[root@node4 ~]# java -version

openjdk version "1.8.0_212"

OpenJDK Runtime Environment (build 1.8.0_212-b04)

OpenJDK 64-Bit Server VM (build 25.212-b04, mixed mode)

[root@node4 ~]# groupadd hadoop

[root@node4 ~]# useradd -g hadoop hadoop

[root@node4 ~]# echo 'smoke520' | passwd --stdin hadoop

[root@node4 ~]# mkdir -pv /bdapps /data/hadoop/hdfs/{nn,snn,dn}

[root@node4 ~]# chown -R hadoop:hadoop /data/hadoop/hdfs/

[root@node4 ~]# tar xf hadoop-2.6.2.tar.gz -C /bdapps/

[root@node4 ~]# cd /bdapps/

[root@node4 bdapps]# ln -sv hadoop-2.6.2 hadoop

[root@node4 bdapps]# vim /etc/profile.d/hadoop.sh

export HADOOP_PREFIX=/bdapps/hadoop

export PATH=$PATH:${HADOOP_PREFIX}/bin:${HADOOP_PREFIX}/sbin

export HADOOP_COMMON_HOME=${HADOOP_PREFIX}

export HADOOP_HDFS_HOME=${HADOOP_PREFIX}

export HADOOP_MAPRED_HOME=${HADOOP_PREFIX}

export HADOOP_YARN_HOME=${HADOOP_PREFIX}

[root@node4 bdapps]# cd hadoop

[root@node4 hadoop]# mkdir logs

[root@node4 hadoop]# chmod g+w logs

[root@node4 hadoop]# chown -R hadoop.hadoop ./*

[root@node4 hadoop]# cd etc/hadoop/

[root@node4 hadoop]# vim core-site.xml

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://master:8020</value>
        <final>true</final>
    </property>
</configuration>

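[root@node4 hadoop]# vim yarn-site.xml

<configuration>
    <property>
        <name>yarn.resourcemanager.address</name>
        <value>master:8032</value>
    </property>
    <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>master:8030</value>
    </property>
    <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>master:8031</value>
    </property>
    <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>master:8033</value>
    </property>
    <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>master:8088</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
    </property>
    <property>
        <name>yarn.resourcemanager.scheduler.class</name>
        <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>
    </property>
</configuration>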

[root@node4 hadoop]# vim hdfs-site.xml

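<configuration>
    <property>
        <name>dfs.replication</name>
        <value>2</value>
    </property>
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:///data/hadoop/hdfs/nn</value>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:///data/hadoop/hdfs/dn</value>
    </property>
    <property>
        <name>fs.checkpoint.dir</name>
        <value>file:///data/hadoop/hdfs/snn</value>
    </property>
    <property>
        <name>fs.checkpoint.edits.dir</name>
        <value>file:///data/hadoop/hdfs/snn</value>
    </property>
</configuration>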

[root@node4 hadoop]# cp mapred-site.xml.template mapred-site.xml

[root@node4 hadoop]# vim mapred-site.xml

<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>

[root@node4 hadoop]# chown hadoop:hadoop mapred-site.xml

node1:

[root@node1 hadoop]# su - hadoop

[hadoop@node1 ~]$ hdfs namenode -format

[hadoop@node1 ~]$ ls /data/hadoop/hdfs/nn/

current

[hadoop@node1 ~]$ start-dfs.sh

[root@node1 hadoop]# su - hadoop

[hadoop@node1 ~]$ jps

58085 Jps

42956 NameNode

43149 SecondaryNameNode

node2:

[root@node2 hadoop]# su - hadoop

[hadoop@node2 ~]$ jps

44982 Jps

44888 DataNode

node3:

[root@node3 hadoop]# su - hadoop

[hadoop@node3 ~]$ jps

37417 Jps

37325 DataNode

node4:

[root@node4 hadoop]# su - hadoop

[hadoop@node4 ~]$ jps

36688 Jps

36596 DataNode

node1:

[hadoop@node1 ~]$ hdfs dfs -mkdir /test

[hadoop@node1 ~]$ hdfs dfs -put /etc/fstab /test/fstab

[hadoop@node1 ~]$ hdfs dfs -ls /test

Found 1 items

-rw-r--r-- 2 hadoop supergroup 541 2019-05-18 20:10 /test/fstab

[hadoop@node1 ~]$ hdfs dfs -lsr /test

lsr: DEPRECATED: Please use 'ls -R' instead.

-rw-r--r-- 2 hadoop supergroup 541 2019-05-18 20:10 /test/fstab
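In the listing above, the second column of a file entry is its replication factor (2 here, matching `dfs.replication`); directories show `-` instead. A small sketch of how such a line could be split into named fields follows. The helper `parse_ls_line` is a hypothetical name, and the field order is assumed to be the one shown in the listings in these notes.

```python
def parse_ls_line(line):
    """Split one `hdfs dfs -ls` entry into named fields."""
    perms, repl, owner, group, size, date, time, path = line.split(None, 7)
    return {
        "permissions": perms,
        # Directories show "-" instead of a replica count
        "replication": None if repl == "-" else int(repl),
        "owner": owner,
        "group": group,
        "size": int(size),
        "modified": f"{date} {time}",
        "path": path,
    }

entry = parse_ls_line(
    "-rw-r--r-- 2 hadoop supergroup 541 2019-05-18 20:10 /test/fstab"
)
print(entry["replication"], entry["size"], entry["path"])
```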

[hadoop@node1 ~]$ hdfs dfs -cat /test/fstab

#

# /etc/fstab

# Created by anaconda on Sat May 4 06:14:49 2019

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=6f08f050-07b8-4179-81af-5c134cc98879 /boot xfs defaults 0 0

/dev/mapper/centos-home /home xfs defaults 0 0

/dev/mapper/centos-swap swap swap defaults 0 0

node2:

[hadoop@node2 ~]$ ls /data/hadoop/hdfs/dn/current/

BP-1149991452-172.16.100.67-1558017374316 VERSION

[hadoop@node2 ~]$ ls /data/hadoop/hdfs/dn/current/BP-1149991452-172.16.100.67-1558017374316/current/finalized/subdir0/subdir0/

blk_1073741825 blk_1073741825_1001.meta

[hadoop@node2 ~]$ cat /data/hadoop/hdfs/dn/current/BP-1149991452-172.16.100.67-1558017374316/current/finalized/subdir0/subdir0/blk_1073741825

#

# /etc/fstab

# Created by anaconda on Sat May 4 06:14:49 2019

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=6f08f050-07b8-4179-81af-5c134cc98879 /boot xfs defaults 0 0

/dev/mapper/centos-home /home xfs defaults 0 0

/dev/mapper/centos-swap swap swap defaults 0 0

node3:

[hadoop@node3 ~]$ cat /data/hadoop/hdfs/dn/current/BP-1149991452-172.16.100.67-1558017374316/current/finalized/subdir0/subdir0/blk_1073741825

#

# /etc/fstab

# Created by anaconda on Sat May 4 06:14:49 2019

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=6f08f050-07b8-4179-81af-5c134cc98879 /boot xfs defaults 0 0

/dev/mapper/centos-home /home xfs defaults 0 0

/dev/mapper/centos-swap swap swap defaults 0 0

node1:

[hadoop@node1 ~]$ start-yarn.sh

[hadoop@node1 ~]$ jps

59696 Jps

59554 ResourceManager

59114 NameNode

59308 SecondaryNameNode

node2:

[hadoop@node2 ~]$ jps

55686 Jps

55577 NodeManager

55455 DataNode

node3:

[hadoop@node3 ~]$ jps

47923 DataNode

48155 Jps

48029 NodeManager

node4:

[hadoop@node4 ~]$ jps

DataNode

NodeManager

Jps

node1:

[hadoop@node1 ~]$ exit

[root@node1 hadoop]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:50090 *:*

LISTEN 0 128 172.16.100.67:8020 *:*

LISTEN 0 128 *:50070 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 ::ffff:172.16.100.67:8030 :::*

LISTEN 0 128 ::ffff:172.16.100.67:8031 :::*

LISTEN 0 128 ::ffff:172.16.100.67:8032 :::*

LISTEN 0 128 ::ffff:172.16.100.67:8033 :::*

LISTEN 0 128 :::22 :::*

LISTEN 0 128 ::ffff:172.16.100.67:8088 :::*

LISTEN 0 100 ::1:25 :::*

Open 172.16.100.67:8088 in a browser on the Windows host.

Open 172.16.100.67:50070 in a browser on the Windows host.

node1:

[root@node1 hadoop]# su - hadoop

[hadoop@node1 ~]$ hdfs dfs -put /etc/rc.d/init.d/functions /test/

[hadoop@node1 ~]$ hdfs dfs -ls /test

Found 2 items

-rw-r--r-- 2 hadoop supergroup 541 2019-05-18 20:10 /test/fstab

-rw-r--r-- 2 hadoop supergroup 18104 2019-05-18 21:22 /test/functions

node1:

[hadoop@node1 ~]$ exit

[root@node1 hadoop]# cd

[root@node1 ~]# mv hadoop-2.6.2.tar.gz /home/hadoop/

[root@node1 ~]# su - hadoop

[hadoop@node1 ~]$ hdfs dfs -put hadoop-2.6.2.tar.gz /test

node1:

[hadoop@node1 ~]$ yarn jar /bdapps/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.2.jar

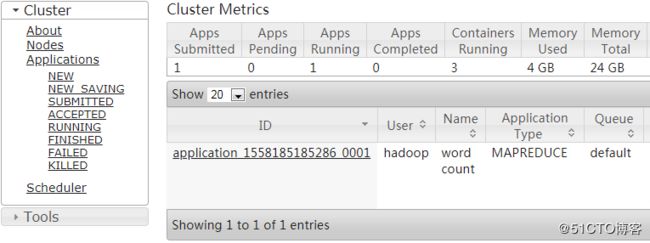

[hadoop@node1 ~]$ yarn jar /bdapps/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.2.jar wordcount /test/fstab /test/functions /test/wc

Open 172.16.100.67:8088 in a browser on Windows; Apps Submitted now shows 1;

node1:

[hadoop@node1 ~]$ hdfs dfs -ls /test/wc

Found 2 items

-rw-r--r-- 2 hadoop supergroup 0 2019-05-19 21:00 /test/wc/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 8611 2019-05-19 21:00 /test/wc/part-r-00000

[hadoop@node1 ~]$ hdfs dfs -cat /test/wc/part-r-00000
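
To make the three phases of the wordcount job concrete, here is a small local sketch of map, shuffle and sort, and reduce in plain Python. It is not the code inside `hadoop-mapreduce-examples-2.6.2.jar`; the function names are illustrative, and it assumes words are split on whitespace and that output is one `word<TAB>count` pair per line, sorted by key, as in `part-r-00000`.

```python
from collections import defaultdict


def mapper(line):
    """Map phase: emit a (word, 1) pair for every whitespace-separated token."""
    for word in line.split():
        yield word, 1


def shuffle_and_sort(pairs):
    """Shuffle and sort: group values by key, then order the groups by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())


def reducer(key, values):
    """Reduce phase: fold the list of counts for one word into a total."""
    return key, sum(values)


def word_count(lines):
    """Run the three phases in sequence over an iterable of text lines."""
    pairs = (pair for line in lines for pair in mapper(line))
    return [reducer(key, values) for key, values in shuffle_and_sort(pairs)]


# Two lines taken from the fstab file shown earlier.
sample = [
    "/dev/mapper/centos-root / xfs defaults 0 0",
    "/dev/mapper/centos-home /home xfs defaults 0 0",
]

for word, count in word_count(sample):
    print(f"{word}\t{count}")
```

On the cluster the mappers run in parallel on the nodes that hold the input blocks and the framework performs the shuffle over the network; the sketch only mirrors the data flow on one machine.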

[hadoop@node1 ~]$ yarn application -list # list active applications

19/05/19 21:10:39 INFO client.RMProxy: Connecting to ResourceManager at master/172.16.100.67:8032

Total number of applications (application-types: [] and states: [SUBMITTED, ACCEPTED, RUNNING]):0

Application-Id Application-Name Application-Type User Queue State Final-State Progress Tracking-URL

[hadoop@node1 ~]$ yarn application -list -appStates=all # list applications in every state

19/05/19 21:12:15 INFO client.RMProxy: Connecting to ResourceManager at master/172.16.100.67:8032

Total number of applications (application-types: [] and states: [NEW, NEW_SAVING, SUBMITTED, ACCEPTED, RUNNING, FINISHED, FAILED, KILLED]):1

Application-Id Application-Name Application-Type User Queue State Final-State Progress Tracking-URL

application_1558185185286_0001 word count MAPREDUCE hadoop default FINISHED SUCCEEDED 100% http://node2.smoke.com:19888/jobhistory/job/job_1558185185286_000

[hadoop@node1 ~]$ yarn application -status application_1558185185286_0001 # show the application report

19/05/19 21:14:45 INFO client.RMProxy: Connecting to ResourceManager at master/172.16.100.67:8032

Application Report :

Application-Id : application_1558185185286_0001

Application-Name : word count

Application-Type : MAPREDUCE

User : hadoop

Queue : default

Start-Time : 1558270250142

Finish-Time : 1558270803655

Progress : 100%

State : FINISHED

Final-State : SUCCEEDED

Tracking-URL : http://node2.smoke.com:19888/jobhistory/job/job_1558185185286_0001

RPC Port : 44661

AM Host : node2.smoke.com

Aggregate Resource Allocation : 2095249 MB-seconds, 1513 vcore-seconds

Diagnostics :
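
The `Start-Time` and `Finish-Time` fields in the report are Unix timestamps in milliseconds, and the aggregate figures are sums of memory and vcores multiplied by seconds held. A short sketch of how to read them, assuming the cluster clock is UTC+8 (the `CST` shown elsewhere in this transcript) and using a hypothetical helper name `from_epoch_ms`:

```python
from datetime import datetime, timedelta, timezone

# Values copied from the application report above.
START_MS = 1558270250142
FINISH_MS = 1558270803655
MB_SECONDS = 2095249
VCORE_SECONDS = 1513

# Assumption: the cluster runs in China Standard Time (UTC+8).
CST = timezone(timedelta(hours=8))


def from_epoch_ms(ms, tz=CST):
    """Convert a millisecond Unix timestamp to a timezone-aware datetime."""
    return datetime.fromtimestamp(ms / 1000, tz=tz)


elapsed_s = (FINISH_MS - START_MS) / 1000

# Averages over the whole run: total resource-seconds divided by wall time.
avg_mb = MB_SECONDS / elapsed_s
avg_vcores = VCORE_SECONDS / elapsed_s

print("started :", from_epoch_ms(START_MS).strftime("%Y-%m-%d %H:%M:%S"))
print("finished:", from_epoch_ms(FINISH_MS).strftime("%Y-%m-%d %H:%M:%S"))
print(f"elapsed : {elapsed_s:.1f} s")
print(f"average : {avg_mb:.0f} MB, {avg_vcores:.2f} vcores in use")
```

The finish time works out to the same minute as the timestamp on `/test/wc/_SUCCESS`, which is a quick sanity check that the timezone assumption is right.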

[hadoop@node1 ~]$ yarn node -list # list the nodes in the cluster

19/05/19 21:16:36 INFO client.RMProxy: Connecting to ResourceManager at master/172.16.100.67:8032

Total Nodes:3

Node-Id Node-State Node-Http-Address Number-of-Running-Containers

node4.smoke.com:41490 RUNNING node4.smoke.com:8042 0

node2.smoke.com:46826 RUNNING node2.smoke.com:8042 0

node3.smoke.com:33522 RUNNING node3.smoke.com:8042 0

[hadoop@node1 ~]$ yarn node -status node4.smoke.com:41490 # show the status of the given node

19/05/19 21:34:44 INFO client.RMProxy: Connecting to ResourceManager at master/172.16.100.67:8032

Node Report :

Node-Id : node4.smoke.com:41490

Rack : /default-rack

Node-State : RUNNING

Node-Http-Address : node4.smoke.com:8042

Last-Health-Update : 星期日 19/五月/19 09:33:08:711CST

Health-Report :

Containers : 0

Memory-Used : 0MB

Memory-Capacity : 8192MB

CPU-Used : 0 vcores

CPU-Capacity : 8 vcores

Node-Labels :

[hadoop@node1 ~]$ yarn logs -applicationId application_1558185185286_0001

19/05/19 21:43:10 INFO client.RMProxy: Connecting to ResourceManager at master/172.16.100.67:8032

/tmp/logs/hadoop/logs/application_1558185185286_0001does not exist.

Log aggregation has not completed or is not enabled.
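
The message above is what `yarn logs` prints when container logs were never copied to HDFS. Aggregation is controlled by the `yarn.log-aggregation-enable` property in `yarn-site.xml`, which defaults to `false`. The sketch below shows what the property looks like when enabled and how to read it back; `SAMPLE_YARN_SITE` is an illustrative fragment, not this cluster's actual configuration, and `get_property` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# Illustrative yarn-site.xml fragment with log aggregation switched on.
SAMPLE_YARN_SITE = """<?xml version="1.0"?>
<configuration>
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
</configuration>
"""


def get_property(xml_text, name, default=None):
    """Return the value of a Hadoop-style <property> entry, or default."""
    root = ET.fromstring(xml_text)
    for prop in root.findall("property"):
        if prop.findtext("name") == name:
            return prop.findtext("value", default)
    return default


enabled = get_property(SAMPLE_YARN_SITE, "yarn.log-aggregation-enable", "false")
print("log aggregation enabled:", enabled)
```

To check the real setting, read the file from the configuration directory listed by `yarn classpath` (here `/bdapps/hadoop/etc/hadoop`) and pass its text to `get_property`. As far as I know, the change has to be distributed to every node and the YARN daemons restarted, and only applications that run afterwards get their logs aggregated.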

[hadoop@node1 ~]$ yarn classpath # print the classpath

/bdapps/hadoop/etc/hadoop:/bdapps/hadoop/etc/hadoop:/bdapps/hadoop/etc/hadoop:/bdapps/hadoop/share/hadoop/common/lib/*:/bdapps/hadoop/share/hadoop/common/*:/bdapps/hadoop/share/hadoop/hdfs:/bdapps/hadoop/share/hadoop/hdfs/lib/*:/bdapps/hadoop/share/hadoop/hdfs/*:/bdapps/hadoop/share/hadoop/yarn/lib/*:/bdapps/hadoop/share/hadoop/yarn/*:/bdapps/hadoop/share/hadoop/mapreduce/lib/*:/bdapps/hadoop/share/hadoop/mapreduce/*:/contrib/capacity-scheduler/*.jar:/bdapps/hadoop/share/hadoop/yarn/*:/bdapps/hadoop/share/hadoop/yarn/lib/*

[hadoop@node1 ~]$ yarn rmadmin -refreshNodes

19/05/19 21:49:56 INFO client.RMProxy: Connecting to ResourceManager at master/172.16.100.67:8033