Iterable objects

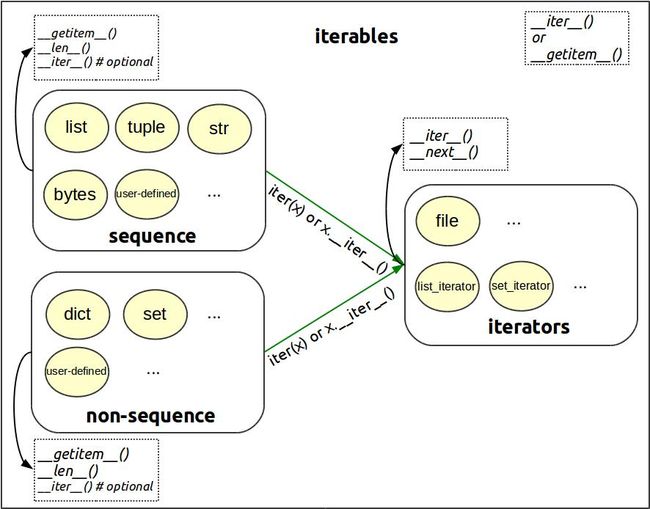

An iterable is an object that can return its elements one at a time. In practice, any object that defines an __iter__() method, or a __getitem__() method that accepts integer indices starting from 0, is considered iterable.

Python has many built-in iterables: list, str, tuple, dict, file, xrange, and more.
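A quick way to check whether an object is iterable is to pass it to the built-in iter(), which raises TypeError when it cannot produce an iterator. The sketch below wraps that check in a hypothetical helper, is_iterable. It also shows that a class defining only __getitem__() (the hypothetical Squares) still counts as iterable. The code uses only print() with a single argument and the built-in next(), so it should run on Python 2.6+ and Python 3.

```python
def is_iterable(obj):
    """Hypothetical helper: True if iter() can build an iterator for obj."""
    try:
        iter(obj)
    except TypeError:
        return False
    return True


class Squares(object):
    """Defines only __getitem__, yet iter() still accepts it."""

    def __getitem__(self, index):
        if index >= 4:
            raise IndexError(index)  # tells iter() there are no more elements
        return index * index


for obj in ([1, 2], 'ab', (1,), {'k': 1}, Squares(), 42):
    print('%s -> %s' % (type(obj).__name__, is_iterable(obj)))

print(list(Squares()))  # [0, 1, 4, 9]
```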

- Sequence

A sequence is an ordered collection. Like a set, it contains members (also called elements, or terms), but its members come in a definite order. The number of ordered elements (possibly infinite) is called the length of the sequence.

In Python, a sequence is an iterable that supports efficient element access using integer indices via the __getitem__() special method, and defines a __len__() method that returns the length of the sequence.
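The standard library's abstract base class Sequence (collections.abc.Sequence in Python 3, collections.Sequence in Python 2.7) can tell us which built-ins follow the sequence protocol. The sketch below uses a hypothetical describe helper. It shows that list, tuple and str are sequences, while dict and set are iterable and have a length but are not sequences, because they offer no integer-indexed access.

```python
try:
    from collections.abc import Sequence  # Python 3.3+
except ImportError:
    from collections import Sequence  # Python 2.7


def describe(obj):
    """Hypothetical helper: (type name, is it a Sequence?, length)."""
    return type(obj).__name__, isinstance(obj, Sequence), len(obj)


for obj in ([3, 1, 2], (3, 1, 2), 'abc', {'a': 1}, {1, 2}):
    print(describe(obj))

letters = 'python'
# Integer indexing, including negative indices, plus len()
print('%s %s %d' % (letters[0], letters[-1], len(letters)))  # p n 6
```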

- Iterator

An iterator is an object that implements a next() method (named __next__() in Python 3). next() is expected to return the next element of the iterable that produced the iterator, and to raise a StopIteration exception when no more elements are available.

In the simplest case, the iterable implements next() itself and returns self from __iter__(), so the object is its own iterator.
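Here is a minimal sketch of that simplest case, using a hypothetical Countdown class that is its own iterator. It defines __next__() for Python 3 and aliases it as next for Python 2.

```python
class Countdown(object):
    """An iterable that is its own iterator: __iter__ returns self."""

    def __init__(self, start):
        self.current = start

    def __iter__(self):
        return self

    def __next__(self):
        if self.current <= 0:
            raise StopIteration  # no more elements
        value = self.current
        self.current -= 1
        return value

    next = __next__  # Python 2 calls next() instead of __next__()


it = Countdown(3)
print(next(it))  # 3
print(list(it))  # [2, 1]  (the remaining elements)
print(list(it))  # []      (already exhausted)
```

Because __iter__() returns self, a Countdown can be looped over only once. That is the price of making the iterable and the iterator the same object.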

The figure above shows the relationship between them.

Code sample

First, we define a class that follows the sequence protocol:

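```python
class TestCase(object):
    """A read-only sequence of numbered test cases."""

    def __init__(self, cases):
        self.cases = cases  # number of test cases

    def __len__(self):
        return self.cases

    # No __iter__ here: iter() falls back to calling __getitem__
    # with 0, 1, 2, ... until it raises IndexError.
    def __getitem__(self, key):
        # Negative indices count from the end, as with list
        if key >= 0:
            index = key
        else:
            index = self.cases + key
        if 0 <= index < len(self):
            return 'Test case #%s' % (index + 1)
        else:
            raise IndexError('No test case at #%s' % key)
```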

Then we can use it as an iterable:

```python
>>> from generator import TestCase
>>> case = TestCase(5)
>>> len(case)
5
>>> case[0]
'Test case #1'
>>> for c in case:
...     print c
...
Test case #1
Test case #2
Test case #3
Test case #4
Test case #5
```
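For objects that define __getitem__() but no __iter__(), iter() falls back to calling __getitem__() with 0, 1, 2, ... and stops at the first IndexError. __len__() is never consulted, which is why a sequence's __getitem__() must raise IndexError for out-of-range indices. The hypothetical helper below is a rough sketch of that fallback. The real one is built into the interpreter and returns a lazy iterator rather than a list.

```python
def items_by_index(seq):
    """Hypothetical helper: collect seq[0], seq[1], ... until IndexError,
    mimicking how iter() walks an object that only has __getitem__."""
    items = []
    index = 0
    while True:
        try:
            items.append(seq[index])
        except IndexError:
            return items  # the end of the sequence
        index += 1


print(items_by_index('abc'))     # ['a', 'b', 'c']
print(items_by_index([10, 20]))  # [10, 20]
```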

Note that the case object we defined is both a sequence and an iterable, so we can loop over it as many times as we like; each for loop asks it for a fresh iterator. Things are different if we work with an iterator of case directly:

```python
>>> case_i = iter(case)
>>> case_i
<iterator object at 0x...>
>>> case_i[0]
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
TypeError: 'iterator' object has no attribute '__getitem__'
>>> case_i.next()
'Test case #1'
>>> case_i.next()
'Test case #2'
>>> for c in case_i:
...     print c
...
Test case #3
Test case #4
Test case #5
>>> case_i.next()
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
StopIteration
```

The iterator of case can be consumed only once. Each call to next() returns one element and advances the iterator, and once all elements have been returned, the iterator is exhausted and every further call raises StopIteration.
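The difference between looping over an iterable twice and looping over an iterator twice takes only a few lines to check. The two_passes helper is hypothetical. next() with a second argument is standard: it returns that default instead of raising StopIteration.

```python
def two_passes(obj):
    """Hypothetical helper: loop over obj twice and return what each pass saw."""
    first = [x for x in obj]
    second = [x for x in obj]
    return first, second


numbers = [1, 2, 3]
print(two_passes(numbers))        # ([1, 2, 3], [1, 2, 3])  fresh iterator per loop
print(two_passes(iter(numbers)))  # ([1, 2, 3], [])         used up by the first pass

exhausted = iter([])
print(next(exhausted, 'no more elements'))  # default instead of StopIteration
```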

Generators behave the same way, because every generator is an iterator.

Generator

Generators are iterators, so you can iterate over them only once. They do not store all their values in memory; instead, they compute each value on the fly when it is requested.

yield is a keyword that is used like return, except that calling a function that contains yield returns a generator instead of running the function body.

```python
>>> def my_generator():
...     for i in range(3):
...         yield i*i
...
>>> gen = my_generator()
>>> gen
<generator object my_generator at 0x...>
>>> gen.next()
0
>>> for i in gen:
...     print i
...
1
4
>>> gen.next()
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
StopIteration
```

Calling my_generator() only creates the generator. None of the function body runs, and no value is produced, until we call next() or iterate over it.
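To make that deferred execution visible, here is a hypothetical variant of my_generator, traced_squares, that appends a note to a list whenever its body makes progress. The last line checks that a generator, like any iterator, returns itself from iter().

```python
def traced_squares(n, log):
    """Like my_generator, but records when its body actually runs."""
    log.append('body started')
    for i in range(n):
        log.append('computing %d' % i)
        yield i * i
    log.append('body finished')


log = []
gen = traced_squares(3, log)
print(log)        # []  creating the generator ran none of its body
print(next(gen))  # 0   runs up to the first yield
print(log)        # ['body started', 'computing 0']
print(list(gen))  # [1, 4]  the remaining values
print(log)        # ['body started', 'computing 0', 'computing 1', 'computing 2', 'body finished']
print(iter(gen) is gen)  # True
```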

Coroutine

Coroutines are computer program components that generalize subroutines for nonpreemptive multitasking, by allowing multiple entry points for suspending and resuming execution at certain locations.

Because a generator can be suspended at a yield and resumed later, we can use one to build a simple coroutine:

```python
def printer():
    count = 0
    r = ''
    while True:
        # Suspend here: hand r to the caller, then receive the next message
        content = yield r
        print('[{0}]:{1}'.format(count, content))
        count += 1
        r = 'I\'m fine, thank you!'


if __name__ == '__main__':
    p = printer()
    p.send(None)  # start the generator: run up to the first yield
    msg = ['Hi', 'My name is myan', 'Bye']
    for m in msg:
        res = p.send(m)
        print("Returns from generator: %s" % res)
```

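We create the generator in the main program and call send(None) to start it, which runs the body up to the first yield. After that, each call to send(m) resumes the printer: m becomes the value of the yield expression, the body runs until it reaches yield again, and the value yielded there becomes the return value of send(). In this way the generator works much like a coroutine.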

So we see the following output:

```
[0]:Hi
Returns from generator: I'm fine, thank you!
[1]:My name is myan
Returns from generator: I'm fine, thank you!
[2]:Bye
Returns from generator: I'm fine, thank you!
```

If a function contains the yield keyword, calling it returns a generator. Each time execution reaches a yield, the function is suspended and the yielded value is handed to the caller. When the generator is resumed with send(), the value passed in becomes the result of the yield expression. We usually call next() (or send(None)) to start the generator, and send() to resume it. In this way, a generator can act like a sub-task of the main program, but both still run in the same thread and share the same runtime context.
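The send()/yield handshake can also carry state from one call to the next. The sketch below is a hypothetical running-average coroutine, not part of the original example. next() primes it, and each send() delivers a number and gets back the mean so far. Sending a non-None value to a generator that has not started yet raises TypeError.

```python
def averager():
    """Hypothetical coroutine: receives numbers via send(), yields the running mean."""
    total = 0.0
    count = 0
    average = None
    while True:
        value = yield average  # suspend here; send(value) resumes us
        total += value
        count += 1
        average = total / count


avg = averager()
next(avg)            # prime: run up to the first yield (same as avg.send(None))
print(avg.send(10))  # 10.0
print(avg.send(20))  # 15.0
print(avg.send(60))  # 30.0
avg.close()          # raises GeneratorExit inside the generator to finish it

fresh = averager()
try:
    fresh.send(5)    # a just-started generator only accepts None
except TypeError as exc:
    print('TypeError: %s' % exc)
```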

If you're using Python 3.5 or above, the async and await syntax provides native coroutine functions.
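As a rough comparison, here is a sketch of the printer rewritten as a native coroutine. It assumes Python 3.7 or later, because it uses asyncio.run() and asyncio.create_task(). Instead of being driven by send(), the coroutine awaits messages from an asyncio.Queue. The None sentinel and the replies list are choices made for this sketch, not part of the original example.

```python
import asyncio


async def printer(queue, replies):
    """Native coroutine: waits for messages instead of being driven by send()."""
    count = 0
    while True:
        content = await queue.get()  # suspends until a message arrives
        if content is None:          # sentinel chosen for this sketch: stop
            break
        print('[{0}]:{1}'.format(count, content))
        replies.append("I'm fine, thank you!")
        count += 1


async def main():
    queue = asyncio.Queue()
    replies = []
    task = asyncio.create_task(printer(queue, replies))
    for m in ['Hi', 'My name is myan', 'Bye']:
        await queue.put(m)
    await queue.put(None)
    await task  # wait until the printer has handled every message
    return replies


replies = asyncio.run(main())
for res in replies:
    print('Returns from coroutine: %s' % res)
```

Unlike the generator version, the replies here are collected and printed after the printer finishes. The event loop decides when each coroutine runs, rather than the caller resuming it by hand.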

In the next chapter, we will build an async IO web server based on coroutine.