Pure Essentials 7: Domain Adaptation Video Tutorial (with Slides) and Classic Papers

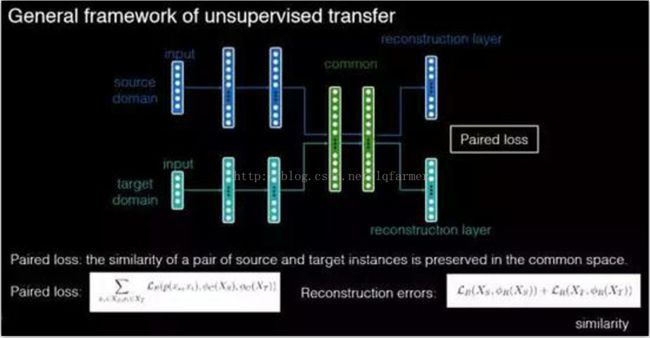

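An earlier post, "Model Roundup 15: An Overview of Domain Adaptation and One-shot/Zero-shot Learning", covered the basics of Domain Adaptation but did not go into how the individual algorithms work. Today's post shares a video on Domain Adaptation (slides included); feel free to download it if you are interested.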

Video and slides download link: https://pan.baidu.com/s/1i5HuusX Password: reply "da" to the official account to receive the password.

Classic papers on Domain Adaptation, grouped by underlying approach:

Covariate Shift Based:

[1] James Heckman. Sample Selection Bias as a Specification Error. 1979. Nobel prize-winning paper which introduced Sample Selection Bias.

[2] Arthur Gretton et al. Covariate Shift by Kernel Mean Matching. 2008. Describes a procedure for computing the optimal source weights, given infinite unlabeled data and a universal kernel. Also provides target error bounds when learning under the labeled source distribution.

[3] Corinna Cortes et al. Sample Selection Bias Correction Theory. 2008. Analyzes the case when we have only finite unlabeled data, so that we cannot determine the optimal weights exactly. This leads to a bias when learning from the source domain.

[4] Steffen Bickel. Discriminative Learning under Covariate Shift. 2009. Introduces the logistic regression model for learning source weights directly from unlabeled data.
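
As a rough illustration of the reweighting idea behind [2] to [4], the sketch below estimates source weights with a plain logistic-regression domain classifier: it is trained to tell source inputs from target inputs, and each source point is then weighted by the estimated ratio p_target(x) / p_source(x). This is a simplified two-step variant written for this post, not the exact procedure of any paper listed above. The 1-D Gaussian data, the function names, and the hyperparameters are all assumptions made for the example.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_domain_classifier(source_x, target_x, lr=0.1, epochs=500):
    """Fit p(target | x) = sigmoid(w * x + b) on 1-D inputs by batch gradient descent."""
    # Label source points 0 and target points 1.
    data = [(x, 0.0) for x in source_x] + [(x, 1.0) for x in target_x]
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in data:
            err = sigmoid(w * x + b) - y  # gradient of the log loss w.r.t. the logit
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def importance_weights(source_x, w, b, n_source, n_target):
    """Estimate p_target(x) / p_source(x) for every source point.

    By Bayes' rule the density ratio equals the classifier odds
    p(target | x) / p(source | x) times the prior ratio n_source / n_target.
    """
    prior_ratio = n_source / n_target
    weights = []
    for x in source_x:
        p = sigmoid(w * x + b)
        p = min(max(p, 1e-6), 1.0 - 1e-6)  # avoid division by zero
        weights.append(prior_ratio * p / (1.0 - p))
    return weights


# Hypothetical toy data: the target inputs are shifted to the right of the source inputs.
random.seed(0)
source_x = [random.gauss(0.0, 1.0) for _ in range(200)]
target_x = [random.gauss(1.0, 1.0) for _ in range(200)]

w, b = fit_domain_classifier(source_x, target_x)
weights = importance_weights(source_x, w, b, len(source_x), len(target_x))
```

The resulting `weights` would typically be passed as per-example weights when training the actual task model on the labeled source data, so that source points resembling the target domain count more.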

Representation Learning Based:

[5] John Blitzer et al. Domain Adaptation with Structural Correspondence Learning. 2006. Describes the projection-learning technique from this section.

[6] Shai Ben-David et al. Analysis of Representations for Domain Adaptation. 2007. Gives an early version of the discrepancy distance and its relation to adaptation error.

[7] John Blitzer et al. Domain Adaptation for Sentiment Classification. 2007. Describes an application of projection learning to sentiment classification. These are the results from the previous section.

[8] Yishay Mansour et al. Domain Adaptation: Learning Bounds and Algorithms. 2009. Generalizes the discrepancy distance to arbitrary losses and gives a tighter bound than above. Also describes techniques for learning instance weights to directly minimize discrepancy distance.

Feature-Based Supervised Adaptation:

[9] T. Evgeniou and M. Pontil. Regularized Multi-task Learning. 2004.

[10] Hal Daume III. Frustratingly Easy Domain Adaptation. 2007. Describes the feature replication algorithm from this section.

[11] Kilian Weinberger et al. Feature Hashing for Large Scale Multitask Learning. 2009. Describes the feature hashing technique for handling millions of domains or tasks simultaneously.

[12] A. Kumar et al. Frustratingly Easy Semi-supervised Domain Adaptation. 2010. Shows how to incorporate unlabeled data in the feature replication framework.
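
The feature replication idea in [10] is simple enough to sketch in a few lines: every feature gets a shared copy plus a copy tagged with its domain, so a linear model can learn shared and domain-specific weights side by side. The second function hashes the augmented feature names into a fixed-size vector with a sign hash, in the spirit of [11]. This is a minimal sketch. The function names, the "shared:" prefix, the use of MD5, and the bucket count are assumptions for illustration, not details taken from either paper.

```python
import hashlib


def augment(features, domain, domains=("source", "target")):
    """Map a sparse feature dict to the replicated feature space.

    Each feature is copied twice: once under a shared name and once under a
    domain-specific name. Copies for the other domains are implicitly zero.
    """
    if domain not in domains:
        raise ValueError("unknown domain: " + domain)
    augmented = {}
    for name, value in features.items():
        augmented["shared:" + name] = value
        augmented[domain + ":" + name] = value
    return augmented


def _stable_hash(token, salt=""):
    # Python's built-in hash() is randomized per process for strings,
    # so a digest is used to keep the mapping reproducible.
    digest = hashlib.md5((salt + token).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def hash_features(augmented, num_buckets=16):
    """Project named features into a fixed-size dense vector with a sign hash."""
    vec = [0.0] * num_buckets
    for name, value in augmented.items():
        index = _stable_hash(name) % num_buckets
        sign = 1.0 if _stable_hash(name, salt="sign") % 2 == 0 else -1.0
        vec[index] += sign * value
    return vec


# Hypothetical bag-of-words features for one review.
review = {"good": 1.0, "plot": 2.0}
source_aug = augment(review, "source")
target_aug = augment(review, "target")
hashed = hash_features(source_aug, num_buckets=16)
```

Because only the shared copies overlap between `source_aug` and `target_aug`, any standard linear classifier trained on the augmented vectors can decide per feature whether to share its weight across domains. Hashing keeps the vector size fixed however many domains or tasks are added, at the cost of occasional collisions.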

Parameter-Based Supervised Adaptation:

[13] Olivier Chapelle et al. A machine learning approach to conjoint analysis. 2005.

[14] Kai Yu et al. Learning Gaussian Processes from Multiple Tasks. 2005. Kernelized multi-task learning with parameters linked via GP prior.

[15] Ya Xue et al. Multi-task Learning for Classification with Dirichlet Process Priors. 2007. Learning with parameters linked via DP clustering.

[16] Hal Daume III. Bayesian Multitask Learning with Latent Hierarchies. 2009. Describes the latent hierarchical modeling.

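Recommended past posts:

Model Roundup 16: A Comparison of Seq2Seq Models and a Detailed Look at the Techniques in "Attention Is All You Need"

Deep Learning Models 13: An Overview of Transfer Learning

<Pure Essentials-5> Deep Reinforcement Learning: A Large Paper Collection

<Model Roundup-9> VAE Basics: LVM, MAP, EM, MCMC, Variational Inference (VI)

<Model Roundup-6> CNN-Based Seq2Seq Models: Convolutional Sequence to Sequence

For more, tap "Past Posts" in the official account.

For more classic papers, hands-on experience, and the latest news on deep learning applied to NLP, follow the WeChat official account "深度学习与NLP" or "DeepLearning_NLP", or scan the QR code to follow.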