python训练Faster RCNN&&C++调用训练好的模型进行物体检测-基于opencv3.4.3(超详细)

介绍

上一篇博文讲到tensorflow Object Detection api 基于SSD模型对数据进行训练,然后通过C++版本的opencv进行调用,但是通过实验发现,SSD虽然快但是准确率实在太低了,所以又重新使用Faster RCNN进行重新训练~废话不多说了,开始主要内容介绍了!

训练阶段

配置:GTX1060、I7-8700k~

关于object detection api的配置使用就不多说了,详细请参考:

https://blog.csdn.net/zong596568821xp/article/details/82015126

https://blog.csdn.net/chuquanchang1051/article/details/79804965

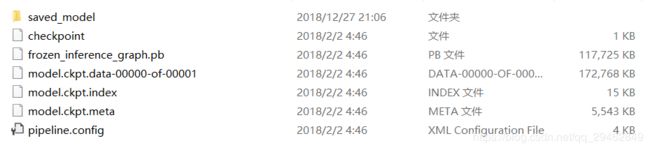

这里是基于faster_rcnn_resnet50_coco模型,下载链接见:faster_rcnn_resnet50_coco_2018_01_28,下载之后解压,解压后的文件如下图所示:

当解压模型后,这时需要到object_detection/samples/configs/文件夹中找到对应的config文件,这里是faster_rcnn_resnet50_coco.config文件,打开config文件,在这里我修改了它的类别数目,由于这里只有一种garbage类别,所以num_classes=1。

model {

faster_rcnn {

num_classes: 1 //有几种类别就写几种

image_resizer {

keep_aspect_ratio_resizer {

min_dimension: 600

max_dimension: 1024

}

除此之外,还需要配置一些文件目录,其中fine_tune_checkpoint就是下载模型中的model.ckpt,input_path和label_map_path就是训练数据和标签,其中object-detection.pbtxt是自己建立的文件,记录着自己的标签信息,因为这里只有一种类别,格式如下:

item {

id: 1

name: 'garbage'

}

use_moving_average: false

}

gradient_clipping_by_norm: 10.0

fine_tune_checkpoint: "model_zoo/faster_rcnn_resnet50_coco_2018_01_28/model.ckpt"

from_detection_checkpoint: true

# Note: The below line limits the training process to 200K steps, which we

# empirically found to be sufficient enough to train the pets dataset. This

# effectively bypasses the learning rate schedule (the learning rate will

# never decay). Remove the below line to train indefinitely.

num_steps: 200000

data_augmentation_options {

random_horizontal_flip {

}

}

}

train_input_reader: {

tf_record_input_reader {

input_path: "train_data/garbage/train/tf_record/train.record"

}

label_map_path: "model_zoo/faster_rcnn_resnet50_coco_2018_01_28/object-detection.pbtxt"

}

eval_config: {

num_examples: 116 #测试集的数据数量,需要修改下

# Note: The below line limits the evaluation process to 10 evaluations.

# Remove the below line to evaluate indefinitely.

max_evals: 10

}

eval_input_reader: {

tf_record_input_reader {

input_path: "train_data/garbage/test/tf_record/test.record"

}

label_map_path: "model_zoo/faster_rcnn_resnet50_coco_2018_01_28/object-detection.pbtxt"

shuffle: false

num_readers: 1

}

修改完配置文件之后,回到object detection api的train.py文件中,train.py文件原来在legacy文件夹中,将其复制出来即可。在train.py文件中,修改以下两项内容:

#模型保存路径

flags.DEFINE_string('train_dir', default='training_model/garbage/',help='')

#修改过的配置文件.config路径

flags.DEFINE_string('pipeline_config_path', default='samples/configs/faster_rcnn_resnet50_coco.config',help='')

模型的转换

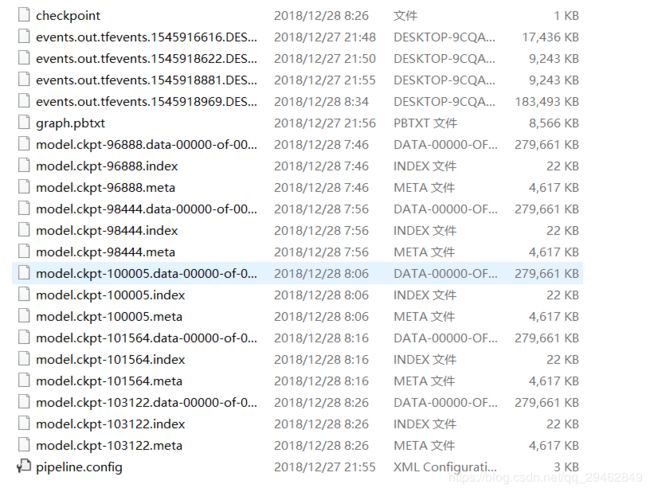

当训练结束后,需要把训练好的模型转换为.pb文件,下图为训练生成的文件。

转换文件为export_inference_graph.py,源代码如下所示,其中转换的模型要为最好的模型:

import tensorflow as tf

from google.protobuf import text_format

from object_detection import exporter

from object_detection.protos import pipeline_pb2

slim = tf.contrib.slim

flags = tf.app.flags

flags.DEFINE_string('input_type', 'image_tensor', 'Type of input node. Can be '

'one of [`image_tensor`, `encoded_image_string_tensor`, '

'`tf_example`]')

flags.DEFINE_string('input_shape', None,

'If input_type is `image_tensor`, this can explicitly set '

'the shape of this input tensor to a fixed size. The '

'dimensions are to be provided as a comma-separated list '

'of integers. A value of -1 can be used for unknown '

'dimensions. If not specified, for an `image_tensor, the '

'default shape will be partially specified as '

'`[None, None, None, 3]`.')

flags.DEFINE_string('pipeline_config_path', 'samples/configs/faster_rcnn_resnet50_coco.config',

'Path to a pipeline_pb2.TrainEvalPipelineConfig config '

'file.')

flags.DEFINE_string('trained_checkpoint_prefix', 'training_model/garbage/model.ckpt-103122',

'Path to trained checkpoint, typically of the form '

'path/to/model.ckpt')

flags.DEFINE_string('output_directory', 'pb_model/faster_rcnn_resnet50_coco', 'Path to write outputs.')

flags.DEFINE_string('config_override', '',

'pipeline_pb2.TrainEvalPipelineConfig '

'text proto to override pipeline_config_path.')

flags.DEFINE_boolean('write_inference_graph', False,

'If true, writes inference graph to disk.')

tf.app.flags.mark_flag_as_required('pipeline_config_path')

tf.app.flags.mark_flag_as_required('trained_checkpoint_prefix')

tf.app.flags.mark_flag_as_required('output_directory')

FLAGS = flags.FLAGS

def main(_):

pipeline_config = pipeline_pb2.TrainEvalPipelineConfig()

with tf.gfile.GFile(FLAGS.pipeline_config_path, 'r') as f:

text_format.Merge(f.read(), pipeline_config)

text_format.Merge(FLAGS.config_override, pipeline_config)

if FLAGS.input_shape:

input_shape = [

int(dim) if dim != '-1' else None

for dim in FLAGS.input_shape.split(',')

]

else:

input_shape = None

exporter.export_inference_graph(

FLAGS.input_type, pipeline_config, FLAGS.trained_checkpoint_prefix,

FLAGS.output_directory, input_shape=input_shape,

write_inference_graph=FLAGS.write_inference_graph)

if __name__ == '__main__':

tf.app.run()

这和最初下载的模型非常相似,但是里面的结构有所不同。opencv在调用faster rcnn等物体检测模型时,还需要一个.pbtxt文件,它可以告诉函数该怎么读取模型,该转换由tf_text_graph_faster_rcnn.py来完成。

详细请参考https://github.com/opencv/opencv/wiki/TensorFlow-Object-Detection-API#generate-a-config-file,该文件在opencv的dnn模块中,下载链接:dnn模块,下载完之后放到object_detection文件夹下即可。

需要修改的地方有input、output、config、num_classes,如果没有num_classes,可以将其加上,也可以不加,.config文件中包含这项。

if __name__ == "__main__":

parser = argparse.ArgumentParser(description='Run this script to get a text graph of '

'Faster-RCNN model from TensorFlow Object Detection API. '

'Then pass it with .pb file to cv::dnn::readNetFromTensorflow function.')

parser.add_argument('--input', default='F:/tensorflow_object_detection/object_detection/pb_model/faster_rcnn_resnet50_coco/frozen_inference_graph.pb',help='Path to frozen TensorFlow graph.')

parser.add_argument('--output', default='F:/tensorflow_object_detection/object_detection/pb_model/faster_rcnn_resnet50_coco/faster_rcnn.pbtxt',help='Path to output text graph.')

parser.add_argument('--config',default='F:/tensorflow_object_detection/object_detection/samples/configs/faster_rcnn_resnet50_coco.config',help='Path to a *.config file is used for training.')

# parser.add_argument('--num_classes', required=True, default=1,help='Path to a *.config file is used for training.')

args = parser.parse_args()

createFasterRCNNGraph(args.input, args.config, args.output)

转换完成之后会生成faster_rcnn.pbtxt文件,然后就交给C++调用吧!除了C++,还有一个opencv-python版本的测试程序:

import cv2 as cv

cvNet = cv.dnn.readNetFromTensorflow('C:/Users/18301/Desktop/faster_rcnn_resnet50_coco/frozen_inference_graph.pb',

'C:/Users/18301/Desktop/faster_rcnn_resnet50_coco/faster_rcnn.pbtxt')

img = cv.imread('C:/Users/18301/Desktop/images/image12.jpg')

rows = img.shape[0]

cols = img.shape[1]

cvNet.setInput(cv.dnn.blobFromImage(img, size=(300, 300), swapRB=True, crop=False))

cvOut = cvNet.forward()

print(cvOut)

for detection in cvOut[0,0,:,:]:

score = float(detection[2])

if score > 0.3:

left = detection[3] * cols

top = detection[4] * rows

right = detection[5] * cols

bottom = detection[6] * rows

cv.rectangle(img, (int(left), int(top)), (int(right), int(bottom)), (23, 230, 210), thickness=2)

cv.imshow('img', img)

cv.waitKey()

Opencv调用Faster RCNN源代码

#include

#include

#include

#include 注意:cv::Mat blob = cv::dnn::blobFromImage(frame, 1, Size(inWidth, inHeight), false, true);这里会导致faster rcnn检测结果乱码,自己也是查了好久才发现,按这个格式来,亲测有效!

这里的宽度和高度不能太小了,否则识别率会降低,但是大的尺寸会消耗比较多的时间,自己找一个折衷吧!

const size_t inWidth = 600;

const size_t inHeight = 600;

实验结果