Reading Hive Data with Spark and Troubleshooting Related Issues

Example code

SparkHiveAPP main class

Note:

Place core-site.xml, hdfs-site.xml, yarn-site.xml, mapred-site.xml, and hive-site.xml under the resources directory; the program needs these configuration files at runtime.

    import org.apache.log4j.{Level, Logger}
    import org.apache.spark.SparkConf
    import org.apache.spark.sql.SparkSession

    object SparkHiveAPP {
      def main(args: Array[String]): Unit = {
        Logger.getLogger("org").setLevel(Level.WARN)
        /**
         * Without System.setProperty("HADOOP_USER_NAME", "root"), the following exception is thrown:
         * org.apache.hadoop.security.AccessControlException: Permission denied
         */
        System.setProperty("HADOOP_USER_NAME", "root")
        val conf = new SparkConf()
          .setIfMissing("spark.master", "local[2]")
          .set("spark.sql.warehouse.dir", "/user/hive/warehouse")
          .setAppName("Spark_Hive_APP")
        val spark: SparkSession = SparkSession.builder().config(conf)
          .enableHiveSupport()
          .getOrCreate()
        spark.sparkContext.setLogLevel("WARN")
        spark.sql("SELECT * FROM test.test1").show()
      }
    }
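As a quick sanity check for the note about configuration files, the sketch below reports which of the five files cannot be found on the classpath. The object name `ConfigCheck` and the helper `missingConfigs` are hypothetical names introduced only for this illustration; they are not part of the original project.

```scala
// Sketch: report which Hadoop/Hive client configuration files are missing from the classpath.
// ConfigCheck and missingConfigs are illustrative names, not part of the original project.
object ConfigCheck {
  // File names taken from the note above.
  val requiredConfigs: Seq[String] = Seq(
    "core-site.xml", "hdfs-site.xml", "yarn-site.xml", "mapred-site.xml", "hive-site.xml")

  // Returns the names that the given class loader cannot resolve as resources.
  def missingConfigs(names: Seq[String], loader: ClassLoader): Seq[String] =
    names.filter(name => loader.getResource(name) == null)

  def main(args: Array[String]): Unit = {
    val missing = missingConfigs(requiredConfigs, getClass.getClassLoader)
    if (missing.isEmpty) println("All configuration files found on the classpath.")
    else println(s"Missing from the classpath: ${missing.mkString(", ")}")
  }
}
```

Running this from the same module as SparkHiveAPP, before starting Spark, should make a missing hive-site.xml visible early instead of surfacing later as a metastore connection problem.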

pom.xml file

    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>
      <groupId>com.cloudera</groupId>
      <artifactId>RemoteSubmitSparkToYarn</artifactId>
      <version>1.0-SNAPSHOT</version>
      <packaging>jar</packaging>
      <name>RemoteSubmitSparkToYarn</name>

      <repositories>
        <repository>
          <id>cloudera</id>
          <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
          <name>Cloudera Repositories</name>
          <releases>
            <enabled>true</enabled>
          </releases>
          <snapshots>
            <enabled>false</enabled>
          </snapshots>
        </repository>
      </repositories>

      <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
        <java.version>1.8</java.version>
        <scala.version>2.11.12</scala.version>
        <hbase.version>1.3.0</hbase.version>
        <hive.version>1.2.0</hive.version>
        <kafka.version>0.10.0.1</kafka.version>
        <spark.version>2.2.0</spark.version>
        <kafka.scope>compile</kafka.scope>
        <provided.scope>compile</provided.scope>
      </properties>

      <dependencies>
        <dependency>
          <groupId>org.scala-lang</groupId>
          <artifactId>scala-library</artifactId>
          <version>${scala.version}</version>
          <scope>${provided.scope}</scope>
        </dependency>
        <dependency>
          <groupId>org.scala-lang</groupId>
          <artifactId>scala-compiler</artifactId>
          <version>${scala.version}</version>
          <scope>${provided.scope}</scope>
        </dependency>
        <dependency>
          <groupId>org.scala-lang</groupId>
          <artifactId>scala-reflect</artifactId>
          <version>${scala.version}</version>
          <scope>${provided.scope}</scope>
        </dependency>
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-core_2.11</artifactId>
          <version>${spark.version}</version>
          <exclusions>
            <exclusion>
              <groupId>org.glassfish.jersey.bundles.repackaged</groupId>
              <artifactId>jersey-guava</artifactId>
            </exclusion>
          </exclusions>
          <scope>${provided.scope}</scope>
        </dependency>
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-streaming_2.11</artifactId>
          <version>${spark.version}</version>
          <scope>${provided.scope}</scope>
        </dependency>
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-sql_2.11</artifactId>
          <version>${spark.version}</version>
          <scope>${provided.scope}</scope>
        </dependency>
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-hive_2.11</artifactId>
          <version>${spark.version}</version>
          <scope>${provided.scope}</scope>
        </dependency>
        <dependency>
          <groupId>org.apache.hive</groupId>
          <artifactId>hive-exec</artifactId>
          <version>${hive.version}</version>
        </dependency>
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-yarn_2.11</artifactId>
          <version>${spark.version}</version>
          <scope>${provided.scope}</scope>
        </dependency>
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-sql-kafka-0-10_2.11</artifactId>
          <version>${spark.version}</version>
          <scope>${provided.scope}</scope>
        </dependency>
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-streaming-kafka-0-10_2.11</artifactId>
          <version>${spark.version}</version>
          <scope>${provided.scope}</scope>
        </dependency>
        <dependency>
          <groupId>org.apache.kafka</groupId>
          <artifactId>kafka_2.11</artifactId>
          <version>${kafka.version}</version>
          <scope>${kafka.scope}</scope>
        </dependency>
        <dependency>
          <groupId>org.apache.kafka</groupId>
          <artifactId>kafka-clients</artifactId>
          <version>0.10.0.1</version>
          <scope>${kafka.scope}</scope>
        </dependency>
      </dependencies>

      <build>
        <pluginManagement>
          <plugins>
            <plugin>
              <groupId>org.apache.maven.plugins</groupId>
              <artifactId>maven-compiler-plugin</artifactId>
              <version>3.8.0</version>
              <configuration>
                <source>1.8</source>
                <target>1.8</target>
              </configuration>
            </plugin>
            <plugin>
              <groupId>org.apache.maven.plugins</groupId>
              <artifactId>maven-resources-plugin</artifactId>
              <version>3.0.2</version>
              <configuration>
                <encoding>UTF-8</encoding>
              </configuration>
            </plugin>
            <plugin>
              <groupId>net.alchim31.maven</groupId>
              <artifactId>scala-maven-plugin</artifactId>
              <version>3.2.2</version>
              <executions>
                <execution>
                  <goals>
                    <goal>compile</goal>
                    <goal>testCompile</goal>
                  </goals>
                </execution>
              </executions>
            </plugin>
            <plugin>
              <groupId>org.apache.maven.plugins</groupId>
              <artifactId>maven-resources-plugin</artifactId>
              <version>3.0.2</version>
              <configuration>
                <encoding>UTF-8</encoding>
              </configuration>
            </plugin>
          </plugins>
        </pluginManagement>

        <plugins>
          <plugin>
            <groupId>net.alchim31.maven</groupId>
            <artifactId>scala-maven-plugin</artifactId>
            <executions>
              <execution>
                <id>scala-compile-first</id>
                <phase>process-resources</phase>
                <goals>
                  <goal>add-source</goal>
                  <goal>compile</goal>
                </goals>
              </execution>
              <execution>
                <id>scala-test-compile</id>
                <phase>process-test-resources</phase>
                <goals>
                  <goal>testCompile</goal>
                </goals>
              </execution>
            </executions>
          </plugin>
          <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
            <executions>
              <execution>
                <phase>compile</phase>
                <goals>
                  <goal>compile</goal>
                </goals>
              </execution>
            </executions>
          </plugin>
          <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-shade-plugin</artifactId>
            <version>2.4.3</version>
            <executions>
              <execution>
                <phase>package</phase>
                <goals>
                  <goal>shade</goal>
                </goals>
                <configuration>
                  <filters>
                    <filter>
                      <artifact>*:*</artifact>
                      <excludes>
                        <exclude>META-INF/*.SF</exclude>
                        <exclude>META-INF/*.DSA</exclude>
                        <exclude>META-INF/*.RSA</exclude>
                      </excludes>
                    </filter>
                  </filters>
                </configuration>
              </execution>
            </executions>
          </plugin>
        </plugins>

        <resources>
          <resource>
            <directory>${basedir}/src/main/resources</directory>
            <excludes>
              <exclude>env/*/*</exclude>
            </excludes>
            <includes>
              <include>**/*</include>
            </includes>
          </resource>
          <resource>
            <directory>${basedir}/src/main/resources/env/${profile.active}</directory>
            <includes>
              <include>**/*.properties</include>
              <include>**/*.xml</include>
            </includes>
          </resource>
        </resources>
      </build>

      <profiles>
        <profile>
          <id>dev</id>
          <properties>
            <profile.active>dev</profile.active>
          </properties>
          <activation>
            <activeByDefault>true</activeByDefault>
          </activation>
        </profile>
        <profile>
          <id>test</id>
          <properties>
            <profile.active>test</profile.active>
          </properties>
        </profile>
        <profile>
          <id>prod</id>
          <properties>
            <profile.active>prod</profile.active>
          </properties>
        </profile>
      </profiles>
    </project>

Run output

    Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
    18/06/27 10:30:40 INFO metastore: Trying to connect to metastore with URI thrift://cdh01:9083
    18/06/27 10:30:41 WARN ShellBasedUnixGroupsMapping: got exception trying to get groups for user root: GetLocalGroupsForUser error (1332): ?????????????????
    18/06/27 10:30:41 WARN UserGroupInformation: No groups available for user root
    18/06/27 10:30:41 INFO metastore: Connected to metastore.
    18/06/27 10:30:42 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    18/06/27 10:30:42 WARN UserGroupInformation: No groups available for user root
    18/06/27 10:30:42 WARN UserGroupInformation: No groups available for user root
    18/06/27 10:30:42 WARN UserGroupInformation: No groups available for user root
    18/06/27 10:30:42 WARN UserGroupInformation: No groups available for user root
    +---+--------+------------+
    | id|    name|       hobby|
    +---+--------+------------+
    |  1|zhangsan|[唱歌, 跳舞, 游泳]|
    |  2|    lisi|   [打游戏, 篮球]|
    |  3|  wangwu|    [唱歌, 游泳]|
    +---+--------+------------+

    Process finished with exit code 0


Problems encountered

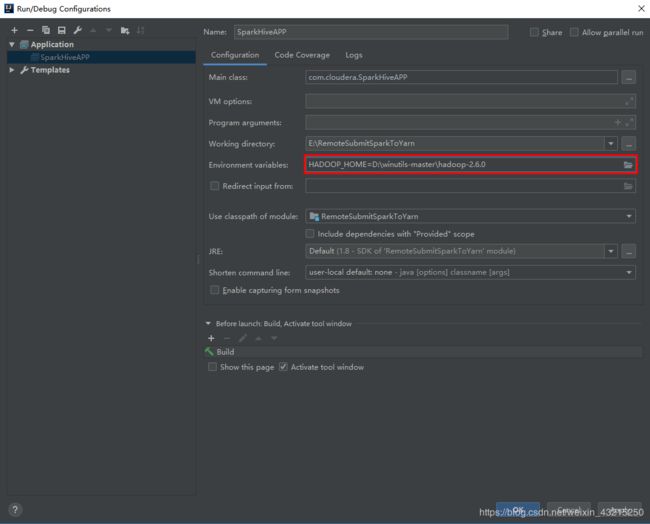

The winutils binary cannot be found locally

Error log:

    18/06/27 10:35:18 ERROR Shell: Failed to locate the winutils binary in the hadoop binary path
    java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.
        at org.apache.hadoop.util.Shell.getQualifiedBinPath(Shell.java:378)
        at org.apache.hadoop.util.Shell.getWinUtilsPath(Shell.java:393)
        at org.apache.hadoop.util.Shell.getGroupsForUserCommand(Shell.java:163)
        at org.apache.hadoop.security.ShellBasedUnixGroupsMapping.getUnixGroups(ShellBasedUnixGroupsMapping.java:84)
        at org.apache.hadoop.security.ShellBasedUnixGroupsMapping.getGroups(ShellBasedUnixGroupsMapping.java:52)
        at org.apache.hadoop.security.Groups$GroupCacheLoader.fetchGroupList(Groups.java:231)
        at org.apache.hadoop.security.Groups$GroupCacheLoader.load(Groups.java:211)
        at org.apache.hadoop.security.Groups$GroupCacheLoader.load(Groups.java:199)
        at com.google.common.cache.LocalCache$LoadingValueReference.loadFuture(LocalCache.java:3524)
        at com.google.common.cache.LocalCache$Segment.loadSync(LocalCache.java:2317)
        at com.google.common.cache.LocalCache$Segment.lockedGetOrLoad(LocalCache.java:2280)
        at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2195)
        at com.google.common.cache.LocalCache.get(LocalCache.java:3934)
        at com.google.common.cache.LocalCache.getOrLoad(LocalCache.java:3938)
        at com.google.common.cache.LocalCache$LocalLoadingCache.get(LocalCache.java:4821)
        at org.apache.hadoop.security.Groups.getGroups(Groups.java:173)
        at org.apache.hadoop.security.UserGroupInformation.getGroupNames(UserGroupInformation.java:1552)
        at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.open(HiveMetaStoreClient.java:436)
        at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:236)
        at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:74)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
        at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1521)
        at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:86)
        at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:132)
        at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:104)
        at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3005)
        at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3024)
        at org.apache.hadoop.hive.ql.metadata.Hive.getAllDatabases(Hive.java:1234)
        at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:174)
        at org.apache.hadoop.hive.ql.metadata.Hive.<clinit>(Hive.java:166)
        at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:503)
        at org.apache.spark.sql.hive.client.HiveClientImpl.<init>(HiveClientImpl.scala:191)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
        at org.apache.spark.sql.hive.client.IsolatedClientLoader.createClient(IsolatedClientLoader.scala:264)
        at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:362)
        at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:266)
        at org.apache.spark.sql.hive.HiveExternalCatalog.client$lzycompute(HiveExternalCatalog.scala:66)
        at org.apache.spark.sql.hive.HiveExternalCatalog.client(HiveExternalCatalog.scala:65)
        at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$databaseExists$1.apply$mcZ$sp(HiveExternalCatalog.scala:194)
        at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$databaseExists$1.apply(HiveExternalCatalog.scala:194)
        at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$databaseExists$1.apply(HiveExternalCatalog.scala:194)
        at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:97)
        at org.apache.spark.sql.hive.HiveExternalCatalog.databaseExists(HiveExternalCatalog.scala:193)
        at org.apache.spark.sql.internal.SharedState.externalCatalog$lzycompute(SharedState.scala:105)
        at org.apache.spark.sql.internal.SharedState.externalCatalog(SharedState.scala:93)
        at org.apache.spark.sql.hive.HiveSessionStateBuilder.externalCatalog(HiveSessionStateBuilder.scala:39)
        at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog$lzycompute(HiveSessionStateBuilder.scala:54)
        at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog(HiveSessionStateBuilder.scala:52)
        at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog(HiveSessionStateBuilder.scala:35)
        at org.apache.spark.sql.internal.BaseSessionStateBuilder.build(BaseSessionStateBuilder.scala:289)
        at org.apache.spark.sql.SparkSession$.org$apache$spark$sql$SparkSession$$instantiateSessionState(SparkSession.scala:1050)
        at org.apache.spark.sql.SparkSession$$anonfun$sessionState$2.apply(SparkSession.scala:130)
        at org.apache.spark.sql.SparkSession$$anonfun$sessionState$2.apply(SparkSession.scala:130)
        at scala.Option.getOrElse(Option.scala:121)
        at org.apache.spark.sql.SparkSession.sessionState$lzycompute(SparkSession.scala:129)
        at org.apache.spark.sql.SparkSession.sessionState(SparkSession.scala:126)
        at org.apache.spark.sql.SparkSession$Builder$$anonfun$getOrCreate$5.apply(SparkSession.scala:938)
        at org.apache.spark.sql.SparkSession$Builder$$anonfun$getOrCreate$5.apply(SparkSession.scala:938)
        at scala.collection.mutable.HashMap$$anonfun$foreach$1.apply(HashMap.scala:130)
        at scala.collection.mutable.HashMap$$anonfun$foreach$1.apply(HashMap.scala:130)
        at scala.collection.mutable.HashTable$class.foreachEntry(HashTable.scala:236)
        at scala.collection.mutable.HashMap.foreachEntry(HashMap.scala:40)
        at scala.collection.mutable.HashMap.foreach(HashMap.scala:130)
        at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:938)
        at com.cloudera.SparkHiveAPP$.main(SparkHiveAPP.scala:24)
        at com.cloudera.SparkHiveAPP.main(SparkHiveAPP.scala)

Solution:
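The `null` in `null\bin\winutils.exe` indicates that Hadoop could not determine its home directory: it reads the `hadoop.home.dir` system property and falls back to the `HADOOP_HOME` environment variable, and neither was set. A commonly used workaround on Windows, sketched below under assumptions, is to place a `winutils.exe` matching the Hadoop version under `<dir>\bin` and point `hadoop.home.dir` at `<dir>` before the SparkSession is created. The object `WinutilsSetup`, its helpers, and the path `C:\hadoop` are hypothetical and chosen only for illustration.

```scala
import java.io.File

// Sketch of a common workaround; WinutilsSetup and C:\hadoop are illustrative assumptions.
object WinutilsSetup {
  // True when <hadoopHome>/bin/winutils.exe exists as a regular file.
  def hasWinutils(hadoopHome: String): Boolean =
    new File(new File(hadoopHome, "bin"), "winutils.exe").isFile

  // Sets hadoop.home.dir only on Windows and only when winutils.exe is present.
  // Returns true if the property was set.
  def configureHadoopHome(hadoopHome: String): Boolean = {
    val onWindows = System.getProperty("os.name", "").toLowerCase.contains("windows")
    if (onWindows && hasWinutils(hadoopHome)) {
      System.setProperty("hadoop.home.dir", hadoopHome)
      true
    } else false
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical location; adjust to wherever winutils.exe was unpacked.
    val configured = configureHadoopHome("C:\\hadoop")
    println(s"hadoop.home.dir configured: $configured")
  }
}
```

In SparkHiveAPP, a call such as `WinutilsSetup.configureHadoopHome(...)` would go at the top of `main`, before `SparkSession.builder()`, because Hadoop resolves the path when its `Shell` class is first loaded. Setting the `HADOOP_HOME` environment variable to the same directory should have the same effect.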

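Cannot access the metastore; unable to instantiate SessionHiveMetaStoreClient

Cause: After the HBase integration JARs are added to the pom.xml above, accessing Hive throws the exception below, which differs from the error seen without the HBase integration. The solution is the same as above.

Error log: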

    log4j:WARN No appenders could be found for logger (org.apache.hadoop.util.Shell).
    log4j:WARN Please initialize the log4j system properly.
    log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.
    Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
    18/06/27 10:47:01 INFO metastore: Trying to connect to metastore with URI thrift://cdh01:9083
    18/06/27 10:47:01 WARN Hive: Failed to access metastore. This class should not accessed in runtime.
    org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient
        at org.apache.hadoop.hive.ql.metadata.Hive.getAllDatabases(Hive.java:1236)
        at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:174)
        at org.apache.hadoop.hive.ql.metadata.Hive.<clinit>(Hive.java:166)
        at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:503)
        at org.apache.spark.sql.hive.client.HiveClientImpl.<init>(HiveClientImpl.scala:191)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
        at org.apache.spark.sql.hive.client.IsolatedClientLoader.createClient(IsolatedClientLoader.scala:264)
        at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:362)
        at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:266)
        at org.apache.spark.sql.hive.HiveExternalCatalog.client$lzycompute(HiveExternalCatalog.scala:66)
        at org.apache.spark.sql.hive.HiveExternalCatalog.client(HiveExternalCatalog.scala:65)
        at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$databaseExists$1.apply$mcZ$sp(HiveExternalCatalog.scala:194)
        at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$databaseExists$1.apply(HiveExternalCatalog.scala:194)
        at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$databaseExists$1.apply(HiveExternalCatalog.scala:194)
        at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:97)
        at org.apache.spark.sql.hive.HiveExternalCatalog.databaseExists(HiveExternalCatalog.scala:193)
        at org.apache.spark.sql.internal.SharedState.externalCatalog$lzycompute(SharedState.scala:105)
        at org.apache.spark.sql.internal.SharedState.externalCatalog(SharedState.scala:93)
        at org.apache.spark.sql.hive.HiveSessionStateBuilder.externalCatalog(HiveSessionStateBuilder.scala:39)
        at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog$lzycompute(HiveSessionStateBuilder.scala:54)
        at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog(HiveSessionStateBuilder.scala:52)
        at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog(HiveSessionStateBuilder.scala:35)
        at org.apache.spark.sql.internal.BaseSessionStateBuilder.build(BaseSessionStateBuilder.scala:289)
        at org.apache.spark.sql.SparkSession$.org$apache$spark$sql$SparkSession$$instantiateSessionState(SparkSession.scala:1050)
        at org.apache.spark.sql.SparkSession$$anonfun$sessionState$2.apply(SparkSession.scala:130)
        at org.apache.spark.sql.SparkSession$$anonfun$sessionState$2.apply(SparkSession.scala:130)
        at scala.Option.getOrElse(Option.scala:121)
        at org.apache.spark.sql.SparkSession.sessionState$lzycompute(SparkSession.scala:129)
        at org.apache.spark.sql.SparkSession.sessionState(SparkSession.scala:126)
        at org.apache.spark.sql.SparkSession$Builder$$anonfun$getOrCreate$5.apply(SparkSession.scala:938)
        at org.apache.spark.sql.SparkSession$Builder$$anonfun$getOrCreate$5.apply(SparkSession.scala:938)
        at scala.collection.mutable.HashMap$$anonfun$foreach$1.apply(HashMap.scala:130)
        at scala.collection.mutable.HashMap$$anonfun$foreach$1.apply(HashMap.scala:130)
        at scala.collection.mutable.HashTable$class.foreachEntry(HashTable.scala:236)
        at scala.collection.mutable.HashMap.foreachEntry(HashMap.scala:40)
        at scala.collection.mutable.HashMap.foreach(HashMap.scala:130)
        at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:938)
        at com.cloudera.SparkHiveAPP$.main(SparkHiveAPP.scala:24)
        at com.cloudera.SparkHiveAPP.main(SparkHiveAPP.scala)
    Caused by: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient
        at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1523)
        at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:86)
        at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:132)
        at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:104)
        at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3005)
        at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3024)
        at org.apache.hadoop.hive.ql.metadata.Hive.getAllDatabases(Hive.java:1234)
        ... 41 more
    Caused by: java.lang.reflect.InvocationTargetException
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
        at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1521)
        ... 47 more
    Caused by: java.lang.NullPointerException
        at java.lang.ProcessBuilder.start(ProcessBuilder.java:1012)
        at org.apache.hadoop.util.Shell.runCommand(Shell.java:482)
        at org.apache.hadoop.util.Shell.run(Shell.java:455)
        at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:702)
        at org.apache.hadoop.util.Shell.execCommand(Shell.java:791)
        at org.apache.hadoop.util.Shell.execCommand(Shell.java:774)
        at org.apache.hadoop.security.ShellBasedUnixGroupsMapping.getUnixGroups(ShellBasedUnixGroupsMapping.java:84)
        at org.apache.hadoop.security.ShellBasedUnixGroupsMapping.getGroups(ShellBasedUnixGroupsMapping.java:52)
        at org.apache.hadoop.security.Groups.getGroups(Groups.java:139)
        at org.apache.hadoop.security.UserGroupInformation.getGroupNames(UserGroupInformation.java:1474)
        at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.open(HiveMetaStoreClient.java:436)
        at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:236)
        at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:74)
        ... 52 more
    18/06/27 10:47:01 INFO metastore: Trying to connect to metastore with URI thrift://cdh01:9083
    Exception in thread "main" java.lang.IllegalArgumentException: Error while instantiating 'org.apache.spark.sql.hive.HiveSessionStateBuilder':
        at org.apache.spark.sql.SparkSession$.org$apache$spark$sql$SparkSession$$instantiateSessionState(SparkSession.scala:1053)
        at org.apache.spark.sql.SparkSession$$anonfun$sessionState$2.apply(SparkSession.scala:130)
        at org.apache.spark.sql.SparkSession$$anonfun$sessionState$2.apply(SparkSession.scala:130)
        at scala.Option.getOrElse(Option.scala:121)
        at org.apache.spark.sql.SparkSession.sessionState$lzycompute(SparkSession.scala:129)
        at org.apache.spark.sql.SparkSession.sessionState(SparkSession.scala:126)
        at org.apache.spark.sql.SparkSession$Builder$$anonfun$getOrCreate$5.apply(SparkSession.scala:938)
        at org.apache.spark.sql.SparkSession$Builder$$anonfun$getOrCreate$5.apply(SparkSession.scala:938)
        at scala.collection.mutable.HashMap$$anonfun$foreach$1.apply(HashMap.scala:130)
        at scala.collection.mutable.HashMap$$anonfun$foreach$1.apply(HashMap.scala:130)
        at scala.collection.mutable.HashTable$class.foreachEntry(HashTable.scala:236)
        at scala.collection.mutable.HashMap.foreachEntry(HashMap.scala:40)
        at scala.collection.mutable.HashMap.foreach(HashMap.scala:130)
        at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:938)
        at com.cloudera.SparkHiveAPP$.main(SparkHiveAPP.scala:24)
        at com.cloudera.SparkHiveAPP.main(SparkHiveAPP.scala)
    Caused by: org.apache.spark.sql.AnalysisException: java.lang.RuntimeException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient;
        at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:106)
        at org.apache.spark.sql.hive.HiveExternalCatalog.databaseExists(HiveExternalCatalog.scala:193)
        at org.apache.spark.sql.internal.SharedState.externalCatalog$lzycompute(SharedState.scala:105)
        at org.apache.spark.sql.internal.SharedState.externalCatalog(SharedState.scala:93)
        at org.apache.spark.sql.hive.HiveSessionStateBuilder.externalCatalog(HiveSessionStateBuilder.scala:39)
        at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog$lzycompute(HiveSessionStateBuilder.scala:54)
        at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog(HiveSessionStateBuilder.scala:52)
        at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog(HiveSessionStateBuilder.scala:35)
        at org.apache.spark.sql.internal.BaseSessionStateBuilder.build(BaseSessionStateBuilder.scala:289)
        at org.apache.spark.sql.SparkSession$.org$apache$spark$sql$SparkSession$$instantiateSessionState(SparkSession.scala:1050)
        ... 15 more
    Caused by: java.lang.RuntimeException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient
        at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:522)
        at org.apache.spark.sql.hive.client.HiveClientImpl.<init>(HiveClientImpl.scala:191)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
        at org.apache.spark.sql.hive.client.IsolatedClientLoader.createClient(IsolatedClientLoader.scala:264)
        at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:362)
        at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:266)
        at org.apache.spark.sql.hive.HiveExternalCatalog.client$lzycompute(HiveExternalCatalog.scala:66)
        at org.apache.spark.sql.hive.HiveExternalCatalog.client(HiveExternalCatalog.scala:65)
        at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$databaseExists$1.apply$mcZ$sp(HiveExternalCatalog.scala:194)
        at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$databaseExists$1.apply(HiveExternalCatalog.scala:194)
        at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$databaseExists$1.apply(HiveExternalCatalog.scala:194)
        at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:97)
        ... 24 more
    Caused by: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient
        at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1523)
        at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:86)
        at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:132)
        at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:104)
        at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3005)
        at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3024)
        at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:503)
        ... 38 more
    Caused by: java.lang.reflect.InvocationTargetException
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
        at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1521)
        ... 44 more
    Caused by: java.lang.NullPointerException
        at java.lang.ProcessBuilder.start(ProcessBuilder.java:1012)
        at org.apache.hadoop.util.Shell.runCommand(Shell.java:482)
        at org.apache.hadoop.util.Shell.run(Shell.java:455)
        at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:702)
        at org.apache.hadoop.util.Shell.execCommand(Shell.java:791)
        at org.apache.hadoop.util.Shell.execCommand(Shell.java:774)
        at org.apache.hadoop.security.ShellBasedUnixGroupsMapping.getUnixGroups(ShellBasedUnixGroupsMapping.java:84)
        at org.apache.hadoop.security.ShellBasedUnixGroupsMapping.getGroups(ShellBasedUnixGroupsMapping.java:52)
        at org.apache.hadoop.security.Groups.getGroups(Groups.java:139)
        at org.apache.hadoop.security.UserGroupInformation.getGroupNames(UserGroupInformation.java:1474)
        at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.open(HiveMetaStoreClient.java:436)
        at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:236)
        at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:74)
        ... 49 more

    Process finished with exit code 1