Integrating Spark2 with Phoenix on HDP

Environment:

Operating system: CentOS 7.2 (1511)

Ambari: 2.6.2.0

HDP: 2.6.5.0

Spark: 2.x (HDP)

Phoenix: 4.7.x (HDP)

条件:

1. HBase 安装完成;

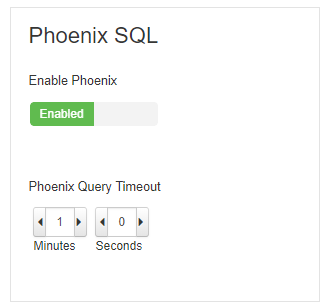

2. Phoenix已经启用,ambari界面如下所示:

3. Spark2 安装完成。

配置方式:

方式一

1. 进入 Ambari Spark2 配置界面;

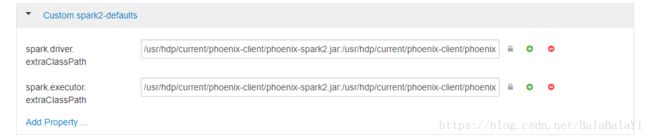

2. 找到 "Custom spark2-defaults" 并添加如下配置项:

spark.driver.extraClassPath=/usr/hdp/current/phoenix-client/phoenix-spark2.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar

spark.executor.extraClassPath=/usr/hdp/current/phoenix-client/phoenix-spark2.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar

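As shown in the figure:

Notes:

1. Both properties point to the default installation directories of an Ambari-managed cluster; check beforehand whether those directories have been changed;

2. Because HDP 2.6.5.0 ships Phoenix 4.7.0, both JARs (phoenix-spark2.jar and phoenix-client.jar) must be referenced, in the order shown above;

3. For the later HDP 3.x releases (HDP 3.0.0 ships Phoenix 5.0.0), referencing the single phoenix-client JAR is sufficient.

The official Phoenix documentation is at: https://phoenix.apache.org/phoenix_spark.html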
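The path and ordering notes above can be checked on a cluster node before changing the Ambari configuration. The following is a minimal sketch rather than part of the original setup: the helper names `build_phoenix_classpath` and `missing_jars` are hypothetical, and the JAR paths are the HDP defaults quoted in this article, which may differ on your cluster.

```python
import os

# Default HDP locations quoted in this article; adjust if your install differs.
PHOENIX_SPARK2_JAR = "/usr/hdp/current/phoenix-client/phoenix-spark2.jar"
PHOENIX_CLIENT_JAR = "/usr/hdp/current/phoenix-client/phoenix-client.jar"


def build_phoenix_classpath(jars=(PHOENIX_SPARK2_JAR, PHOENIX_CLIENT_JAR)):
    """Join the JAR paths with ':' while preserving the given order."""
    return ":".join(jars)


def missing_jars(classpath):
    """Return the classpath entries that do not exist on this host."""
    return [p for p in classpath.split(":") if p and not os.path.exists(p)]
```

If `missing_jars(build_phoenix_classpath())` returns an empty list on a node, both default paths exist there and the string from `build_phoenix_classpath()` can be used as the value of the two properties.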

Test:

Start a Spark client shell, for example:

./bin/pyspark

Then run:

df = spark.read.format("org.apache.phoenix.spark").option("table", "TEST").option("zkUrl", "hostname:2181").load()

df.show()

This prints the contents of the TEST table.
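For a scripted rather than interactive check, the same read can be wrapped in a small function. This is only a sketch: `load_phoenix_table` is a hypothetical helper name, and the table name and ZooKeeper address are passed in by the caller; the data source name and the `table` and `zkUrl` options are the ones used in the test above.

```python
def load_phoenix_table(spark, table, zk_url):
    """Load a Phoenix table as a DataFrame through the phoenix-spark data source.

    `spark` is an existing SparkSession; `zk_url` is the ZooKeeper quorum,
    for example "hostname:2181".
    """
    return (
        spark.read.format("org.apache.phoenix.spark")
        .option("table", table)
        .option("zkUrl", zk_url)
        .load()
    )
```

In the pyspark shell, `load_phoenix_table(spark, "TEST", "hostname:2181").show()` would be equivalent to the two statements above.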

Method 2

Override these properties on the command line when running spark-shell or spark-submit:

spark-shell --conf "spark.executor.extraClassPath=/usr/hdp/current/phoenix-client/phoenix-spark2.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar" --conf "spark.driver.extraClassPath=/usr/hdp/current/phoenix-client/phoenix-spark2.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar"

or

spark-submit --conf "spark.executor.extraClassPath=/usr/hdp/current/phoenix-client/phoenix-spark2.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar" --conf "spark.driver.extraClassPath=/usr/hdp/current/phoenix-client/phoenix-spark2.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar"

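When writing a job application, there are two cases:

Client mode:

The driver's extraClassPath must be given with --driver-class-path on the submit command (or set as a default in the configuration file), because in client mode the driver JVM has already started by the time the job code runs. For example:

spark-submit --driver-class-path /usr/hdp/current/phoenix-client/phoenix-spark2.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar test.py

The job code can then set the executor's extraClassPath through SparkConf: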

SparkConf().set("spark.executor.extraClassPath", "/usr/hdp/current/phoenix-client/phoenix-spark2.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar")

Cluster mode:

The job code can set the extraClassPath of both the driver and the executors through SparkConf:

SparkConf().set("spark.driver.extraClassPath", "/usr/hdp/current/phoenix-client/phoenix-spark2.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar").set("spark.executor.extraClassPath", "/usr/hdp/current/phoenix-client/phoenix-spark2.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar")
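The two cases can be folded into one helper so that the job code sets the right properties for its deploy mode. This is a minimal sketch of the rules as this article describes them, not an official Spark or Phoenix API: `classpath_settings` and `build_spark_conf` are hypothetical names, and the classpath is the HDP default used throughout.

```python
PHOENIX_CLASSPATH = (
    "/usr/hdp/current/phoenix-client/phoenix-spark2.jar:"
    "/usr/hdp/current/phoenix-client/phoenix-client.jar"
)


def classpath_settings(deploy_mode, classpath=PHOENIX_CLASSPATH):
    """Return the properties the job code sets for the given deploy mode."""
    if deploy_mode == "client":
        # The driver classpath is supplied by --driver-class-path at submit time.
        return {"spark.executor.extraClassPath": classpath}
    if deploy_mode == "cluster":
        return {
            "spark.driver.extraClassPath": classpath,
            "spark.executor.extraClassPath": classpath,
        }
    raise ValueError("deploy_mode must be 'client' or 'cluster'")


def build_spark_conf(deploy_mode):
    """Create a SparkConf carrying those properties (requires pyspark)."""
    from pyspark import SparkConf  # imported lazily; only present on a Spark host

    conf = SparkConf()
    for key, value in classpath_settings(deploy_mode).items():
        conf.set(key, value)
    return conf
```

A job could then create its session with `SparkSession.builder.config(conf=build_spark_conf("cluster")).getOrCreate()`. Passing the same properties with --conf at submit time, as shown for Method 2, remains an alternative that does not depend on the job code.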

Test:

Start a Spark client shell, for example:

./bin/pyspark --conf "spark.executor.extraClassPath=/usr/hdp/current/phoenix-client/phoenix-spark2.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar" --conf "spark.driver.extraClassPath=/usr/hdp/current/phoenix-client/phoenix-spark2.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar"

Then run:

df = spark.read.format("org.apache.phoenix.spark").option("table", "TEST").option("zkUrl", "hostname:2181").load()

df.show()

This prints the contents of the TEST table.

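JAR conflict when using Spark2 with Phoenix on HDP 2.6.5.x:

Symptom:

For a Spark2 job that references phoenix-client.jar, the application's log UI fails to load its CSS and JS, so the pages render garbled.

Cause:

phoenix-client.jar uses servlet-api 2.x, whereas the Spark2 shipped with HDP 2.6.5.x uses servlet-api 3.x, so the two JARs conflict.

Solution:

List Spark's own servlet-api 3.1.0 and related JARs ahead of the Phoenix JARs on the classpath so that they take precedence.

PySpark jobs:

1). Add the following parameters to spark-submit (this also works for jobs submitted through Oozie; it is the recommended approach because it only affects the job in question):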

--conf spark.driver.extraClassPath=/usr/hdp/current/spark2-client/jars/javax.servlet-api-3.1.0.jar:/usr/hdp/current/spark2-client/jars/javax.ws.rs-api-2.0.1.jar:/usr/hdp/current/spark2-client/jars/jersey-server-2.22.2.jar:/usr/hdp/current/phoenix-client/phoenix-spark2.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar --conf spark.executor.extraClassPath=/usr/hdp/current/spark2-client/jars/javax.servlet-api-3.1.0.jar:/usr/hdp/current/spark2-client/jars/javax.ws.rs-api-2.0.1.jar:/usr/hdp/current/spark2-client/jars/jersey-server-2.22.2.jar:/usr/hdp/current/phoenix-client/phoenix-spark2.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar

2). Add the same parameters to the spark2-defaults configuration (this also works for jobs submitted through Oozie):

spark.driver.extraClassPath=/usr/hdp/current/spark2-client/jars/javax.servlet-api-3.1.0.jar:/usr/hdp/current/spark2-client/jars/javax.ws.rs-api-2.0.1.jar:/usr/hdp/current/spark2-client/jars/jersey-server-2.22.2.jar:/usr/hdp/current/phoenix-client/phoenix-spark2.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar

spark.executor.extraClassPath=/usr/hdp/current/spark2-client/jars/javax.servlet-api-3.1.0.jar:/usr/hdp/current/spark2-client/jars/javax.ws.rs-api-2.0.1.jar:/usr/hdp/current/spark2-client/jars/jersey-server-2.22.2.jar:/usr/hdp/current/phoenix-client/phoenix-spark2.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar
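To see which JAR on a node actually carries servlet classes, the archive contents can be listed with Python's standard `zipfile` module, since a JAR is a ZIP file. This is a diagnostic sketch only: `bundled_entries` is a hypothetical helper, and it assumes the conflicting classes sit unshaded under the `javax/servlet/` package path, which this article implies but which should be checked against your own phoenix-client.jar.

```python
import zipfile


def bundled_entries(jar_path, prefix="javax/servlet/"):
    """List entries inside a JAR whose path starts with the given package prefix."""
    with zipfile.ZipFile(jar_path) as jar:
        return [name for name in jar.namelist() if name.startswith(prefix)]
```

For example, a non-empty result from `bundled_entries("/usr/hdp/current/phoenix-client/phoenix-client.jar")` would indicate that the Phoenix client JAR bundles its own servlet classes, which is why Spark's JARs are listed first in the classpath above.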

Java/Scala Spark jobs:

Manage the dependencies and their scopes yourself with Maven.