拍拍贷魔镜杯风控算法大赛——基于lightgbm

本文仿照知乎一位大神的文章,基于理解的基础上,修改了部分代码~感谢前辈的分享~

参考文献:

https://zhuanlan.zhihu.com/p/56864235

原始数据来源:

https://www.kesci.com/home/competition/56cd5f02b89b5bd026cb39c9/content/1

数据集构成:

三万条已知标签的训练集,二万条不知标签的测试集

训练集和测试集均有三种表:

Master(主要的特征表),Log_Info(用户登陆信息表),Userupdate_Info(客户信息修改更新表)

(1)

- Master

每一行代表一个样本(一笔成功成交借款),每个样本包含200多个各类字段。

idx:每一笔贷款的unique key,可以与另外2个文件里的idx相匹配。

UserInfo_*:借款人特征字段

WeblogInfo_*:Info网络行为字段

Education_Info*:学历学籍字段

ThirdParty_Info_PeriodN_*:第三方数据时间段N字段

SocialNetwork_*:社交网络字段

LinstingInfo:借款成交时间

Target:违约标签(1 = 贷款违约,0 = 正常还款)。

测试集里不包含target字段。

(2)

- Log_Info

借款人的登陆信息。

ListingInfo:借款成交时间

LogInfo1:操作代码

LogInfo2:操作类别

LogInfo3:登陆时间

idx:每一笔贷款的unique key

(3)

- Userupdate_Info

借款人修改信息

ListingInfo1:借款成交时间

UserupdateInfo1:修改内容

UserupdateInfo2:修改时间

idx:每一笔贷款的unique key

本文大体的步骤是:

1)训练数据和测试数据的合并(为了一起对特征进行处理)

2)分类型变量的清洗

3)基于一些分类型变量和其他表数据(登陆信息表、修改信息表)的特征衍生

4)数值型变量不做处理,缺失值不填充,因为lightgbm可以自行处理缺失值

5)最后对特征工程后的数据集进行特征筛选

6)筛选完后进行建模预测

7)通过调整lightgbm的参数,来提高模型的精度

代码如下:

import numpy as np

import pandas as pd

import warnings

import matplotlib.pyplot as plt

import seaborn as sns

import os

# os.chdir()用于改变当前工作目录到指定路径

os.chdir("D:\Py_Data\拍拍贷“魔镜杯”风控初赛数据")

######################################数据的合并#########################################

# 训练集

train_LogInfo = pd.read_csv('.\Training Set\PPD_LogInfo_3_1_Training_Set.csv',encoding='gbk')

train_Master = pd.read_csv('.\Training Set\PPD_Training_Master_GBK_3_1_Training_Set.csv',encoding='gbk')

train_Userupdate = pd.read_csv('.\Training Set\PPD_Userupdate_Info_3_1_Training_Set.csv',encoding='gbk')

# 测试集

test_LogInfo = pd.read_csv('.\Test Set\PPD_LogInfo_2_Test_Set.csv',encoding='gbk')

test_Master = pd.read_csv('.\Test Set\PPD_Master_GBK_2_Test_Set.csv',encoding='gb18030')

test_Userupdate = pd.read_csv('.\Test Set\PPD_Userupdate_Info_2_Test_Set.csv',encoding='gbk')

# 合并时用于标记哪些样本来自训练集和测试集

train_Master['sample_status']='train'

test_Master['sample_status']='test'

# 训练集和测试集的合并(axis=0,增加行)

df_Master = pd.concat([train_Master,test_Master],axis=0).reset_index(drop=True)

df_LogInfo=pd.concat([train_LogInfo,test_LogInfo],axis=0).reset_index(drop=True)

df_Userupdate=pd.concat([train_Userupdate,test_Userupdate],axis=0).reset_index(drop=True)

df_Master.to_csv("D:\Py_Data\拍拍贷“魔镜杯”风控初赛数据\df_Master.csv",encoding='gb18030',index=False)

df_LogInfo.to_csv("D:\Py_Data\拍拍贷“魔镜杯”风控初赛数据\df_LogInfo.csv",encoding='gb18030',index=False)

df_Userupdate.to_csv("D:\Py_Data\拍拍贷“魔镜杯”风控初赛数据\df_Userupdate.csv",encoding='gb18030',index=False)

#####################################数据的探索行分析#####################################

# 导入合并后的数据

df_Master = pd.read_csv('df_Master.csv',encoding='gb18030')

df_LogInfo = pd.read_csv('df_LogInfo.csv',encoding='gb18030')

df_Userupdate = pd.read_csv('df_Userupdate.csv',encoding='gb18030')

# 定义显示形式

pd.set_option("display.max_columns",len(train_Master.columns))

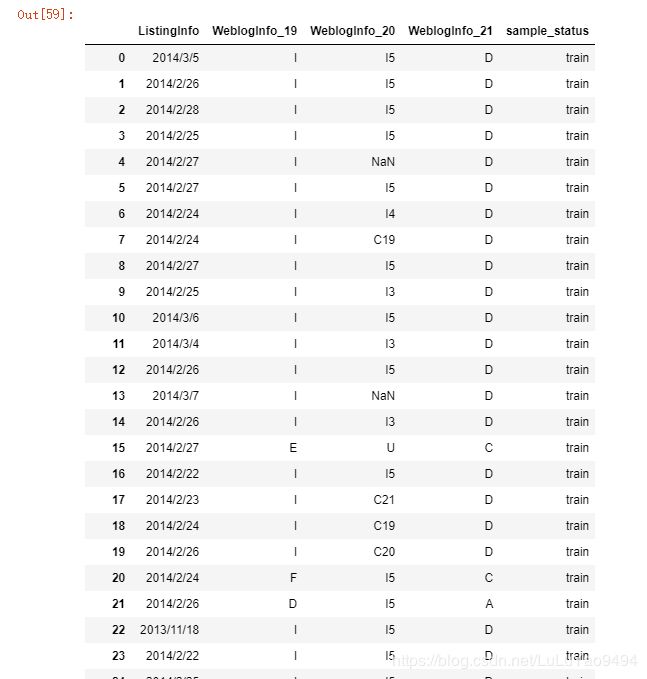

df_Master.head(20)

# 可以看到的是,数据主要分为:

# 教育信息、第三方信息、社交网络信息、用户信息、网络博客信息、目标标签(target)和sample_status(自定义,用于区分数据来源于测试/训练集)

# 察看训练集中好坏样本比例,1为坏样本

df_Master.target.value_counts()

# 每个个体都是独一的

len(np.unique(df_Master.Idx))

#######################################(1)缺失值处理###################################

# 原始中大量的缺失值用-1标识,我们将其替换成np.nan

df_Master = df_Master.replace({-1:np.nan})

df_Master.head(15)

# 缺失值的可视化——白色越多,代表变量缺失越多

import missingno as msno

%matplotlib inline

msno.bar(df_Master)

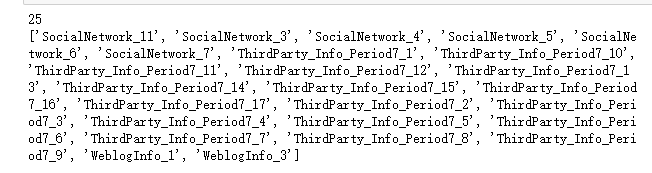

# 缺失占比超过80%的变量列表

missing_columns=[]

for column in df_Master.columns:

if sum(pd.isnull(df_Master[column]))/len(df_Master)>=0.8:

missing_columns.append(column)

print(len(missing_columns))

print(missing_columns)# 筛掉缺失大于80%的变量

df_Master = df_Master.loc[:,list(~df_Master.columns.isin(missing_columns))]

df_Master.shape

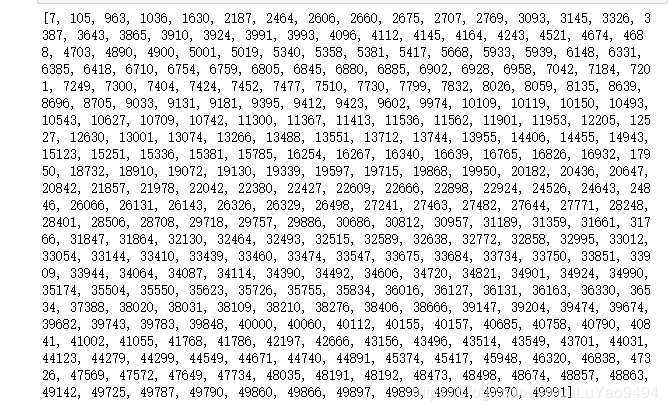

# 再来看样本的特征缺失(行缺失)

# 对于某个样本,特征缺失大于100

missing_index=[]

for i in np.arange(df_Master.shape[0]):

if list(df_Master.loc[i,:].isnull()).count(True)>100:

missing_index.append(i)

print(missing_index)

# 删除特征缺失超过100的行

df_Master = df_Master.drop(missing_index).reset_index(drop=True)

df_Master.shape

# 单变量占比分析

print("原变量总数:",'\n',len(df_Master.columns))

cols = [col for col in df_Master.columns if col not in ('target','sample_status')]

print("排除目标标签和标记训练集和测试集来源的变量总数:",'\n',len(cols))

# 某个变量的某个取值占比超过90%,说明信息含量低,可以删除

drop_cols_simple=[]

for col in cols:

if max(df_Master[col].value_counts())/len(df_Master)>0.9:

drop_cols_simple.append(col)

print(drop_cols_simple)

print(len(drop_cols_simple))

df_Master = df_Master.drop(drop_cols_simple,axis=1)

df_Master.shape

df_Master = df_Master.reset_index(drop=True)

# 剩下的变量的类型

df_Master.dtypes.value_counts()

objectcol = df_Master.select_dtypes(include=["object"]).columns

numcol = df_Master.select_dtypes(include=[np.float64]).columns

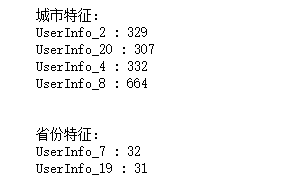

# 分类型变量只有12个,我们来看一下这些变量有什么规律

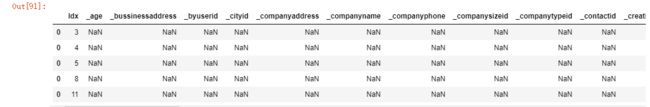

df_Master[objectcol]

# 可以看到的是

# 表示省份的有

# UserInfo_19和UserInfo_7

# 表示城市的有

# UserInfo_2,UserInfo_20,UserInfo_4,UserInfo_8

city_feature = ['UserInfo_2','UserInfo_20','UserInfo_4','UserInfo_8']

province_feature=['UserInfo_7','UserInfo_19']

print("城市特征:")

for col in city_feature:

print(col,":",df_Master[col].nunique())

print('\n')

print("省份特征:")

for col in province_feature:

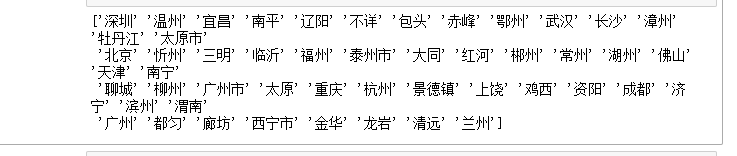

print(col,":",df_Master[col].nunique())print(df_Master.UserInfo_8.unique()[:50])

# 可以看到,同一个城市表达不一

# 去掉字段中的“市”,保持统一

df_Master['UserInfo_8'] = [a[:-1] if a.find('市')!= -1 else a[:] for a in df_Master['UserInfo_8']]

# 清理后非重复计数减小

df_Master['UserInfo_8'].nunique()

# 再来看看数值型变量

df_Master[numcol].head(20)

# 这里我们不对数值变量进行缺失值插值或者填充,直接用于后期建模

# 再来看看其他的表——该表显示了客户修改信息的日志

df_Userupdate# 将上表的大小写进行统一

df_Userupdate['UserupdateInfo1'] = df_Userupdate.UserupdateInfo1.map(lambda s:s.lower())

######################################特征工程阶段#######################################

# 至此,我们进入特征处理阶段

# 首先对类别变量进行变换

df_Master[objectcol]

# 1)省份特征————————推测可能一个是籍贯省份,一个是居住省份

# 首先看看各省份好坏样本的分布占比

def get_badrate(df,col):

'''

根据某个变量计算违约率

'''

group = df.groupby(col)

df=pd.DataFrame()

df['total'] = group.target.count()

df['bad'] = group.target.sum()

df['badrate'] = round(df['bad']/df['total'],4)*100 # 百分比形式

return df.sort_values('badrate',ascending=False)

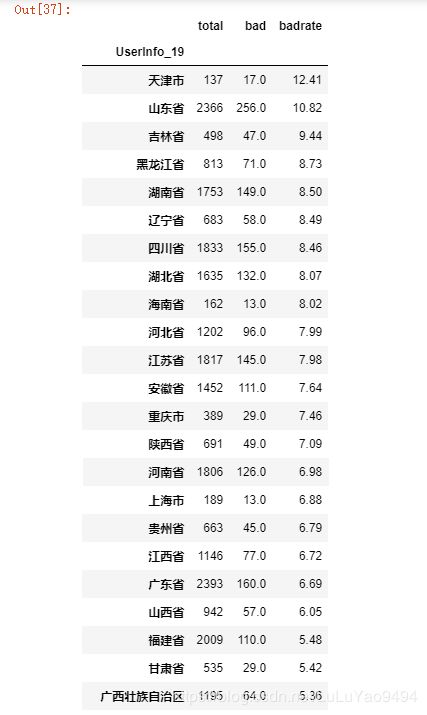

# 户籍省份的违约率计算

province_original = get_badrate(df_Master,'UserInfo_19')

province_original

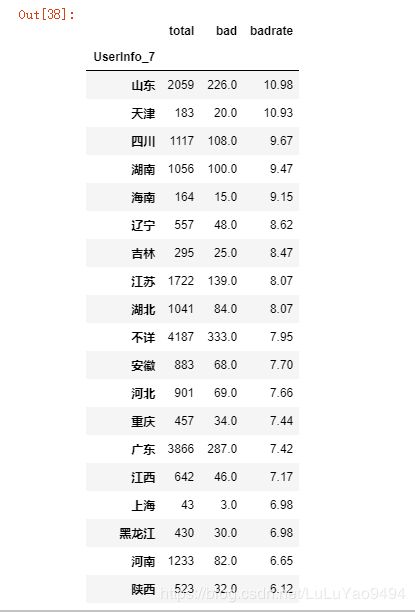

# 居住地省份的违约率计算

province_current = get_badrate(df_Master,'UserInfo_7')

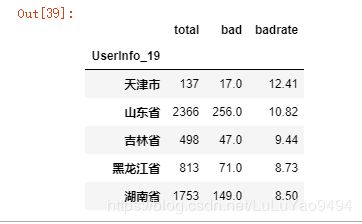

province_current # 各取前5名的省份进行二值化

province_original.iloc[:5,]province_current.iloc[:5,]

# 分别对户籍省份和居住省份排名前五的省份进行二值化

# 户籍省份的二值化

df_Master['is_tianjin_UserInfo_19']=df_Master.apply(lambda x:1 if x.UserInfo_19=='天津市' else 0,axis=1)

df_Master['is_shandong_UserInfo_19']=df_Master.apply(lambda x:1 if x.UserInfo_19=='山东省' else 0,axis=1)

df_Master['is_jilin_UserInfo_19']=df_Master.apply(lambda x:1 if x.UserInfo_19=='吉林省' else 0,axis=1)

df_Master['is_heilongjiang_UserInfo_19']=df_Master.apply(lambda x:1 if x.UserInfo_19=='黑龙江省' else 0,axis=1)

df_Master['is_hunan_UserInfo_19']=df_Master.apply(lambda x:1 if x.UserInfo_19=='湖南省' else 0,axis=1)

# 居住省份的二值化

df_Master['is_tianjin_UserInfo_7']=df_Master.apply(lambda x:1 if x.UserInfo_7=='天津' else 0,axis=1)

df_Master['is_shandong_UserInfo_7']=df_Master.apply(lambda x:1 if x.UserInfo_7=='山东' else 0,axis=1)

df_Master['is_sichuan_UserInfo_7']=df_Master.apply(lambda x:1 if x.UserInfo_7=='四川' else 0,axis=1)

df_Master['is_hainan_UserInfo_7']=df_Master.apply(lambda x:1 if x.UserInfo_7=='海南' else 0,axis=1)

df_Master['is_hunan_UserInfo_7']=df_Master.apply(lambda x:1 if x.UserInfo_7=='湖南' else 0,axis=1)

# 户籍省份和居住地省份不一致的特征衍生

print(df_Master.UserInfo_19.unique())

print('\n')

print(df_Master.UserInfo_7.unique())

# 首先将两者改成相同的形式

UserInfo_19_change = []

for i in df_Master.UserInfo_19:

if i in ('内蒙古自治区','黑龙江省'):

j = i[:3]

else:

j = i[:2]

UserInfo_19_change.append(j)

print(np.unique(UserInfo_19_change))

# 判断UserInfo_7和UserInfo_19是否一致

is_same_province=[]

for i,j in zip(df_Master.UserInfo_7,UserInfo_19_change):

if i==j:

a=1

else:

a=0

is_same_province.append(a)

df_Master['is_same_province'] = is_same_province

# 2)城市特征

# 原数据中有四个城市特征,推测为用户常登陆的IP地址城市

# 特征衍生思路:

# 一,通过xgboost挑选重要的城市,进行二值化

# 二,由四个城市特征的非重复计数衍生生成登陆IP地址的变更次数

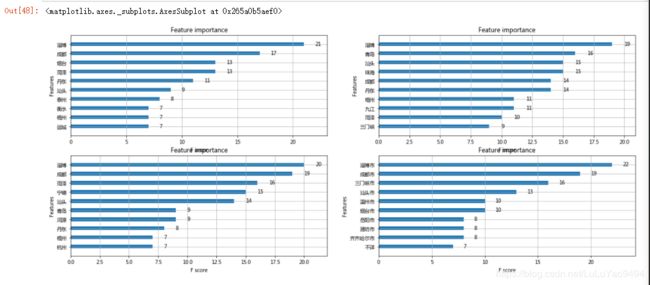

# 根据xgboost变量重要性的输出对城市作二值化衍生

df_Master_temp = df_Master[['UserInfo_2','UserInfo_4','UserInfo_8','UserInfo_20','target']]

df_Master_temp.head()area_list=[]

# 将四个城市特征都进行哑变量处理

for col in df_Master_temp:

dummy_df = pd.get_dummies(df_Master_temp[col])

dummy_df = pd.concat([dummy_df,df_Master_temp['target']],axis=1)

area_list.append(dummy_df)

df_area1 = area_list[0]

df_area2 = area_list[1]

df_area3 = area_list[2]

df_area4 = area_list[3]

df_area1# 使用xgboost筛选出重要的城市

from xgboost.sklearn import XGBClassifier

from xgboost import plot_importance

# 注意,这里需要把合并后的没有目标标签的行数据删除

# df_area1[~(df_area1['target'].isnull())]

x_area1 = df_area1[~(df_area1['target'].isnull())].drop(['target'],axis=1)

y_area1 = df_area1[~(df_area1['target'].isnull())]['target']

x_area2 = df_area2[~(df_area2['target'].isnull())].drop(['target'],axis=1)

y_area2 = df_area2[~(df_area2['target'].isnull())]['target']

x_area3 = df_area3[~(df_area3['target'].isnull())].drop(['target'],axis=1)

y_area3 = df_area3[~(df_area3['target'].isnull())]['target']

x_area4 = df_area4[~(df_area4['target'].isnull())].drop(['target'],axis=1)

y_area4 = df_area4[~(df_area4['target'].isnull())]['target']

xg_area1 = XGBClassifier(random_state=0).fit(x_area1,y_area1)

xg_area2 = XGBClassifier(random_state=0).fit(x_area2,y_area2)

xg_area3 = XGBClassifier(random_state=0).fit(x_area3,y_area3)

xg_area4 = XGBClassifier(random_state=0).fit(x_area4,y_area4)

plt.rcParams['font.sans-serif'] = ['Microsoft YaHei']

fig = plt.figure(figsize=(20,8))

ax1 = fig.add_subplot(2,2,1)

ax2 = fig.add_subplot(2,2,2)

ax3 = fig.add_subplot(2,2,3)

ax4 = fig.add_subplot(2,2,4)

plot_importance(xg_area1,ax=ax1,max_num_features=10,height=0.4)

plot_importance(xg_area2,ax=ax2,max_num_features=10,height=0.4)

plot_importance(xg_area3,ax=ax3,max_num_features=10,height=0.4)

plot_importance(xg_area4,ax=ax4,max_num_features=10,height=0.4)

# 将特征重要性排名前三的城市进行二值化:

df_Master['is_zibo_UserInfo_2'] = df_Master.apply(lambda x:1 if x.UserInfo_2=='淄博' else 0,axis=1)

df_Master['is_chengdu_UserInfo_2'] = df_Master.apply(lambda x:1 if x.UserInfo_2=='成都' else 0,axis=1)

df_Master['is_yantai_UserInfo_2'] = df_Master.apply(lambda x:1 if x.UserInfo_2=='烟台' else 0,axis=1)

df_Master['is_zibo_UserInfo_4'] = df_Master.apply(lambda x:1 if x.UserInfo_4=='淄博' else 0,axis=1)

df_Master['is_qingdao_UserInfo_4'] = df_Master.apply(lambda x:1 if x.UserInfo_4=='青岛' else 0,axis=1)

df_Master['is_shantou_UserInfo_4'] = df_Master.apply(lambda x:1 if x.UserInfo_4=='汕头' else 0,axis=1)

df_Master['is_zibo_UserInfo_8'] = df_Master.apply(lambda x:1 if x.UserInfo_8=='淄博' else 0,axis=1)

df_Master['is_chengdu_UserInfo_8'] = df_Master.apply(lambda x:1 if x.UserInfo_8=='成都' else 0,axis=1)

df_Master['is_heze_UserInfo_8'] = df_Master.apply(lambda x:1 if x.UserInfo_8=='菏泽' else 0,axis=1)

df_Master['is_ziboshi_UserInfo_20'] = df_Master.apply(lambda x:1 if x.UserInfo_20=='淄博市' else 0,axis=1)

df_Master['is_chengdushi_UserInfo_20'] = df_Master.apply(lambda x:1 if x.UserInfo_20=='成都市' else 0,axis=1)

df_Master['is_sanmenxiashi_UserInfo_20'] = df_Master.apply(lambda x:1 if x.UserInfo_20=='三门峡市' else 0,axis=1)

#特征衍生-IP地址变更次数特征

df_Master['UserInfo_20'] = [a[:-1] if a.find('市')!= -1 else i[:] for a in df_Master.UserInfo_20]

city_df = df_Master[['UserInfo_2','UserInfo_4','UserInfo_8','UserInfo_20']]

city_change_cnt =[]

for i in range(city_df.shape[0]):

a = list(city_df.iloc[i])

city_count = len(set(a))

city_change_cnt.append(city_count)

df_Master['city_count_cnt'] = city_change_cnt

# 3)运营商种类少,直接将其转换成哑变量

print(df_Master.UserInfo_9.value_counts())

print(set(df_Master.UserInfo_9))df_Master['UserInfo_9'] = df_Master.UserInfo_9.replace({'中国联通 ':'china_unicom',

'中国联通':'china_unicom',

'中国移动':'china_mobile',

'中国移动 ':'china_mobile',

'中国电信':'china_telecom',

'中国电信 ':'china_telecom',

'不详':'operator_unknown'

})

operator_dummy = pd.get_dummies(df_Master.UserInfo_9)

df_Master = pd.concat([df_Master,operator_dummy],axis=1)

# 删除原变量

df_Master = df_Master.drop(['UserInfo_9'],axis=1)

df_Master = df_Master.drop(['UserInfo_19','UserInfo_2','UserInfo_4','UserInfo_7','UserInfo_8','UserInfo_20'],axis=1)

# 看看还剩下哪些类型变量要处理

df_Master.dtypes.value_counts()

df_Master.select_dtypes(include='object')# 可以看到,我们要将这些weibo变量进行处理

# 4) 微博特征

for col in ['WeblogInfo_19','WeblogInfo_20','WeblogInfo_21']:

df_Master[col].replace({'nan':np.nan}) # 将字符型的nan,利用众数填充

df_Master[col] = df_Master[col].fillna(df_Master[col].mode()[0])

# 看看这些变量有几种类型的值

for col in ['WeblogInfo_19','WeblogInfo_20','WeblogInfo_21']:

print(df_Master[col].value_counts())

print('\n')

# 这里我们猜测WeblogInfo_20是WeblogInfo_19和21的更细化表达,这里直接删除该变量

# 对其他变量进行哑变量处理

df_Master['WeblogInfo_19'] = ['WeblogInfo_19'+ i for i in df_Master.WeblogInfo_19]

df_Master['WeblogInfo_21'] = ['WeblogInfo_21'+ i for i in df_Master.WeblogInfo_21]

for col in ['WeblogInfo_19','WeblogInfo_21']:

weibo_dummy = pd.get_dummies(df_Master[col])

df_Master = pd.concat([df_Master,weibo_dummy],axis=1)

# 删除原变量

df_Master = df_Master.drop(['WeblogInfo_19','WeblogInfo_21','WeblogInfo_20'],axis=1)

# 至此,类别变量处理完毕

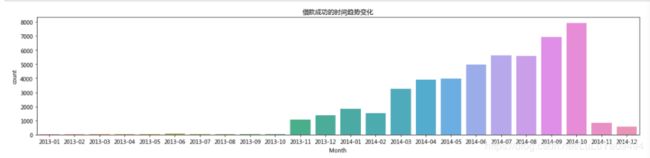

df_Master.dtypes.value_counts()# 我们来看看借款的成交时间趋势

# 首先将字符型的日期转换成时间戳形式

import datetime

from datetime import datetime

df_Master['ListingInfo'] = pd.to_datetime(df_Master.ListingInfo)

df_Master["Month"] = df_Master.ListingInfo.apply(lambda x:datetime.strftime(x,"%Y-%m"))

plt.figure(figsize=(20,4))

plt.title("借款成功的时间趋势变化")

plt.rcParams['font.sans-serif']=['Microsoft YaHei']

sns.countplot(data=df_Master.sort_values('Month'),x='Month')

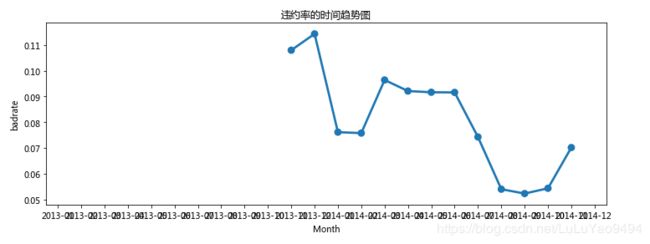

plt.show()# 也可以看看违约率的月变化趋势

month_group = df_Master.groupby('Month')

df_badrate_month = pd.DataFrame()

df_badrate_month['total'] = month_group.target.count()

df_badrate_month['bad'] = month_group.target.sum()

df_badrate_month['badrate'] = df_badrate_month['bad']/df_badrate_month['total']

df_badrate_month=df_badrate_month.reset_index()

plt.figure(figsize=(12,4))

plt.title('违约率的时间趋势图')

sns.pointplot(data=df_badrate_month,x='Month',y='badrate',linestyles='-')

plt.show()

# 注:空值的部分代表的是预测样本# 我们不对数值型变量的缺失值做处理

df_Master = df_Master.drop('Month',axis=1)

# LogInfo表

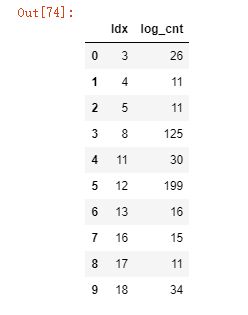

df_LogInfo

# 衍生的变量有

# 1)累计登陆次数

# 2)登陆时间的平均间隔

# 3)最近一次的登陆时间距离成交时间差

# 1)累计登陆次数

log_cnt = df_LogInfo.groupby('Idx',as_index=False).LogInfo3.count().rename(

columns={'LogInfo3':'log_cnt'})

log_cnt.head(10)

# 2)最近一次的登陆时间距离成交时间差

# 最近一次的登录时间距离当前时间差

df_LogInfo['Listinginfo1']=pd.to_datetime(df_LogInfo.Listinginfo1)

df_LogInfo['LogInfo3'] = pd.to_datetime(df_LogInfo.LogInfo3)

time_log_span = df_LogInfo.groupby('Idx',as_index=False).agg({'Listinginfo1':np.max,

'LogInfo3':np.max})

time_log_span.head()

time_log_span['log_timespan'] = time_log_span['Listinginfo1']-time_log_span['LogInfo3']

time_log_span['log_timespan'] = time_log_span['log_timespan'].map(lambda x:str(x))

time_log_span['log_timespan'] = time_log_span['log_timespan'].map(lambda x:int(x[:x.find('d')]))

time_log_span= time_log_span[['Idx','log_timespan']]

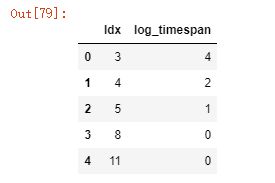

time_log_span.head()# 3)登陆时间的平均时间间隔

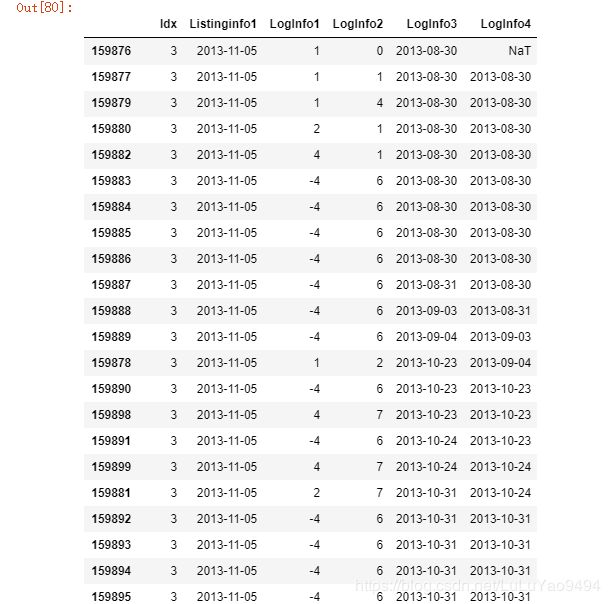

df_temp_timeinterval = df_LogInfo.sort_values(by=['Idx','LogInfo3'],ascending=['True','True'])

df_temp_timeinterval['LogInfo4'] = df_temp_timeinterval.groupby('Idx')['LogInfo3'].apply(lambda x:x.shift(1))

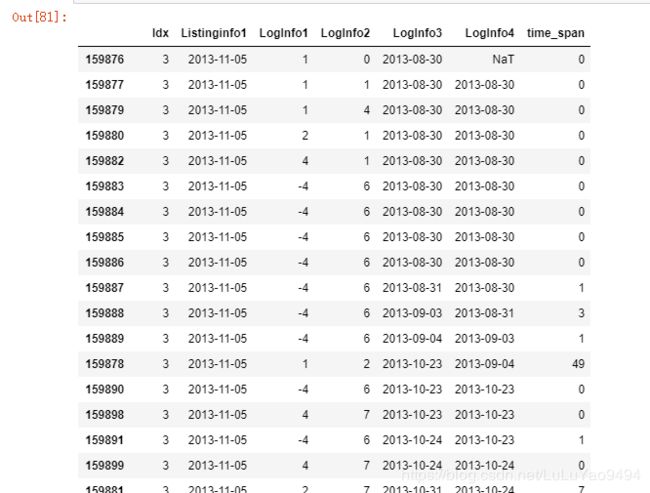

df_temp_timeintervaldf_temp_timeinterval['time_span'] = df_temp_timeinterval['LogInfo3'] - df_temp_timeinterval['LogInfo4']

df_temp_timeinterval['time_span'] = df_temp_timeinterval['time_span'] .map(lambda x:str(x))

df_temp_timeinterval['time_span'] = df_temp_timeinterval['time_span'].replace({'NaT':'0 days 00:00:00'})

df_temp_timeinterval['time_span'] = df_temp_timeinterval['time_span'].map(lambda x:int(x[:x.find('d')]))

df_temp_timeintervalavg_log_timespan = df_temp_timeinterval.groupby('Idx',as_index=False).time_span.mean().rename(columns={'time_span':'avg_log_timespan'})

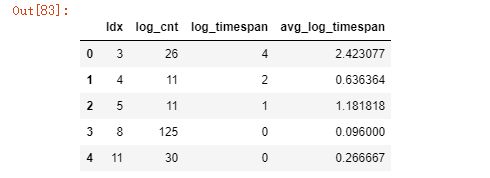

log_info = pd.merge(log_cnt,time_log_span,how='left',on='Idx')

log_info = pd.merge(log_info,avg_log_timespan,how='left',on='Idx')

log_info.head()log_info.to_csv('D:\Py_Data\拍拍贷“魔镜杯”风控初赛数据\log_info_feature.csv',encoding='gbk',index=False)

# 修改信息表

# 衍生变量:

# 1)最近的修改时间距离成交时间差;

# 2)修改信息总次数

# 3)每种信息修改的次数

# 4)按照日期修改的次数

# 1)最近的修改时间距离成交时间差;

df_Userupdate['ListingInfo1']=pd.to_datetime(df_Userupdate['ListingInfo1'])

df_Userupdate['UserupdateInfo2']=pd.to_datetime(df_Userupdate['UserupdateInfo2'])

time_span = df_Userupdate.groupby('Idx',as_index=False).agg({'UserupdateInfo2':np.max,'ListingInfo1':np.max})

time_span['update_timespan'] = time_span['ListingInfo1']-time_span['UserupdateInfo2']

time_span['update_timespan'] = time_span['update_timespan'].map(lambda x:str(x))

time_span['update_timespan'] = time_span['update_timespan'].map(lambda x:int(x[:x.find('d')]))

time_span = time_span[['Idx','update_timespan']]

# 2)计算每个用户修改不同类别信息的次数

group = df_Userupdate.groupby(['Idx','UserupdateInfo1'],as_index=False).agg({'UserupdateInfo2':pd.Series.nunique})

# 3)每种信息修改的次数的衍生

user_df_list=[]

for idx in group.Idx.unique():

user_df = group[group.Idx==idx]

change_cate = list(user_df.UserupdateInfo1)

change_cnt = list(user_df.UserupdateInfo2)

user_col = ['Idx']+change_cate

user_value = [user_df.iloc[0]['Idx']]+change_cnt

user_df2 = pd.DataFrame(np.array(user_value).reshape(1,len(user_value)),columns=user_col)

user_df_list.append(user_df2)

cate_change_df = pd.concat(user_df_list,axis=0)

cate_change_df.head()

# 将cate_change_df里的空值填为0

cate_change_df = cate_change_df.fillna(0)

cate_change_df.shape

df_Userupdate

# 4)修改信息的总次数,按照日期修改的次数的衍生

update_cnt = df_Userupdate.groupby('Idx',as_index=False).agg({'UserupdateInfo2':pd.Series.nunique,

'ListingInfo1':pd.Series.count}).\

rename(columns={'UserupdateInfo2':'update_time_cnt',

'ListingInfo1':'update_all_cnt'})

update_cnt.head()# 将三个衍生特征的临时表进行关联

update_info = pd.merge(time_span,cate_change_df,on='Idx',how='left')

update_info = pd.merge(update_info,update_cnt,on='Idx',how='left')

update_info.head()# 保存数据至本地

update_info.to_csv(r'D:\Py_Data\拍拍贷“魔镜杯”风控初赛数据\update_feature.csv',encoding='gbk',index=False)

df_Master.to_csv(r'D:\Py_Data\拍拍贷“魔镜杯”风控初赛数据\df_Master_tackled.csv',encoding='gbk',index=False)# 合并三个表的数据

df_Master_tackled= pd.read_csv('df_Master_tackled.csv',encoding='gbk')

df_LogInfo_tackled = pd.read_csv('log_info_feature.csv',encoding='gbk')

df_Userupdate_tackled = pd.read_csv('update_feature.csv',encoding='gbk')

df_final = pd.merge(df_Master_tackled,df_LogInfo_tackled,on='Idx',how='left')

df_final = pd.merge(df_final,df_Userupdate_tackled,on='Idx',how='left')

df_final.shape#########################################特征筛选#######################################

# 用lightGBM筛选特征,

# 这里训练10个模型,并对10个模型输出的特征重要性取平均,最后对特征重要性的值进行归一化

# 以上将训练集和测试集合并是为了处理特征,现在再将两者划分开,用于模型训练

# 将三万训练集划分成训练集和测试集,没有目标标签的2万样本作为预测集

from sklearn.model_selection import train_test_split

X_train,X_test, y_train, y_test = train_test_split(df_final[df_final.sample_status=='train'].drop(['Idx','sample_status','target','ListingInfo'],axis=1),

df_final[df_final.sample_status=='train']['target'],

test_size=0.3,

random_state=0)

train_fea = np.array(X_train)

test_fea = np.array(X_test)

evaluate_fea = np.array(df_final[df_final.sample_status=='test'].drop(['Idx','sample_status','target','ListingInfo'],axis=1))

# # reshape(-1,1转成一列

train_label = np.array(y_train).reshape(-1,1)

test_label = np.array(y_test).reshape(-1,1)

evaluate_label = np.array(df_final[df_final.sample_status=='test']['target']).reshape(-1,1)

fea_names = list(X_train.columns)

feature_importance_values = np.zeros(len(fea_names))

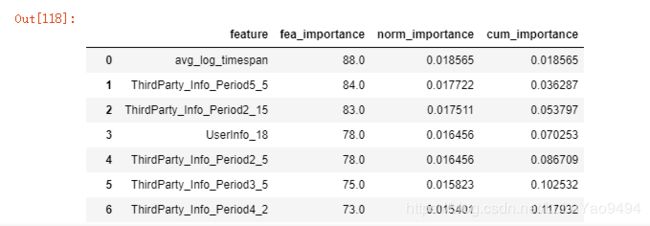

# 训练10个lightgbm,并对10个模型输出的feature_importances_取平均

import lightgbm as lgb

from lightgbm import plot_importance

for i in np.arange(10):

model = lgb.LGBMClassifier(n_estimators=1000,

learning_rate=0.05,

n_jobs=-1,

verbose=-1)

model.fit(train_fea,train_label,

eval_metric='auc',

eval_set = [(test_fea, test_label)],

early_stopping_rounds=100,

verbose = -1)

feature_importance_values += model.feature_importances_/10

# 将feature_importance_values存成临时表

fea_imp_df1 = pd.DataFrame({'feature':fea_names,

'fea_importance':feature_importance_values})

fea_imp_df1 = fea_imp_df1.sort_values('fea_importance',ascending=False).reset_index(drop=True)

fea_imp_df1['norm_importance'] = fea_imp_df1['fea_importance']/fea_imp_df1['fea_importance'].sum() # 特征重要性value的归一化

fea_imp_df1['cum_importance'] = np.cumsum(fea_imp_df1['norm_importance'])# 特征重要性value的累加值

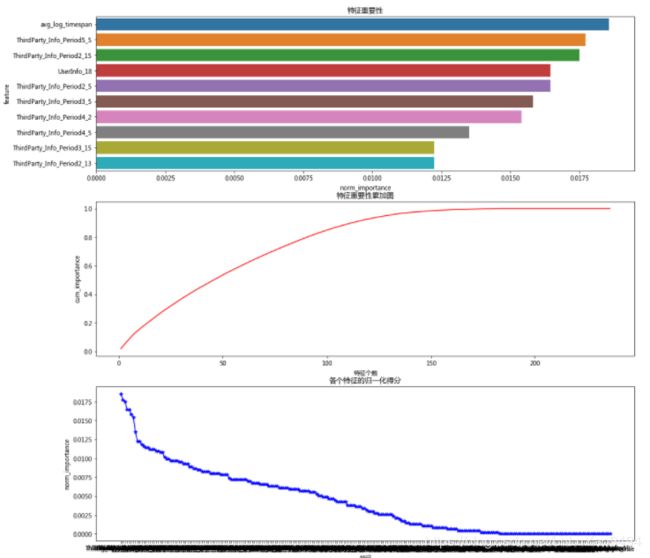

fea_imp_df1# 特征重要性可视化

plt.figure(figsize=(16,16))

plt.rcParams['font.sans-serif']=['Microsoft YaHei']

plt.subplot(3,1,1)

plt.title('特征重要性')

sns.barplot(data=fea_imp_df1.iloc[:10,:],x='norm_importance',y='feature')

plt.subplot(3,1,2)

plt.title('特征重要性累加图')

plt.xlabel('特征个数')

plt.ylabel('cum_importance')

plt.plot(list(range(1, len(fea_names)+1)),fea_imp_df1['cum_importance'], 'r-')

plt.subplot(3,1,3)

plt.title('各个特征的归一化得分')

plt.xlabel('特征')

plt.ylabel('norm_importance')

plt.plot(fea_imp_df1.feature,fea_imp_df1['norm_importance'], 'b*-')

plt.show()# 剔除特征重要性为0的变量

zero_imp_col = list(fea_imp_df1[fea_imp_df1.fea_importance==0].feature)

fea_imp_df11 = fea_imp_df1[~(fea_imp_df1.feature.isin(zero_imp_col))]

print('特征重要性为0的变量个数为 :{}'.format(len(zero_imp_col)))

print(zero_imp_col)# 剔除特征重要性比较弱的变量

low_imp_col = list(fea_imp_df11[fea_imp_df11.cum_importance>=0.99].feature)

print('特征重要性比较弱的变量个数为:{}'.format(len(low_imp_col)))

print(low_imp_col)

# 删除特征重要性为0和比较弱的特征

drop_imp_col = zero_imp_col+low_imp_col

mydf_final_fea_selected = df_final.drop(drop_imp_col,axis=1)

mydf_final_fea_selected.shape

# (49701, 160)

mydf_final_fea_selected.to_csv(

r'D:\Py_Data\拍拍贷“魔镜杯”风控初赛数据\mydf_final_fea_selected.csv',encoding='gbk',index=False)

##############################################建模######################################

# 筛选完特征后,再将该数据集切分成训练集和测试集,并通过调参提高精度,然后使用精度最高的模型预测2万个样本的标签

# 导入数据.用于建模

df = pd.read_csv('mydf_final_fea_selected.csv',encoding='gbk')

x_data = df[df.sample_status=='train'].drop(['Idx','sample_status','target','ListingInfo'],axis=1)

y_data = df[df.sample_status=='train']['target']

# 划分训练集和测试集

x_train,x_test, y_train, y_test = train_test_split(x_data,

y_data,

test_size=0.2)

# 训练模型

lgb_sklearn = lgb.LGBMClassifier(random_state=0).fit(x_train,y_train)

# # 预测测试集的样本

lgb_sklearn_pre = lgb_sklearn.predict_proba(x_test)

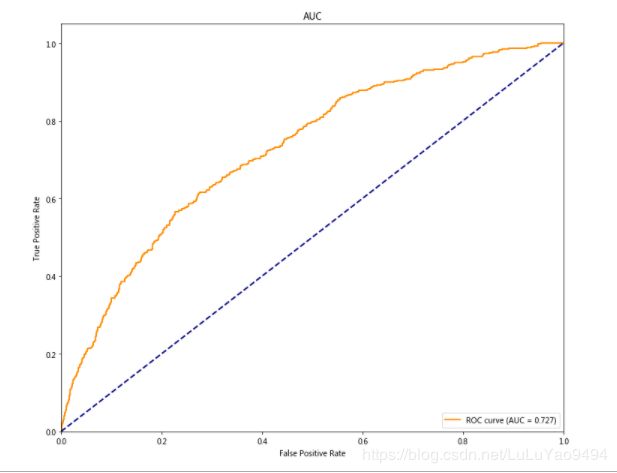

###计算roc和auc

from sklearn.metrics import roc_curve, auc

def acu_curve(y,prob):

# y真实,

# prob预测

fpr,tpr,threshold = roc_curve(y,prob) ###计算真阳性率(真正率)和假阳性率(假正率)

roc_auc = auc(fpr,tpr) ###计算auc的值

plt.figure()

lw = 2

plt.figure(figsize=(12,10))

plt.plot(fpr, tpr, color='darkorange',

lw=lw, label='ROC curve (AUC = %0.3f)' % roc_auc) ###假正率为横坐标,真正率为纵坐标做曲线

plt.plot([0, 1], [0, 1], color='navy', lw=lw, linestyle='--')

plt.xlim([0.0, 1.0])

plt.ylim([0.0, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('AUC')

plt.legend(loc="lower right")

plt.show()

acu_curve(y_test,lgb_sklearn_pre[:,1])

# 以上是sklearn版,下面是原生版本

import time

# 原生的lightgbm

lgb_train = lgb.Dataset(x_train,y_train)

lgb_test = lgb.Dataset(x_test,y_test,reference=lgb_train)

lgb_origi_params = {'boosting_type':'gbdt',

'max_depth':-1,

'num_leaves':31,

'bagging_fraction':1.0,

'feature_fraction':1.0,

'learning_rate':0.1,

'metric': 'auc'}

start = time.time()

lgb_origi = lgb.train(train_set=lgb_train,

early_stopping_rounds=10,

num_boost_round=400,

params=lgb_origi_params,

valid_sets=lgb_test)

end = time.time()

print('运行时间为{}秒'.format(round(end-start,0)))

# 原生的lightgbm的AUC

lgb_origi_pre = lgb_origi.predict(x_test)

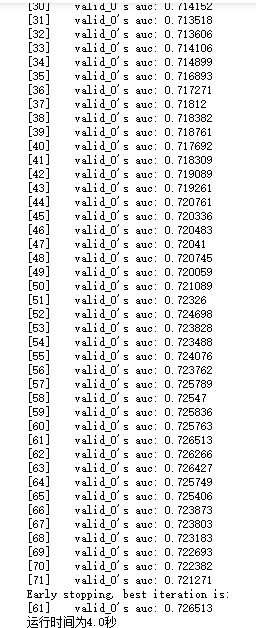

acu_curve(y_test,lgb_origi_pre)########################################lightgbm尝试调参#################################

# 确定最大迭代次数,学习率设为0.1

base_parmas={'boosting_type':'gbdt', # 使用的算法,还有rf,dart,goss

'learning_rate':0.1,

'num_leaves':40, # 一棵树上的叶子数,默认31

'max_depth':-1, # 树的最大深度,0:无限制

'bagging_fraction':0.8, # 每次迭代随机选取部分数据

'feature_fraction':0.8, # 每次迭代随机选取部分特征

'lambda_l1':0, # 正则化,

'lambda_l2':0,

'min_data_in_leaf':20, # 一个叶子上数据的最小数量,默认20,处理过拟合,设置较大可以避免生成一个较深的树,

'min_sum_hessian_inleaf':0.001, # 一个叶子上最小hessian和,,处理过拟合

'metric':'auc'}

cv_result = lgb.cv(train_set=lgb_train,

num_boost_round=200, # 迭代次数,默认100

early_stopping_rounds=5, # 没有提高,模型将停止训练

nfold=5,

stratified=True,

shuffle=True,

params=base_parmas,

metrics='auc',

seed=0)

print('最大的迭代次数: {}'.format(len(cv_result['auc-mean'])))

print('交叉验证的AUC: {}'.format(max(cv_result['auc-mean'])))

# 输出

# 最大的迭代次数: 28

# 交叉验证的AUC: 0.7136171096752256# num_leaves ,步长设为5

from sklearn.model_selection import GridSearchCV

from sklearn.model_selection import cross_val_score

from sklearn.model_selection import StratifiedKFold

param_find1 = {'num_leaves':range(10,50,5)}

cv_fold = StratifiedKFold(n_splits=5,random_state=0,shuffle=True)

start = time.time()

grid_search1 = GridSearchCV(estimator=lgb.LGBMClassifier(learning_rate=0.1,

n_estimators = 28,

max_depth=-1,

min_child_weight=0.001,

min_child_samples=20,

subsample=0.8,

colsample_bytree=0.8,

reg_lambda=0,

reg_alpha=0),

cv = cv_fold,

n_jobs=-1,

param_grid = param_find1,

scoring='roc_auc')

grid_search1.fit(x_train,y_train)

end = time.time()

print('运行时间为:{}'.format(round(end-start,0)))

print(grid_search1.get_params)

print('\t')

print(grid_search1.best_params_)

print('\t')

print(grid_search1.best_score_)

grid_search1.get_params# num_leaves,步长为1

param_find2 = {'num_leaves':range(40,50,1)}

grid_search2 = GridSearchCV(estimator=lgb.LGBMClassifier(n_estimators=28,

learning_rate=0.1,

min_child_weight=0.001,

min_child_samples=20,

subsample=0.8,

colsample_bytree=0.8,

reg_lambda=0,

reg_alpha=0

),

cv=cv_fold,

n_jobs=-1,

scoring='roc_auc',

param_grid = param_find2)

grid_search2.fit(x_train,y_train)

print(grid_search2.get_params)

print('\t')

print(grid_search2.best_params_)

print('\t')

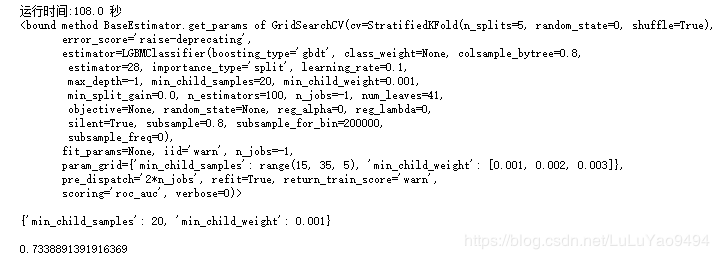

print(grid_search2.best_score_)# 确定num_leaves 为41 ,下面进行min_child_samples 和 min_child_weight的调参,设定步长为5

param_find3 = {'min_child_samples':range(15,35,5),

'min_child_weight':[x/1000 for x in range(1,4,1)]}

grid_search3 = GridSearchCV(estimator=lgb.LGBMClassifier(estimator=28,

learning_rate=0.1,

num_leaves=41,

subsample=0.8,

colsample_bytree=0.8,

reg_lambda=0,

reg_alpha=0

),

cv=cv_fold,

scoring='roc_auc',

param_grid = param_find3,

n_jobs=-1)

start = time.time()

grid_search3.fit(x_train,y_train)

end = time.time()

print('运行时间:{} 秒'.format(round(end-start,0)))

print(grid_search3.get_params)

print('\t')

print(grid_search3.best_params_)

print('\t')

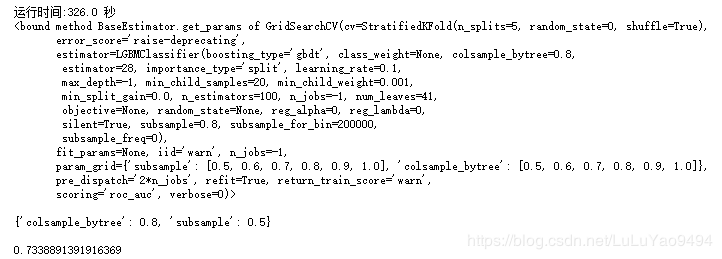

print(grid_search3.best_score_)# 确定min_child_weight为0.001,min_child_samples为20,下面对subsample和colsample_bytree进行调参

param_find4 = {'subsample':[x/10 for x in range(5,11,1)],

'colsample_bytree':[x/10 for x in range(5,11,1)]}

grid_search4 = GridSearchCV(estimator=lgb.LGBMClassifier(estimator=28,

learning_rate=0.1,

min_child_samples=20,

min_child_weight=0.001,

num_leaves=41,

reg_lambda=0,

reg_alpha=0

),

cv=cv_fold,

scoring='roc_auc',

param_grid = param_find4,

n_jobs=-1)

start = time.time()

grid_search4.fit(x_train,y_train)

end = time.time()

print('运行时间:{} 秒'.format(round(end-start,0)))

print(grid_search4.get_params)

print('\t')

print(grid_search4.best_params_)

print('\t')

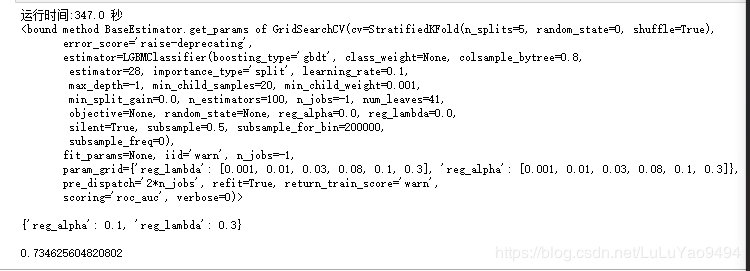

print(grid_search4.best_score_)# 再调整reg_lambda和reg_alpha

param_find5 = {'reg_lambda':[0.001,0.01,0.03,0.08,0.1,0.3],

'reg_alpha':[0.001,0.01,0.03,0.08,0.1,0.3]}

grid_search5 = GridSearchCV(estimator=lgb.LGBMClassifier(estimator=28,

learning_rate=0.1,

min_child_samples=20,

min_child_weight=0.001,

num_leaves=41,

subsample= 0.5,

colsample_bytree=0.8

),

cv=cv_fold,

scoring='roc_auc',

param_grid = param_find5,

n_jobs=-1)

start = time.time()

grid_search5.fit(x_train,y_train)

end = time.time()

print('运行时间:{} 秒'.format(round(end-start,0)))

print(grid_search5.get_params)

print('\t')

print(grid_search5.best_params_)

print('\t')

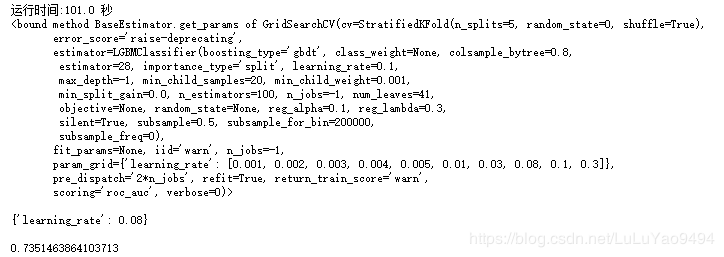

print(grid_search5.best_score_)param_find6 = {'learning_rate':[0.001,0.002,0.003,0.004,0.005,0.01,0.03,0.08,0.1,0.3,0.5]}

grid_search6 = GridSearchCV(estimator=lgb.LGBMClassifier(estimator=28,

min_child_samples=20,

min_child_weight=0.001,

num_leaves=41,

subsample= 0.5,

colsample_bytree=0.8 ,

reg_alpha=0.1,

reg_lambda=0.3

),

cv=cv_fold,

scoring='roc_auc',

param_grid = param_find6,

n_jobs=-1)

start = time.time()

grid_search6.fit(x_train,y_train)

end = time.time()

print('运行时间:{} 秒'.format(round(end-start,0)))

print(grid_search6.get_params)

print('\t')

print(grid_search6.best_params_)

print('\t')

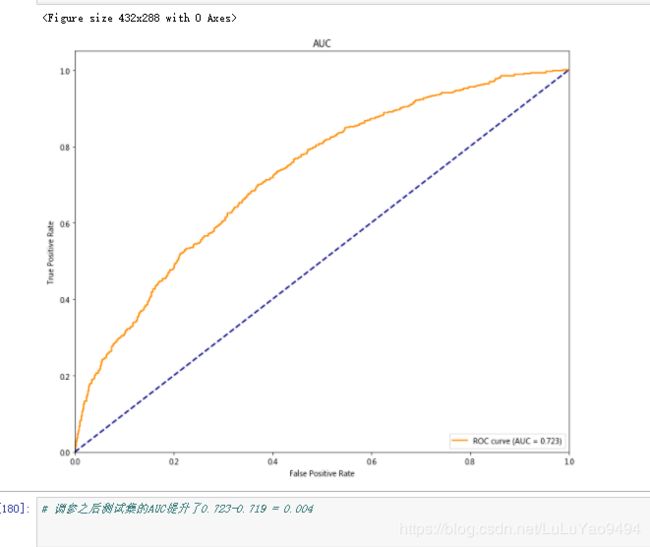

print(grid_search6.best_score_)# 将最佳参数再次带入cv函数

best_params = {

'boosting_type':'gbdt',

'learning_rate': 0.08,

'num_leaves':41,

'max_depth':-1,

'bagging_fraction':0.5,

'feature_fraction':0.8,

'min_data_in_leaf':20,

'min_sum_hessian_in_leaf':0.001,

'lambda_l1':0.1,

'lambda_l2':0.3,

'metric':'auc'

}

best_cv = lgb.cv(train_set=lgb_train,

early_stopping_rounds=5,

num_boost_round=200,

nfold=5,

params=best_params,

metrics='auc',

stratified=True,

shuffle=True,

seed=0)

print('最佳参数的迭代次数: {}'.format(len(best_cv['auc-mean'])))

print('交叉验证的AUC: {}'.format(max(best_cv['auc-mean'])))

# 最佳参数的迭代次数: 50

# 交叉验证的AUC: 0.7167089545162871

lgb_single_model = lgb.LGBMClassifier(n_estimators=50,

learning_rate=0.08,

min_child_weight=0.001,

min_child_samples = 20,

subsample=0.5,

colsample_bytree=0.8,

num_leaves=41,

max_depth=-1,

reg_lambda=0.3,

reg_alpha=0.1,

random_state=0)

lgb_single_model.fit(x_train,y_train)

pre = lgb_single_model.predict_proba(x_test)[:,1]

acu_curve(y_test,pre)