Implementing DCGAN in TensorFlow

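DCGAN (Deep Convolutional Generative Adversarial Network) builds on the GAN by implementing both the generator and the discriminator as CNNs instead of simple multilayer perceptrons. The paper also adapts the CNN architecture in several ways. It removes fully connected layers, applies batch normalization, upsamples with transposed convolutions (often called "deconvolutions"), and uses LeakyReLU activations in the discriminator. Note that the conditional branch used below for MNIST still contains fully connected layers (the `linear` calls). Reference paper: "Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks". GitHub code: https://github.com/carpedm20/DCGAN-tensorflow. I modified the author's source slightly so that it works on the MNIST dataset, where it converges quickly. The code is as follows: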
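Two of the building blocks just mentioned, LeakyReLU and batch normalization, are easy to check in isolation before reading the full listing. The following is a minimal NumPy sketch, not part of the original code. `leaky_relu` uses the same formula as `lrelu` in the listing (leak 0.2). `batch_norm_train` is a hypothetical helper that approximates what `tf.contrib.layers.batch_norm` computes with `is_training=True` (per-channel batch statistics, learned scale and shift, moving averages with `decay=0.9`); it is an illustration, not TensorFlow's exact implementation.

```python
import numpy as np

def leaky_relu(x, leak=0.2):
    # Same formula as lrelu in the listing: x where x > 0, leak * x elsewhere
    return np.maximum(x, leak * x)

def batch_norm_train(x, gamma, beta, moving_mean, moving_var, momentum=0.9, eps=1e-5):
    """Hypothetical training-mode batch norm over an NHWC tensor (per-channel statistics)."""
    axes = tuple(range(x.ndim - 1))          # every axis except the channel axis
    mean = x.mean(axis=axes)
    var = x.var(axis=axes)
    x_hat = (x - mean) / np.sqrt(var + eps)  # normalize with the batch statistics
    out = gamma * x_hat + beta               # learned scale (gamma) and shift (beta)
    # Exponential moving averages, used instead of batch statistics at inference time
    new_mean = momentum * moving_mean + (1 - momentum) * mean
    new_var = momentum * moving_var + (1 - momentum) * var
    return out, new_mean, new_var

rng = np.random.default_rng(0)
# A fake activation map with the shape of h1 in the unconditional generator: [64 7 7 128]
x = rng.normal(loc=3.0, scale=2.0, size=(64, 7, 7, 128))
c = x.shape[-1]
y, mm, mv = batch_norm_train(x, np.ones(c), np.zeros(c), np.zeros(c), np.ones(c))

print(np.abs(y.mean(axis=(0, 1, 2))).max())    # close to 0
print(y.std(axis=(0, 1, 2)).mean())            # close to 1
print(leaky_relu(np.array([-1.0, 0.0, 2.0])))  # values: -0.2, 0.0, 2.0
```

With `gamma=1` and `beta=0`, each channel of the output has roughly zero mean and unit variance regardless of the input's offset and scale, which is what lets the deeper layers train with stable input statistics.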

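#coding=utf-8
# Requires TensorFlow 1.x (tf.placeholder, tf.Session, tf.contrib.layers.batch_norm).
import math
import os
import pickle
import shutil

import numpy as np
import tensorflow as tf
# scipy.misc.imsave was removed in SciPy 1.2, so Pillow is used to save images instead
from PIL import Image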

# A reader for the pickled MNIST dataset (mnist.pkl)
class mnistReader():
    def __init__(self, mnistPath, onehot=True):
        self.mnistPath = mnistPath
        self.onehot = onehot
        self.batch_index = 0
        print('read:', self.mnistPath)
        # The pickle holds (train_set, valid_set, test_set); each set is (images, labels)
        with open(self.mnistPath, 'rb') as fo:
            self.train_set, self.valid_set, self.test_set = pickle.load(fo, encoding='bytes')
        self.data_label_train = list(zip(self.train_set[0], self.train_set[1]))
        np.random.shuffle(self.data_label_train)

    # Return the next training batch; reshuffle and start over once every full batch has been used
    def next_train_batch(self, batch_size=100):
        if self.batch_index >= len(self.data_label_train) // batch_size:
            self.batch_index = 0
            np.random.shuffle(self.data_label_train)
        start = self.batch_index * batch_size
        datum = self.data_label_train[start:start + batch_size]
        self.batch_index += 1
        return self._decode(datum, self.onehot)

    # One-hot labels of the first batch_size training samples, used as the
    # conditions for the generated sample images
    def get_sample_label(self, batch_size=64):
        sample = self.train_set[1][0:batch_size]
        rlabel = list()
        for index in sample:
            hot = np.zeros(10)
            hot[int(index)] = 1
            rlabel.append(hot)
        return rlabel

    # Reshape each image to [28, 28, 1]; if onehot is set, turn each label into a one-hot vector
    def _decode(self, datum, onehot):
        rdata = list()  # images in the batch
        rlabel = list()
        for d, l in datum:
            rdata.append(np.reshape(d, [28, 28, 1]))
            if onehot:
                hot = np.zeros(10)
                hot[int(l)] = 1  # 10-dimensional one-hot label
                rlabel.append(hot)
            else:
                rlabel.append(int(l))
        return rdata, rlabel

# Batch normalization wrapper
class batch_norm(object):
    def __init__(self, epsilon=1e-5, momentum=0.9, name="batch_norm"):
        with tf.variable_scope(name):
            self.epsilon = epsilon
            self.momentum = momentum
            self.name = name

    def __call__(self, x, train=True):
        # updates_collections=None: the moving mean/variance are updated in place,
        # so no separate update ops have to be run
        return tf.contrib.layers.batch_norm(x,
                                            decay=self.momentum,
                                            updates_collections=None,
                                            epsilon=self.epsilon,
                                            scale=True,
                                            is_training=train,
                                            scope=self.name)

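input_height = 28      # input image height
input_width = 28       # input image width
output_height = 28     # output image height
output_width = 28      # output image width
max_epoch = 100        # number of "epochs"; train() runs 100 iterations per epoch
batch_size = 64        # batch size
y_dim = 10             # dimension of the condition vector (the label); set to None for an unconditional DCGAN
z_dim = 100            # dimension of the noise vector
gf_dim = 64            # base number of generator filters (layers use multiples of it)
df_dim = 64            # number of filters in the discriminator's first conv layer
gfc_dim = 1024         # units in the generator's fully connected layer
dfc_dim = 1024         # units in the discriminator's fully connected layer
c_dim = 1              # number of image channels
output_path = "DCGAN"  # directory for the generated images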

# Discriminator. With batch_size=64, image has shape [64 28 28 1] and y has shape [64 10]
def discriminator(image, y=None, reuse=False):
    # Put all discriminator variables in one variable scope
    with tf.variable_scope("discriminator") as scope:
        if reuse:
            scope.reuse_variables()  # reuse the variables already created in this scope
        d_bn1 = batch_norm(name='d_bn1')
        d_bn2 = batch_norm(name='d_bn2')
        if not y_dim:
            d_bn3 = batch_norm(name='d_bn3')

        # y_dim is None: no condition vector
        if not y_dim:
            # Conv layer 1: h0 has shape [64 14 14 64]. All kernels are 5x5 with stride 2 and
            # SAME padding, so each layer halves the height and width (a quarter of the area)
            h0 = lrelu(conv2d(image, df_dim, name='d_h0_conv'))
            # Conv layer 2: h1 has shape [64 7 7 128]
            h1 = lrelu(d_bn1(conv2d(h0, df_dim * 2, name='d_h1_conv')))
            # Conv layer 3: h2 has shape [64 4 4 256]
            h2 = lrelu(d_bn2(conv2d(h1, df_dim * 4, name='d_h2_conv')))
            # Conv layer 4: h3 has shape [64 2 2 512]
            h3 = lrelu(d_bn3(conv2d(h2, df_dim * 8, name='d_h3_conv')))
            # Flatten to [64 2048] and apply a linear layer: h4 has shape [64 1]
            h4 = linear(tf.reshape(h3, [batch_size, -1]), 1, 'd_h4_lin')
            # Sigmoid gives the probability that the input is real, shape [64 1]
            return tf.nn.sigmoid(h4), h4

        # y_dim is set: condition on the label
        else:
            # yb has shape [64 1 1 10]
            yb = tf.reshape(y, [batch_size, 1, 1, y_dim])
            '''
            conv_cond_concat joins image ([64 28 28 1]) with yb. It first multiplies yb
            element-wise by an all-ones tensor of shape [64 28 28 10], which broadcasts to
            [64 28 28 10], and then concatenates the result with image along the 4th
            (channel) axis, giving [64 28 28 11]. This is the Conditional GAN idea: the
            label is given to the network as extra input channels. x = [64 28 28 11] is
            then passed to conv2d.
            '''
            x = conv_cond_concat(image, yb)
            # Conv layer 1, then append yb again: h0 has shape [64 14 14 21]
            h0 = lrelu(conv2d(x, c_dim + y_dim, name='d_h0_conv'))
            h0 = conv_cond_concat(h0, yb)
            # Conv layer 2, flattened and concatenated with y: h1 has shape [64 7*7*74+10]
            h1 = lrelu(d_bn1(conv2d(h0, df_dim + y_dim, name='d_h1_conv')))
            h1 = tf.reshape(h1, [batch_size, -1])
            h1 = tf.concat([h1, y], 1)
            # Fully connected layer 1: h2 has shape [64 1024+10]
            h2 = lrelu(d_bn2(linear(h1, dfc_dim, 'd_h2_lin')))
            h2 = tf.concat([h2, y], 1)
            # Fully connected output layer: h3 has shape [64 1]
            h3 = linear(h2, 1, 'd_h3_lin')
            # Sigmoid gives the probability that the input is real, shape [64 1]
            return tf.nn.sigmoid(h3), h3

# Generator. z is uniformly distributed noise with shape [64 100]; y has shape [64 10]
def generator(z, y):
    with tf.variable_scope("generator"):
        g_bn0 = batch_norm(name='g_bn0')
        g_bn1 = batch_norm(name='g_bn1')
        g_bn2 = batch_norm(name='g_bn2')
        if not y_dim:
            g_bn3 = batch_norm(name='g_bn3')

        # No condition vector
        if not y_dim:
            # s_h, s_w = 28
            s_h, s_w = output_height, output_width
            # s_h2, s_w2 = 14
            s_h2, s_w2 = conv_out_size_same(s_h, 2), conv_out_size_same(s_w, 2)
            # s_h4, s_w4 = 7
            s_h4, s_w4 = conv_out_size_same(s_h2, 2), conv_out_size_same(s_w2, 2)
            # s_h8, s_w8 = 4
            s_h8, s_w8 = conv_out_size_same(s_h4, 2), conv_out_size_same(s_w4, 2)
            # s_h16, s_w16 = 2
            s_h16, s_w16 = conv_out_size_same(s_h8, 2), conv_out_size_same(s_w8, 2)
            # Project z with a linear layer to a [64 64*8*2*2] vector
            z_, h0_w, h0_b = linear(z, gf_dim * 8 * s_h16 * s_w16, 'g_h0_lin', with_w=True)
            # Reshape it to [64 2 2 64*8]
            h0 = tf.reshape(z_, [-1, s_h16, s_w16, gf_dim * 8])
            h0 = tf.nn.relu(g_bn0(h0))
            # Transposed conv 1: h1 has shape [64 4 4 256]
            h1, h1_w, h1_b = deconv2d(h0, [batch_size, s_h8, s_w8, gf_dim * 4], name='g_h1', with_w=True)
            h1 = tf.nn.relu(g_bn1(h1))
            # Transposed conv 2: h2 has shape [64 7 7 128]
            h2, h2_w, h2_b = deconv2d(h1, [batch_size, s_h4, s_w4, gf_dim * 2], name='g_h2', with_w=True)
            h2 = tf.nn.relu(g_bn2(h2))
            # Transposed conv 3: h3 has shape [64 14 14 64]
            h3, h3_w, h3_b = deconv2d(h2, [batch_size, s_h2, s_w2, gf_dim * 1], name='g_h3', with_w=True)
            h3 = tf.nn.relu(g_bn3(h3))
            # Transposed conv 4: the output has shape [64 28 28 1]
            h4, h4_w, h4_b = deconv2d(h3, [batch_size, s_h, s_w, c_dim], name='g_h4', with_w=True)
            # tanh maps the output to [-1, 1]
            return tf.nn.tanh(h4)

        # Condition vector present
        else:
            # s_h, s_w = 28
            s_h, s_w = output_height, output_width
            # s_h2, s_h4 = 14, 7
            s_h2, s_h4 = int(s_h / 2), int(s_h / 4)
            # s_w2, s_w4 = 14, 7
            s_w2, s_w4 = int(s_w / 2), int(s_w / 4)
            # y is [64 10] and z is [64 100]; after concatenation z is [64 110]
            z = tf.concat([z, y], 1)
            # h0 has shape [64 1024+10]
            h0 = tf.nn.relu(g_bn0(linear(z, gfc_dim, 'g_h0_lin')))
            h0 = tf.concat([h0, y], 1)
            # h1 has shape [64 7 7 128+10]
            h1 = tf.nn.relu(g_bn1(linear(h0, gf_dim * 2 * s_h4 * s_w4, 'g_h1_lin')))
            h1 = tf.reshape(h1, [batch_size, s_h4, s_w4, gf_dim * 2])
            yb = tf.reshape(y, [batch_size, 1, 1, y_dim])
            h1 = conv_cond_concat(h1, yb)
            # Transposed conv 1: h2 has shape [64 14 14 128+10]
            h2 = tf.nn.relu(g_bn2(deconv2d(h1, [batch_size, s_h2, s_w2, gf_dim * 2], name='g_h2')))
            h2 = conv_cond_concat(h2, yb)
            # Transposed conv 2: the output has shape [64 28 28 1]; sigmoid maps it to [0, 1]
            return tf.nn.sigmoid(deconv2d(h2, [batch_size, s_h, s_w, c_dim], name='g_h3'))

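# Output size of a SAME-padded convolution: ceil(size / stride)
def conv_out_size_same(size, stride):
    return int(math.ceil(float(size) / float(stride)))

# Concatenate a feature map x (e.g. an image) with the condition y along the channel axis
def conv_cond_concat(x, y):
    x_shapes = x.get_shape()
    y_shapes = y.get_shape()
    # y ([N 1 1 C_y]) times an all-ones [N H W C_y] tensor is broadcast to [N H W C_y]
    return tf.concat([x, y * tf.ones([x_shapes[0], x_shapes[1], x_shapes[2], y_shapes[3]])], 3)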

# Ordinary strided convolution
def conv2d(input_, output_dim, k_h=5, k_w=5, d_h=2, d_w=2, stddev=0.02, name="conv2d"):
    with tf.variable_scope(name):
        '''
        tf.get_variable creates a variable (just like tf.Variable) or, when the scope is
        reused, returns the existing one.
        k_h: kernel height
        k_w: kernel width
        input_.get_shape()[-1]: number of input channels of the kernel
        d_h: vertical stride
        d_w: horizontal stride
        output_dim: number of kernels (output channels)
        '''
        w = tf.get_variable('w', [k_h, k_w, input_.get_shape()[-1], output_dim],
                            initializer=tf.truncated_normal_initializer(stddev=stddev))
        conv = tf.nn.conv2d(input_, w, strides=[1, d_h, d_w, 1], padding='SAME')
        biases = tf.get_variable('biases', [output_dim], initializer=tf.constant_initializer(0.0))
        conv = tf.reshape(tf.nn.bias_add(conv, biases), conv.get_shape())
        return conv

# Transposed convolution ("deconvolution"): reverses the shape change of a strided
# convolution, upsampling the feature map (it does not invert the convolution's values)
def deconv2d(input_, output_shape, k_h=5, k_w=5, d_h=2, d_w=2, stddev=0.02, name="deconv2d", with_w=False):
    with tf.variable_scope(name):
        # filter: [height, width, output_channels, in_channels]
        w = tf.get_variable('w', [k_h, k_w, output_shape[-1], input_.get_shape()[-1]],
                            initializer=tf.random_normal_initializer(stddev=stddev))
        try:
            deconv = tf.nn.conv2d_transpose(input_, w, output_shape=output_shape,
                                            strides=[1, d_h, d_w, 1])
        # Support for versions of TensorFlow before 0.7.0
        except AttributeError:
            deconv = tf.nn.deconv2d(input_, w, output_shape=output_shape, strides=[1, d_h, d_w, 1])
        biases = tf.get_variable('biases', [output_shape[-1]], initializer=tf.constant_initializer(0.0))
        deconv = tf.reshape(tf.nn.bias_add(deconv, biases), deconv.get_shape())
        if with_w:
            return deconv, w, biases
        else:
            return deconv

# Leaky ReLU: max(x, leak * x), i.e. x for x > 0 and leak * x otherwise
def lrelu(x, leak=0.2, name="lrelu"):
    return tf.maximum(x, leak * x)

# Linear transform, i.e. a fully connected layer: input_ @ Matrix + bias
def linear(input_, output_size, scope=None, stddev=0.02, bias_start=0.0, with_w=False):
    shape = input_.get_shape().as_list()
    with tf.variable_scope(scope or "Linear"):
        matrix = tf.get_variable("Matrix", [shape[1], output_size], tf.float32,
                                 tf.random_normal_initializer(stddev=stddev))
        bias = tf.get_variable("bias", [output_size], initializer=tf.constant_initializer(bias_start))
        if with_w:
            return tf.matmul(input_, matrix) + bias, matrix, bias
        else:
            return tf.matmul(input_, matrix) + bias

# Train the model
def train():
    # Input placeholders
    y = tf.placeholder(tf.float32, [batch_size, y_dim], name='y')
    image_dims = [input_height, input_width, c_dim]
    inputs = tf.placeholder(tf.float32, [batch_size] + image_dims, name='real_images')
    z = tf.placeholder(tf.float32, [batch_size, z_dim], name='z')

    # Build the generator and the discriminator; the second discriminator call reuses
    # the variables of the first, so real and generated images go through the same network
    G = generator(z, y)
    D, D_logits = discriminator(inputs, y, reuse=False)
    D_, D_logits_ = discriminator(G, y, reuse=True)

    # Losses: D should output 1 for real images and 0 for generated ones;
    # G wants D to output 1 for its images
    d_loss_real = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=D_logits, labels=tf.ones_like(D)))
    d_loss_fake = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=D_logits_, labels=tf.zeros_like(D_)))
    g_loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=D_logits_, labels=tf.ones_like(D_)))
    d_loss = d_loss_real + d_loss_fake

    # The variables each optimizer is allowed to update
    t_vars = tf.trainable_variables()  # all trainable variables in the graph
    d_vars = [var for var in t_vars if var.name.startswith('discriminator/')]
    g_vars = [var for var in t_vars if var.name.startswith('generator/')]

    # Optimizers
    d_optim = tf.train.AdamOptimizer(0.0001).minimize(d_loss, var_list=d_vars)
    g_optim = tf.train.AdamOptimizer(0.0001).minimize(g_loss, var_list=g_vars)

    init = tf.global_variables_initializer()
    sess = tf.Session()
    sess.run(init)

    if os.path.exists(output_path):
        shutil.rmtree(output_path)  # delete the old output directory
    os.mkdir(output_path)  # and recreate it empty

    # Fixed noise, so that samples from different epochs are comparable
    sample_z = np.random.uniform(-1, 1, size=(batch_size, z_dim))
    mnist = mnistReader(mnistPath="E:/testdata/mnist.pkl")
    counter = 1
    for i in range(max_epoch):
        # Each "epoch" here is 100 iterations (6400 images), not a full pass over the training set
        for j in range(100):
            print("epoch:%s, iter:%s" % (i, j))
            batch_images, batch_labels = mnist.next_train_batch(batch_size=batch_size)
            if not y_dim:
                # The unconditional generator ends in tanh, so scale real images from [0, 1] to [-1, 1]
                batch_images = 2.0 * np.asarray(batch_images) - 1.0
            batch_z = np.random.uniform(-1, 1, [batch_size, z_dim]).astype(np.float32)
            # One discriminator update, then two generator updates
            sess.run(d_optim, feed_dict={inputs: batch_images, z: batch_z, y: batch_labels})
            sess.run(g_optim, feed_dict={z: batch_z, y: batch_labels})
            sess.run(g_optim, feed_dict={z: batch_z, y: batch_labels})
        # After each epoch, generate images from the fixed noise and save them
        sample_labels = mnist.get_sample_label(batch_size=batch_size)
        samples = sess.run(G, feed_dict={z: sample_z, y: sample_labels})
        show_result(samples, os.path.join(output_path, "random_sample%s.jpg" % counter))
        counter += 1

# Tile a batch of generated images into a grid and save it as one image file
def show_result(batch_res, fname, grid_size=(8, 8), grid_pad=5):
    # The conditional generator ends in sigmoid ([0, 1]); the unconditional one ends in tanh ([-1, 1])
    if not y_dim:
        batch_res = 0.5 * batch_res.reshape((batch_res.shape[0], 28, 28)) + 0.5
    else:
        batch_res = batch_res.reshape((batch_res.shape[0], 28, 28))
    img_h, img_w = batch_res.shape[1], batch_res.shape[2]
    grid_h = img_h * grid_size[0] + grid_pad * (grid_size[0] - 1)
    grid_w = img_w * grid_size[1] + grid_pad * (grid_size[1] - 1)
    img_grid = np.zeros((grid_h, grid_w), dtype=np.uint8)
    for i, res in enumerate(batch_res):
        if i >= grid_size[0] * grid_size[1]:
            break
        # Values are now in [0, 1]; multiply by 255 to get pixel values
        img = (np.clip(res, 0.0, 1.0) * 255).astype(np.uint8)
        row = (i // grid_size[1]) * (img_h + grid_pad)
        col = (i % grid_size[1]) * (img_w + grid_pad)
        img_grid[row:row + img_h, col:col + img_w] = img
    Image.fromarray(img_grid).save(fname)

if __name__ == '__main__':

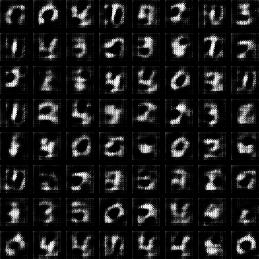

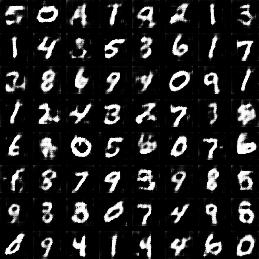

train()第一次epoch和第十四次epoch的结果如下:
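The two least obvious pieces of the listing are the label-conditioning trick in `conv_cond_concat` and the spatial-size arithmetic in `conv_out_size_same`. The sketch below reproduces both in NumPy so that the shapes quoted in the code comments can be checked without TensorFlow. It is not part of the original code: `cond_concat` is a hypothetical NumPy stand-in for `conv_cond_concat`, and the random images and cyclic labels are made-up inputs used only for the shape check.

```python
import math
import numpy as np

def conv_out_size_same(size, stride):
    # Same formula as in the listing: SAME padding gives ceil(size / stride)
    return int(math.ceil(float(size) / float(stride)))

def cond_concat(x, yb):
    """Hypothetical NumPy version of conv_cond_concat: tile yb [N,1,1,C_y] over H and W,
    then append it to x as extra channels."""
    n, h, w, _ = x.shape
    tiled = yb * np.ones((n, h, w, yb.shape[3]))  # broadcasting: [N,1,1,C_y] -> [N,H,W,C_y]
    return np.concatenate([x, tiled], axis=3)

batch_size, y_dim = 64, 10
images = np.random.rand(batch_size, 28, 28, 1)                # made-up images
labels = np.eye(y_dim)[np.arange(batch_size) % y_dim]       # one-hot labels 0..9, repeating
yb = labels.reshape(batch_size, 1, 1, y_dim)                 # as in the listing: [64 1 1 10]

x = cond_concat(images, yb)
print(x.shape)                                    # (64, 28, 28, 11)
# Every pixel of image 3 carries the same one-hot label in channels 1..10
print(np.array_equal(x[3, 5, 7, 1:], labels[3]))  # True

# Spatial sizes in the unconditional branch: each stride-2 layer halves (rounding up)
sizes = [28]
for _ in range(4):
    sizes.append(conv_out_size_same(sizes[-1], 2))
print(sizes)                                      # [28, 14, 7, 4, 2]
```

The rounding up is why the 7x7 map becomes 4x4 rather than 3x3, which matches the `[64 4 4 256]` shape in the discriminator comments.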