数仓项目分层简介和Hive环境搭建(替换计算引擎为Tez)

文章目录

- 一 分层架构

- 二 hive 安装(1.2.1)

- 2.1 集群规划

- 2.2 hive 安装配置

- 2.2.1 上传hive安装包修改配置文件

- 2.2.2 修改元数据存储为mysql(安装mysql切换至root用户)

- 2.2.2.1 检查是否安装的有mysql,有则卸载掉自带的

- 2.2.2.2 安装mysql服务端

- 2.2.2.3 安装mysql客户端

- 2.2.2.4 修改mysql密码和授权用户访问

- 2.2.2.5 新增hive-site.xml 配置mysql连接四个字符串

- 2.2.2.6 拷贝mysql驱动至hive/lib目录下

- 2.2.3 修改hive的log存储目录(默认存在/tmp)

- 2.2.4 hive启动测试

- 2.2.5 修改计算引擎为Tez

- 2.2.5.1 上传Tez压缩包解压

- 2.2.5.2 hive配置Tez引擎

- 1 在hive-env.sh文件中添加tez环境变量配置和依赖包环境变量配置

- 2 在hive-site.xml文件中添加如下配置,更改hive计算引擎

- 3 在Hive的/opt/module/hive/conf下面创建一个tez-site.xml文件

- 4 将/opt/module/tez-0.9.1上传到HDFS的/tez路径

- 5 测试

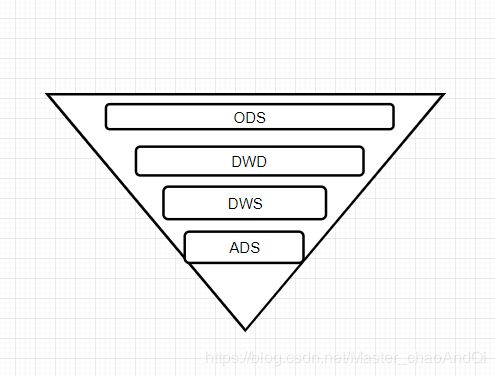

一 分层架构

- ODS(Operation Data Sore)原始数据层,将数据原封不动的加载到hive表中

- DWD(Data Warehouse Detail)数据明细层,对原始数据进行ETL后的数据

- DWS(Data Warehouse Service) 数据服务层,以DWD为基础进行轻度汇总

- ADS(application data store)数据应用层,再DWS基础上对数据进行汇总

命名规范: - ODS层命名为ods

- DWD层命名为dwd

- DWS层命名为dws

- ADS层命名为ads

- 临时表数据库命名为xxx_tmp

- 备份数据数据库命名为xxx_bak

二 hive 安装(1.2.1)

2.1 集群规划

| 服务器hadoop102 | 服务器hadoop102 | 服务器hadoop102 | |

|---|---|---|---|

| hive | √ | ||

| mysql | √ |

2.2 hive 安装配置

2.2.1 上传hive安装包修改配置文件

修改hive安装目录下conf目录下的hive-env.sh.template为hive.env.sh

export HADOOP_HOME=/opt/module/hadoop-2.7.2

export HIVE_CONF_DIR=/opt/module/hive1.2.1/conf

2.2.2 修改元数据存储为mysql(安装mysql切换至root用户)

2.2.2.1 检查是否安装的有mysql,有则卸载掉自带的

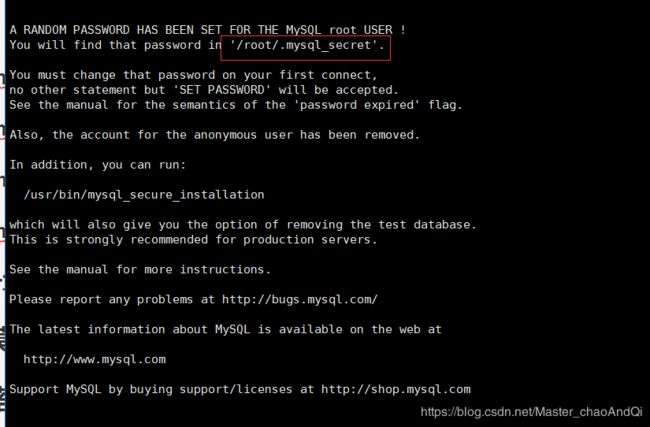

2.2.2.2 安装mysql服务端

rpm -ivh MySQL-server-5.6.24-1.el6.x86_64.rpm

cat /root/.mysql_secret

2.2.2.3 安装mysql客户端

rpm -ivh MySQL-client-5.6.24-1.el6.x86_64.rpm

使用默认密码登陆后修改密码

mysql -uroot -phT1sbje3p4fP2bQJ

mysql> SET PASSWORD=PASSWORD('root');

update user set host='%' where host='localhost';

flush privileges;

2.2.2.4 修改mysql密码和授权用户访问

SET PASSWORD=PASSWORD('root');

delete from mysql.user where host!='localhost'

2.2.2.5 新增hive-site.xml 配置mysql连接四个字符串

javax.jdo.option.ConnectionURL

jdbc:mysql://hadoop102:3306/metastore?createDatabaseIfNotExist=true

JDBC connect string for a JDBC metastore

javax.jdo.option.ConnectionDriverName

com.mysql.jdbc.Driver

Driver class name for a JDBC metastore

javax.jdo.option.ConnectionUserName

root

username to use against metastore database

javax.jdo.option.ConnectionPassword

000000

password to use against metastore database

hive.cli.print.header

true

hive.cli.print.current.db

true

2.2.2.6 拷贝mysql驱动至hive/lib目录下

cp mysql-connector-java-5.1.27-bin.jar /opt/module/hive1.2.1/lib/

2.2.3 修改hive的log存储目录(默认存在/tmp)

mv hive-log4j.properties.template hive-log4j.properties

vim hive-log4j.properties

hive.log.dir=/opt/module/hive1.2.1/logs/

2.2.4 hive启动测试

尖叫提示:启动hive之前需先启动hadoop

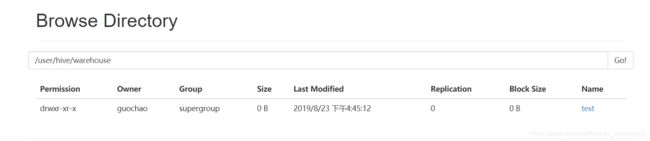

启动之前在hdfs上创建hive的数据目录

hadoop fs -mkdir /tmp

hadoop fs -mkdir -p /user/hive/warehouse

hadoop fs -chmod g+w /tmp

hadoop fs -chmod g+w /user/hive/warehouse

启动hive使用belline连接

/opt/module/hive1.2.1/bin/hiveserver2 &

/opt/module/hive1.2.1/bin/belline

!connect jdbc:hive2://hadoop102:10000

输入用户名回车

0: jdbc:hive2://hadoop102:10000> show databases;

OK

+----------------+--+

| database_name |

+----------------+--+

| default |

+----------------+--+

1 row selected (1.262 seconds)

0: jdbc:hive2://hadoop102:10000> create table test(id int,name string);

OK

No rows affected (0.29 seconds)

0: jdbc:hive2://hadoop102:10000> show tables;

OK

+-----------+--+

| tab_name |

+-----------+--+

| test |

+-----------+--+

1 row selected (0.047 seconds)

2.2.5 修改计算引擎为Tez

Tez是一个Hive的运行引擎,性能优于MR

2.2.5.1 上传Tez压缩包解压

tar -zxf apache-tez-0.9.1-bin.tar.gz -C /opt/module/

修改文件名为

mv apache-tez-0.9.1-bin/ tez-0.9.1

2.2.5.2 hive配置Tez引擎

1 在hive-env.sh文件中添加tez环境变量配置和依赖包环境变量配置

export TEZ_HOME=/opt/module/tez-0.9.1 #是你的tez的解压目录

export TEZ_JARS=""

for jar in `ls $TEZ_HOME |grep jar`; do

export TEZ_JARS=$TEZ_JARS:$TEZ_HOME/$jar

done

for jar in `ls $TEZ_HOME/lib`; do

export TEZ_JARS=$TEZ_JARS:$TEZ_HOME/lib/$jar

done

export HIVE_AUX_JARS_PATH=/opt/module/hadoop-2.7.2/share/hadoop/common/hadoop-lzo-0.4.20.jar$TEZ_JARS

2 在hive-site.xml文件中添加如下配置,更改hive计算引擎

hive.execution.engine

tez

3 在Hive的/opt/module/hive/conf下面创建一个tez-site.xml文件

tez.lib.uris

#${fs.defaultFS}/tez/tez-0.9.1 hadoop上对应的目录

${fs.defaultFS}/tez/tez-0.9.1,${fs.defaultFS}/tez/tez-0.9.1/lib

tez.lib.uris.classpath

${fs.defaultFS}/tez/tez-0.9.1,${fs.defaultFS}/tez/tez-0.9.1/lib

tez.use.cluster.hadoop-libs

true

tez.history.logging.service.class

org.apache.tez.dag.history.logging.ats.ATSHistoryLoggingService

4 将/opt/module/tez-0.9.1上传到HDFS的/tez路径

hadoop fs -mkdir /tez

hadoop fs -put /opt/module/tez-0.9.1/ /tez

5 测试

create table test(id int,name string);

insert into test values(1,'zhangsan');

运行时界面

--------------------------------------------------------------------------------

VERTICES STATUS TOTAL COMPLETED RUNNING PENDING FAILED KILLED

--------------------------------------------------------------------------------

--------------------------------------------------------------------------------

VERTICES: 00/00 [>>--------------------------] 0% ELAPSED TIME: 20.10 s

--------------------------------------------------------------------------------

运行报错:(这种问题是从机上运行的Container试图使用过多的内存,而被NodeManager kill掉了)

240.6 MB of 1 GB physical memory used; 2.6 GB of 2.1 GB virtual memory used. Killing container.

Failing this attempt. Failing the application.

FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.tez.TezTask. Application application_1566549334337_0002 failed 2 times due to AM Container for appattempt_1566549334337_0002_000002 exited with exitCode: -103

For more detailed output, check application tracking page:http://hadoop103:8088/cluster/app/application_1566549334337_0002Then, click on links to logs of each attempt.

Diagnostics: Container [pid=10784,containerID=container_1566549334337_0002_02_000001] is running beyond virtual memory limits. Current usage: 240.6 MB of 1 GB physical memory used; 2.6 GB of 2.1 GB virtual memory used. Killing container.

Dump of the process-tree for container_1566549334337_0002_02_000001 :

解决:

方案一:或者是关掉虚拟内存检查。我们选这个,修改yarn-site.xml

yarn.nodemanager.vmem-check-enabled

false

方案二:mapred-site.xml中设置Map和Reduce任务的内存配置如下:(value中实际配置的内存需要根据自己机器内存大小及应用情况进行修改)

mapreduce.map.memory.mb

1536

mapreduce.map.java.opts

-Xmx1024M

mapreduce.reduce.memory.mb

3072

mapreduce.reduce.java.opts

-Xmx2560M

分发配置文件,重启集群测试成功

hive (default)> insert into test values(1,"zhangsan");

Query ID = guochao_20190823172000_58ae4b86-f130-4dd6-b1bb-8969c0b19f72

Total jobs = 1

Launching Job 1 out of 1

Status: Running (Executing on YARN cluster with App id application_1566551738666_0001)

--------------------------------------------------------------------------------

VERTICES STATUS TOTAL COMPLETED RUNNING PENDING FAILED KILLED

--------------------------------------------------------------------------------

Map 1 .......... SUCCEEDED 1 1 0 0 0 0

--------------------------------------------------------------------------------

VERTICES: 01/01 [==========================>>] 100% ELAPSED TIME: 8.11 s

--------------------------------------------------------------------------------

Loading data to table default.test

Table default.test stats: [numFiles=1, numRows=1, totalSize=11, rawDataSize=10]

OK

_col0 _col1

Time taken: 10.39 seconds

总结:

- 在启动hive的时候需先启动hdfs

- 为防止权限检测问题,需先在hdfs上创建指定的目录,并修改对应的权限

存在的问题:

- 由于hive的元数据信息存储在mysql中,所以mysql需要做高可用,可参考技术

基于keepalive实现mysql的高可用。