autoEncoder


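One of the simplest approaches in Deep Learning exploits a property of artificial neural networks: an artificial neural network (ANN) is itself a hierarchical system. Given a neural network, we require its output to equal its input, then train it to adjust its parameters and obtain the weights of each layer. This naturally gives us several different representations of the input I (each layer is one representation), and these representations are the features. An autoencoder is a neural network that reproduces its input signal as closely as possible. To achieve this, the autoencoder must capture the most important factors that represent the input data, much as PCA finds the principal components that represent the original information. (Excerpted from this article.)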
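The PCA analogy can be made concrete. A minimal NumPy sketch, assuming synthetic correlated data: projecting centered data onto its top-k principal directions and back behaves like a linear encoder/decoder pair with a k-unit "hidden layer", and the reconstruction error equals the sum of the discarded squared singular values. `pca_encode_decode` is a hypothetical helper for illustration only, not part of the notebook.

```python
import numpy as np

def pca_encode_decode(X, k):
    """Project centered data onto its top-k principal directions and back.

    The k right-singular vectors act as a tied linear encoder (W) and
    decoder (W.T). Hypothetical helper, for illustration only.
    """
    mean = X.mean(axis=0)
    Xc = X - mean
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    W = Vt[:k].T                 # (n_features, k) encoder weights
    codes = Xc @ W               # "hidden layer": k numbers per sample
    recon = codes @ W.T + mean   # "output layer": back to n_features
    return codes, recon, S

rng = np.random.default_rng(0)
# 200 samples of 5 correlated features (synthetic data, not MNIST)
X = rng.normal(size=(200, 5)) @ rng.normal(size=(5, 5))
codes, recon, S = pca_encode_decode(X, k=2)
err = np.sum((X - recon) ** 2)
print(codes.shape, err, np.sum(S[2:] ** 2))
```

A nonlinear autoencoder such as the one below replaces the fixed linear projection with learned weights and an activation function, but the objective (reproduce the input through a narrower representation) is the same.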

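```python
%matplotlib inline
import numpy as np
import sklearn.preprocessing as prep
import tensorflow as tf  # written against the TensorFlow 1.x API (placeholders, sessions)
import matplotlib.pyplot as plt
from tensorflow.examples.tutorials.mnist import input_data
```

Here we use Xavier (Glorot) initialization for the weights. It automatically adapts the sampling distribution to the number of input and output nodes of each layer (fan_in and fan_out): weights are drawn uniformly from [-sqrt(6 / (fan_in + fan_out)), +sqrt(6 / (fan_in + fan_out))], so they start out at a suitable scale, neither too large nor too small.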
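Why these bounds: a uniform distribution on [-a, a] has variance a^2 / 3, so choosing a = sqrt(6 / (fan_in + fan_out)) gives a weight variance of 2 / (fan_in + fan_out). The NumPy sketch below checks this numerically for the 784 -> 200 layer used later; `xavier_uniform_np` is a hypothetical NumPy mirror of `xavier_init`, not part of the notebook.

```python
import numpy as np

def xavier_uniform_np(fan_in, fan_out, constant=1.0, rng=None):
    # Hypothetical NumPy mirror of the xavier_init bounds used in this notebook
    rng = np.random.default_rng() if rng is None else rng
    limit = constant * np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=(fan_in, fan_out))

fan_in, fan_out = 784, 200
W = xavier_uniform_np(fan_in, fan_out, rng=np.random.default_rng(0))
# U(-a, a) has variance a**2 / 3; with a = sqrt(6 / (fan_in + fan_out))
# that is 2 / (fan_in + fan_out)
target_var = 2.0 / (fan_in + fan_out)
print(W.var(), target_var)
```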

```python
def xavier_init(fan_in, fan_out, constant=1):
    # Xavier/Glorot uniform initialization: U(low, high) with high = -low
    low = -constant * np.sqrt(6.0 / (fan_in + fan_out))
    high = constant * np.sqrt(6.0 / (fan_in + fan_out))
    return tf.random_uniform((fan_in, fan_out),  # uniform distribution
                             minval=low, maxval=high,
                             dtype=tf.float32)
```

```python
class AdditiveGaussianNoiseAutoencoder(object):

    def __init__(self,
                 n_input,                             # number of input variables
                 n_hidden,                            # number of hidden-layer nodes
                 transfer_function=tf.nn.softplus,    # activation function
                 optimizer=tf.train.AdamOptimizer(),  # optimizer
                 scale=0.1):                          # std of the additive Gaussian noise
        self.n_input = n_input
        self.n_hidden = n_hidden
        self.transfer = transfer_function
        self.scale = tf.placeholder(tf.float32)
        self.training_scale = scale
        network_weights = self._initialize_weights()  # initial weights w and biases b
        self.weights = network_weights

        # Define the network structure
        self.x = tf.placeholder(tf.float32, [None, self.n_input])
        # Add Gaussian noise to x (scaled by the fed-in placeholder, one draw
        # per element), then apply the activation function
        self.hidden = self.transfer(tf.add(tf.matmul(
            self.x + self.scale * tf.random_normal(tf.shape(self.x)),
            self.weights['w1']), self.weights['b1']))
        # Reconstruction: linear combination of the hidden-layer output
        self.reconstruction = tf.add(tf.matmul(self.hidden,
                                               self.weights['w2']), self.weights['b2'])

        # Loss: squared difference between the reconstruction and the clean x, summed
        self.cost = 0.5 * tf.reduce_sum(tf.pow(tf.subtract(
            self.reconstruction, self.x), 2.0))
        self.optimizer = optimizer.minimize(self.cost)

        init = tf.global_variables_initializer()
        self.sess = tf.Session()
        self.sess.run(init)

    def _initialize_weights(self):
        all_weights = {}
        all_weights['w1'] = tf.Variable(xavier_init(self.n_input,  # could use tf.get_variable instead
                                                    self.n_hidden))
        all_weights['b1'] = tf.Variable(tf.zeros([self.n_hidden], dtype=tf.float32))
        all_weights['w2'] = tf.Variable(tf.zeros([self.n_hidden, self.n_input], dtype=tf.float32))
        all_weights['b2'] = tf.Variable(tf.zeros([self.n_input], dtype=tf.float32))
        return all_weights

    def partial_fit(self, X):  # runs one training step on a batch and returns its cost
        cost, opt = self.sess.run((self.cost, self.optimizer),
                                  feed_dict={self.x: X, self.scale: self.training_scale})
        return cost

    def calc_total_cost(self, X):  # computes the cost only; does not trigger training
        return self.sess.run(self.cost,
                             feed_dict={self.x: X, self.scale: self.training_scale})

    def transform(self, X):  # hidden-layer output
        return self.sess.run(self.hidden,
                             feed_dict={self.x: X, self.scale: self.training_scale})

    def generate(self, hidden=None):  # decode a hidden vector (a random one if none is given)
        if hidden is None:
            hidden = np.random.normal(size=(1, self.n_hidden))
        return self.sess.run(self.reconstruction,
                             feed_dict={self.hidden: hidden})

    def reconstruct(self, X):
        return self.sess.run(self.reconstruction,
                             feed_dict={self.x: X, self.scale: self.training_scale})

    def getWeights(self):
        return self.sess.run(self.weights['w1'])

    def getBiases(self):
        return self.sess.run(self.weights['b1'])
```

```python
mnist = input_data.read_data_sets("../../mnist/.", one_hot=True)
```

```
Extracting ../../mnist/./train-images-idx3-ubyte.gz
Extracting ../../mnist/./train-labels-idx1-ubyte.gz
Extracting ../../mnist/./t10k-images-idx3-ubyte.gz
Extracting ../../mnist/./t10k-labels-idx1-ubyte.gz
```
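To make the graph built in `AdditiveGaussianNoiseAutoencoder.__init__` concrete, here is a NumPy sketch of a single forward pass and its cost. It assumes a synthetic N(0, 1) batch standing in for standardized MNIST rows; `agn_forward` is a hypothetical helper, not part of the notebook. Note that noise is added only on the way into the encoder, while the cost compares the reconstruction with the clean input.

```python
import numpy as np

def softplus(z):
    # numerically stable log(1 + exp(z))
    return np.logaddexp(0.0, z)

def agn_forward(x, w1, b1, w2, b2, scale, rng):
    """Hypothetical NumPy mirror of the graph in AdditiveGaussianNoiseAutoencoder."""
    noisy = x + scale * rng.normal(size=x.shape)    # additive Gaussian noise
    hidden = softplus(noisy @ w1 + b1)              # encoder + transfer function
    reconstruction = hidden @ w2 + b2               # linear decoder
    cost = 0.5 * np.sum((reconstruction - x) ** 2)  # compared with the CLEAN x
    return hidden, reconstruction, cost

rng = np.random.default_rng(0)
n_input, n_hidden, batch = 784, 200, 4
limit = np.sqrt(6.0 / (n_input + n_hidden))
w1 = rng.uniform(-limit, limit, size=(n_input, n_hidden))
b1 = np.zeros(n_hidden)
w2 = np.zeros((n_hidden, n_input))  # zero-initialized, as in _initialize_weights
b2 = np.zeros(n_input)
x = rng.normal(size=(batch, n_input))  # synthetic stand-in for a standardized batch
hidden, recon, cost = agn_forward(x, w1, b1, w2, b2, scale=0.01, rng=rng)
print(hidden.shape, recon.shape, cost)
```

Because `w2` and `b2` start at zero, the initial reconstruction is all zeros and the first cost is simply half the squared norm of the batch; training then moves `w2` away from zero first, since the gradient reaching `w1` is zero while `w2` is zero.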

```python
# Standardize the data (zero mean, unit standard deviation). We use the
# StandardScaler class from sklearn.preprocessing: fit it on the training set
# first, then apply the same transform to both the training and test sets.
def standard_scale(X_train, X_test):
    preprocessor = prep.StandardScaler().fit(X_train)
    X_train = preprocessor.transform(X_train)
    X_test = preprocessor.transform(X_test)
    return X_train, X_test
```

```python
def get_random_block_from_data(data, batch_size):
    # Take a random contiguous block of batch_size rows
    start_index = np.random.randint(0, len(data) - batch_size + 1)
    return data[start_index:(start_index + batch_size)]
```

```python
# Apply the standardization to the training and test sets
X_train, X_test = standard_scale(mnist.train.images, mnist.test.images)
```

```python
mnist.test.cls = np.array([label.argmax() for label in mnist.test.labels])  # one-hot -> class index

img_size = 28
img_size_flat = img_size * img_size
img_shape = (img_size, img_size)
num_classes = 10
```
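What `standard_scale` does can be sketched in plain NumPy, assuming synthetic data with one constant column (MNIST has border pixels that are always 0). StandardScaler uses the population standard deviation and leaves zero-variance features unscaled (scale factor 1); the sketch mirrors that. `fit_standardizer` and `apply_standardizer` are hypothetical names, not sklearn API.

```python
import numpy as np

def fit_standardizer(X_train):
    # Statistics come from the training set only
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)   # population std (ddof=0)
    std[std == 0.0] = 1.0       # constant columns are left unscaled
    return mean, std

def apply_standardizer(X, mean, std):
    return (X - mean) / std

rng = np.random.default_rng(0)
X_train = rng.uniform(size=(100, 6))
X_train[:, 0] = 0.0              # a constant "border pixel" column
X_test = rng.uniform(size=(20, 6))
mean, std = fit_standardizer(X_train)
Z_train = apply_standardizer(X_train, mean, std)
Z_test = apply_standardizer(X_test, mean, std)  # reuses the training statistics
```

Only the training set ends up with exactly zero mean and unit variance; the test set is transformed with the training statistics, which is what keeps test data from leaking into preprocessing.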

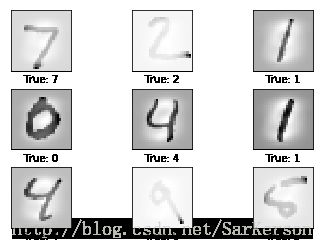

```python
def plot_images(images, cls_true, cls_pred=None):
    # Plot 9 images in a 3x3 grid, labelled with the true (and optionally predicted) class
    assert len(images) == len(cls_true) == 9
    fig, axes = plt.subplots(3, 3)
    fig.subplots_adjust(hspace=0.3, wspace=0.3)
    for i, ax in enumerate(axes.flat):
        ax.imshow(images[i].reshape(img_shape), cmap='binary')
        if cls_pred is None:
            xlabel = "True: {0}".format(cls_true[i])
        else:
            xlabel = "True: {0}, Pred: {1}".format(cls_true[i], cls_pred[i])
        ax.set_xlabel(xlabel)
        ax.set_xticks([])
        ax.set_yticks([])

# Plot some images from the standardized test set
images = X_test[0:9]
cls_true = mnist.test.cls[0:9]
plot_images(images=images, cls_true=cls_true)
```

```python
n_samples = int(mnist.train.num_examples)
training_epochs = 30  # maximum number of training epochs
batch_size = 64
display_step = 10
```

```python
# Create an additive Gaussian noise (AGN) autoencoder instance
autoEncoder = AdditiveGaussianNoiseAutoencoder(n_input=img_size_flat,
                                               n_hidden=200,
                                               transfer_function=tf.nn.softplus,
                                               optimizer=tf.train.AdamOptimizer(learning_rate=0.001),
                                               scale=0.01)
```

```python
# Training loop
for epoch in range(training_epochs):
    avg_cost = 0.
    total_batch = int(n_samples / batch_size)
    for i in range(total_batch):  # for each batch
        # Get a random contiguous block of batch_size samples. Blocks may overlap
        # across iterations, so this samples with replacement rather than
        # guaranteeing one full pass over the data per epoch.
        batch_xs = get_random_block_from_data(X_train, batch_size)
        cost = autoEncoder.partial_fit(batch_xs)    # train on the batch and return its cost
        avg_cost += cost / n_samples * batch_size   # running average of the per-batch cost
    if epoch % display_step == 0:
        print("Epoch:", '%04d' % (epoch + 1), "cost=",
              "{:.9f}".format(avg_cost))
```

```
('Epoch:', '0001', 'cost=', '8004.381811364')
('Epoch:', '0011', 'cost=', '4676.882887358')
('Epoch:', '0021', 'cost=', '4472.520707528')
```
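The loop above relies on TensorFlow to compute gradients and on Adam to apply them. To show what a single `partial_fit` call amounts to, here is a self-contained NumPy sketch with hand-written backpropagation. It is a sketch under stated assumptions: plain gradient descent replaces Adam, the data is small synthetic rank-3 data rather than MNIST, and `partial_fit_sgd`, `total_cost` and `get_random_block` are hypothetical helpers, not part of the notebook.

```python
import numpy as np

def softplus(z):
    return np.logaddexp(0.0, z)

def sigmoid(z):
    # derivative of softplus
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def get_random_block(data, batch_size, rng):
    # contiguous block; consecutive calls may overlap (sampling with replacement)
    start = rng.integers(0, len(data) - batch_size + 1)
    return data[start:start + batch_size]

def partial_fit_sgd(params, x, scale, lr, rng):
    """One denoising step: noisy input in, clean x as target.

    Assumption: plain gradient descent instead of Adam.
    """
    w1, b1, w2, b2 = params
    noisy = x + scale * rng.normal(size=x.shape)
    z1 = noisy @ w1 + b1
    h = softplus(z1)
    r = h @ w2 + b2
    dr = r - x                        # d(0.5 * sum((r - x)**2)) / dr
    cost = 0.5 * np.sum(dr ** 2)
    dw2 = h.T @ dr
    db2 = dr.sum(axis=0)
    dz1 = (dr @ w2.T) * sigmoid(z1)   # backprop through softplus
    dw1 = noisy.T @ dz1
    db1 = dz1.sum(axis=0)
    for p, g in zip(params, (dw1, db1, dw2, db2)):
        p -= lr * g                   # in-place update of each parameter array
    return cost

def total_cost(params, X):
    # noise-free cost on the whole dataset
    w1, b1, w2, b2 = params
    r = softplus(X @ w1 + b1) @ w2 + b2
    return 0.5 * np.sum((r - X) ** 2)

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 3)) @ rng.normal(size=(3, 20))  # synthetic rank-3 data
X = (X - X.mean(axis=0)) / X.std(axis=0)                   # standardized, as in the notebook
n_input, n_hidden, batch_size = 20, 5, 32
limit = np.sqrt(6.0 / (n_input + n_hidden))
params = [rng.uniform(-limit, limit, size=(n_input, n_hidden)),
          np.zeros(n_hidden), np.zeros((n_hidden, n_input)), np.zeros(n_input)]

initial = total_cost(params, X)
for step in range(3000):
    batch = get_random_block(X, batch_size, rng)
    partial_fit_sgd(params, batch, scale=0.01, lr=5e-5, rng=rng)
final = total_cost(params, X)
print(initial, final)
```

Because the cost is a sum over the batch rather than a mean, the usable learning rate shrinks as the batch grows; that is one reason the notebook's choice of an adaptive optimizer such as Adam is convenient.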

```python
print("Total cost: " + str(autoEncoder.calc_total_cost(X_test)))
```

```
Total cost: 655923.0
```
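The training log and the test cost are on different scales. Each `partial_fit` cost is the summed loss of one 64-image batch, and `avg_cost` accumulates `cost * batch_size / n_samples`, so it is roughly the mean cost of one batch; `calc_total_cost(X_test)` instead sums over all 10,000 test images. The arithmetic below puts both on a per-image basis using only the numbers printed above. It assumes `n_samples` is 55,000, the size `read_data_sets` gives the training split by default (5,000 images are held out for validation); note also that the last logged training value is from epoch 21, while the test cost is measured after epoch 30.

```python
# Figures copied from the training log and test evaluation above
batch_size = 64
n_samples = 55000                     # assumption: default mnist.train.num_examples
last_logged_avg_cost = 4472.520707528 # epoch 21
test_total_cost = 655923.0
n_test = 10000                        # MNIST test set size

total_batch = n_samples // batch_size
# avg_cost = sum(batch_cost) * batch_size / n_samples; undo the rescaling to get the mean batch cost
mean_batch_cost = last_logged_avg_cost * n_samples / (total_batch * batch_size)
per_image_train = mean_batch_cost / batch_size
per_image_test = test_total_cost / n_test
print(round(per_image_train, 1), round(per_image_test, 1))
```

Both land in the same range, roughly 65 to 70 per image, so the much larger test figure mainly reflects summing over more images.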

```python
# Reconstruct the first 9 test images and plot them, then plot the originals for comparison
images = X_test[0:9]
cls_true = mnist.test.cls[0:9]
re_images = autoEncoder.reconstruct(images)
plot_images(images=re_images, cls_true=cls_true)
print("---------------------------------------------------------")
plot_images(images=images, cls_true=cls_true)
```

```
---------------------------------------------------------
```