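Docker series 06: Setting up ELK with Docker, configuring and using the Filebeat client (Part 2)

This post continues from the previous one, Docker series 06: Setting up ELK with Docker (Part 1).

It explains in detail how to configure and use Filebeat to ship the contents of log files from a remote client to Logstash on the ELK server.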

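1. Set up Filebeat on the client

Filebeat ships the contents of the log files on the client to Logstash on the ELK server. Logstash passes the events on to Elasticsearch, which indexes them so that they can be searched from Kibana. If several clients need to write logs to Logstash on the ELK server, Filebeat must be installed on each of them.

I chose to set it up with Docker.

First, search for Filebeat Docker images: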

[root@localhost logs]# docker search filebeat

NAME DESCRIPTION STARS OFFICIAL AUTOMATED

prima/filebeat Filebeat docker image 46 [OK]

bargenson/filebeat A docker image using the Docker API to col... 8 [OK]

olinicola/filebeat Docker image for Elastic Filebeat 6

willfarrell/filebeat ElasticStack / Beats / Filebeat 4 [OK]

nguoianphu/docker-filebeat Docker Filebeat image 4 [OK]

zot24/filebeat Alpine Docker Image for Filebeat 2 [OK]

prameswar/filebeat filebeat installation 1 [OK]

ninech/openshift-filebeat Filebeat image that runs on Openshift. 1 [OK]

apsops/filebeat-kubernetes Filebeat container, alternative to fluentd... 1 [OK]

portbase/elk-filebeat elk-filebeat 1

ibmcom/filebeat Docker Image for IBM Cloud private-CE (Com... 0

watsco/filebeat filebeat 0 [OK]

pdffiller/filebeat-consul filebeat client configured with consul 0

bmcbm/docker-filebeat Ubuntu Xeinal based image running Elastico... 0 [OK]

devopsil/filebeat-kubernetes filebeat-kubernetes Docker image 0 [OK]

ackstorm/relk-filebeat 0

dsop/alpine-filebeat a filebeat image 0 [OK]

komljen/filebeat Filebeat docker image for Kubernetes. 0 [OK]

mewm/alpine-filebeat Filebeat 1.2.3 based on Alpine linux 0 [OK]

anchorfree/filebeat 0

sensnet/filebeat filebeat 0 [OK]

mspanakis/docker-filebeat Docker container for filebeat 0 [OK]

microservicestoday/filebeat 0

bdclark/nomad-filebeat Filebeat log shipper for a Nomad task group. 0 [OK]

djenriquez/filebeat Dockerized Filebeat 0 [OK]

I chose the prima/filebeat image.

2. Create the Filebeat configuration file, filebeat.yml, and place it in the /home/filebeat/ directory. Its contents are as follows:

###################### Filebeat Configuration Example #########################

# This file is an example configuration file highlighting only the most common

# options. The filebeat.reference.yml file from the same directory contains all the

# supported options with more comments. You can use it as a reference.

#

# You can find the full configuration reference here:

# https://www.elastic.co/guide/en/beats/filebeat/index.html

# For more available modules and options, please see the filebeat.reference.yml sample

# configuration file.

#=========================== Filebeat prospectors =============================

filebeat.prospectors:

# Each - is a prospector. Most options can be set at the prospector level, so

# you can use different prospectors for various configurations.

# Below are the prospector specific configurations.

- type: log

  # Change to true to enable this prospector configuration.

  enabled: true

  # Paths that should be crawled and fetched. Glob based paths.

  # Paths of the log files Filebeat should read. For several files, use a wildcard or list several entries under paths.

  paths:

    - /home/logs/*_all.log

#- c:\programdata\elasticsearch\logs\*

# Exclude lines. A list of regular expressions to match. It drops the lines that are

# matching any regular expression from the list.

#exclude_lines: ['^DBG']

# Include lines. A list of regular expressions to match. It exports the lines that are

# matching any regular expression from the list.

#include_lines: ['^ERR', '^WARN']

# Exclude files. A list of regular expressions to match. Filebeat drops the files that

# are matching any regular expression from the list. By default, no files are dropped.

#exclude_files: ['.gz$']

# Optional additional fields. These fields can be freely picked

# to add additional information to the crawled log files for filtering

#fields:

# level: debug

# review: 1

### Multiline options

# Multiline can be used for log messages spanning multiple lines. This is common

# for Java Stack Traces or C-Line Continuation

# The regexp Pattern that has to be matched. The example pattern matches all lines starting with [

#multiline.pattern: ^\[

# Defines if the pattern set under pattern should be negated or not. Default is false.

#multiline.negate: false

# Match can be set to "after" or "before". It is used to define if lines should be appended to a pattern

# that was (not) matched before or after or as long as a pattern is not matched based on negate.

# Note: After is the equivalent to previous and before is the equivalent to next in Logstash

#multiline.match: after

#============================= Filebeat modules ===============================

# Modules are not used here (I have not yet worked out how to use them), so this section is commented out as well

#filebeat.config.modules:

# Glob pattern for configuration loading

# path: ${path.config}/modules.d/*.yml

# Set to true to enable config reloading

# reload.enabled: false

# Period on which files under path should be checked for changes

#reload.period: 10s

#==================== Elasticsearch template setting ==========================

#setup.template.settings:

# index.number_of_shards: 3

#index.codec: best_compression

#_source.enabled: false

#================================ General =====================================

# The name of the shipper that publishes the network data. It can be used to group

# all the transactions sent by a single shipper in the web interface.

#name:

# The tags of the shipper are included in their own field with each

# transaction published.

#tags: ["service-X", "web-tier"]

# Optional fields that you can specify to add additional information to the

# output.

#fields:

# env: staging

#============================== Dashboards =====================================

# These settings control loading the sample dashboards to the Kibana index. Loading

# the dashboards is disabled by default and can be enabled either by setting the

# options here, or by using the `-setup` CLI flag or the `setup` command.

#setup.dashboards.enabled: false

# The URL from where to download the dashboards archive. By default this URL

# has a value which is computed based on the Beat name and version. For released

# versions, this URL points to the dashboard archive on the artifacts.elastic.co

# website.

#setup.dashboards.url:

#============================== Kibana =====================================

# Starting with Beats version 6.0.0, the dashboards are loaded via the Kibana API.

# This requires a Kibana endpoint configuration.

setup.kibana:

# Kibana Host

# Scheme and port can be left out and will be set to the default (http and 5601)

# In case you specify an additional path, the scheme is required: http://localhost:5601/path

# IPv6 addresses should always be defined as: https://[2001:db8::1]:5601

#host: "localhost:5601"

#============================= Elastic Cloud ==================================

# These settings simplify using filebeat with the Elastic Cloud (https://cloud.elastic.co/).

# The cloud.id setting overwrites the `output.elasticsearch.hosts` and

# `setup.kibana.host` options.

# You can find the `cloud.id` in the Elastic Cloud web UI.

#cloud.id:

# The cloud.auth setting overwrites the `output.elasticsearch.username` and

# `output.elasticsearch.password` settings. The format is `<user>:<pass>`.

#cloud.auth:

#================================ Outputs =====================================

# Configure what output to use when sending the data collected by the beat.

#-------------------------- Elasticsearch output ------------------------------

# I do not send output directly to Elasticsearch, so this section is commented out

#output.elasticsearch:

# Array of hosts to connect to.

# hosts: ["192.168.1.33:9200"]

# Optional protocol and basic auth credentials.

#protocol: "https"

#username: "elastic"

#password: "changeme"

#----------------------------- Logstash output --------------------------------

output.logstash:

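  # The Logstash hosts: the address and port that Logstash exposes on the ELK server. Setting up SSL seemed like too much trouble for an internal network, so the SSL options below are commented out as well.

  hosts: ["192.168.1.33:5044"]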

logging.metrics.enabled: false

# Optional SSL. By default is off.

# List of root certificates for HTTPS server verifications

#ssl.certificate_authorities: ["/etc/pki/root/ca.pem"]

# Certificate for SSL client authentication

#ssl.certificate: "/etc/pki/client/cert.pem"

# Client Certificate Key

#ssl.key: "/etc/pki/client/cert.key"

#================================ Logging =====================================

# Sets log level. The default log level is info.

# Available log levels are: critical, error, warning, info, debug

#logging.level: debug

# At debug level, you can selectively enable logging only for some components.

# To enable all selectors use ["*"]. Examples of other selectors are "beat",

# "publish", "service".

#logging.selectors: ["*"]
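
Most of the listing above is commented out, and YAML depends on indentation, so it can help to see only the active settings laid out with their nesting. The following is a minimal sketch, not the author's exact file: write_filebeat_config is a hypothetical helper name, the two arguments are assumptions for illustration, and the empty setup.kibana section is left out because it carries no values.

```shell
#!/bin/sh
# Sketch: write a trimmed filebeat.yml containing only the settings that
# are uncommented and carry a value in the listing above.
# write_filebeat_config is a hypothetical helper, not part of Filebeat.
write_filebeat_config() {
  conf_dir="$1"        # directory for filebeat.yml, /home/filebeat in this post
  logstash_host="$2"   # Logstash address and port, 192.168.1.33:5044 in this post

  mkdir -p "$conf_dir" || return 1

  # The heredoc body starts at column 0 so the YAML indentation is exact.
  cat > "$conf_dir/filebeat.yml" <<EOF
filebeat.prospectors:
- type: log
  enabled: true
  paths:
    - /home/logs/*_all.log

output.logstash:
  hosts: ["$logstash_host"]

logging.metrics.enabled: false
EOF
}
```

With the values used in this post, the call would be `write_filebeat_config /home/filebeat 192.168.1.33:5044`.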

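3. Because the modules mentioned above are not used, create an empty modules directory under the home folder so that Filebeat does not report an error at startup. The docker run command in step 6 mounts it from /home/filebeat/module, so create the directory at that path.

At this point, the preparation for installing the Filebeat client is complete.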
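
The host directories that the container mounts can be created in one step. This is a small sketch under assumptions: prepare_filebeat_dirs is a hypothetical helper name, and the directory name module is taken from the docker run command in step 6 rather than from the wording of step 3.

```shell
#!/bin/sh
# Sketch: create the host directories that the Filebeat container mounts.
# prepare_filebeat_dirs is a hypothetical helper name.
prepare_filebeat_dirs() {
  conf_dir="$1"   # holds filebeat.yml, /home/filebeat in this post
  logs_dir="$2"   # holds the application logs, /home/logs in this post

  # "module" is the empty directory mounted at /module in the container.
  mkdir -p "$conf_dir/module" "$logs_dir"
}
```

With the paths used in this post, the call would be `prepare_filebeat_dirs /home/filebeat /home/logs`.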

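4. Switch to the ELK server and configure the port on which Logstash receives data:

1) Run the following command to enter the ELK container (elk is the name of my ELK Docker container):

docker exec -it elk /bin/bash

2) Edit the Logstash input configuration file (this can also be handled by mapping the file in from the host):

root@b15eb2b7fdbb: cd /etc/logstash/conf.d

root@b15eb2b7fdbb: vi 02-beats-input.conf

Change the file content to the following. client_inactivity_timeout is the number of seconds an idle client connection is kept open before Logstash closes it; the default is 60 seconds.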

input {

  beats {

    port => 5044

    client_inactivity_timeout => 36000

  }

}

5. Restart ELK

docker restart elk

6. Switch to the Filebeat client machine and run the following command to start the Filebeat Docker container:

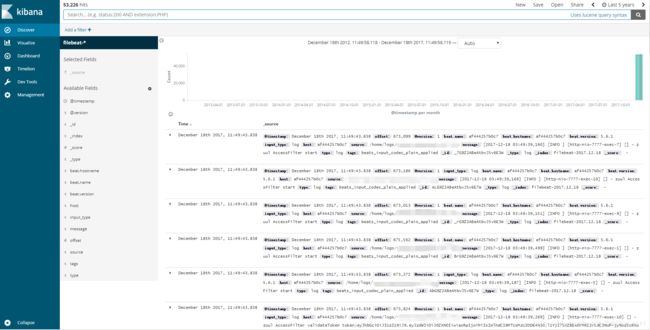

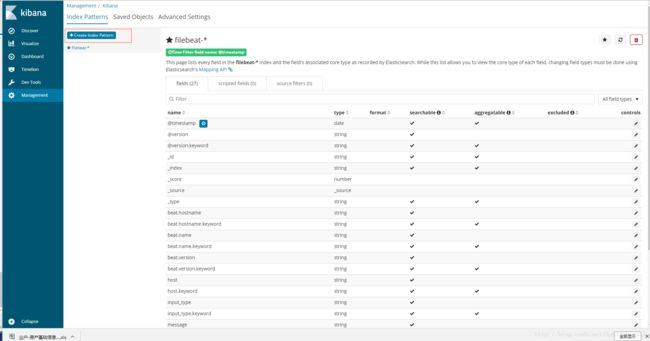

docker run --name filebeat -v /home/filebeat/filebeat.yml:/filebeat.yml -v /home/logs/:/home/logs/ -v /home/filebeat/module:/module --net=host prima/filebeat

7. Open Kibana and create the filebeat-* index pattern. I had already created it, so I did not create it again.
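
If no filebeat-* index shows up in Kibana, two quick checks from the client machine can narrow the problem down. The sketch below makes several assumptions: port_open and list_filebeat_indices are hypothetical helper names, bash, timeout and curl are installed on the client, and the Elasticsearch HTTP port 9200 on the ELK server is reachable from the client (the address 192.168.1.33:9200 appears only in the commented-out Elasticsearch output above).

```shell
#!/bin/sh
# Sketch: basic connectivity checks between the Filebeat client and ELK.
# Both function names are hypothetical helpers.

# Return 0 if a TCP connection to host:port succeeds within 3 seconds.
# Uses bash's /dev/tcp redirection, so bash must be installed.
port_open() {
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# List the indices matching filebeat-* through the Elasticsearch _cat API.
# The second argument is the HTTP port and defaults to 9200.
list_filebeat_indices() {
  curl -s "http://$1:${2:-9200}/_cat/indices/filebeat-*?v"
}
```

For example, `port_open 192.168.1.33 5044 && echo "beats port reachable"` checks the Logstash input configured in step 4, `list_filebeat_indices 192.168.1.33` shows whether any events have been indexed, and `docker logs filebeat` prints the output of the Filebeat container itself.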

This completes the walkthrough of setting up ELK and setting up and using the Filebeat client.