[Neural Networks] AutoEncoder

1. Basic Idea

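The defining feature of an autoencoder is that its input and output layers have the same number of nodes. Its purpose is not classification or regression. Instead, it converts the raw data into a special feature representation. Training still optimizes the parameters with backpropagation, and the objective is to minimize the error between the output and the input.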

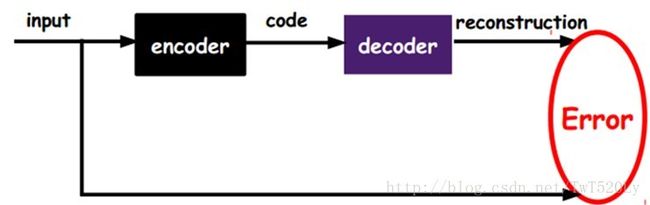

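The basic idea is shown in the figure. An ordinary neural network learns a mapping from the input to class labels (or discrete values). An AutoEncoder works differently. It passes the input through an encoder to obtain a code, then decodes that code with a decoder to produce a reconstruction with the same dimensionality as the original data. Backpropagation then minimizes the error between the input and the reconstruction.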
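The following minimal NumPy sketch makes the encode, decode and backpropagate loop concrete. It is not the article's TFLearn model. It assumes a tiny linear autoencoder with 8 inputs and a 3-unit code, trained on toy data that lies in a 3-dimensional subspace. Training is hand-written gradient descent on the mean squared error between input and reconstruction. The sizes, learning rate and step count are illustrative choices only.

```python
import numpy as np

# Minimal sketch (not the article's model): a tiny *linear* autoencoder
# 8 -> 3 -> 8, trained by backpropagation on the mean squared reconstruction error.
# Sizes, learning rate, step count and toy data are illustrative assumptions.
rng = np.random.default_rng(0)

# Toy data: 200 samples of dimension 8 that lie in a 3-D subspace,
# so a 3-unit code is enough to reconstruct them.
X = rng.normal(size=(200, 3)) @ rng.normal(size=(3, 8))

W_enc = rng.normal(scale=0.1, size=(8, 3))   # encoder weights: input -> code
W_dec = rng.normal(scale=0.1, size=(3, 8))   # decoder weights: code -> reconstruction


def forward(X):
    code = X @ W_enc          # encoder
    recon = code @ W_dec      # decoder: same dimensionality as the input
    return code, recon


def mse(a, b):
    return float(np.mean((a - b) ** 2))


initial_loss = mse(forward(X)[1], X)
lr = 0.05
for step in range(3000):
    code, recon = forward(X)
    # Backpropagate d(MSE)/d(recon) through the decoder, then the encoder
    grad_recon = 2 * (recon - X) / X.size
    grad_W_dec = code.T @ grad_recon
    grad_W_enc = X.T @ (grad_recon @ W_dec.T)
    W_dec -= lr * grad_W_dec
    W_enc -= lr * grad_W_enc

final_loss = mse(forward(X)[1], X)
print(f"reconstruction MSE: {initial_loss:.4f} -> {final_loss:.6f}")
```

The training target is the input itself, so no labels are involved. After training, `forward(X)[0]` gives the 3-dimensional code that could be passed on to another model.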

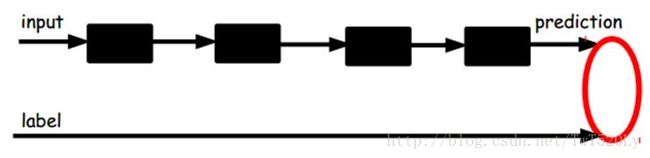

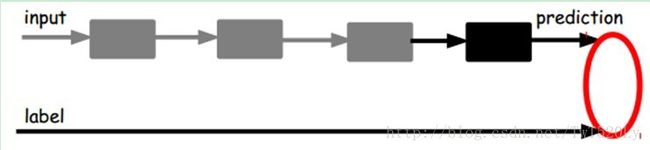

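So far we have only obtained a new feature vector. To perform prediction or classification, other network structures must be attached to it. Training makes this feature represent the original data as faithfully as possible. The final model is therefore AutoEncoder + classifier. The figures show two supervised training schemes: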

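In the first figure, the parameters of the whole network (encoder and classifier) are adjusted while training the prediction against the label. In the second figure, only the classifier is adjusted during training, and the encoder's internal parameters are left unchanged. The first scheme is end-to-end training and generally gives better results.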
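The difference between the two schemes is simply which parameters receive gradient updates. The NumPy sketch below assumes a hypothetical setup: a one-layer tanh "encoder" `W_enc` (imagined as pre-trained) feeding a softmax classifier `W_clf`, with made-up data and shapes. It performs one gradient step either end-to-end or with the encoder frozen. In TFLearn itself, layers such as `fully_connected` accept a `trainable` argument that can be used for freezing.

```python
import numpy as np

# Hypothetical setup for illustration: tanh encoder W_enc -> softmax classifier W_clf.
rng = np.random.default_rng(1)
X = rng.normal(size=(32, 8))
y = rng.integers(0, 3, size=32)
Y = np.eye(3)[y]  # one-hot labels


def train_step(W_enc, W_clf, lr=0.1, fine_tune_encoder=True):
    """One softmax cross-entropy gradient step; returns (new_W_enc, new_W_clf)."""
    code = np.tanh(X @ W_enc)
    logits = code @ W_clf
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)
    grad_logits = (probs - Y) / len(X)
    new_W_clf = W_clf - lr * (code.T @ grad_logits)
    if fine_tune_encoder:
        # End-to-end: the classification error is also backpropagated into the encoder
        grad_code = grad_logits @ W_clf.T
        grad_W_enc = X.T @ (grad_code * (1 - code ** 2))  # tanh derivative
        new_W_enc = W_enc - lr * grad_W_enc
    else:
        # Frozen encoder: only the classifier is updated
        new_W_enc = W_enc
    return new_W_enc, new_W_clf


W_enc0 = rng.normal(scale=0.5, size=(8, 4))
W_clf0 = rng.normal(scale=0.5, size=(4, 3))

enc_frozen, clf_frozen = train_step(W_enc0, W_clf0, fine_tune_encoder=False)
enc_e2e, clf_e2e = train_step(W_enc0, W_clf0, fine_tune_encoder=True)
print(np.array_equal(enc_frozen, W_enc0))  # True: encoder untouched
print(np.array_equal(enc_e2e, W_enc0))     # False: encoder updated too
```

In both cases the classifier receives the same update in this single step. Only the end-to-end variant also moves the encoder weights.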

2. Implementation

(1) Import packages

```python
import tflearn.datasets.mnist as mnist
import tflearn
import numpy as np
import matplotlib.pyplot as plt
from tflearn.layers.core import fully_connected, input_data
```

(2) Build the Encoder and Decoder
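The network built in this step is a stack of four fully connected layers with widths 784 -> 256 -> 64 -> 256 -> 784. As a quick sanity check on its size, the arithmetic below counts its parameters. It assumes each layer has a weight matrix plus a bias vector, which is TFLearn's `fully_connected` default.

```python
# Worked arithmetic: parameters of the 784-256-64-256-784 autoencoder,
# assuming weights + biases in every fully connected layer.
layer_sizes = [784, 256, 64, 256, 784]


def dense_params(n_in, n_out):
    return n_in * n_out + n_out  # weight matrix + bias vector


per_layer = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
total = sum(per_layer)
print(per_layer)  # [200960, 16448, 16640, 201488]
print(total)      # 435536
print(784 / 64)   # the code is 12.25x smaller than the input
```

Almost all of the parameters sit in the two outer layers that touch the 784-pixel input and output.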

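```python
# Load MNIST: 784-pixel images scaled to [0, 1], labels one-hot encoded
X, Y, testX, testY = mnist.load_data(one_hot=True)

# Encoder: 784 -> 256 -> 64
encoder = input_data(shape=[None, 784])
encoder = fully_connected(encoder, 256)
encoder = fully_connected(encoder, 64)

# Decoder: 64 -> 256 -> 784 (the last layer must take the previous decoder layer as input)
decoder = fully_connected(encoder, 256)
decoder = fully_connected(decoder, 784)

# Minimize the mean squared error between the reconstruction and the input
net = tflearn.regression(decoder, optimizer='adam', loss='mean_square',
                         learning_rate=0.001, metric=None)
model = tflearn.DNN(net)

# The training targets are the inputs themselves
model.fit(X, X, n_epoch=3, validation_set=(testX, testX),
          show_metric=True, batch_size=256)
```

(3) Test the Encoder output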

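```python
print('\nTest encoding of X[0]: ')
# Reuse the trained session so the encoder shares the autoencoder's weights
encoding_model = tflearn.DNN(encoder, session=model.session)
print(encoding_model.predict([X[0]]))  # a 64-dimensional code
```

Passing `session=model.session` makes the encoder model reuse the session in which `model.fit()` trained the weights. The two models therefore share the same trained weights.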
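The following plain-NumPy analogue illustrates what sharing weights means here. It is not TFLearn code: biases and training are omitted, and the names `W1`..`W4`, `encode` and `autoencode` are hypothetical. Both "models" read the same parameter arrays, so a change made to those arrays is immediately visible to the encoder-only model. The layers are linear, matching TFLearn's default `fully_connected` activation used in the article.

```python
import numpy as np

# Plain-NumPy analogue (hypothetical names, no biases): two "models" over one set of weights.
rng = np.random.default_rng(2)
params = {
    "W1": rng.normal(scale=0.05, size=(784, 256)),  # encoder 784 -> 256
    "W2": rng.normal(scale=0.05, size=(256, 64)),   # encoder 256 -> 64
    "W3": rng.normal(scale=0.05, size=(64, 256)),   # decoder 64 -> 256
    "W4": rng.normal(scale=0.05, size=(256, 784)),  # decoder 256 -> 784
}


def encode(x):
    """Encoder-only model: returns the 64-d code."""
    return x @ params["W1"] @ params["W2"]


def autoencode(x):
    """Full autoencoder: encoder followed by decoder, 784 outputs."""
    return encode(x) @ params["W3"] @ params["W4"]


x = rng.random((1, 784))   # stand-in for one MNIST image
code_before = encode(x)
params["W1"] *= 0.5        # stand-in for a training update, applied in place
code_after = encode(x)     # the encoder sees the updated weights immediately
print(code_before.shape, autoencode(x).shape)  # (1, 64) (1, 784)
```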

(4) Visualize the results
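The plotting code in this step turns each flat 784-pixel grayscale vector into a 28x28x3 RGB array by repeating every pixel value three times. The sketch below checks that a vectorized NumPy version gives the same array. It uses a random stand-in vector, not real MNIST data.

```python
import numpy as np

rng = np.random.default_rng(3)
pixels = rng.random(784)  # stand-in for one row of testX

# Approach used in the article: list comprehension, then reshape
temp = [[ii, ii, ii] for ii in list(pixels)]
rgb_loop = np.reshape(temp, (28, 28, 3))

# Vectorized: reshape to 28x28x1, then repeat along the channel axis
rgb_vec = np.repeat(pixels.reshape(28, 28, 1), 3, axis=2)

print(rgb_loop.shape, np.array_equal(rgb_loop, rgb_vec))  # (28, 28, 3) True
```

A further alternative is to skip the RGB conversion entirely. Passing `np.reshape(pixels, (28, 28))` to `imshow` with `cmap='gray'` displays the same digit.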

```python
print('\nVisualizing results after encoding and decoding')

# Shuffle the test set and reconstruct it with the trained autoencoder
testX = tflearn.data_utils.shuffle(testX)[0]
encoder_decoder = model.predict(testX)

# Top row: original digits; bottom row: reconstructions
f, a = plt.subplots(2, 10, figsize=(10, 2))
for i in range(10):
    # Repeat each grayscale value three times to build a 28x28 RGB image
    temp = [[ii, ii, ii] for ii in list(testX[i])]
    a[0][i].imshow(np.reshape(temp, (28, 28, 3)))
    temp = [[ii, ii, ii] for ii in list(encoder_decoder[i])]
    a[1][i].imshow(np.reshape(temp, (28, 28, 3)))
f.show()
plt.draw()
plt.waitforbuttonpress()
```

(5) Connect a classifier

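Here an AlexNet-style network is used as the classifier. It is trained on the autoencoder's precomputed outputs, so the autoencoder itself is not updated. The results are mediocre; the point is to understand the workflow.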

```python
from tflearn.layers.estimator import regression
from tflearn.layers.conv import conv_2d, max_pool_2d
from tflearn.layers.normalization import local_response_normalization
from tflearn.layers.core import dropout
import tensorflow as tf

# Run the trained autoencoder on the training set *before* resetting the graph.
# model.predict returns the 784-d reconstruction (not the 64-d code), which is
# why it can be reshaped into 28x28 images; np.array converts the returned list.
codeX = np.array(model.predict(X))
codeX = codeX.reshape([-1, 28, 28, 1])

# Start a fresh graph for the classifier
tf.reset_default_graph()

network = input_data(shape=[None, 28, 28, 1])
network = conv_2d(network, nb_filter=96, filter_size=8, strides=2, activation='relu')
network = max_pool_2d(network, kernel_size=3, strides=1)
network = local_response_normalization(network)
network = conv_2d(network, nb_filter=256, filter_size=5, strides=1, activation='relu')
network = max_pool_2d(network, kernel_size=3, strides=1)
network = local_response_normalization(network)
network = conv_2d(network, nb_filter=384, filter_size=3, activation='relu')
network = conv_2d(network, nb_filter=384, filter_size=3, activation='relu')
network = conv_2d(network, nb_filter=256, filter_size=3, activation='relu')
network = max_pool_2d(network, kernel_size=3, strides=1)
network = fully_connected(network, 2048, activation='tanh')
network = dropout(network, 0.5)
network = fully_connected(network, 2048, activation='tanh')
network = dropout(network, 0.5)
network = fully_connected(network, 10, activation='softmax')
network = regression(network, optimizer='momentum', loss='categorical_crossentropy',
                     learning_rate=0.001)

model_alexnet = tflearn.DNN(network)
model_alexnet.fit(codeX, Y, n_epoch=100, validation_set=0.2, show_metric=True)
```
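The AlexNet-style stack in this step is large relative to a 28x28 input. The arithmetic below traces the spatial size through its layers. It assumes 'same' padding, which is the default for TFLearn's `conv_2d` and `max_pool_2d`, so each layer outputs `ceil(input / stride)`. Because every pooling layer uses `strides=1`, the maps stay at 14x14, and the first dense layer ends up with roughly 100 million parameters.

```python
import math

# Spatial size through the AlexNet-style stack on a 28x28 input,
# assuming 'same' padding: out = ceil(in / stride).
def same_out(size, stride):
    return math.ceil(size / stride)


layers = [("conv 8x8/2", 2), ("pool 3x3/1", 1), ("conv 5x5/1", 1), ("pool 3x3/1", 1),
          ("conv 3x3", 1), ("conv 3x3", 1), ("conv 3x3", 1), ("pool 3x3/1", 1)]
size = 28
for name, stride in layers:
    size = same_out(size, stride)
    print(f"{name:>11}: {size}x{size}")

flat = size * size * 256           # the last conv/pool block has 256 channels
fc1_params = flat * 2048 + 2048    # first fully connected layer: weights + biases
print(flat, fc1_params)            # 50176 102762496
```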

Reference: http://blog.csdn.net/u010555688/article/details/24438311