Playing with Jetson Nano (7): Face Recognition (Part 1)

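The previous posts in this series were all about setting up the environment. I felt the series would be missing something if I never actually built anything with the Nano and wrote it up. Today I finally have some free time, so here is what I learned from a small face recognition project. I wrote the program in a single day, and I haven't written code in a long while, so there are bound to be flaws. Please bear with me!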

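The project has two parts. The first part runs on the Jetson Nano: building the model, training it, and exporting the model parameters. The second part runs on my laptop, because the laptop has a camera and designing a GUI on Windows is much easier.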

I. Preparation

1. Preparing the data

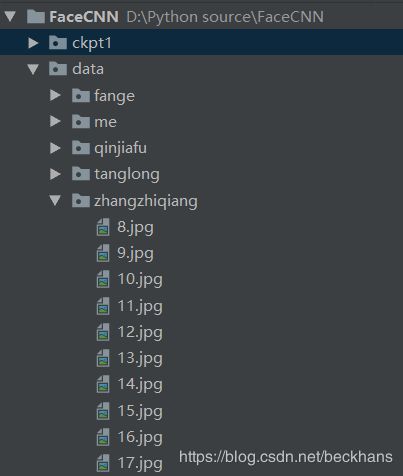

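The data for a face recognition system is, of course, faces. Instead of one of the face datasets available online, I used my colleagues' faces; since I lead the team, they had little choice but to bow to my tyranny... A word about how the images are stored, because it makes labeling easier later: give each colleague a folder of their own, and put all of these folders inside one parent folder. My directory structure is shown in the figure: the data folder holds all the photos, 100 per person.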
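Because the labels are later derived from this folder layout, it can be worth checking the layout before training. The sketch below is a hypothetical helper (`count_images_per_person` is my own name, not part of the project); it assumes the photos are `.jpg` files placed directly inside each person's folder, matching the `*.jpg` pattern the loading code uses.

```python
from pathlib import Path


def count_images_per_person(data_dir):
    """Return {folder_name: number_of_jpg_files} for each person folder.

    Assumes the layout data/<person>/<photo>.jpg, one folder per person.
    Files lying directly in data_dir are ignored.
    """
    root = Path(data_dir)
    counts = {}
    for person_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        # Only top-level .jpg files are counted (case-sensitive on Linux),
        # mirroring glob.glob(folder + '/*.jpg') in the training code.
        counts[person_dir.name] = len(list(person_dir.glob('*.jpg')))
    return counts
```

For example, `count_images_per_person('/home/beckhans/Projects/FaceCNN/data')` should report roughly 100 for each of the five people; a folder with far fewer images is easy to spot before it skews training.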

2. System environment (see the earlier posts for how to set it up)

On the Nano: Keras 2.2.4, OpenCV 3.3.1, Python 3.6.7

On the laptop: Keras 2.2.4, OpenCV 4.1, Python 3.6.7, PyQt 5.0

II. Writing the Code

1. Building the sample set

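```python
import numpy as np
import os
import glob
import cv2
from keras.utils.np_utils import to_categorical
from keras.layers.convolutional import Conv2D, MaxPooling2D
from keras.models import Sequential
from keras.initializers import truncated_normal
from keras.layers import Flatten, Dense, Dropout
from keras import regularizers
from keras.optimizers import Adam

# Directory holding the training images (one sub-folder per person)
p_path = '/home/beckhans/Projects/FaceCNN/data'

# Every image is resized to 128*128 with 3 color channels
w = 128
h = 128
c = 3


def read_img(path):
    # Collect all sub-folders of the given folder (one per person).
    # os.listdir returns entries in arbitrary order, so sort them to keep
    # the folder -> label mapping reproducible between runs and machines.
    cate = [path + '/' + x for x in sorted(os.listdir(path))
            if os.path.isdir(path + '/' + x)]
    # Image data
    imgs = []
    # Labels
    labels = []
    for idx, folder in enumerate(cate):
        for im in glob.glob(folder + '/*.jpg'):
            print('reading the images:%s' % im)
            # Read the photo (OpenCV returns None for unreadable files)
            img = cv2.imread(im)
            if img is None:
                continue
            # Resize the photo to 128*128*3
            img = cv2.resize(img, (w, h))
            imgs.append(img)
            # The label is the index of the person's folder
            labels.append(idx)
    return np.asarray(imgs, np.float32), np.asarray(labels, np.int32)
```

The key parts of the code above are commented, so it should be easy to follow. For the labels I simply use the index of each person's folder. In my program's debug view, 0 to 4 are the indices of the five people; in my run I was number 4.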
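Because the label is only a folder index, the laptop side will later need exactly the same index-to-name mapping to turn a predicted class back into a person. A minimal sketch, assuming the folders are enumerated in sorted order during training (`os.listdir` itself guarantees no particular order). The helper names and the idea of storing the map as a JSON file are my own assumptions, not part of the original project:

```python
import json
import os


def build_label_map(path):
    """Map label index -> folder (person) name, enumerating folders in sorted order."""
    folders = [x for x in sorted(os.listdir(path))
               if os.path.isdir(os.path.join(path, x))]
    return {idx: name for idx, name in enumerate(folders)}


def save_label_map(label_map, out_file):
    # JSON object keys must be strings, so the indices are stored as strings;
    # ensure_ascii=False keeps non-ASCII (e.g. Chinese) names readable.
    with open(out_file, 'w', encoding='utf-8') as f:
        json.dump({str(k): v for k, v in label_map.items()}, f,
                  ensure_ascii=False, indent=2)


def load_label_map(in_file):
    # Convert the string keys back to integer class indices
    with open(in_file, encoding='utf-8') as f:
        return {int(k): v for k, v in json.load(f).items()}
```

Saving this file next to the exported model means the laptop never has to re-list the training folders, which may not even exist there.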

2. Building the model

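An input image goes through four convolutional layers, each followed by max pooling. The result is then flattened and passed through three fully connected layers that produce the output. The code is not difficult and should be easy to follow.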
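The size comments in the model (128→64→32→16→8) follow from two facts: `padding="same"` with stride 1 keeps the spatial size, and each 2×2 max pool with stride 2 halves it. The small pure-Python sketch below redoes that bookkeeping, plus the parameter counts, from the layer settings in `cnnlayer()`. It is a hand calculation, not a call into Keras, so `model.summary()` remains the authoritative check.

```python
# Layer settings copied from cnnlayer(): (kernel_size, filters) per conv block
CONV_BLOCKS = [(5, 32), (5, 64), (3, 128), (3, 128)]
DENSE_UNITS = [1024, 512, 5]


def shapes_and_params(size=128, channels=3):
    """Return (sizes after each pool, flatten length, total trainable params)."""
    sizes = []
    params = 0
    for k, filters in CONV_BLOCKS:
        # Conv2D with padding="same", stride 1: spatial size unchanged.
        params += k * k * channels * filters + filters  # weights + biases
        channels = filters
        # MaxPooling2D 2x2 with stride 2: spatial size halved.
        size //= 2
        sizes.append(size)
    flat = size * size * channels  # Flatten; Dropout adds no parameters
    fan_in = flat
    for units in DENSE_UNITS:
        params += fan_in * units + units
        fan_in = units
    return sizes, flat, params


sizes, flat, params = shapes_and_params()
print(sizes, flat, params)  # prints [64, 32, 16, 8] 8192 9192133
```

The arithmetic shows where the model's weight lives: of the roughly 9.2 million parameters, about 8.4 million belong to the first Dense layer, because it connects all 8×8×128 = 8192 flattened features to 1024 units.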

```python
def cnnlayer():
    model = Sequential()

    # First convolutional layer (128 -> 64 after pooling)
    conv1 = Conv2D(input_shape=[128, 128, 3],
                   filters=32,
                   kernel_size=(5, 5),
                   strides=(1, 1),
                   padding="same",
                   activation="relu",
                   kernel_initializer=truncated_normal(mean=0.0, stddev=0.01, seed=None))
    pool1 = MaxPooling2D(pool_size=(2, 2), strides=2)
    model.add(conv1)
    model.add(pool1)

    # Second convolutional layer (64 -> 32)
    conv2 = Conv2D(filters=64,
                   kernel_size=(5, 5),
                   padding="same",
                   activation="relu",
                   kernel_initializer=truncated_normal(mean=0.0, stddev=0.01, seed=None))
    pool2 = MaxPooling2D(pool_size=[2, 2], strides=2)
    model.add(conv2)
    model.add(pool2)

    # Third convolutional layer (32 -> 16)
    conv3 = Conv2D(filters=128,
                   kernel_size=[3, 3],
                   padding="same",
                   activation="relu",
                   kernel_initializer=truncated_normal(mean=0.0, stddev=0.01, seed=None))
    pool3 = MaxPooling2D(pool_size=[2, 2], strides=2)
    model.add(conv3)
    model.add(pool3)

    # Fourth convolutional layer (16 -> 8)
    conv4 = Conv2D(filters=128,
                   kernel_size=[3, 3],
                   padding="same",
                   activation="relu",
                   kernel_initializer=truncated_normal(mean=0.0, stddev=0.01, seed=None))
    pool4 = MaxPooling2D(pool_size=[2, 2], strides=2)
    model.add(conv4)
    model.add(pool4)

    # Flatten the 8x8x128 feature maps into a single vector
    model.add(Flatten())
    model.add(Dropout(0.5))

    # Fully connected layers. Each Dense layer is added as soon as it is
    # created so that every Dropout sits between two Dense layers; adding
    # all three Dense layers at the end would instead stack three Dropouts
    # in a row in front of dense1.
    dense1 = Dense(units=1024,
                   activation="relu",
                   kernel_initializer=truncated_normal(mean=0.0, stddev=0.01, seed=None),
                   kernel_regularizer=regularizers.l2(0.003))
    model.add(dense1)
    model.add(Dropout(0.5))

    dense2 = Dense(units=512,
                   activation="relu",
                   kernel_initializer=truncated_normal(mean=0.0, stddev=0.01, seed=None),
                   kernel_regularizer=regularizers.l2(0.003))
    model.add(dense2)
    model.add(Dropout(0.5))

    # Output layer: one softmax unit per person (5 people)
    dense3 = Dense(units=5,
                   activation='softmax',
                   kernel_initializer=truncated_normal(mean=0.0, stddev=0.01, seed=None),
                   kernel_regularizer=regularizers.l2(0.003))
    model.add(dense3)

    return model
```

3. Training the model

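```python
if __name__ == '__main__':
    data, label = read_img(p_path)
    num_example = data.shape[0]
    arr = np.arange(num_example)
    # Shuffle; the same permutation is applied to images and labels
    np.random.shuffle(arr)
    data = data[arr]
    label = label[arr]

    # Split all samples into a training set (80%) and a validation set (20%)
    ratio = 0.8
    # Built-in int(); np.int is deprecated and removed in newer NumPy
    s = int(num_example * ratio)
    x_train = data[:s]
    y_train = label[:s]
    # One-hot encode the 5 class indices
    y_train = to_categorical(y_train, num_classes=5)
    x_val = data[s:]
    y_val = label[s:]
    y_val = to_categorical(y_val, num_classes=5)

    # Build the model
    model = cnnlayer()
    # Adam optimizer with a learning rate of 0.001
    adam = Adam(lr=0.001)
    # Use categorical cross-entropy as the loss function
    model.compile(loss='categorical_crossentropy', optimizer=adam, metrics=['accuracy'])
    # Train the model
    model.fit(x_train, y_train, batch_size=32, epochs=10)
    # Evaluate on the held-out split: score[0] is the loss, score[1] the accuracy
    score = model.evaluate(x_val, y_val, batch_size=32)
    print('Test score:', score[0])
    print('Test accuracy:', score[1])
    # Save the whole model (architecture and weights)
    model.save("CNN.model")
```

When I ran the code above, PyCharm printed the following GPU information. With the Ubuntu desktop disabled, freeMemory is still fairly large: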

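```
name: NVIDIA Tegra X1 major: 5 minor: 3 memoryClockRate(GHz): 0.9216
pciBusID: 0000:00:00.0
totalMemory: 3.87GiB freeMemory: 2.35GiB
```

After a few minutes of waiting, here are the results of the ten epochs. The validation accuracy actually reached 100%, so it seems my photo set (388 training images) is still rather monotonous.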

```
2019-04-26 12:59:37.485012: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1512] Adding visible gpu devices: 0
2019-04-26 12:59:43.495710: I tensorflow/core/common_runtime/gpu/gpu_device.cc:984] Device interconnect StreamExecutor with strength 1 edge matrix:
2019-04-26 12:59:43.495780: I tensorflow/core/common_runtime/gpu/gpu_device.cc:990] 0
2019-04-26 12:59:43.495805: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1003] 0: N
2019-04-26 12:59:43.495943: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1115] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 1632 MB memory) -> physical GPU (device: 0, name: NVIDIA Tegra X1, pci bus id: 0000:00:00.0, compute capability: 5.3)
2019-04-26 12:59:51.670396: I tensorflow/stream_executor/dso_loader.cc:153] successfully opened CUDA library libcublas.so.10.0 locally
388/388 [==============================] - 44s 114ms/step - loss: 2.7606 - acc: 0.2294
Epoch 2/10
388/388 [==============================] - 8s 20ms/step - loss: 1.8454 - acc: 0.2758
Epoch 3/10
388/388 [==============================] - 8s 19ms/step - loss: 1.1088 - acc: 0.6753
Epoch 4/10
388/388 [==============================] - 8s 19ms/step - loss: 0.5395 - acc: 0.9356
Epoch 5/10
388/388 [==============================] - 8s 19ms/step - loss: 0.3405 - acc: 0.9923
Epoch 6/10
388/388 [==============================] - 8s 19ms/step - loss: 0.3979 - acc: 0.9871
Epoch 7/10
388/388 [==============================] - 8s 19ms/step - loss: 0.5559 - acc: 0.9510
Epoch 8/10
388/388 [==============================] - 8s 19ms/step - loss: 0.3960 - acc: 0.9794
Epoch 9/10
388/388 [==============================] - 8s 20ms/step - loss: 0.3820 - acc: 0.9871
Epoch 10/10
388/388 [==============================] - 8s 19ms/step - loss: 0.3958 - acc: 0.9897
97/97 [==============================] - 3s 33ms/step
Test score: 0.31855019189647793
Test accuracy: 1.0
```
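The sample counts in the log follow from the 80/20 split in the training script: 388 training and 97 validation images, i.e. 485 images in total, and `int(485 * 0.8)` is 388. The pure-Python sketch below mirrors the shuffle, split and one-hot steps so the counts can be checked without a GPU. The script itself uses `np.random.shuffle` and `to_categorical`; the helper names, the fixed seed and the dummy labels here are illustrative assumptions.

```python
import random


def one_hot(label, num_classes):
    """Pure-Python equivalent of one row of Keras to_categorical."""
    row = [0.0] * num_classes
    row[label] = 1.0
    return row


def split_and_encode(labels, ratio=0.8, num_classes=5, seed=0):
    """Shuffle sample indices, split them into train/validation, one-hot the labels."""
    idx = list(range(len(labels)))
    random.Random(seed).shuffle(idx)  # one permutation shared by images and labels
    s = int(len(idx) * ratio)         # same truncation as the training script
    train_idx, val_idx = idx[:s], idx[s:]
    y_train = [one_hot(labels[i], num_classes) for i in train_idx]
    y_val = [one_hot(labels[i], num_classes) for i in val_idx]
    return train_idx, val_idx, y_train, y_val


# 485 dummy labels cycling over the 5 people (only the counts matter here)
labels = [i % 5 for i in range(485)]
train_idx, val_idx, y_train, y_val = split_and_encode(labels)
print(len(train_idx), len(val_idx))  # prints 388 97
```

With only 97 validation images, each image is worth about one percentage point of accuracy (96/97 is roughly 0.99), so a 100% result is a fairly coarse measurement.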