Image Neural Style Transfer (NST)

I. Overview

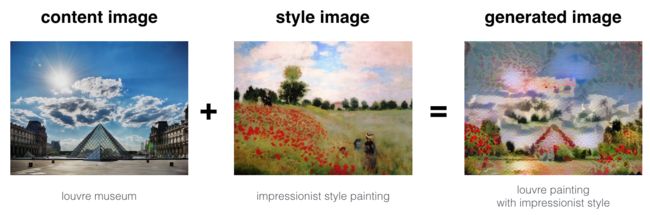

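Neural Style Transfer (NST) is one of the most fun techniques in deep learning. As the figure shows, it merges two images, a "content" image (C) and a "style" image (S), to create a "generated" image (G). The generated image G combines the content of image C with the style of image S. In this example, you will generate a picture of the Louvre museum in Paris (content image C) blended with a painting by Claude Monet, a leader of the Impressionist movement (style image S).

The principle is simple: we define two distances, one for content (Dc) and one for style (Ds). Dc measures how different the content of two images is, and Ds measures how different their style is. We then take a third image, the input (for example, a noise image), and transform it so as to minimize both its content distance to the content image and its style distance to the style image.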
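The idea of minimizing two distances at once can be tried on a toy problem before any network is involved. The sketch below is only an illustration under simplifying assumptions: content_distance compares raw pixel values and style_distance compares only the mean and standard deviation of the pixels. Both are hypothetical stand-ins chosen for brevity, not the Dc and Ds built later in this post.

```python
import torch

torch.manual_seed(0)

# Toy stand-ins for the two images (assumption: 1 x 8 x 8 grayscale "images").
content_img = torch.rand(1, 8, 8)
style_img = torch.rand(1, 8, 8) * 0.2 + 0.7  # bright, low-contrast "style"


def content_distance(x, content):
    # Hypothetical Dc: mean squared difference of pixel values.
    return torch.mean((x - content) ** 2)


def style_distance(x, style):
    # Hypothetical Ds: squared difference of global statistics (mean and std).
    return (x.mean() - style.mean()) ** 2 + (x.std() - style.std()) ** 2


# The third image: start from noise and let the optimizer deform it.
input_img = torch.rand(1, 8, 8, requires_grad=True)
optimizer = torch.optim.Adam([input_img], lr=0.05)

initial_loss = None
for step in range(200):
    optimizer.zero_grad()
    loss = (content_distance(input_img, content_img)
            + 10.0 * style_distance(input_img, style_img))
    if initial_loss is None:
        initial_loss = loss.item()
    loss.backward()
    optimizer.step()

final_loss = loss.item()
print("loss went from {:.4f} to {:.4f}".format(initial_loss, final_loss))
```

Note that the optimizer updates the pixels of input_img, not the weights of any model. The real implementation works the same way, with distances computed from VGG19 activations instead.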

II. PyTorch implementation

1. Loading and resizing images

    from PIL import Image
    import torch
    import torchvision.transforms as transforms

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    # use a smaller image size when no GPU is available
    imsize = 512 if torch.cuda.is_available() else 128

    loader = transforms.Compose([
        transforms.Resize(imsize),  # scale the shorter side to imsize
        transforms.ToTensor()])     # convert to a float tensor in [0, 1]

    def load_image(img_path):
        # force 3 channels so grayscale or RGBA files also match VGG's input
        image = Image.open(img_path).convert("RGB")
        # fake batch dimension required to fit network's input dimensions
        image = loader(image).unsqueeze(0)
        return image.to(device, torch.float)

2. Loading the pretrained VGG19 model

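    import torch
    import torchvision.models as models

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    # keep only the convolutional feature extractor, in evaluation mode
    # (torchvision >= 0.13 prefers weights=models.VGG19_Weights.DEFAULT
    # over the deprecated pretrained=True)
    cnn = models.vgg19(pretrained=True).features.to(device).eval()

3. Normalizing the input image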

    import torch
    import torch.nn as nn

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    # ImageNet channel statistics that the pretrained VGG expects
    cnn_normalization_mean = torch.tensor([0.485, 0.456, 0.406]).to(device)
    cnn_normalization_std = torch.tensor([0.229, 0.224, 0.225]).to(device)

    # create a module to normalize input image so we can easily put it in a
    # nn.Sequential
    class Normalization(nn.Module):
        def __init__(self, mean, std):
            super(Normalization, self).__init__()
            # .view the mean and std to make them [C x 1 x 1] so that they can
            # directly work with image Tensor of shape [B x C x H x W].
            # B is batch size. C is number of channels. H is height and W is width.
            # as_tensor avoids the copy warning that torch.tensor() raises
            # when mean and std are already tensors
            self.mean = torch.as_tensor(mean).view(-1, 1, 1)
            self.std = torch.as_tensor(std).view(-1, 1, 1)

        def forward(self, img):
            # normalize img
            return (img - self.mean) / self.std

4. Computing the content loss

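The shallower layers of a convolutional network tend to detect lower-level features such as edges and simple textures, while the deeper layers tend to detect higher-level features such as more complex textures and object classes.

We want the "generated" image G to have content similar to the content image C. Suppose we pick the activations of some layer to represent the content of an image. In practice, you get the most visually pleasing results if you choose a layer in the middle of the network, neither too shallow nor too deep.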
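To make "choosing a layer" concrete, the sketch below records the output of every convolution in a small network using forward hooks. The three-stage network is a randomly initialized stand-in (an assumption made so the example runs without downloading VGG19 weights), so only the shapes are meaningful: spatial size shrinks and channel count grows with depth.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for a VGG-style feature extractor.
toy_cnn = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2),
    nn.Conv2d(8, 16, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2),
    nn.Conv2d(16, 32, kernel_size=3, padding=1), nn.ReLU(),
).eval()

activations = {}


def save_activation(name):
    # Returns a hook that stores the layer output under the given name.
    def hook(module, inputs, output):
        activations[name] = output.detach()
    return hook


conv_index = 0
for layer in toy_cnn:
    if isinstance(layer, nn.Conv2d):
        conv_index += 1
        layer.register_forward_hook(save_activation("conv_{}".format(conv_index)))

image = torch.rand(1, 3, 32, 32)  # fake batch of one RGB image
with torch.no_grad():
    toy_cnn(image)

for name, act in activations.items():
    print(name, tuple(act.shape))
```

With this toy network the recorded shapes are (1, 8, 32, 32), (1, 16, 16, 16) and (1, 32, 8, 8). A "middle" layer such as conv_2 keeps some spatial layout while already combining simple features.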

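Suppose you have picked one particular hidden layer. Feed image C to the pretrained VGG network and run a forward pass. Let $a^{(C)}$ be the hidden-layer activation in the layer you chose, a tensor of shape nH×nW×nC. Then repeat the process with image G: set G as the input, run a forward pass, and let $a^{(G)}$ be the corresponding hidden-layer activation. The content cost function is defined as:

$$J_{content}(C,G) = \frac{1}{4 \times n_H \times n_W \times n_C} \sum_{\text{all entries}} (a^{(C)} - a^{(G)})^2$$

Here we can use PyTorch's mean squared error (MSE) loss directly. In the formula below, x and y have the same dimensions (they can be vectors or matrices), i is the index, and n is the number of elements:

$$loss(x, y) = \frac{1}{n} \sum_{i} (x_i - y_i)^2$$

The MSE differs from $J_{content}$ only by the constant factor 1/4, which the content weight absorbs.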
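As a quick numerical check, the sketch below compares a direct implementation of the content cost with F.mse_loss. It assumes the usual definition J_content = sum((a_C - a_G)^2) / (4 * nH * nW * nC) and random tensors in place of real VGG activations; content_cost is a helper name introduced here for illustration.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)


def content_cost(a_C, a_G):
    # J_content = 1 / (4 * n_H * n_W * n_C) * sum((a_C - a_G)^2)
    # a_C, a_G: activations of shape (1, n_C, n_H, n_W) in PyTorch layout.
    _, n_C, n_H, n_W = a_C.shape
    return torch.sum((a_C - a_G) ** 2) / (4 * n_H * n_W * n_C)


# Random stand-ins for the activations of the content and generated images.
a_C = torch.rand(1, 16, 8, 8)
a_G = torch.rand(1, 16, 8, 8)

j_content = content_cost(a_C, a_G)
mse = F.mse_loss(a_G, a_C)

# The two agree up to the constant factor 1/4.
print(j_content.item(), mse.item() / 4)
```

Because the two differ only by a constant, minimizing either one leads to the same generated image once the content weight is adjusted.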

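    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    class ContentLoss(nn.Module):
        def __init__(self, target):
            super(ContentLoss, self).__init__()
            # detach the target from the graph: it is a fixed value,
            # not something to backpropagate through
            self.target = target.detach()

        def forward(self, input):
            # store the loss and pass the input through unchanged, so the
            # module can sit transparently inside an nn.Sequential
            self.loss = F.mse_loss(input, self.target)
            return input

Note: a brief word on the detach() function: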

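- It returns a new tensor that is detached from the current graph.

- The returned tensor never requires gradients.

- The volatile flag that older PyTorch versions propagated through detach() was removed in PyTorch 0.4, when Variable and Tensor were merged; use torch.no_grad() for that purpose instead.

- One more caveat: the returned tensor and the original tensor share the same underlying data.

Use case:

Suppose we have two networks A and B, related by y=A(x), z=B(y). We want z.backward() to compute gradients for the parameters of B, but not for the parameters of A. We can do it like this:

    # y=A(x), z=B(y): compute gradients for B's parameters, not for A's
    # (z is assumed to be a scalar so that backward() needs no argument)

    # first approach
    y = A(x)
    z = B(y.detach())
    z.backward()

    # second approach
    y = A(x)
    y.detach_()
    z = B(y)
    z.backward()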
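The first approach can be run as follows. This is a minimal sketch in which A and B are small nn.Linear layers chosen for illustration, and the output is summed so that it is a scalar.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical networks A and B for illustration.
A = nn.Linear(4, 3)
B = nn.Linear(3, 1)
x = torch.rand(2, 4)

y = A(x)
z = B(y.detach()).sum()  # reduce to a scalar so backward() needs no argument
z.backward()

# Gradients stop at the detached tensor: A receives none, B receives all.
a_has_grad = any(p.grad is not None for p in A.parameters())
b_has_grad = all(p.grad is not None for p in B.parameters())
print("A has gradients:", a_has_grad)
print("B has gradients:", b_has_grad)

# detach() does not copy: both tensors point at the same storage.
shares_storage = y.detach().data_ptr() == y.data_ptr()
print("shares storage:", shares_storage)
```

This is the reason ContentLoss and StyleLoss detach their targets: the targets act as constants, and gradients flow only into the image being optimized.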

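5. Computing the style loss

5.1 Gram matrix (gram_matrix)

In linear algebra, the Gram matrix G of a set of vectors (v1,...,vn) is the matrix of their dot products, with entries $G_{ij} = v_i^T v_j$. In other words, Gij measures how similar vi is to vj: if they are very similar, their dot product is large, and so Gij is large.

In neural style transfer, the style matrix is computed by multiplying the "unrolled" filter matrix by its transpose:

$$G = A A^T$$

where A is the activation tensor reshaped into a matrix with one row per filter, of shape (nC, nH×nW).

The result is a matrix of dimension (nC,nC), where nC is the number of filters. Gij measures how similar the activations of filter i are to the activations of filter j.

An important part of the style matrix is its diagonal elements Gii, which measure how active filter i is. For example, suppose filter i detects vertical textures in the image. Then Gii measures how common vertical textures are in the image as a whole: if Gii is large, the image has a lot of vertical texture.

By capturing the prevalence of different types of features (Gii), as well as how much different features occur together (Gij), the style matrix G measures the style of the whole image.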
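A tiny hand-made example shows how to read the entries of a Gram matrix. The activation values below are invented for illustration: three filters, each unrolled to four spatial positions.

```python
import torch

# Hypothetical activations: 3 filters, each unrolled to 4 spatial positions.
A = torch.tensor([
    [1.0, 1.0, 0.0, 0.0],   # filter 0
    [2.0, 2.0, 0.0, 0.0],   # filter 1: fires where filter 0 fires
    [0.0, 0.0, 3.0, 0.0],   # filter 2: fires somewhere else
])

G = A @ A.t()  # shape (n_C, n_C) = (3, 3)
print(G)
```

The result is [[2, 4, 0], [4, 8, 0], [0, 0, 9]]. The diagonal (2, 8, 9) reflects how strongly each filter responds overall. The entry G[0][1] = 4 is large because filters 0 and 1 are active at the same positions, and G[0][2] = 0 because filters 0 and 2 never fire together.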

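5.2 Style loss

After generating the style matrix (Gram matrix), our goal is to minimize the distance between the Gram matrix of the style image S and that of the generated image G. For now we use only a single hidden layer a[l]; the corresponding style cost for this layer is defined as:

$$J_{style}^{[l]}(S,G) = \frac{1}{4 \times {n_C}^2 \times (n_H \times n_W)^2} \sum _{i=1}^{n_C}\sum_{j=1}^{n_C}(G^{(S)}_{ij} - G^{(G)}_{ij})^2$$

where G(S) and G(G) are the Gram matrices of the style image and the generated image respectively, computed from the activations of a particular hidden layer of the network.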
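The single-layer style cost, J_style = sum((G_S - G_G)^2) / (4 * nC^2 * (nH*nW)^2), can be written out directly. The sketch below uses random tensors in place of real activations, and the helper names unnormalized_gram and layer_style_cost are introduced here for illustration. It also checks one consequence of using Gram matrices: shuffling the spatial positions of the activations leaves the cost unchanged, up to rounding, because the Gram matrix sums over positions.

```python
import torch

torch.manual_seed(0)


def unnormalized_gram(a):
    # a: activations of shape (1, n_C, n_H, n_W); returns an (n_C, n_C) matrix.
    _, n_C, n_H, n_W = a.shape
    unrolled = a.reshape(n_C, n_H * n_W)
    return unrolled @ unrolled.t()


def layer_style_cost(a_S, a_G):
    # J_style = sum((G_S - G_G)^2) / (4 * n_C^2 * (n_H * n_W)^2)
    _, n_C, n_H, n_W = a_S.shape
    G_S = unnormalized_gram(a_S)
    G_G = unnormalized_gram(a_G)
    return torch.sum((G_S - G_G) ** 2) / (4 * n_C ** 2 * (n_H * n_W) ** 2)


# Random stand-ins for the activations of the style and generated images.
a_S = torch.rand(1, 4, 5, 5)
a_G = torch.rand(1, 4, 5, 5)
cost = layer_style_cost(a_S, a_G)

# Apply the same random permutation of positions to every channel of a_G.
perm = torch.randperm(25)
a_G_shuffled = a_G.reshape(1, 4, 25)[:, :, perm].reshape(1, 4, 5, 5)
cost_shuffled = layer_style_cost(a_S, a_G_shuffled)

print(cost.item(), cost_shuffled.item())
```

This invariance is why the Gram matrix captures texture-like statistics (style) rather than where things are in the image (content).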

    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    def gram_matrix(input):
        a, b, c, d = input.size()  # a=batch size(=1)
        # b=number of feature maps
        # (c,d)=dimensions of a f. map (N=c*d)

        features = input.view(a * b, c * d)  # reshape F_XL into \hat F_XL

        G = torch.mm(features, features.t())  # compute the gram product

        # we 'normalize' the values of the gram matrix
        # by dividing by the total number of elements in the feature maps.
        return G.div(a * b * c * d)

    class StyleLoss(nn.Module):
        def __init__(self, target_feature):
            super(StyleLoss, self).__init__()
            # the target Gram matrix is a constant, so detach it from the graph
            self.target = gram_matrix(target_feature).detach()

        def forward(self, input):
            G = gram_matrix(input)
            # store the loss and return the input unchanged
            self.loss = F.mse_loss(G, self.target)
            return input
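StyleLoss does not reproduce the single-layer formula J_style = sum((G_S - G_G)^2) / (4 * nC^2 * (nH*nW)^2) literally: it normalizes the Gram matrix by nC*nH*nW and then takes a mean over the nC^2 entries. The sketch below, run on random stand-in activations, suggests that the two differ only by the constant factor 4 / nC^2, which the style weight can absorb.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)


def gram_matrix(input):
    # Same normalization as the gram_matrix function above.
    a, b, c, d = input.size()
    features = input.view(a * b, c * d)
    return torch.mm(features, features.t()).div(a * b * c * d)


n_C, n_H, n_W = 4, 5, 5
a_S = torch.rand(1, n_C, n_H, n_W)  # stand-in style activations
a_G = torch.rand(1, n_C, n_H, n_W)  # stand-in generated-image activations

# What StyleLoss.forward stores in self.loss:
module_loss = F.mse_loss(gram_matrix(a_G), gram_matrix(a_S))

# The per-layer formula with unnormalized Gram matrices:
S = a_S.view(n_C, n_H * n_W)
G = a_G.view(n_C, n_H * n_W)
formula_cost = torch.sum((S @ S.t() - G @ G.t()) ** 2) / (
    4 * n_C ** 2 * (n_H * n_W) ** 2)

ratio = (module_loss / formula_cost).item()
print(ratio, 4 / n_C ** 2)
```

Because the factor depends on nC, layers with different channel counts are weighted differently than in the formula. This is one reason the style weight usually needs tuning by eye.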

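6. Building the model

We create a cost function that minimizes both the style cost and the content cost. The formula is:

$$J(G) = \alpha J_{content}(C,G) + \beta J_{style}(S,G)$$

In the code, α corresponds to content_weight and β to style_weight. Let's implement the total cost function, which includes both the content cost and the style cost.

The defaults are style_weight=1000000, content_weight=1 and num_steps=300; you can change these values in the run_style_transfer function.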

    from normalization import Normalization
    from contentloss import ContentLoss
    from styleloss import StyleLoss
    import torch
    import torch.nn as nn
    import torch.optim as optim
    import copy

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    # desired depth layers to compute style/content losses :
    content_layers_default = ['conv_4']
    style_layers_default = ['conv_1', 'conv_2', 'conv_3', 'conv_4', 'conv_5']

    def get_style_model_and_losses(cnn, normalization_mean, normalization_std,
                                   style_img, content_img,
                                   content_layers=content_layers_default,
                                   style_layers=style_layers_default):
        cnn = copy.deepcopy(cnn)

        # normalization module
        normalization = Normalization(normalization_mean, normalization_std).to(device)

        # just in order to have an iterable access to or list of content/style
        # losses
        content_losses = []
        style_losses = []

        # assuming that cnn is a nn.Sequential, so we make a new nn.Sequential
        # to put in modules that are supposed to be activated sequentially
        model = nn.Sequential(normalization)

        i = 0  # increment every time we see a conv
        for layer in cnn.children():
            if isinstance(layer, nn.Conv2d):
                i += 1
                name = 'conv_{}'.format(i)
            elif isinstance(layer, nn.ReLU):
                name = 'relu_{}'.format(i)
                # The in-place version doesn't play very nicely with the ContentLoss
                # and StyleLoss we insert below. So we replace with out-of-place
                # ones here.
                layer = nn.ReLU(inplace=False)
            elif isinstance(layer, nn.MaxPool2d):
                name = 'pool_{}'.format(i)
            elif isinstance(layer, nn.BatchNorm2d):
                name = 'bn_{}'.format(i)
            else:
                raise RuntimeError('Unrecognized layer: {}'.format(layer.__class__.__name__))

            model.add_module(name, layer)

            if name in content_layers:
                # add content loss:
                target = model(content_img).detach()
                content_loss = ContentLoss(target)
                model.add_module("content_loss_{}".format(i), content_loss)
                content_losses.append(content_loss)

            if name in style_layers:
                # add style loss:
                target_feature = model(style_img).detach()
                style_loss = StyleLoss(target_feature)
                model.add_module("style_loss_{}".format(i), style_loss)
                style_losses.append(style_loss)

        # now we trim off the layers after the last content and style losses
        for i in range(len(model) - 1, -1, -1):
            if isinstance(model[i], ContentLoss) or isinstance(model[i], StyleLoss):
                break

        model = model[:(i + 1)]

        return model, style_losses, content_losses
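The naming and trimming logic of get_style_model_and_losses can be tried in isolation. The sketch below is a reduced version under stated assumptions: a five-layer toy stack replaces VGG19, and a pass-through Marker module (a hypothetical stand-in for ContentLoss and StyleLoss) is inserted after conv_1 only.

```python
import torch
import torch.nn as nn


class Marker(nn.Module):
    # Hypothetical stand-in for ContentLoss/StyleLoss: passes input through.
    def forward(self, x):
        return x


layers = [nn.Conv2d(3, 4, 3, padding=1), nn.ReLU(inplace=True), nn.MaxPool2d(2),
          nn.Conv2d(4, 8, 3, padding=1), nn.ReLU(inplace=True)]

model = nn.Sequential()
i = 0  # counts convolutions, as in the function above
for layer in layers:
    if isinstance(layer, nn.Conv2d):
        i += 1
        name = "conv_{}".format(i)
    elif isinstance(layer, nn.ReLU):
        name = "relu_{}".format(i)
        layer = nn.ReLU(inplace=False)
    else:
        name = "pool_{}".format(i)
    model.add_module(name, layer)
    if name == "conv_1":
        model.add_module("marker_{}".format(i), Marker())

# Walk backwards to the last Marker and drop everything after it.
for last in range(len(model) - 1, -1, -1):
    if isinstance(model[last], Marker):
        break
model = model[:last + 1]

names = [name for name, _ in model.named_children()]
print(names)
```

This prints ['conv_1', 'marker_1']. Slicing an nn.Sequential keeps the module names, and the trimmed model no longer spends time on layers whose output no loss module reads.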

    def get_input_optimizer(input_img):
        # this line to show that input is a parameter that requires a gradient
        optimizer = optim.LBFGS([input_img.requires_grad_()])
        return optimizer
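L-BFGS is used differently from optimizers such as SGD: step() takes a closure that recomputes the loss, because the optimizer may need to evaluate the function several times per step. The sketch below shows that pattern on a simple quadratic instead of an image; the target values are arbitrary and chosen for illustration.

```python
import torch

target = torch.tensor([1.0, -2.0, 3.0])
x = torch.zeros(3)

# Same pattern as get_input_optimizer: the tensor itself is the "parameter".
optimizer = torch.optim.LBFGS([x.requires_grad_()])

evaluations = [0]  # a list so that closure() can update the counter


def closure():
    # LBFGS may call this several times per step to re-evaluate the loss.
    optimizer.zero_grad()
    loss = torch.sum((x - target) ** 2)
    loss.backward()
    evaluations[0] += 1
    return loss


for _ in range(5):
    optimizer.step(closure)

print(x.detach(), evaluations[0])
```

After five calls to step(), x is close to the target and the closure has run more than five times. The same effect explains why the run counter in run_style_transfer is incremented inside the closure rather than in the outer loop.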

    def run_style_transfer(cnn, normalization_mean, normalization_std,
                           content_img, style_img, input_img, num_steps=300,
                           style_weight=1000000, content_weight=1):
        """Run the style transfer."""
        print('Building the style transfer model..')
        model, style_losses, content_losses = get_style_model_and_losses(
            cnn, normalization_mean, normalization_std, style_img, content_img)
        optimizer = get_input_optimizer(input_img)

        print('Optimizing..')
        run = [0]  # a list so that closure() can update the counter
        while run[0] <= num_steps:

            def closure():
                # correct the values of updated input image
                with torch.no_grad():
                    input_img.clamp_(0, 1)

                optimizer.zero_grad()
                # the forward pass fills in .loss on every loss module
                model(input_img)
                style_score = 0
                content_score = 0

                for sl in style_losses:
                    style_score += sl.loss
                for cl in content_losses:
                    content_score += cl.loss

                style_score *= style_weight
                content_score *= content_weight

                loss = style_score + content_score
                loss.backward()

                run[0] += 1
                if run[0] % 50 == 0:
                    # print the counter itself, not the list that holds it
                    print("run {}:".format(run[0]))
                    print('Style Loss : {:4f} Content Loss: {:4f}'.format(
                        style_score.item(), content_score.item()))
                    print()

                return style_score + content_score

            optimizer.step(closure)

        # a last correction...
        with torch.no_grad():
            input_img.clamp_(0, 1)

        return input_img
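The clamping step in run_style_transfer keeps pixel values in the valid range [0, 1]. One way to apply such an in-place correction to a tensor that requires gradients is to wrap it in torch.no_grad(). This is sketched here as an assumption about current PyTorch practice, with made-up pixel values.

```python
import torch

# Made-up "pixels", two of them outside the valid range.
x = torch.tensor([-0.5, 0.3, 1.7], requires_grad=True)

# Clamp in place without recording the operation in the autograd graph.
with torch.no_grad():
    x.clamp_(0, 1)

print(x)                 # values are now inside [0, 1]
print(x.requires_grad)   # still a leaf tensor that the optimizer can update
```

The tensor remains a leaf with requires_grad=True, so the optimizer created earlier keeps updating the same object.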

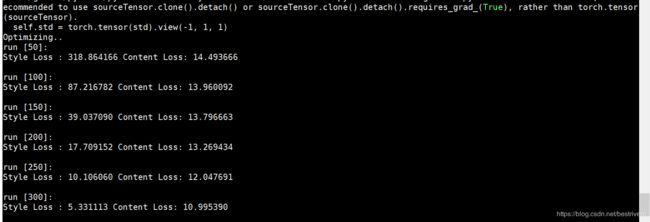

7. Running the model

This part of the code can go into run.py; then simply run run.py.

    from image_loader import load_image
    from style_transfer import run_style_transfer
    from load_vgg19 import cnn
    import torch
    import torchvision.transforms as transforms
    import os

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    cnn_normalization_mean = torch.tensor([0.485, 0.456, 0.406]).to(device)
    cnn_normalization_std = torch.tensor([0.229, 0.224, 0.225]).to(device)

    unloader = transforms.ToPILImage()  # converts a tensor back to a PIL image

    style_img = load_image("./images/style.jpg")
    content_img = load_image("./images/content.jpg")
    # start the optimization from a copy of the content image
    input_img = content_img.clone()

    output = run_style_transfer(cnn, cnn_normalization_mean, cnn_normalization_std,
                                content_img, style_img, input_img)

    image = output.detach().cpu().clone()  # we clone the tensor to not do changes on it
    image = image.squeeze(0)  # remove the fake batch dimension
    image = unloader(image)

    # create the output folder if needed instead of failing in os.chdir
    os.makedirs("output", exist_ok=True)
    image.save(os.path.join("output", "output.png"))

Screenshot of a successful run:

The final output.png is saved in the output folder.

Single-layer style cost (the formula for section 5.2):

$$J_{style}^{[l]}(S,G) = \frac{1}{4 \times {n_C}^2 \times (n_H \times n_W)^2} \sum _{i=1}^{n_C}\sum_{j=1}^{n_C}(G^{(S)}_{ij} - G^{(G)}_{ij})^2$$