周志华《机器学习》Ch8. 集成学习:AdaBoost的python实现

概述

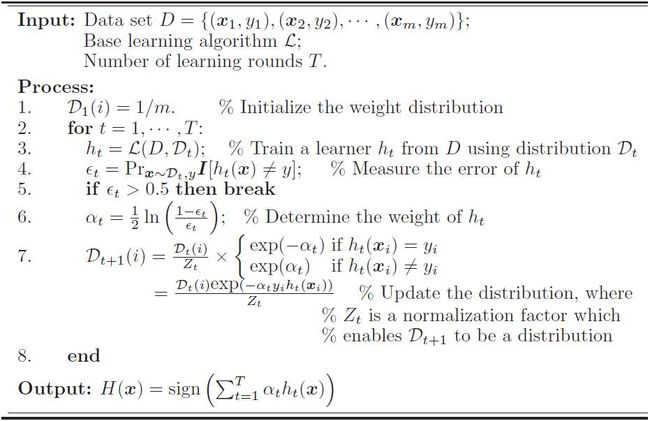

AdaBoost(Adaptive Boosting)是一种集成学习技术,可将弱学习器提升为强学习器。大致思路是:根据初始训练集训练出一个基学习器,再根据基学习器的表现调整训练样本的分布,使得该基学习器分错的样本权重提高,再根据新的分布训练下一个学习器;如此反复直到学习器的数量达到预先指定值T.

算法

推导

针对上面的算法流程中的“6”和“7”进行推导

指数损失函数

AdaBoost采用指数损失函数

![]()

其中![]() 是样本

是样本![]() 的类别,

的类别,![]() (二分类问题,多分类问题采用多个AdaBoost分类器按投票法决定)。

(二分类问题,多分类问题采用多个AdaBoost分类器按投票法决定)。![]() 是分类器。同Sigmoid损失函数一样,指数损失函数是对0-1损失函数的一种连续可微近似。

是分类器。同Sigmoid损失函数一样,指数损失函数是对0-1损失函数的一种连续可微近似。

求分类器权重

当基于分布![]() 的分类器

的分类器![]() 产生后,该分类器的权重

产生后,该分类器的权重![]() 应使得

应使得![]() 最小化指数损失函数

最小化指数损失函数

其中![]() 是分类器

是分类器![]() 在分布

在分布![]() 上的错误率。损失函数求极值,对

上的错误率。损失函数求极值,对![]() 求导

求导

得

该式对应算法流程中的第6步。

更新分布

分布更新的目标是下一轮的基学习器![]() 能够纠正

能够纠正![]() 的全部错误,即最小化

的全部错误,即最小化

上式利用了泰勒公式对![]() 展开到平方项并利用了性质

展开到平方项并利用了性质

![]()

于是

则

该式对应算法流程中的第3步。

求分布更新的递推公式

该式对应算法流程中的第7步。

代码

# -*- coding: utf-8 -*-

"""

Created on Tue Aug 28 17:32:05 2018

AdaBoost

From 'Machine Learning, Zhihua Zhou' Ch8

Model: P192 problem 8.3

Base Learner: One-Layer Decision Tree

Dataset: P84 watermelon_3.0a (watermelon_3.0a.npy)

@author: weiyx15

"""

import numpy as np

import matplotlib.pyplot as plt

class AdaBoost:

def load_data(self, filename):

dic = np.load(filename)

self.x = dic['arr_0']

self.y = dic['arr_1']

self.m = self.x.shape[0]

self.d = self.x.shape[1]

self.k = self.y.max() + 1

def __init__(self, T):

self.load_data('watermelon_3.0a.npz')

self.T = T

self.ind = list() # index of feature of decision trees

self.val = list() # value of selected feature

self.oper = list() # oper=0, <=; oper=1, >

self.W = 1/self.m * np.ones((self.m,))

def error_rate(self, ind, val, oper):

err_rate = 0

if oper == 0:

for i in range(self.m):

if (self.x[i,ind] <= val and self.y[i] == 1)\

or (self.x[i,ind] > val and self.y[i] == 0):

err_rate = err_rate + self.W[i]

else:

for i in range(self.m):

if (self.x[i,ind] > val and self.y[i] == 1)\

or (self.x[i,ind] <= val and self.y[i] == 0):

err_rate = err_rate + self.W[i]

return err_rate

def calculate_separation(self):

data_list = list()

for i in range(self.m):

data_list.append(aPieceofData(self.x[i,:],self.d,self.y[i]))

dl = [None] * self.d

for i in range(self.d):

dl[i] = sorted(data_list, key=lambda apiece : apiece.x[i])

self.Sep = [list()] * self.d

for i in range(self.m):

for j in range(self.d):

if i > 0:

self.Sep[j].append((dl[j][i-1].x[j] + dl[j][i].x[j])/2)

def decision_tree(self):

hlist = [0]*self.m # performance on dataset: -1-right, 1-wrong

err_min = np.inf

for ind in range(self.d):

for val in self.Sep[ind]:

for oper in range(2): # 0 - <=, 1 - >

err = self.error_rate(ind, val, oper)

if (err < err_min):

err_min = err

ind_min = ind

val_min = val

oper_min = oper

if oper_min == 0:

for i in range(self.m):

if ((self.x[i,ind_min] <= val_min and self.y[i] == 0)\

or (self.x[i,ind_min] > val_min and self.y[i] == 1)):

hlist[i] = -1

else:

hlist[i] = 1

else:

for i in range(self.m):

if ((self.x[i,ind_min] > val_min and self.y[i] == 0)\

or (self.x[i,ind_min] <= val_min and self.y[i] == 1)):

hlist[i] = -1

else:

hlist[i] = 1

return err_min, ind_min, val_min, oper_min, hlist

def train(self):

self.calculate_separation()

self.alpha = list()

for t in range(self.T):

err_min, ind_min, val_min, oper_min, hlist = \

self.decision_tree()

self.ind.append(ind_min)

self.val.append(val_min)

self.oper.append(oper_min)

if err_min > 0.5:

break

at = .5 * np.log((1-err_min)/err_min)

self.alpha.append(at)

for i in range(self.m):

self.W[i] = self.W[i]*np.exp(hlist[i]*at)

self.W = self.W / self.W.sum()

def model_print(self):

n_T = len(self.alpha)

for i in range(n_T):

if (self.oper[i] == 0):

print('feature '+str(self.ind[i])+': <='+str(self.val[i])\

+' weight: '+str(self.alpha[i]))

else:

print('feature '+str(self.ind[i])+': >'+str(self.val[i])\

+' weight: '+str(self.alpha[i]))

def test_score(self, xt):

score = 0

n_T = len(self.alpha)

for i in range(n_T):

if ((self.oper[i] == 0 and xt[self.ind[i]] <= self.val[i]) \

or (self.oper[i] == 1 and xt[self.ind[i]] > self.val[i])):

score = score + self.alpha[i]

else:

score = score - self.alpha[i]

return score

def plot_data(self):

x1 = self.x[self.y==1,:]

x0 = self.x[self.y==0,:]

plt.plot(x1[:,0], x1[:,1], 'b.')

plt.plot(x0[:,0], x0[:,1], 'r.')

def test(self, xt):

if self.test_score(xt) > 0:

return 0

else:

return 1

class aPieceofData:

def __init__(self, x, d, y):

self.x = x

self.d = d

self.y = y

if __name__ == '__main__':

ada = AdaBoost(11)

ada.train()

ans = ada.test([0.5, 0.2])

ada.model_print()结果

分类器集成:

feature 1: <=0.2045 weight: 0.770222520474

feature 0: <=0.3815 weight: 0.763028151748

feature 1: <=0.373 weight: 0.662026026121

feature 0: >0.6365 weight: 0.557905864925

feature 0: <=0.3815 weight: 0.432392755165

feature 1: <=0.373 weight: 0.587791717506

feature 1: <=0.126 weight: 0.651166741214

feature 1: <=0.373 weight: 0.381548742991

feature 0: <=0.3815 weight: 0.501460529457

feature 0: >0.5745 weight: 0.606996550442

feature 0: <=0.3815 weight: 0.367149091236