Setting up a MongoDB 3.4 sharded cluster

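Background: there are three ways to build a MongoDB cluster: 1) master-slave replication (no longer recommended by MongoDB), 2) replica sets, and 3) sharding. This article shows how to build a cluster using sharding. A sharded cluster is itself built on replica sets. During setup, the shard nodes and the config nodes are each configured as replica sets, and finally the shard replica sets are added to the router node to form the cluster.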

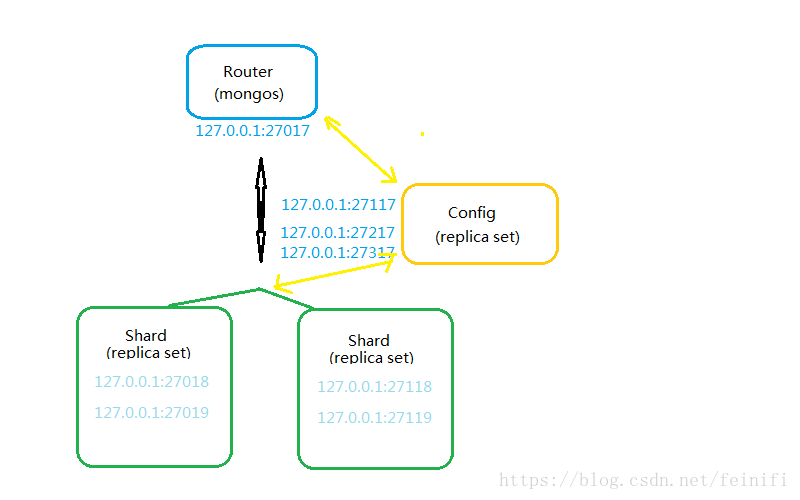

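A sharded cluster has three kinds of roles: shard, config, and router, as shown in the figure above.

shard: shard nodes, which store the data.

config: config nodes, which store no user data, only metadata such as the shard key ranges.

router: the routing node, the entry point through which external clients connect to the cluster. It runs mongos, which makes sharding transparent to clients, so that they can use the cluster as if it were a single-node database.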

Environment:

The cluster designed here consists of the following nodes:

router : 127.0.0.1:27017

config : config/127.0.0.1:27117,127.0.0.1:27217,127.0.0.1:27317

shard : shard1/127.0.0.1:27018,127.0.0.1:27019 and shard2/127.0.0.1:27118,127.0.0.1:27119

Setup steps:

MongoDB download URL:

http://downloads.mongodb.org/linux/mongodb-linux-x86_64-3.4.0.tgz?_ga=2.55492392.2036626358.1530260071-2147164267.1528775467

To download a different version, choose one here: http://dl.mongodb.org/dl/linux.

1. Create configuration files for the three roles: shard nodes, config nodes, and the router node.

shard01.conf

port=27018

fork=true

dbpath=/home/hadoop/software/mongodb-3.4/data/shard01

logpath=/home/hadoop/software/mongodb-3.4/logs/shard01.log

logappend=true

bind_ip=127.0.0.1

replSet=shard1

shardsvr=true

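shard02.conf differs from shard01.conf only in the port, which is 27019, and in the data and log paths, which are named after the file (data/shard02 and logs/shard02.log).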

shard21.conf

port=27118

fork=true

dbpath=/home/hadoop/software/mongodb-3.4/data/shard21

logpath=/home/hadoop/software/mongodb-3.4/logs/shard21.log

logappend=true

bind_ip=127.0.0.1

replSet=shard2

shardsvr=true

shard22.conf differs from shard21.conf only in the port, which is 27119, and in the data and log paths, which are named after the file.

config.conf

port=27117

fork=true

dbpath=/home/hadoop/software/mongodb-3.4/data/config

logpath=/home/hadoop/software/mongodb-3.4/logs/config.log

logappend=true

bind_ip=127.0.0.1

replSet=config

configsvr=true

config2.conf and config3.conf differ from config.conf only in their ports, 27217 and 27317 respectively, and in the data and log paths, which are named after each file.

router.conf

port=27017

configdb=config/127.0.0.1:27117,127.0.0.1:27217,127.0.0.1:27317

logpath=/home/hadoop/software/mongodb-3.4/logs/router.log

fork=true

logappend=true

Notes on the configuration files:

shard01.conf and shard02.conf configure the shard1 shard.

shard21.conf and shard22.conf configure the shard2 shard.

config.conf, config2.conf and config3.conf configure the config nodes.
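Because the seven mongod files differ only in name, port, replica set name and role flag, they can also be generated instead of edited by hand. The sketch below is one way to do that, not the article's own method: the helper name `write_mongod_conf` and the `BASE` variable are hypothetical, and it assumes it is run from the MongoDB install directory (e.g. /home/hadoop/software/mongodb-3.4). It also creates the data and log directories, which mongod expects to exist before it starts.

```shell
#!/bin/sh
# Hypothetical generator for the seven mongod config files described above.
# BASE defaults to the current directory (an assumption): run it from the install directory.
BASE=${BASE:-$(pwd)}

# write_mongod_conf NAME PORT REPLSET ROLE
#   NAME     file name without .conf; also names the data directory and the log file
#   PORT     port mongod listens on
#   REPLSET  replica set name: shard1, shard2 or config
#   ROLE     shardsvr or configsvr
write_mongod_conf() {
    mkdir -p "$BASE/conf" "$BASE/data/$1" "$BASE/logs"
    cat > "$BASE/conf/$1.conf" <<EOF
port=$2
fork=true
dbpath=$BASE/data/$1
logpath=$BASE/logs/$1.log
logappend=true
bind_ip=127.0.0.1
replSet=$3
$4=true
EOF
}

write_mongod_conf shard01 27018 shard1 shardsvr
write_mongod_conf shard02 27019 shard1 shardsvr
write_mongod_conf shard21 27118 shard2 shardsvr
write_mongod_conf shard22 27119 shard2 shardsvr
write_mongod_conf config  27117 config configsvr
write_mongod_conf config2 27217 config configsvr
write_mongod_conf config3 27317 config configsvr
```

The generated files use the same keys in the same order as shard01.conf, shard21.conf and config.conf above; router.conf is left to be written by hand.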

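2. Start the shard nodes and config nodes.

Startup script (start_mongod.sh). Note that its first two commands delete all existing data and log files, so every run starts from an empty cluster: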

#!/bin/sh
rm -rf data/*/*
rm -f logs/*
bin/mongod -f conf/shard01.conf
bin/mongod -f conf/shard02.conf
bin/mongod -f conf/shard21.conf
bin/mongod -f conf/shard22.conf
bin/mongod -f conf/config.conf
bin/mongod -f conf/config2.conf
bin/mongod -f conf/config3.conf

Output like the following shows that all seven processes started successfully.

[root@server mongodb-3.4]# ./start_mongod.sh
about to fork child process, waiting until server is ready for connections.
forked process: 4870
child process started successfully, parent exiting
about to fork child process, waiting until server is ready for connections.
forked process: 4895
child process started successfully, parent exiting
about to fork child process, waiting until server is ready for connections.
forked process: 4920
child process started successfully, parent exiting
about to fork child process, waiting until server is ready for connections.
forked process: 4944
child process started successfully, parent exiting
about to fork child process, waiting until server is ready for connections.
forked process: 4970
child process started successfully, parent exiting
about to fork child process, waiting until server is ready for connections.
forked process: 5002
child process started successfully, parent exiting
about to fork child process, waiting until server is ready for connections.
forked process: 5035
child process started successfully, parent exiting
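The original start script wipes `data/` and `logs/` on every run. To restart the same cluster without losing data, a variant along these lines may help. It is a sketch under assumptions: the function name `start_mongods` and the `MONGOD` override are hypothetical, and it must be run from the install directory so that `bin/` and `conf/` resolve.

```shell
#!/bin/sh
# Hypothetical re-runnable start logic: unlike start_mongod.sh it does NOT delete data/ or logs/.
# MONGOD defaults to bin/mongod; set MONGOD=echo to print the arguments instead (dry run).
start_mongods() {
    for name in shard01 shard02 shard21 shard22 config config2 config3; do
        # mongod refuses to start if its dbpath or log directory is missing, so create them first.
        mkdir -p "data/$name" logs
        # Stop at the first process that fails to start instead of launching the rest.
        if ! ${MONGOD:-bin/mongod} -f "conf/$name.conf"; then
            echo "failed to start $name" >&2
            return 1
        fi
    done
}
```

Add a final `start_mongods` line to use it as a start script. Running `MONGOD=echo start_mongods` prints one `-f conf/NAME.conf` line per node without starting anything, which is a cheap way to check the file names.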

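3. Form a replica set from the config nodes.

Log in to one of the config nodes, e.g. 127.0.0.1:27117:

bin/mongo --port 27117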

> var config = {_id:'config',members:[{_id:0,host:'127.0.0.1:27117'},{_id:1,host:'127.0.0.1:27217'},{_id:2,host:'127.0.0.1:27317'}]}

> rs.initiate(config)

{ "ok" : 1 }

config:SECONDARY>

config:SECONDARY>

config:PRIMARY> exit

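After rs.initiate() returns, wait about 10 seconds: the prompt changes from SECONDARY to PRIMARY once the current node has been elected primary.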
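The fixed 10-second wait can be replaced with a poll. A minimal sketch, assuming the legacy `bin/mongo` shell shipped with 3.4: `wait_for_true` is a hypothetical helper that reruns a command until it prints `true`, and `db.isMaster().ismaster` is true only on the primary.

```shell
#!/bin/sh
# Hypothetical helper: rerun a command until it prints exactly "true", at most TRIES times.
# wait_for_true TRIES COMMAND [ARGS...]
wait_for_true() {
    tries=$1
    shift
    while [ "$tries" -gt 0 ]; do
        # Errors such as refused connections are discarded; only stdout is compared.
        if [ "$("$@" 2>/dev/null)" = "true" ]; then
            return 0
        fi
        tries=$((tries - 1))
        # Pause between attempts, but not after the last one.
        if [ "$tries" -gt 0 ]; then
            sleep 1
        fi
    done
    return 1
}

# Possible usage against the config node from step 3 (waits up to about 30 seconds):
# wait_for_true 30 bin/mongo --port 27117 --quiet --eval 'print(db.isMaster().ismaster)'
```

The same call with `--port 27018` or `--port 27118` would work for the shard replica sets in step 4.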

4. Form replica sets from the shard nodes.

Log in to 127.0.0.1:27018:

bin/mongo --port 27018

> var config = {_id:'shard1',members:[{_id:0,host:'127.0.0.1:27018'},{_id:1,host:'127.0.0.1:27019'}]}

> rs.initiate(config)

{ "ok" : 1 }

shard1:SECONDARY>

shard1:SECONDARY>

shard1:PRIMARY>

Then log in to 127.0.0.1:27118:

bin/mongo --port 27118

> var config = {_id:'shard2',members:[{_id:0,host:'127.0.0.1:27118'},{_id:1,host:'127.0.0.1:27119'}]}

> rs.initiate(config)

{ "ok" : 1 }

shard2:SECONDARY>

shard2:PRIMARY>

After both shard replica sets, shard1 and shard2, have been initiated, wait about 10 seconds: the node you are logged in to becomes the primary of its shard.
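The rs.initiate() documents in steps 3 and 4 follow one pattern: the replica set name plus its members numbered from 0. A hypothetical `rs_config` helper can print such a document so that it can be passed to `bin/mongo --eval` instead of being typed interactively. This is a sketch, not part of the original procedure.

```shell
#!/bin/sh
# Hypothetical helper: print an rs.initiate() document.
# rs_config SETNAME HOST:PORT [HOST:PORT...]   (member _id values start at 0)
rs_config() {
    set_name=$1
    shift
    id=0
    members=""
    for host in "$@"; do
        # Separate members with commas; the first member gets no leading comma.
        if [ -n "$members" ]; then
            members="${members},"
        fi
        members="${members}{_id:${id},host:'${host}'}"
        id=$((id + 1))
    done
    printf "{_id:'%s',members:[%s]}\n" "$set_name" "$members"
}

# Possible usage: initiate shard2 non-interactively.
# bin/mongo --port 27118 --quiet --eval "rs.initiate($(rs_config shard2 127.0.0.1:27118 127.0.0.1:27119))"
```

For shard1 it prints exactly the document typed by hand in step 4.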

5. Start the router node and add the shards.

Startup script (start_mongos.sh):

#!/bin/sh
bin/mongos -f conf/router.conf

Each shard is added in the form replSetName/host:port,host:port, for example "shard1/127.0.0.1:27018,127.0.0.1:27019".
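The shard string is just the replica set name, a slash, and the comma-separated member list. A hypothetical `shard_spec` helper that assembles it is sketched below, with the non-interactive `sh.addShard()` call it could feed shown as a comment.

```shell
#!/bin/sh
# Hypothetical helper: build the "replSetName/host:port,host:port" string for sh.addShard().
# shard_spec SETNAME HOST:PORT [HOST:PORT...]
shard_spec() {
    set_name=$1
    shift
    # "$*" joins the remaining arguments with the first character of IFS (a comma here);
    # IFS is changed only inside the command substitution's subshell.
    hosts=$(IFS=,; printf '%s' "$*")
    printf '%s/%s\n' "$set_name" "$hosts"
}

# Possible usage through the router on port 27017:
# bin/mongo --port 27017 --quiet --eval "sh.addShard('$(shard_spec shard1 127.0.0.1:27018 127.0.0.1:27019)')"
```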

[root@server mongodb-3.4]# ./start_mongos.sh

about to fork child process, waiting until server is ready for connections.

forked process: 5928

child process started successfully, parent exiting

[root@server mongodb-3.4]# bin/mongo --port 27017

MongoDB shell version v3.4.0

connecting to: mongodb://127.0.0.1:27017/

MongoDB server version: 3.4.0

Server has startup warnings:

2018-06-29T14:51:29.383+0800 I CONTROL [main]

2018-06-29T14:51:29.383+0800 I CONTROL [main] ** WARNING: Access control is not enabled for the database.

2018-06-29T14:51:29.383+0800 I CONTROL [main] ** Read and write access to data and configuration is unrestricted.

2018-06-29T14:51:29.383+0800 I CONTROL [main] ** WARNING: You are running this process as the root user, which is not recommended.

2018-06-29T14:51:29.384+0800 I CONTROL [main]

mongos> sh.addShard("shard1/127.0.0.1:27018,127.0.0.1:27019")

{ "shardAdded" : "shard1", "ok" : 1 }

mongos> sh.addShard("shard2/127.0.0.1:27118,127.0.0.1:27119")

{ "shardAdded" : "shard2", "ok" : 1 }

Cluster status screenshot:

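6. Enable sharding for the database, then shard the collection on an indexed shard key.

For an empty collection such as push.user, sh.shardCollection() creates the index on the shard key {name:1} automatically.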

mongos> use admin

switched to db admin

mongos> sh.enableSharding("push")

{ "ok" : 1 }

mongos> sh.shardCollection("push.user",{name:1})

{ "collectionsharded" : "push.user", "ok" : 1 }

7. Test by writing data.

mongos> use push
switched to db push
mongos> for(var i=1;i<=2000000;i++){db.user.save({_id:i,name:"user-"+i,age:18})}
WriteResult({ "nMatched" : 0, "nUpserted" : 1, "nModified" : 0, "_id" : 2000000 })
mongos> sh.status()
--- Sharding Status ---
  sharding version: {
      "_id" : 1,
      "minCompatibleVersion" : 5,
      "currentVersion" : 6,
      "clusterId" : ObjectId("5b35d5a5d35df9180e59a17e")
  }
  shards:
      { "_id" : "shard1", "host" : "shard1/127.0.0.1:27018,127.0.0.1:27019", "state" : 1 }
      { "_id" : "shard2", "host" : "shard2/127.0.0.1:27118,127.0.0.1:27119", "state" : 1 }
  active mongoses:
      "3.4.0" : 1
  autosplit:
      Currently enabled: yes
  balancer:
      Currently enabled: yes
      Currently running: no
          Balancer lock taken at Fri Jun 29 2018 14:46:03 GMT+0800 (CST) by ConfigServer:Balancer
      Failed balancer rounds in last 5 attempts: 0
      Migration Results for the last 24 hours:
          5 : Success
  databases:
      { "_id" : "push", "primary" : "shard1", "partitioned" : true }
          push.user
              shard key: { "name" : 1 }
              unique: false
              balancing: true
              chunks:
                  shard1 6
                  shard2 7
              { "name" : { "$minKey" : 1 } } -->> { "name" : "user-10" } on : shard1 Timestamp(5, 0)
              { "name" : "user-10" } -->> { "name" : "user-1142445" } on : shard1 Timestamp(6, 0)
              { "name" : "user-1142445" } -->> { "name" : "user-1284894" } on : shard2 Timestamp(6, 1)
              { "name" : "user-1284894" } -->> { "name" : "user-1509894" } on : shard2 Timestamp(5, 2)
              { "name" : "user-1509894" } -->> { "name" : "user-1734895" } on : shard2 Timestamp(5, 3)
              { "name" : "user-1734895" } -->> { "name" : "user-191792" } on : shard2 Timestamp(5, 4)
              { "name" : "user-191792" } -->> { "name" : "user-217119" } on : shard2 Timestamp(4, 7)
              { "name" : "user-217119" } -->> { "name" : "user-245186" } on : shard2 Timestamp(4, 4)
              { "name" : "user-245186" } -->> { "name" : "user-390373" } on : shard1 Timestamp(4, 1)
              { "name" : "user-390373" } -->> { "name" : "user-5636" } on : shard1 Timestamp(3, 3)
              { "name" : "user-5636" } -->> { "name" : "user-81307" } on : shard1 Timestamp(3, 4)
              { "name" : "user-81307" } -->> { "name" : "user-9" } on : shard1 Timestamp(2, 4)
              { "name" : "user-9" } -->> { "name" : { "$maxKey" : 1 } } on : shard2 Timestamp(2, 0)

Here two million documents were inserted through the cluster. The chunk distribution shows that both shards, shard1 and shard2, hold data.
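The shard key `name` is a string, so chunk boundaries follow string order rather than numeric order. That is why the ranges above run from `"user-10"` to `"user-1142445"` and the last boundary is `"user-9"`. Sorting a few of those values in the C locale, which compares bytes, reproduces the order. This assumes that MongoDB's default (simple binary) string comparison matches byte order for these ASCII keys; `sort_keys` is a hypothetical name.

```shell
#!/bin/sh
# Order shard-key strings byte by byte, as the C locale does
# (assumed to match MongoDB's default string comparison for ASCII keys).
sort_keys() {
    LC_ALL=C sort
}

# Numerically user-9 would come first; byte-wise it comes last.
printf '%s\n' user-9 user-10 user-81307 user-1142445 user-191792 user-5636 | sort_keys
```

This prints user-10, user-1142445, user-191792, user-5636, user-81307 and user-9, one per line, the same relative order as the chunk boundaries in the `sh.status()` output.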

Verify the data distribution on the shard1 shard:

shard1:PRIMARY> show dbs;
admin 0.000GB
local 0.046GB
push 0.032GB
shard1:PRIMARY> use push
switched to db push
shard1:PRIMARY> db.user.count()
885844

Verify the data distribution on the shard2 shard:

shard2:PRIMARY> show dbs;
admin 0.000GB
local 0.055GB
push 0.042GB
shard2:PRIMARY> use push
switched to db push
shard2:PRIMARY> db.user.count()
1114156
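As a quick sanity check, the two per-shard counts should add up to the 2,000,000 inserted documents. Note that `count()` run directly on a shard may also include orphaned documents left by an unfinished chunk migration, so this is a plausibility check rather than proof. The numbers below are the ones reported above.

```shell
#!/bin/sh
# Per-shard counts reported by db.user.count() on each shard primary.
shard1=885844
shard2=1114156
total=$((shard1 + shard2))
echo "total=$total"
# Each shard's share of the documents, to one decimal place.
awk -v a="$shard1" -v b="$shard2" -v t="$total" \
    'BEGIN { printf "shard1 %.1f%% shard2 %.1f%%\n", 100 * a / t, 100 * b / t }'
```

It prints `total=2000000` followed by `shard1 44.3% shard2 55.7%`, so the balancer has spread the data roughly 44/56 across the two shards.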