FSRCNN learning resources (Fast Super-Resolution Convolutional Neural Network)


Reference:

https://arxiv.org/pdf/1608.00367v1.pdf Accelerating the Super-Resolution Convolutional Neural Network (ECCV 2016)

1. Put the FSRCNN folder under Caffe/examples/ (so that it becomes examples/FSRCNN/).

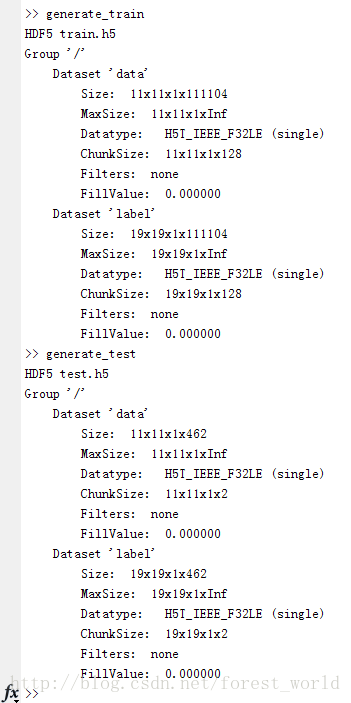

2. Run generate_train.m and generate_test.m in MATLAB to build the HDF5 training and test sets. generate_train.m:

```matlab
clear; close all;

%% settings
folder = 'Train/General-100';
savepath = 'train.h5';   % Caffe expects it in examples/FSRCNN/
size_input = 11;         % 7 in the paper, plus 4 pixels of padding lost to conv1 (5x5, pad 0)
size_label = 19;         % (11 - 4) * 3 - (3 - 1)
scale = 3;
stride = 4;

%% initialization
data = zeros(size_input, size_input, 1, 1);
label = zeros(size_label, size_label, 1, 1);
padding = abs(size_input - size_label) / 2;   % not used below
count = 0;

%% generate data
filepaths = dir(fullfile(folder, '*.bmp'));

for i = 1 : length(filepaths)
    image = imread(fullfile(folder, filepaths(i).name));
    if size(image, 3) == 3
        image = rgb2ycbcr(image);          % train on the luminance (Y) channel only
    end
    image = im2double(image(:, :, 1));

    % modcrop is a helper shipped with the training code (not a MATLAB built-in):
    % it crops the image so that height and width are divisible by scale
    im_label = modcrop(image, scale);
    im_input = imresize(im_label, 1/scale, 'bicubic');
    [hei, wid] = size(im_input);

    for x = 1 : stride : hei - size_input + 1
        for y = 1 : stride : wid - size_input + 1
            % top-left corner of the HR label, aligned with the centre of the LR patch
            locx = scale * (x + floor((size_input - 1)/2)) - floor((size_label + scale)/2 - 1);
            locy = scale * (y + floor((size_input - 1)/2)) - floor((size_label + scale)/2 - 1);

            subim_input = im_input(x : size_input + x - 1, y : size_input + y - 1);
            subim_label = im_label(locx : size_label + locx - 1, locy : size_label + locy - 1);

            count = count + 1;
            data(:, :, 1, count) = subim_input;
            label(:, :, 1, count) = subim_label;
        end
    end
end

% shuffle the patches
order = randperm(count);
data = data(:, :, 1, order);
label = label(:, :, 1, order);

%% writing to HDF5
% store2hdf5 comes from Caffe's matlab/hdf5creation folder.
% Patches after the last full chunk of 128 are dropped.
chunksz = 128;
created_flag = false;
totalct = 0;

for batchno = 1 : floor(count/chunksz)
    last_read = (batchno - 1) * chunksz;
    batchdata = data(:, :, 1, last_read+1 : last_read+chunksz);
    batchlabs = label(:, :, 1, last_read+1 : last_read+chunksz);

    startloc = struct('dat', [1, 1, 1, totalct+1], 'lab', [1, 1, 1, totalct+1]);
    curr_dat_sz = store2hdf5(savepath, batchdata, batchlabs, ~created_flag, startloc, chunksz);
    created_flag = true;
    totalct = curr_dat_sz(end);
end

h5disp(savepath);
```
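The values 11 and 19 in the settings follow from the layer geometry in FSRCNN_net.prototxt (conv1: 5×5, pad 0; deconvolution conv3: kernel 9, stride 3, pad 4). The following is a minimal sketch. It assumes Caffe's usual output-size formulas for convolution and deconvolution, recomputes size_label, and checks that locx centres the HR label on the LR patch:

```matlab
% Sketch: recompute size_label from the FSRCNN layer geometry.
% Assumed Caffe output-size rules:
%   convolution:   out = floor((in + 2*pad - k) / stride) + 1
%   deconvolution: out = (in - 1) * stride + k - 2*pad
size_input = 11;
scale = 3;

conv1_out = floor((size_input + 2*0 - 5) / 1) + 1;   % 5x5, pad 0
% conv2 (1x1), conv22..conv25 (3x3, pad 1) and conv26 (1x1) keep 7x7
deconv_out = (conv1_out - 1) * scale + 9 - 2*4;       % kernel 9, stride 3, pad 4
fprintf('conv1: %d, deconv: %d\n', conv1_out, deconv_out);   % conv1: 7, deconv: 19

% Label position for the LR patch starting at x (same formula as generate_train.m)
size_label = deconv_out;
x = 1;
locx = scale * (x + floor((size_input - 1)/2)) - floor((size_label + scale)/2 - 1);
center_lr = x + floor((size_input - 1)/2);      % centre row of the LR patch
center_hr = locx + floor((size_label - 1)/2);   % centre row of the HR label
% LR row 6 covers HR rows 16..18, whose middle is row 17
fprintf('locx = %d, LR centre %d -> HR centre %d\n', locx, center_lr, center_hr);
% prints: locx = 8, LR centre 6 -> HR centre 17
```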

3. From the Caffe root, run `./build/tools/caffe train --solver examples/FSRCNN/FSRCNN_solver.prototxt`

Problem: training aborts while setting up the HDF5Data layer:

```text
I1208 11:11:17.523556 2658 layer_factory.hpp:77] Creating layer data
I1208 11:11:17.524196 2658 net.cpp:100] Creating Layer data
I1208 11:11:17.524271 2658 net.cpp:408] data -> data
I1208 11:11:17.524309 2658 net.cpp:408] data -> label
I1208 11:11:17.524338 2658 hdf5_data_layer.cpp:79] Loading list of HDF5 filenames from: examples/FSRCNN/train.txt
I1208 11:11:17.524843 2658 hdf5_data_layer.cpp:93] Number of HDF5 files: 1
HDF5-DIAG: Error detected in HDF5 (1.8.15-patch1) thread 140052527355712:
  #000: ../../../src/H5F.c line 604 in H5Fopen(): unable to open file
    major: File accessibilty
    minor: Unable to open file
  #001: ../../../src/H5Fint.c line 990 in H5F_open(): unable to open file: time = Thu Dec 8 11:11:17 2016
, name = 'examples/FSRCNN/train.h5', tent_flags = 0
    major: File accessibilty
    minor: Unable to open file
  #002: ../../../src/H5FD.c line 993 in H5FD_open(): open failed
    major: Virtual File Layer
    minor: Unable to initialize object
  #003: ../../../src/H5FDsec2.c line 343 in H5FD_sec2_open(): unable to open file: name = 'examples/FSRCNN/train.h5', errno = 2, error message = 'No such file or directory', flags = 0, o_flags = 0
    major: File accessibilty
    minor: Unable to open file
F1208 11:11:17.579452 2658 hdf5_data_layer.cpp:31] Failed opening HDF5 file: examples/FSRCNN/train.h5
*** Check failure stack trace: ***
    @ 0x7f60848805cd google::LogMessage::Fail()
    @ 0x7f6084882433 google::LogMessage::SendToLog()
    @ 0x7f608488015b google::LogMessage::Flush()
    @ 0x7f6084882e1e google::LogMessageFatal::~LogMessageFatal()
    @ 0x7f6084cac2e3 caffe::HDF5DataLayer<>::LoadHDF5FileData()
    @ 0x7f6084ca7b27 caffe::HDF5DataLayer<>::LayerSetUp()
    @ 0x7f6084db90af caffe::Net<>::Init()
    @ 0x7f6084dba931 caffe::Net<>::Net()
    @ 0x7f6084d978ea caffe::Solver<>::InitTrainNet()
    @ 0x7f6084d98c67 caffe::Solver<>::Init()
    @ 0x7f6084d9900a caffe::Solver<>::Solver()
    @ 0x7f6084c18fd3 caffe::Creator_SGDSolver<>()
    @ 0x40a569 train()
    @ 0x407060 main
    @ 0x7f60837f0ac0 __libc_start_main
    @ 0x407889 _start
    @ (nil) (unknown)
Aborted (core dumped)
```

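Solution:

Caffe read examples/FSRCNN/train.txt, but the file it lists, examples/FSRCNN/train.h5, did not exist (errno = 2, 'No such file or directory'). Generate train.h5 and test.h5 with the scripts from step 2 (here with MATLAB R2016a) and put them in examples/FSRCNN/.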
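The HDF5 source lists can be checked from MATLAB before launching caffe. The following is a minimal sketch with two assumptions. First, MATLAB's current folder is the Caffe root. The listed paths are relative, so they resolve against the directory caffe is started from. Second, the TEST-phase list is called test.txt. This is an assumed name, because the log above only shows train.txt.

```matlab
% Sketch: report HDF5 files named in the HDF5Data source lists that do not exist.
% Assumes the current folder is the Caffe root.
% test.txt is an assumed name for the TEST-phase list.
listfiles = {fullfile('examples', 'FSRCNN', 'train.txt'), ...
             fullfile('examples', 'FSRCNN', 'test.txt')};
missing = {};
for k = 1:numel(listfiles)
    if exist(listfiles{k}, 'file') ~= 2
        missing{end+1} = listfiles{k};      % the list file itself is absent
        continue;
    end
    fid = fopen(listfiles{k}, 'r');
    entry = fgetl(fid);
    while ischar(entry)
        entry = strtrim(entry);
        if ~isempty(entry) && exist(entry, 'file') ~= 2
            missing{end+1} = entry;         % listed .h5 file not found
        end
        entry = fgetl(fid);
    end
    fclose(fid);
end
if isempty(missing)
    disp('All HDF5 sources found.');
else
    fprintf('Missing: %s\n', missing{:});
end
```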

Training output:

```text
~/caffe$ ./build/tools/caffe train --solver examples/FSRCNN/FSRCNN_solver.prototxt
I1208 19:20:04.535562 3428 caffe.cpp:210] Use CPU.
I1208 19:20:04.536381 3428 solver.cpp:48] Initializing solver from parameters:
test_iter: 231
test_interval: 5000
base_lr: 0.001
display: 100
max_iter: 10000000
lr_policy: "fixed"
momentum: 0.9
weight_decay: 0
snapshot: 100000
snapshot_prefix: "examples/FSRCNN/N-56-12-4"
solver_mode: CPU
net: "examples/FSRCNN/FSRCNN_net.prototxt"
train_state {
  level: 0
  stage: ""
}
```

Network definition echoed at startup:

```text
I1208 19:20:04.536700 3428 solver.cpp:91] Creating training net from net file: examples/FSRCNN/FSRCNN_net.prototxt
I1208 19:20:04.537152 3428 net.cpp:322] The NetState phase (0) differed from the phase (1) specified by a rule in layer data
I1208 19:20:04.537380 3428 net.cpp:58] Initializing net from parameters:
name: "SR_test"
state {
  phase: TRAIN
  level: 0
  stage: ""
}
layer {
  name: "data"
  type: "HDF5Data"
  top: "data"
  top: "label"
  include {
    phase: TRAIN
  }
  hdf5_data_param {
    source: "examples/FSRCNN/train.txt"
    batch_size: 128
  }
}
layer {
  name: "conv1"
  type: "Convolution"
  bottom: "data"
  top: "conv1"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 0.1
  }
  convolution_param {
    num_output: 56
    pad: 0
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.0378
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
layer {
  name: "relu1"
  type: "PReLU"
  bottom: "conv1"
  top: "conv1"
  prelu_param {
    channel_shared: true
  }
}
layer {
  name: "conv2"
  type: "Convolution"
  bottom: "conv1"
  top: "conv2"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 0.1
  }
  convolution_param {
    num_output: 12
    pad: 0
    kernel_size: 1
    group: 1
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.3536
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
layer {
  name: "relu2"
  type: "PReLU"
  bottom: "conv2"
  top: "conv2"
  prelu_param {
    channel_shared: true
  }
}
layer {
  name: "conv22"
  type: "Convolution"
  bottom: "conv2"
  top: "conv22"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 0.1
  }
  convolution_param {
    num_output: 12
    pad: 1
    kernel_size: 3
    group: 1
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.1179
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
layer {
  name: "relu22"
  type: "PReLU"
  bottom: "conv22"
  top: "conv22"
  prelu_param {
    channel_shared: true
  }
}
layer {
  name: "conv23"
  type: "Convolution"
  bottom: "conv22"
  top: "conv23"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 0.1
  }
  convolution_param {
    num_output: 12
    pad: 1
    kernel_size: 3
    group: 1
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.1179
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
layer {
  name: "relu23"
  type: "PReLU"
  bottom: "conv23"
  top: "conv23"
  prelu_param {
    channel_shared: true
  }
}
layer {
  name: "conv24"
  type: "Convolution"
  bottom: "conv23"
  top: "conv24"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 0.1
  }
  convolution_param {
    num_output: 12
    pad: 1
    kernel_size: 3
    group: 1
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.1179
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
layer {
  name: "relu24"
  type: "PReLU"
  bottom: "conv24"
  top: "conv24"
  prelu_param {
    channel_shared: true
  }
}
layer {
  name: "conv25"
  type: "Convolution"
  bottom: "conv24"
  top: "conv25"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 0.1
  }
  convolution_param {
    num_output: 12
    pad: 1
    kernel_size: 3
    group: 1
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.1179
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
layer {
  name: "relu25"
  type: "PReLU"
  bottom: "conv25"
  top: "conv25"
  prelu_param {
    channel_shared: true
  }
}
layer {
  name: "conv26"
  type: "Convolution"
  bottom: "conv25"
  top: "conv26"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 0.1
  }
  convolution_param {
    num_output: 56
    pad: 0
    kernel_size: 1
    group: 1
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.189
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
layer {
  name: "relu26"
  type: "PReLU"
  bottom: "conv26"
  top: "conv26"
  prelu_param {
    channel_shared: true
  }
}
layer {
  name: "conv3"
  type: "Deconvolution"
  bottom: "conv26"
  top: "conv3"
  param {
    lr_mult: 0.1
  }
  param {
    lr_mult: 0.1
  }
  convolution_param {
    num_output: 1
    pad: 4
    kernel_size: 9
    stride: 3
    weight_filler {
      type: "gaussian"
      std: 0.001
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
layer {
  name: "loss"
  type: "EuclideanLoss"
  bottom: "conv3"
  bottom: "label"
  top: "loss"
}
```
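The weight_filler std values in this net look like He-style initialization. The following sketch assumes the fan-out rule std = sqrt(2 / (k^2 · num_output)). That rule is an inference from the numbers and is not stated in the prototxt. The sketch tabulates the rule against the prototxt values. The deconvolution layer conv3 is left out because it uses a separate small std of 0.001.

```matlab
% Sketch: compare prototxt weight_filler std with an assumed He-style
% fan-out rule, std = sqrt(2 / (kernel_size^2 * num_output)).
names  = {'conv1', 'conv2', 'conv22-25', 'conv26'};
k      = [5, 1, 3, 1];                     % kernel_size
n_out  = [56, 12, 12, 56];                 % num_output
proto  = [0.0378, 0.3536, 0.1179, 0.189];  % std in FSRCNN_net.prototxt
he_std = sqrt(2 ./ (k.^2 .* n_out));
for i = 1:numel(names)
    fprintf('%-10s prototxt %.4f  rule %.4f\n', names{i}, proto(i), he_std(i));
end
```

Under this assumption, conv1 (0.0378) and conv26 (0.1890) match. conv2 and conv22 to conv25 do not match: the rule gives 0.4082 and 0.1361. Their prototxt values equal sqrt(2/16) ≈ 0.3536 and sqrt(2/144) ≈ 0.1179, which is the same rule with 16 rather than 12 output channels.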

Layer-by-layer setup:

```text
I1208 19:20:04.539083 3428 layer_factory.hpp:77] Creating layer data
I1208 19:20:04.539145 3428 net.cpp:100] Creating Layer data
I1208 19:20:04.539166 3428 net.cpp:408] data -> data
I1208 19:20:04.539196 3428 net.cpp:408] data -> label
I1208 19:20:04.539242 3428 hdf5_data_layer.cpp:79] Loading list of HDF5 filenames from: examples/FSRCNN/train.txt
I1208 19:20:04.539290 3428 hdf5_data_layer.cpp:93] Number of HDF5 files: 1
I1208 19:20:04.540756 3428 hdf5.cpp:32] Datatype class: H5T_FLOAT
I1208 19:20:04.676849 3428 net.cpp:150] Setting up data
I1208 19:20:04.676926 3428 net.cpp:157] Top shape: 128 1 11 11 (15488)
I1208 19:20:04.676942 3428 net.cpp:157] Top shape: 128 1 19 19 (46208)
I1208 19:20:04.676959 3428 net.cpp:165] Memory required for data: 246784
I1208 19:20:04.676972 3428 layer_factory.hpp:77] Creating layer conv1
I1208 19:20:04.677001 3428 net.cpp:100] Creating Layer conv1
I1208 19:20:04.677034 3428 net.cpp:434] conv1 <- data
I1208 19:20:04.677062 3428 net.cpp:408] conv1 -> conv1
I1208 19:20:04.677525 3428 net.cpp:150] Setting up conv1
I1208 19:20:04.677563 3428 net.cpp:157] Top shape: 128 56 7 7 (351232)
I1208 19:20:04.677573 3428 net.cpp:165] Memory required for data: 1651712
I1208 19:20:04.677599 3428 layer_factory.hpp:77] Creating layer relu1
I1208 19:20:04.677630 3428 net.cpp:100] Creating Layer relu1
I1208 19:20:04.677644 3428 net.cpp:434] relu1 <- conv1
I1208 19:20:04.677654 3428 net.cpp:395] relu1 -> conv1 (in-place)
I1208 19:20:04.677721 3428 net.cpp:150] Setting up relu1
I1208 19:20:04.677753 3428 net.cpp:157] Top shape: 128 56 7 7 (351232)
I1208 19:20:04.677762 3428 net.cpp:165] Memory required for data: 3056640
I1208 19:20:04.677786 3428 layer_factory.hpp:77] Creating layer conv2
I1208 19:20:04.677816 3428 net.cpp:100] Creating Layer conv2
I1208 19:20:04.677826 3428 net.cpp:434] conv2 <- conv1
I1208 19:20:04.677839 3428 net.cpp:408] conv2 -> conv2
I1208 19:20:04.677873 3428 net.cpp:150] Setting up conv2
I1208 19:20:04.677903 3428 net.cpp:157] Top shape: 128 12 7 7 (75264)
I1208 19:20:04.677914 3428 net.cpp:165] Memory required for data: 3357696
I1208 19:20:04.677932 3428 layer_factory.hpp:77] Creating layer relu2
I1208 19:20:04.677942 3428 net.cpp:100] Creating Layer relu2
I1208 19:20:04.677953 3428 net.cpp:434] relu2 <- conv2
I1208 19:20:04.677961 3428 net.cpp:395] relu2 -> conv2 (in-place)
I1208 19:20:04.677976 3428 net.cpp:150] Setting up relu2
I1208 19:20:04.678004 3428 net.cpp:157] Top shape: 128 12 7 7 (75264)
I1208 19:20:04.678015 3428 net.cpp:165] Memory required for data: 3658752
I1208 19:20:04.678025 3428 layer_factory.hpp:77] Creating layer conv22
I1208 19:20:04.678041 3428 net.cpp:100] Creating Layer conv22
I1208 19:20:04.678050 3428 net.cpp:434] conv22 <- conv2
I1208 19:20:04.678061 3428 net.cpp:408] conv22 -> conv22
I1208 19:20:04.678098 3428 net.cpp:150] Setting up conv22
I1208 19:20:04.678128 3428 net.cpp:157] Top shape: 128 12 7 7 (75264)
I1208 19:20:04.678139 3428 net.cpp:165] Memory required for data: 3959808
I1208 19:20:04.678153 3428 layer_factory.hpp:77] Creating layer relu22
I1208 19:20:04.678163 3428 net.cpp:100] Creating Layer relu22
I1208 19:20:04.678171 3428 net.cpp:434] relu22 <- conv22
I1208 19:20:04.678184 3428 net.cpp:395] relu22 -> conv22 (in-place)
I1208 19:20:04.678198 3428 net.cpp:150] Setting up relu22
I1208 19:20:04.678400 3428 net.cpp:157] Top shape: 128 12 7 7 (75264)
```
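The "Top shape" and "Memory required for data" lines can be reproduced by hand. This sketch makes two assumptions, both implied by the logged totals. First, blobs are 4-byte floats. Second, Caffe adds the size of every top, including the in-place PReLU outputs.

```matlab
% Sketch: reproduce the logged top-blob counts and cumulative memory.
% Assumes 4 bytes per element and that in-place tops are counted too.
N = 128;                              % batch_size
tops = {'data',   [N 1 11 11];
        'label',  [N 1 19 19];
        'conv1',  [N 56 7 7];
        'relu1',  [N 56 7 7];
        'conv2',  [N 12 7 7];
        'relu2',  [N 12 7 7];
        'conv22', [N 12 7 7];
        'relu22', [N 12 7 7]};
mem = 0;
for i = 1:size(tops, 1)
    cnt = prod(tops{i, 2});           % number of elements in this top
    mem = mem + cnt * 4;              % running total in bytes
    fprintf('%-7s %s (%d)  cumulative bytes: %d\n', tops{i, 1}, ...
            mat2str(tops{i, 2}), cnt, mem);
end
```

The cumulative totals after label, conv1, relu1, conv2, relu2 and conv22 are 246784, 1651712, 3056640, 3357696, 3658752 and 3959808. These are the values in the log.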

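data_aug.m, which augments General-100 by rotating each image by 0, 90, 180 and 270 degrees and downscaling each rotation by 0.6, 0.7, 0.8 and 0.9: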

```matlab
clear;

%% To do data augmentation
folder = 'Train/General-100';
savepath = 'Train/General-100-aug/';
if ~exist(savepath, 'dir')
    mkdir(savepath);                  % imwrite fails if the folder is missing
end

filepaths = dir(fullfile(folder, '*.bmp'));

for i = 1 : length(filepaths)
    filename = filepaths(i).name;
    [~, im_name] = fileparts(filename);
    image = imread(fullfile(folder, filename));

    for angle = 0 : 90 : 270
        % rot90 takes a number of 90-degree turns, not an angle in degrees
        im_rot = rot90(image, angle / 90);
        imwrite(im_rot, [savepath im_name '_rot' num2str(angle) '.bmp']);

        for s = 6 : 9
            % downscale by 0.6, 0.7, 0.8, 0.9 (integer loop avoids float-range issues)
            im_down = imresize(im_rot, s / 10, 'bicubic');
            imwrite(im_down, [savepath im_name '_rot' num2str(angle) '_s' num2str(s) '.bmp']);
        end
    end
end
```
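MATLAB's rot90(A, k) rotates by k quarter turns. Passing an angle in degrees as k therefore gives only 0 and 180 degree rotations, and each of them appears twice. The check below confirms this. It also counts the files written per source image, assuming General-100's 100 images.

```matlab
% Sketch: rot90's second argument counts quarter turns, not degrees.
A = [1 2; 3 4];
same_as_180 = isequal(rot90(A, 90), rot90(A, 2));   % 90 quarter turns = 180 degrees
same_as_0   = isequal(rot90(A, 180), A);            % 180 quarter turns = no rotation

% Images written per source image: each rotation plus its 4 downscales
n_rot     = numel(0:90:270);          % 4 rotations
n_scale   = numel(6:9);               % scales 0.6 to 0.9
per_image = n_rot * (1 + n_scale);
fprintf('%d images per source, %d for 100 sources\n', per_image, 100 * per_image);
% prints: 20 images per source, 2000 for 100 sources
```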

Code download:

http://download.csdn.net/detail/forest_world/9705982

References:

http://blog.csdn.net/zuolunqiang/article/details/52411673 Super-resolution technical diary: FSRCNN