Experiment Notes: Octave Conv (dilation_CONV)

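This post is part of my series of octave layer experiments. Here, dilated (atrous) convolution replaces pooling. The average pooling of the standard OctConv, and the matching upsampling, are disabled, so the high- and low-frequency branches stay at the same resolution. Dilated convolutions on the paths into the low-frequency branch enlarge the receptive field instead.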
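Replacing pooling with a dilated convolution changes the feature-map size, and the usual convolution output-size formula shows how. This is a plain-Python sketch, so no PyTorch is needed. `conv_out_size` is a hypothetical helper implementing the floor formula from the `nn.Conv2d` / `nn.AvgPool2d` documentation, and the 32-pixel input is only an example.

```python
def conv_out_size(n, kernel_size, stride=1, padding=0, dilation=1):
    """Spatial output size of a conv/pool layer along one axis (floor formula)."""
    return (n + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


n = 32  # example input height/width

# 2x2 average pooling with stride 2, like h2g_pool in the original OctConv
pooled = conv_out_size(n, kernel_size=2, stride=2)

# 3x3 convolution with dilation 4 and "same" padding 4, like dilation_CONV
dilated = conv_out_size(n, kernel_size=3, stride=1, padding=4, dilation=4)

print(pooled, dilated)  # 16 32
```

The dilated path keeps the full resolution. That is why the `upsample` calls of the original OctConv can be dropped before the two branches are added together.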

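First activate the virtual environment: `source activate pytorch`

Run the experiment: `python train.py -opt options/train/train_sr.json`

Monitor training with TensorBoard: `tensorboard --logdir tb_logger/ --port 6008`

Then open http://172.20.36.203:6008/#scalars in a browser.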

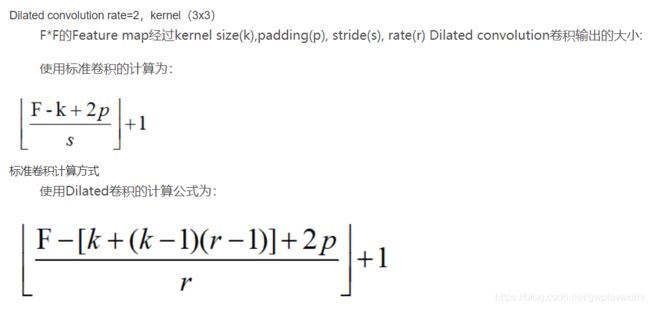

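For background on dilated convolution, see the blog post 《各种卷积层的理解(深度可分离卷积、分组卷积、扩张卷积、反卷积)》 ("Understanding various convolution layers: depthwise separable, grouped, dilated and transposed convolution").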
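A dilated k×k kernel spans k + (k−1)(d−1) pixels per axis. "Same" padding at stride 1 is therefore (span − 1) / 2. The code below calls `get_valid_padding(kernel_size, dilation)`, whose body this post does not show. The function here is a sketch that assumes it follows this rule, which I believe is what the helper of the same name in the BasicSR/ESRGAN code base does.

```python
def effective_kernel_size(kernel_size, dilation):
    """Extent of a dilated kernel along one axis."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def get_valid_padding(kernel_size, dilation):
    """'Same' padding for a stride-1 conv with an odd dilated kernel (assumed behaviour)."""
    return (effective_kernel_size(kernel_size, dilation) - 1) // 2


for d in (1, 2, 4):
    print(d, effective_kernel_size(3, d), get_valid_padding(3, d))
# 1 3 1
# 2 5 2
# 4 9 4
```

So `padding1` in the listings below is 2 for dilation 2 and 4 for dilation 4, and the output keeps the input size.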

The code changes are as follows:

```python
import torch
import torch.nn as nn

# get_valid_padding, act and norm are helper functions of the surrounding
# block.py module (BasicSR/ESRGAN code base); they are not repeated here.

####################
# modified octave
# Block for OctConv
####################


class M_NP_OctaveConv(nn.Module):
    def __init__(self, in_nc, out_nc, kernel_size, alpha=0.5, stride=1, dilation=1, groups=1,
                 bias=True, pad_type='zero', norm_type=None, act_type='prelu', mode='CNA'):
        super(M_NP_OctaveConv, self).__init__()
        assert mode in ['CNA', 'NAC', 'CNAC'], 'Wrong conv mode [{:s}]'.format(mode)
        padding = get_valid_padding(kernel_size, dilation) if pad_type == 'zero' else 0
        # "same" padding for the dilation-4 convolution below
        padding1 = get_valid_padding(kernel_size, 4) if pad_type == 'zero' else 0
        # Down/up-sampling of the original OctConv, disabled in this experiment:
        # self.h2g_pool = nn.AvgPool2d(kernel_size=(2, 2), stride=2)
        # self.h2g_pool2 = nn.AvgPool2d(kernel_size=(2, 2), stride=2)
        # self.upsample = nn.Upsample(scale_factor=2, mode='nearest')
        # self.upsample = nn.Upsample(scale_factor=4, mode='nearest')  # double pool
        self.stride = stride
        # Dilated conv (dilation=4) on the high-frequency input, used instead of h2g_pool
        self.dilation_CONV = nn.Conv2d(in_nc - int(alpha * in_nc), in_nc - int(alpha * in_nc),
                                       kernel_size, 1, padding1, 4, groups, bias)
        self.l2l = nn.Conv2d(int(alpha * in_nc), int(alpha * out_nc),
                             kernel_size, 1, padding, dilation, groups, bias)
        self.l2h = nn.Conv2d(int(alpha * in_nc), out_nc - int(alpha * out_nc),
                             kernel_size, 1, padding, dilation, groups, bias)
        self.h2l = nn.Conv2d(in_nc - int(alpha * in_nc), int(alpha * out_nc),
                             kernel_size, 1, padding, dilation, groups, bias)
        self.h2h = nn.Conv2d(in_nc - int(alpha * in_nc), out_nc - int(alpha * out_nc),
                             kernel_size, 1, padding, dilation, groups, bias)
        self.a = act(act_type) if act_type else None
        # norm widths match the conv outputs (high branch: out_nc - int(alpha * out_nc))
        self.n_h = norm(norm_type, out_nc - int(alpha * out_nc)) if norm_type else None
        self.n_l = norm(norm_type, int(alpha * out_nc)) if norm_type else None

    def forward(self, x):
        X_h, X_l = x
        # if self.stride == 2:
        #     X_h, X_l = self.h2g_pool(X_h), self.h2g_pool(X_l)
        X_h2h = self.h2h(X_h)
        # X_l2h = self.upsample(self.l2h(X_l))
        X_l2h = self.l2h(X_l)  # no upsampling: both branches keep the input resolution
        X_l2l = self.l2l(X_l)
        # X_h2l = self.h2l(self.h2g_pool(X_h))
        # X_h2l = self.h2l(self.h2g_pool2(self.h2g_pool(X_h)))
        X_h1 = self.dilation_CONV(X_h)  # dilated conv instead of average pooling
        X_h2l = self.h2l(X_h1)
        X_h = X_l2h + X_h2h
        X_l = X_h2l + X_l2l
        if self.n_h is not None and self.n_l is not None:
            X_h = self.n_h(X_h)
            X_l = self.n_l(X_l)
        if self.a is not None:
            X_h = self.a(X_h)
            X_l = self.a(X_l)
        return X_h, X_l


class M_NP_FirstOctaveConv(nn.Module):
    def __init__(self, in_nc, out_nc, kernel_size, alpha=0.5, stride=1, dilation=1, groups=1,
                 bias=True, pad_type='zero', norm_type=None, act_type='prelu', mode='CNA'):
        super(M_NP_FirstOctaveConv, self).__init__()
        assert mode in ['CNA', 'NAC', 'CNAC'], 'Wrong conv mode [{:s}]'.format(mode)
        padding = get_valid_padding(kernel_size, dilation) if pad_type == 'zero' else 0
        # self.h2g_pool = nn.AvgPool2d(kernel_size=(2, 2), stride=2)
        # self.h2g_pool2 = nn.AvgPool2d(kernel_size=(2, 2), stride=2)
        self.stride = stride
        # low frequency
        self.h2l = nn.Conv2d(in_nc, int(alpha * out_nc),
                             kernel_size, 1, padding, dilation, groups, bias)
        # high frequency
        self.h2h = nn.Conv2d(in_nc, out_nc - int(alpha * out_nc),
                             kernel_size, 1, padding, dilation, groups, bias)
        self.a = act(act_type) if act_type else None
        self.n_h = norm(norm_type, out_nc - int(alpha * out_nc)) if norm_type else None
        self.n_l = norm(norm_type, int(alpha * out_nc)) if norm_type else None

    def forward(self, x):
        # if self.stride == 2:
        #     x = self.h2g_pool(x)
        X_h = self.h2h(x)
        # X_l = self.h2l(self.h2g_pool(x))
        X_l = self.h2l(x)  # without pool
        # X_l = self.h2l(self.h2g_pool2(self.h2g_pool(x)))  # double pool
        if self.n_h is not None and self.n_l is not None:  # batch norm
            X_h = self.n_h(X_h)
            X_l = self.n_l(X_l)
        if self.a is not None:  # activation layer
            X_h = self.a(X_h)
            X_l = self.a(X_l)
        return X_h, X_l


class M_NP_LastOctaveConv(nn.Module):
    def __init__(self, in_nc, out_nc, kernel_size, alpha=0.5, stride=1, dilation=1, groups=1,
                 bias=True, pad_type='zero', norm_type=None, act_type='prelu', mode='CNA'):
        super(M_NP_LastOctaveConv, self).__init__()
        assert mode in ['CNA', 'NAC', 'CNAC'], 'Wrong conv mode [{:s}]'.format(mode)
        padding = get_valid_padding(kernel_size, dilation) if pad_type == 'zero' else 0
        # self.h2g_pool = nn.AvgPool2d(kernel_size=(2, 2), stride=2)
        # self.upsample = nn.Upsample(scale_factor=2, mode='nearest')
        self.stride = stride
        self.l2h = nn.Conv2d(int(alpha * in_nc), out_nc,
                             kernel_size, 1, padding, dilation, groups, bias)
        self.h2h = nn.Conv2d(in_nc - int(alpha * in_nc), out_nc,
                             kernel_size, 1, padding, dilation, groups, bias)
        self.a = act(act_type) if act_type else None
        self.n_h = norm(norm_type, out_nc) if norm_type else None

    def forward(self, x):
        X_h, X_l = x
        X_h2h = self.h2h(X_h)
        # X_l2h = self.upsample(self.l2h(X_l))
        X_l2h = self.l2h(X_l)
        X_h = X_h2h + X_l2h  # merge both branches into a single output
        if self.n_h is not None:
            X_h = self.n_h(X_h)
        if self.a is not None:
            X_h = self.a(X_h)
        return X_h


class M_NP_octave_ResidualDenseBlockTiny_4C(nn.Module):
    '''
    Residual Dense Block
    style: 4 convs
    The core module of paper: (Residual Dense Network for Image Super-Resolution, CVPR 18)
    '''

    def __init__(self, nc, kernel_size=3, gc=16, alpha=0.5, stride=1, bias=True, pad_type='zero',
                 norm_type=None, act_type='leakyrelu', mode='CNA'):
        super(M_NP_octave_ResidualDenseBlockTiny_4C, self).__init__()
        # gc: growth channel, i.e. intermediate channels
        # Each conv replaces the conv_block(...) of the original (non-octave) RDB.
        self.conv1 = M_NP_OctaveConv(nc, gc, kernel_size, alpha, stride, bias=bias, pad_type=pad_type,
                                     norm_type=norm_type, act_type=act_type, mode=mode)
        self.conv2 = M_NP_OctaveConv(nc + gc, gc, kernel_size, alpha, stride, bias=bias, pad_type=pad_type,
                                     norm_type=norm_type, act_type=act_type, mode=mode)
        self.conv3 = M_NP_OctaveConv(nc + 2 * gc, gc, kernel_size, alpha, stride, bias=bias, pad_type=pad_type,
                                     norm_type=norm_type, act_type=act_type, mode=mode)
        if mode == 'CNA':
            last_act = None
        else:
            last_act = act_type
        # Note: last_act is not used; conv4 keeps act_type, as in this experiment.
        self.conv4 = M_NP_OctaveConv(nc + 3 * gc, nc, kernel_size, alpha, stride, bias=bias, pad_type=pad_type,
                                     norm_type=norm_type, act_type=act_type, mode=mode)

    def forward(self, x):
        # x is a (high, low) tuple; dense connections concatenate each branch separately
        x1 = self.conv1(x)
        x2 = self.conv2((torch.cat((x[0], x1[0]), dim=1),
                         torch.cat((x[1], x1[1]), dim=1)))
        x3 = self.conv3((torch.cat((x[0], x1[0], x2[0]), dim=1),
                         torch.cat((x[1], x1[1], x2[1]), dim=1)))
        x4 = self.conv4((torch.cat((x[0], x1[0], x2[0], x3[0]), dim=1),
                         torch.cat((x[1], x1[1], x2[1], x3[1]), dim=1)))
        # residual scaling by 0.2 on both branches
        return (x[0] + x4[0].mul(0.2), x[1] + x4[1].mul(0.2))


class M_NP_octave_RRDBTiny(nn.Module):
    '''
    Residual in Residual Dense Block
    (ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks)
    '''

    def __init__(self, nc, kernel_size=3, gc=16, stride=1, alpha=0.5, bias=True, pad_type='zero',
                 norm_type=None, act_type='leakyrelu', mode='CNA'):
        super(M_NP_octave_RRDBTiny, self).__init__()
        self.RDB1 = M_NP_octave_ResidualDenseBlockTiny_4C(
            nc=nc, kernel_size=kernel_size, alpha=alpha, gc=gc, stride=stride, bias=bias,
            pad_type=pad_type, norm_type=norm_type, act_type=act_type, mode=mode)
        self.RDB2 = M_NP_octave_ResidualDenseBlockTiny_4C(
            nc=nc, kernel_size=kernel_size, alpha=alpha, gc=gc, stride=stride, bias=bias,
            pad_type=pad_type, norm_type=norm_type, act_type=act_type, mode=mode)

    def forward(self, x):
        out = self.RDB1(x)
        out = self.RDB2(out)
        # residual scaling by 0.2 on both branches
        return (x[0] + out[0].mul(0.2), x[1] + out[1].mul(0.2))
```
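In the dense block, each octave conv receives the high- and low-frequency parts of the earlier outputs, concatenated separately. Its own layers, however, are sized from the total `nc + k*gc` through `int(alpha * c)`. These two only agree when the per-tensor splits add up. The plain-Python check below reproduces that arithmetic; the function names are made up for illustration. With `alpha=0.5` and even channel counts the check always passes. Other settings can make `torch.cat` produce a channel count that the next `nn.Conv2d` does not expect.

```python
def octave_split(channels, alpha):
    """(high, low) channel counts, computed the way M_NP_OctaveConv does."""
    low = int(alpha * channels)
    return channels - low, low


def dense_split_consistent(nc, gc, alpha, n_later_convs=3):
    """True if the concatenated branch widths match what conv2..conv4 are built for."""
    nc_high, nc_low = octave_split(nc, alpha)
    gc_high, gc_low = octave_split(gc, alpha)
    for k in range(1, n_later_convs + 1):
        concatenated = (nc_high + k * gc_high, nc_low + k * gc_low)
        if concatenated != octave_split(nc + k * gc, alpha):
            return False
    return True


print(dense_split_consistent(64, 16, 0.5))   # True
print(dense_split_consistent(10, 10, 0.25))  # False
```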

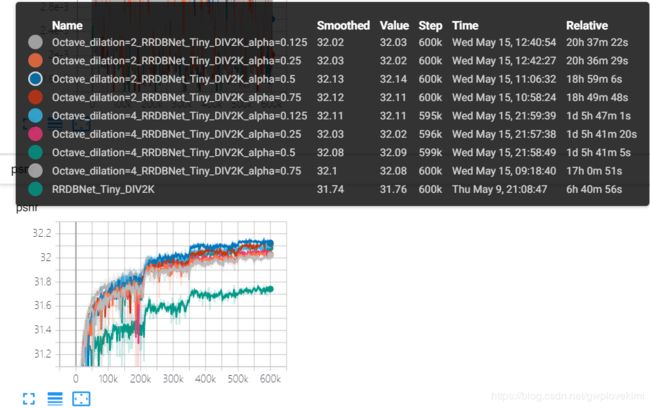

Experimental results:

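Improvement: the separate `dilation_CONV` layer is removed. Instead, the `h2l` convolution itself uses dilation 2 (with matching padding) in both `M_NP_OctaveConv` and `M_NP_FirstOctaveConv`: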

```python
####################
# modified octave
# Block for OctConv
####################


class M_NP_OctaveConv(nn.Module):
    def __init__(self, in_nc, out_nc, kernel_size, alpha=0.5, stride=1, dilation=1, groups=1,
                 bias=True, pad_type='zero', norm_type=None, act_type='prelu', mode='CNA'):
        super(M_NP_OctaveConv, self).__init__()
        assert mode in ['CNA', 'NAC', 'CNAC'], 'Wrong conv mode [{:s}]'.format(mode)
        padding = get_valid_padding(kernel_size, dilation) if pad_type == 'zero' else 0
        # "same" padding for the dilation-2 h2l convolution
        padding1 = get_valid_padding(kernel_size, 2) if pad_type == 'zero' else 0
        self.stride = stride
        # The separate dilated conv of the first version is no longer used:
        # self.dilation_CONV = nn.Conv2d(in_nc - int(alpha * in_nc), in_nc - int(alpha * in_nc),
        #                                kernel_size, 1, padding1, 4, groups, bias)
        self.l2l = nn.Conv2d(int(alpha * in_nc), int(alpha * out_nc),
                             kernel_size, 1, padding, dilation, groups, bias)
        self.l2h = nn.Conv2d(int(alpha * in_nc), out_nc - int(alpha * out_nc),
                             kernel_size, 1, padding, dilation, groups, bias)
        # h2l is itself dilated (dilation=2)
        self.h2l = nn.Conv2d(in_nc - int(alpha * in_nc), int(alpha * out_nc),
                             kernel_size, 1, padding1, 2, groups, bias)
        self.h2h = nn.Conv2d(in_nc - int(alpha * in_nc), out_nc - int(alpha * out_nc),
                             kernel_size, 1, padding, dilation, groups, bias)
        self.a = act(act_type) if act_type else None
        self.n_h = norm(norm_type, out_nc - int(alpha * out_nc)) if norm_type else None
        self.n_l = norm(norm_type, int(alpha * out_nc)) if norm_type else None

    def forward(self, x):
        X_h, X_l = x
        X_h2h = self.h2h(X_h)
        X_l2h = self.l2h(X_l)
        X_l2l = self.l2l(X_l)
        # The dilation now lives inside h2l; there is no self.dilation_CONV to call.
        X_h2l = self.h2l(X_h)
        X_h = X_l2h + X_h2h
        X_l = X_h2l + X_l2l
        if self.n_h is not None and self.n_l is not None:
            X_h = self.n_h(X_h)
            X_l = self.n_l(X_l)
        if self.a is not None:
            X_h = self.a(X_h)
            X_l = self.a(X_l)
        return X_h, X_l


class M_NP_FirstOctaveConv(nn.Module):
    def __init__(self, in_nc, out_nc, kernel_size, alpha=0.5, stride=1, dilation=1, groups=1,
                 bias=True, pad_type='zero', norm_type=None, act_type='prelu', mode='CNA'):
        super(M_NP_FirstOctaveConv, self).__init__()
        assert mode in ['CNA', 'NAC', 'CNAC'], 'Wrong conv mode [{:s}]'.format(mode)
        padding = get_valid_padding(kernel_size, dilation) if pad_type == 'zero' else 0
        padding1 = get_valid_padding(kernel_size, 2) if pad_type == 'zero' else 0
        self.stride = stride
        # low frequency: dilated conv (dilation=2) instead of pooling
        self.h2l = nn.Conv2d(in_nc, int(alpha * out_nc),
                             kernel_size, 1, padding1, 2, groups, bias)
        # high frequency
        self.h2h = nn.Conv2d(in_nc, out_nc - int(alpha * out_nc),
                             kernel_size, 1, padding, dilation, groups, bias)
        self.a = act(act_type) if act_type else None
        self.n_h = norm(norm_type, out_nc - int(alpha * out_nc)) if norm_type else None
        self.n_l = norm(norm_type, int(alpha * out_nc)) if norm_type else None

    def forward(self, x):
        X_h = self.h2h(x)
        X_l = self.h2l(x)  # without pool
        if self.n_h is not None and self.n_l is not None:  # batch norm
            X_h = self.n_h(X_h)
            X_l = self.n_l(X_l)
        if self.a is not None:  # activation layer
            X_h = self.a(X_h)
            X_l = self.a(X_l)
        return X_h, X_l


# M_NP_LastOctaveConv, M_NP_octave_ResidualDenseBlockTiny_4C and M_NP_octave_RRDBTiny
# are identical to the first listing.
```
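Folding the dilation into `h2l` also removes one convolution from every `M_NP_OctaveConv`, and dilation itself adds no parameters. The standard parameter count of an `nn.Conv2d` with bias is `c_in * c_out * k * k / groups + c_out`. The sketch below applies it to one layer. The channel numbers (`in_nc=64`, `out_nc=16`, `alpha=0.5`, 3×3 kernels) are only an example, and the helper names are hypothetical.

```python
def conv2d_params(c_in, c_out, kernel_size, groups=1, bias=True):
    """Number of learnable parameters of an nn.Conv2d layer."""
    weights = (c_in // groups) * c_out * kernel_size * kernel_size
    return weights + (c_out if bias else 0)


def octave_conv_params(in_nc, out_nc, k=3, alpha=0.5, extra_dilation_conv=False):
    """Parameters of M_NP_OctaveConv; extra_dilation_conv=True models the first version."""
    in_low, out_low = int(alpha * in_nc), int(alpha * out_nc)
    in_high, out_high = in_nc - in_low, out_nc - out_low
    total = (conv2d_params(in_low, out_low, k)       # l2l
             + conv2d_params(in_low, out_high, k)    # l2h
             + conv2d_params(in_high, out_low, k)    # h2l
             + conv2d_params(in_high, out_high, k))  # h2h
    if extra_dilation_conv:
        total += conv2d_params(in_high, in_high, k)  # dilation_CONV
    return total


print(octave_conv_params(64, 16, extra_dilation_conv=True))  # 18496 (first version)
print(octave_conv_params(64, 16))                            # 9248 (dilation inside h2l)
```

For these example sizes the first version's `dilation_CONV` alone has as many parameters as the four octave branches together.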

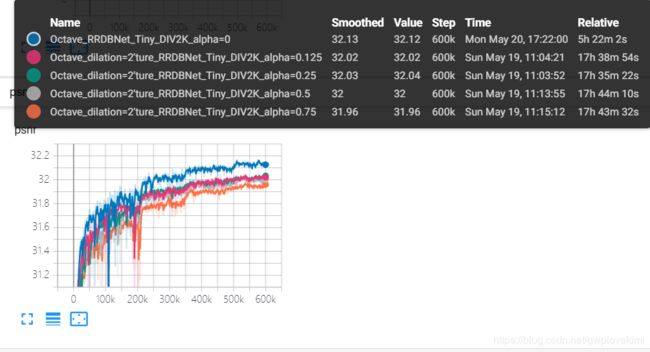

Results:

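Improvement 1: the dilation is raised to 4 and applied to both convolutions that feed the low-frequency branch (`l2l` and `h2l`) in `M_NP_OctaveConv`, and to `h2l` in `M_NP_FirstOctaveConv`: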

```python
####################
# modified octave
# Block for OctConv
####################


class M_NP_OctaveConv(nn.Module):
    def __init__(self, in_nc, out_nc, kernel_size, alpha=0.5, stride=1, dilation=1, groups=1,
                 bias=True, pad_type='zero', norm_type=None, act_type='prelu', mode='CNA'):
        super(M_NP_OctaveConv, self).__init__()
        assert mode in ['CNA', 'NAC', 'CNAC'], 'Wrong conv mode [{:s}]'.format(mode)
        padding = get_valid_padding(kernel_size, dilation) if pad_type == 'zero' else 0
        # "same" padding for the dilation-4 l2l and h2l convolutions
        padding1 = get_valid_padding(kernel_size, 4) if pad_type == 'zero' else 0
        self.stride = stride
        # self.dilation_CONV = nn.Conv2d(in_nc - int(alpha * in_nc), in_nc - int(alpha * in_nc),
        #                                kernel_size, 1, padding1, 4, groups, bias)
        # l2l is dilated (dilation=4)
        self.l2l = nn.Conv2d(int(alpha * in_nc), int(alpha * out_nc),
                             kernel_size, 1, padding1, 4, groups, bias)
        self.l2h = nn.Conv2d(int(alpha * in_nc), out_nc - int(alpha * out_nc),
                             kernel_size, 1, padding, dilation, groups, bias)
        # h2l is dilated (dilation=4)
        self.h2l = nn.Conv2d(in_nc - int(alpha * in_nc), int(alpha * out_nc),
                             kernel_size, 1, padding1, 4, groups, bias)
        self.h2h = nn.Conv2d(in_nc - int(alpha * in_nc), out_nc - int(alpha * out_nc),
                             kernel_size, 1, padding, dilation, groups, bias)
        self.a = act(act_type) if act_type else None
        self.n_h = norm(norm_type, out_nc - int(alpha * out_nc)) if norm_type else None
        self.n_l = norm(norm_type, int(alpha * out_nc)) if norm_type else None

    def forward(self, x):
        X_h, X_l = x
        X_h2h = self.h2h(X_h)
        X_l2h = self.l2h(X_l)
        X_l2l = self.l2l(X_l)
        # X_h1 = self.dilation_CONV(X_h)
        X_h2l = self.h2l(X_h)  # dilation is inside h2l
        X_h = X_l2h + X_h2h
        X_l = X_h2l + X_l2l
        if self.n_h is not None and self.n_l is not None:
            X_h = self.n_h(X_h)
            X_l = self.n_l(X_l)
        if self.a is not None:
            X_h = self.a(X_h)
            X_l = self.a(X_l)
        return X_h, X_l


class M_NP_FirstOctaveConv(nn.Module):
    def __init__(self, in_nc, out_nc, kernel_size, alpha=0.5, stride=1, dilation=1, groups=1,
                 bias=True, pad_type='zero', norm_type=None, act_type='prelu', mode='CNA'):
        super(M_NP_FirstOctaveConv, self).__init__()
        assert mode in ['CNA', 'NAC', 'CNAC'], 'Wrong conv mode [{:s}]'.format(mode)
        padding = get_valid_padding(kernel_size, dilation) if pad_type == 'zero' else 0
        padding1 = get_valid_padding(kernel_size, 4) if pad_type == 'zero' else 0
        self.stride = stride
        # low frequency: dilated conv (dilation=4) instead of pooling
        self.h2l = nn.Conv2d(in_nc, int(alpha * out_nc),
                             kernel_size, 1, padding1, 4, groups, bias)
        # high frequency
        self.h2h = nn.Conv2d(in_nc, out_nc - int(alpha * out_nc),
                             kernel_size, 1, padding, dilation, groups, bias)
        self.a = act(act_type) if act_type else None
        self.n_h = norm(norm_type, out_nc - int(alpha * out_nc)) if norm_type else None
        self.n_l = norm(norm_type, int(alpha * out_nc)) if norm_type else None

    def forward(self, x):
        X_h = self.h2h(x)
        X_l = self.h2l(x)  # without pool
        if self.n_h is not None and self.n_l is not None:  # batch norm
            X_h = self.n_h(X_h)
            X_l = self.n_l(X_l)
        if self.a is not None:  # activation layer
            X_h = self.a(X_h)
            X_l = self.a(X_l)
        return X_h, X_l


# M_NP_LastOctaveConv, M_NP_octave_ResidualDenseBlockTiny_4C and M_NP_octave_RRDBTiny
# are identical to the first listing.
```
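The three variants differ mainly in how far the high-to-low path looks. When stride-1 convolutions are stacked, each layer widens the receptive field by `(k - 1) * d`. The sketch below compares the `h2l` paths of a single `M_NP_OctaveConv` taken in isolation. `receptive_field` is a hypothetical helper, and the labels simply name the three versions above.

```python
def receptive_field(layers):
    """Receptive field (pixels per axis) of stacked stride-1 convs given as (kernel_size, dilation)."""
    rf = 1
    for kernel_size, dilation in layers:
        rf += (kernel_size - 1) * dilation
    return rf


variants = {
    "dilation_CONV (d=4) + h2l (d=1)": [(3, 4), (3, 1)],
    "improvement: h2l (d=2)": [(3, 2)],
    "improvement 1: h2l (d=4)": [(3, 4)],
}
for name, layers in variants.items():
    print(name, receptive_field(layers))
# dilation_CONV (d=4) + h2l (d=1) 11
# improvement: h2l (d=2) 5
# improvement 1: h2l (d=4) 9
```

These numbers give the span that is covered, not how densely it is sampled. A single dilated 3×3 kernel still reads only 9 pixels inside its span.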

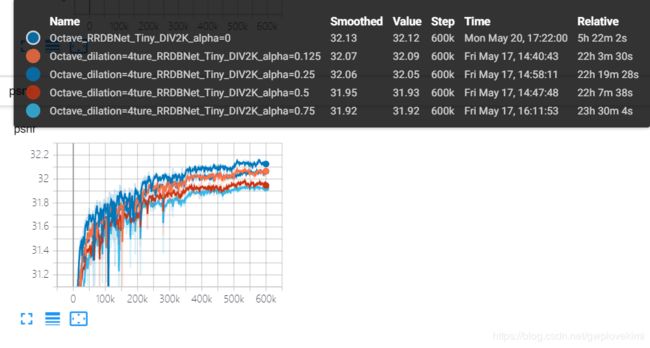

Results: