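```python
import tensorflow as tf  # written against the TensorFlow 1.x API (placeholders, sessions, tf.contrib)
from tensorflow import keras
import numpy as np
import matplotlib.pyplot as plt


def to_onehot(y, num):
    """Convert integer class labels y (length n) into one-hot rows of shape [n, num]."""
    labels = np.zeros([num, len(y)])
    for i in range(len(y)):
        labels[y[i], i] = 1
    return labels.T


fashion_mnist = keras.datasets.fashion_mnist
(train_images, train_labels), (test_images, test_labels) = fashion_mnist.load_data()

# Flatten each 28x28 image into a 784-element row and scale pixel values to [0, 1].
X_train = train_images.reshape((-1, train_images.shape[1] * train_images.shape[2])) / 255.0
Y_train = to_onehot(train_labels, 10)
X_test = test_images.reshape((-1, test_images.shape[1] * test_images.shape[2])) / 255.0
Y_test = to_onehot(test_labels, 10)
```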
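The preprocessing step flattens each 28x28 image into a 784-element row, scales pixel values from the 0 to 255 range into [0, 1], and one-hot encodes the integer labels. The following is a minimal pure-Python sketch of the same three steps on a toy 2x2 "image", handy for checking shapes and values without downloading the dataset; `flatten`, `scale` and `one_hot` are hypothetical helper names, not part of the script.

```python
def flatten(image):
    """Row-major flatten of a 2-D list, like reshape((-1, H * W)) applied to one image."""
    return [pixel for row in image for pixel in row]


def scale(pixels):
    """Map 8-bit pixel values (0..255) into the range [0, 1]."""
    return [p / 255.0 for p in pixels]


def one_hot(labels, num_classes):
    """Return one row per label, with 1.0 at the label's index and 0.0 elsewhere."""
    rows = []
    for y in labels:
        row = [0.0] * num_classes
        row[y] = 1.0
        rows.append(row)
    return rows


image = [[0, 255],
         [51, 102]]
x = scale(flatten(image))
print(x)                   # [0.0, 1.0, 0.2, 0.4]
print(one_hot([2, 0], 3))  # [[0.0, 0.0, 1.0], [1.0, 0.0, 0.0]]
```

With NumPy, `np.eye(num)[y]` builds the same one-hot matrix as `to_onehot(y, num)` in a single indexing step.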

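```python
input_nodes = 784                # 28 * 28 pixels per image
output_nodes = 10                # ten clothing classes
layer1_nodes = 100               # units in the first hidden layer
layer2_nodes = 50                # units in the second hidden layer
batch_size = 100
learning_rate_base = 0.8         # declared but not used below
learning_rate_decay = 0.99       # declared but not used below
regularization_rate = 0.0000001  # L2 weight-decay strength
epchos = 300                     # number of training epochs
mad = 0.99                       # presumably a moving-average decay; not used below
learning_rate = 0.005            # fixed learning rate actually passed to the optimizer
```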
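`learning_rate_base`, `learning_rate_decay` and `mad` are declared but never used; training runs with the fixed `learning_rate`. Their names suggest an exponentially decayed learning rate and a moving-average decay, which TensorFlow 1.x provides through `tf.train.exponential_decay` and `tf.train.ExponentialMovingAverage`. The sketch below only reproduces the arithmetic of those two update rules in plain Python, assuming a hypothetical `decay_steps` of one epoch (600 batches of 100); it is not wired into the training script.

```python
import math


def exponential_decay(base_lr, decay_rate, global_step, decay_steps, staircase=False):
    """base_lr * decay_rate ** (global_step / decay_steps); staircase floors the exponent."""
    exponent = global_step / decay_steps
    if staircase:
        exponent = math.floor(exponent)
    return base_lr * decay_rate ** exponent


def ema_update(shadow, value, decay):
    """One moving-average step: shadow = decay * shadow + (1 - decay) * value."""
    return decay * shadow + (1 - decay) * value


learning_rate_base = 0.8
learning_rate_decay = 0.99
mad = 0.99
decay_steps = 600  # hypothetical: one epoch of 60000 / 100 batches

for step in (0, 600, 6000):
    lr = exponential_decay(learning_rate_base, learning_rate_decay, step, decay_steps, staircase=True)
    print(step, lr)

# With decay close to 1, the shadow value moves only slowly toward the tracked value.
shadow = 0.0
for _ in range(3):
    shadow = ema_update(shadow, 1.0, mad)
print(shadow)
```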

```python
def train(data):
    # `data` is not used; the function reads the module-level X_train, Y_train, X_test, Y_test.
    X = tf.placeholder(tf.float32, [None, input_nodes], name="input_x")
    Y = tf.placeholder(tf.float32, [None, output_nodes], name="y_true")

    # Weights and biases of a 784-100-50-10 fully connected network.
    w1 = tf.Variable(tf.truncated_normal([input_nodes, layer1_nodes], stddev=0.1))
    b1 = tf.Variable(tf.constant(0.1, shape=[layer1_nodes]))
    w2 = tf.Variable(tf.truncated_normal([layer1_nodes, layer2_nodes], stddev=0.1))
    b2 = tf.Variable(tf.constant(0.1, shape=[layer2_nodes]))
    w3 = tf.Variable(tf.truncated_normal([layer2_nodes, output_nodes], stddev=0.1))
    b3 = tf.Variable(tf.constant(0.1, shape=[output_nodes]))

    # Two ReLU hidden layers; the output layer stays linear because its values are logits.
    layer1 = tf.nn.relu(tf.matmul(X, w1) + b1)
    A2 = tf.nn.relu(tf.matmul(layer1, w2) + b2)
    A3 = tf.matmul(A2, w3) + b3
    y_hat = tf.nn.softmax(A3)

    # softmax_cross_entropy_with_logits_v2 applies the softmax itself, so it gets the raw logits.
    cross_entropy = tf.reduce_mean(
        tf.nn.softmax_cross_entropy_with_logits_v2(logits=A3, labels=Y))

    # l2_regularizer(scale) already multiplies by regularization_rate,
    # so the penalty is added to the loss without scaling it a second time.
    regularizer = tf.contrib.layers.l2_regularizer(regularization_rate)
    regularization = regularizer(w1) + regularizer(w2) + regularizer(w3)
    loss = cross_entropy + regularization

    train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss)

    # Accuracy: fraction of samples whose predicted class matches the true class.
    correct_prediction = tf.equal(tf.argmax(y_hat, 1), tf.argmax(Y, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
```
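`softmax_cross_entropy_with_logits_v2` expects unnormalized logits and applies the softmax internally, so the output layer should be left linear: a ReLU there clips every negative logit to 0. The penalty returned by `tf.contrib.layers.l2_regularizer(scale)` equals `scale * sum(w**2) / 2`, i.e. `scale` times `tf.nn.l2_loss(w)`. The sketch below recomputes both quantities in plain Python for a single example; the function names are hypothetical, and the max-subtraction (log-sum-exp) trick is a standard way to keep the computation numerically stable, not a description of TensorFlow's own kernel.

```python
import math


def softmax(logits):
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_cross_entropy(logits, one_hot_label):
    """-sum(label * log(softmax(logits))), computed via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(t * (z - log_sum_exp) for t, z in zip(one_hot_label, logits))


def l2_penalty(weights, scale):
    """scale * sum(w ** 2) / 2, the value an L2 regularizer with this scale adds to the loss."""
    return scale * sum(w * w for w in weights) / 2


logits = [2.0, -1.0, 0.5]
label = [0.0, 1.0, 0.0]  # true class is index 1
print(softmax_cross_entropy(logits, label))
# A ReLU on the output turns the -1.0 logit into 0.0, which changes the
# predicted probabilities and therefore the loss.
print(softmax_cross_entropy([max(0.0, z) for z in logits], label))
print(l2_penalty([0.1, -0.2], scale=1e-7))
```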

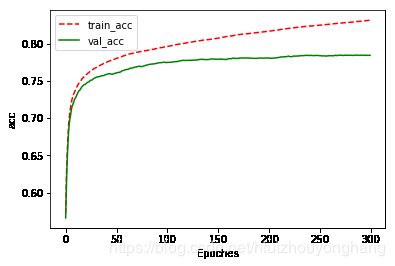

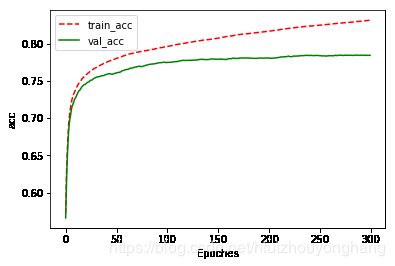

```python
    total_loss = []       # mean training loss per epoch
    val_acc = []          # test-set accuracy per epoch
    total_train_acc = []  # training-set accuracy per epoch
    epoch_ids = []        # x-axis values for plotting

    with tf.Session() as sess:
        tf.global_variables_initializer().run()
        n_train = X_train.shape[0]
        # Mini-batches per epoch, rounded up so a final partial batch is kept.
        batches = (n_train + batch_size - 1) // batch_size
        for i in range(epchos):
            loss_e = 0.
            for j in range(batches):
                # Batch j covers rows [j * batch_size, (j + 1) * batch_size).
                start = j * batch_size
                end = min(n_train, (j + 1) * batch_size)
                batch_x = X_train[start:end, :]
                batch_y = Y_train[start:end, :]
                # One gradient step; the fetched loss is the value computed before the update.
                _, batch_loss = sess.run([train_step, loss], feed_dict={X: batch_x, Y: batch_y})
                loss_e += batch_loss
            validate_acc = sess.run(accuracy, feed_dict={X: X_test, Y: Y_test})
            train_acc = sess.run(accuracy, feed_dict={X: X_train, Y: Y_train})
            print("epoch:", i, "val_acc:", validate_acc, "train_acc:", train_acc)
            total_loss.append(loss_e / batches)  # mean batch loss over the epoch
            val_acc.append(validate_acc)
            total_train_acc.append(train_acc)
            epoch_ids.append(i)
        validate_acc = sess.run(accuracy, feed_dict={X: X_test, Y: Y_test})
        print("final val_acc:", validate_acc)
    return (epoch_ids, total_loss, total_train_acc, val_acc)
```
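A batch slice ending at `j*(batch_size+1)` gives an empty first batch and then batches that hold only `j` samples, skipping many rows, and `int(N / batch_size + 1)` adds an extra empty batch when `N` divides evenly. The sketch below generates the `[j*batch_size, (j+1)*batch_size)` bounds and checks that they cover every training sample exactly once; `batch_bounds` and `original_bounds` are hypothetical helpers written for this comparison.

```python
def batch_bounds(n_samples, batch_size):
    """Yield (start, end) for consecutive mini-batches covering range(n_samples)."""
    n_batches = (n_samples + batch_size - 1) // batch_size  # ceiling division
    for j in range(n_batches):
        start = j * batch_size
        end = min(n_samples, (j + 1) * batch_size)
        yield start, end


def original_bounds(n_samples, batch_size):
    """Slice bounds produced by the j * (batch_size + 1) end index, for comparison."""
    n_batches = int(n_samples / batch_size + 1)
    for j in range(n_batches):
        yield j * batch_size, min(n_samples, j * (batch_size + 1))


fixed = list(batch_bounds(250, 100))
print(fixed)  # [(0, 100), (100, 200), (200, 250)]

covered = [i for s, e in batch_bounds(60000, 100) for i in range(s, e)]
print(covered == list(range(60000)))  # True

sizes = [e - s for s, e in original_bounds(60000, 100)]
print(sizes[:4])  # [0, 1, 2, 3]
```

Dividing the accumulated loss by the number of batches, rather than by `batch_size`, then gives the mean per-batch loss for the epoch.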

```python
result = train((X_train, Y_train, X_test, Y_test))
```

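```python
def plot_acc(total_train_acc, val_acc, x):
    """Plot training accuracy (dashed red) and test accuracy (solid green) per epoch."""
    plt.figure()
    plt.plot(x, total_train_acc, '--', color="red", label="train_acc")
    plt.plot(x, val_acc, color="green", label="val_acc")
    plt.xlabel("Epochs")
    plt.ylabel("Accuracy")
    plt.legend()
    plt.show()


# result = (epoch indices, per-epoch loss, training accuracy, test accuracy)
plot_acc(result[2], result[3], result[0])
```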

The results are not very satisfactory; the model still needs further optimization.